- 1 introduction

- 2 Embedded Architectures

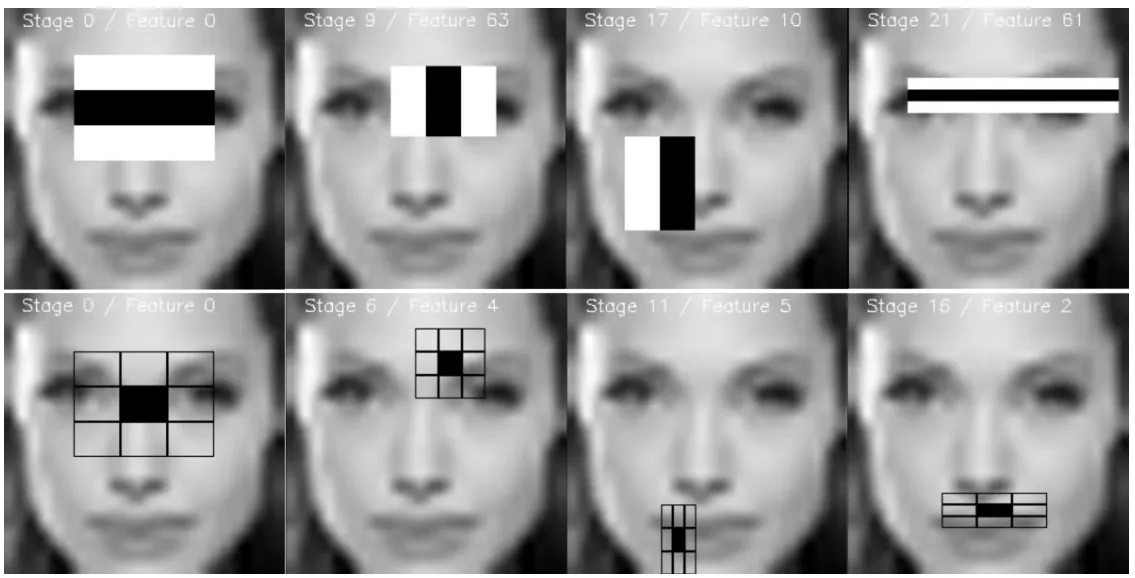

- 3 Sensors and Sensing

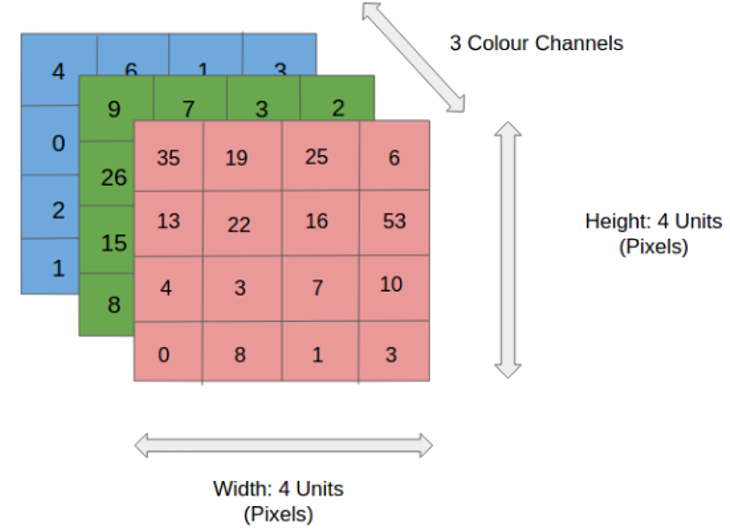

- 4 Real-Time Operating Systems

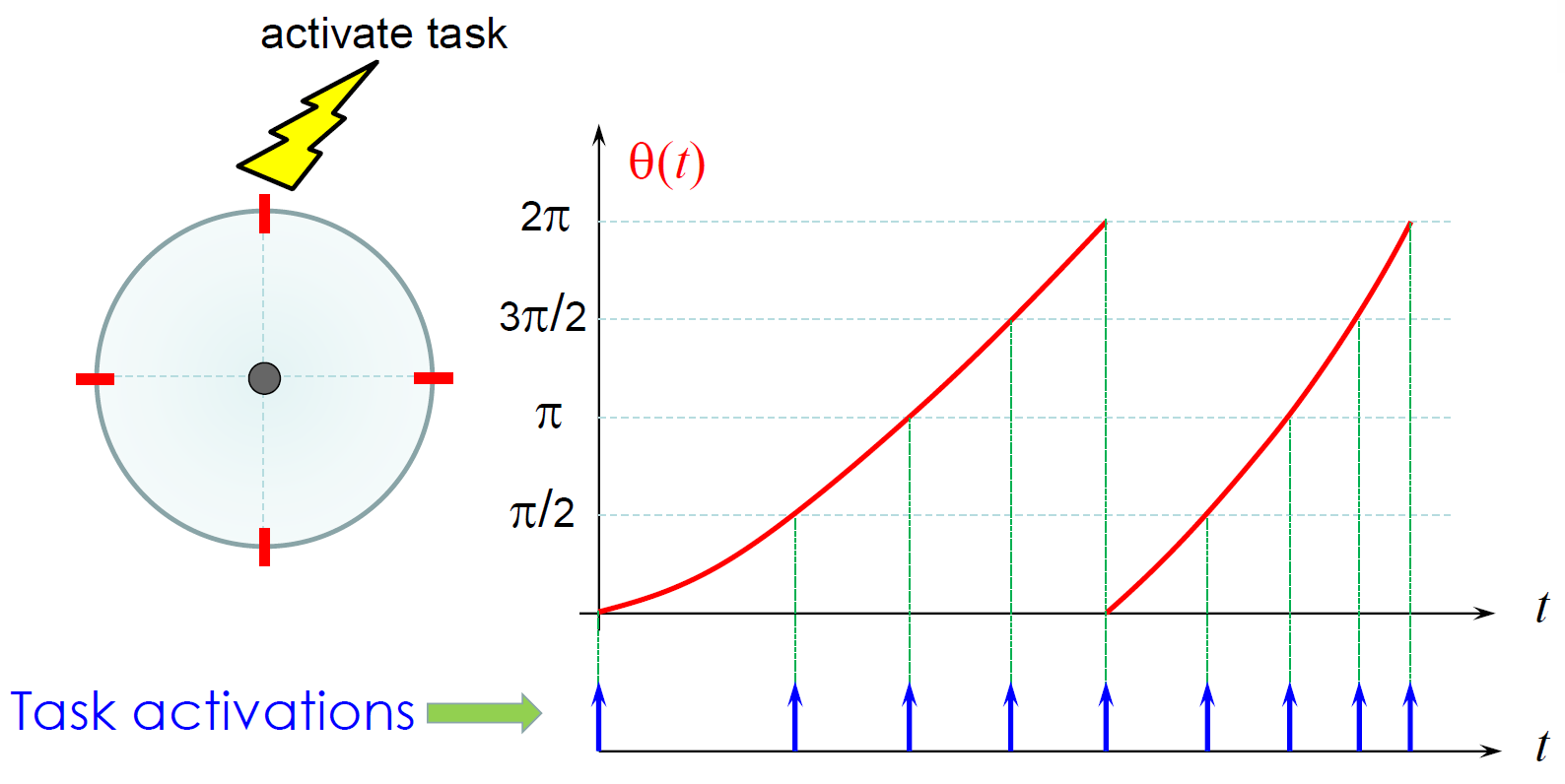

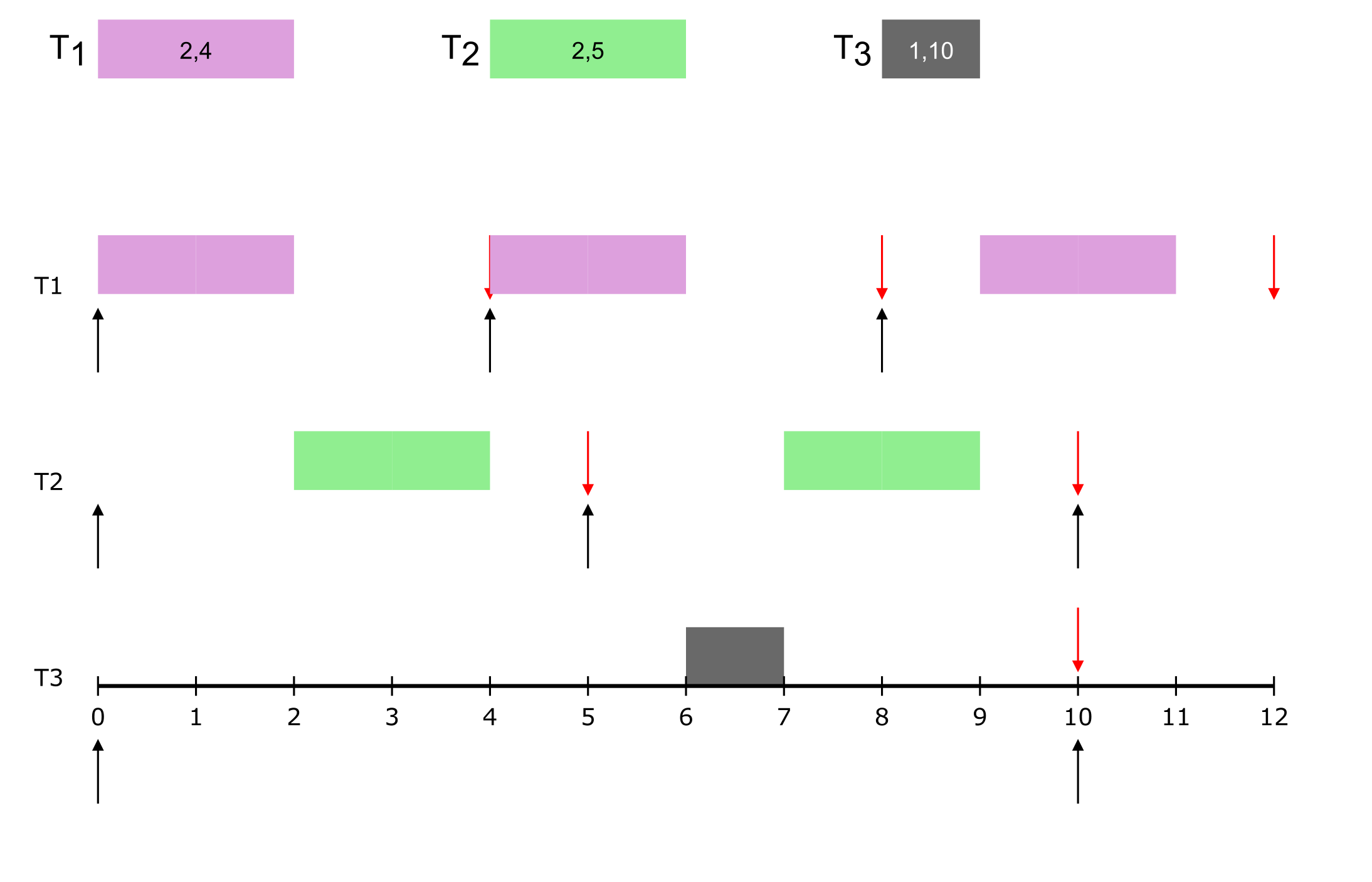

- 5 Scheduling for Real-Time Systems

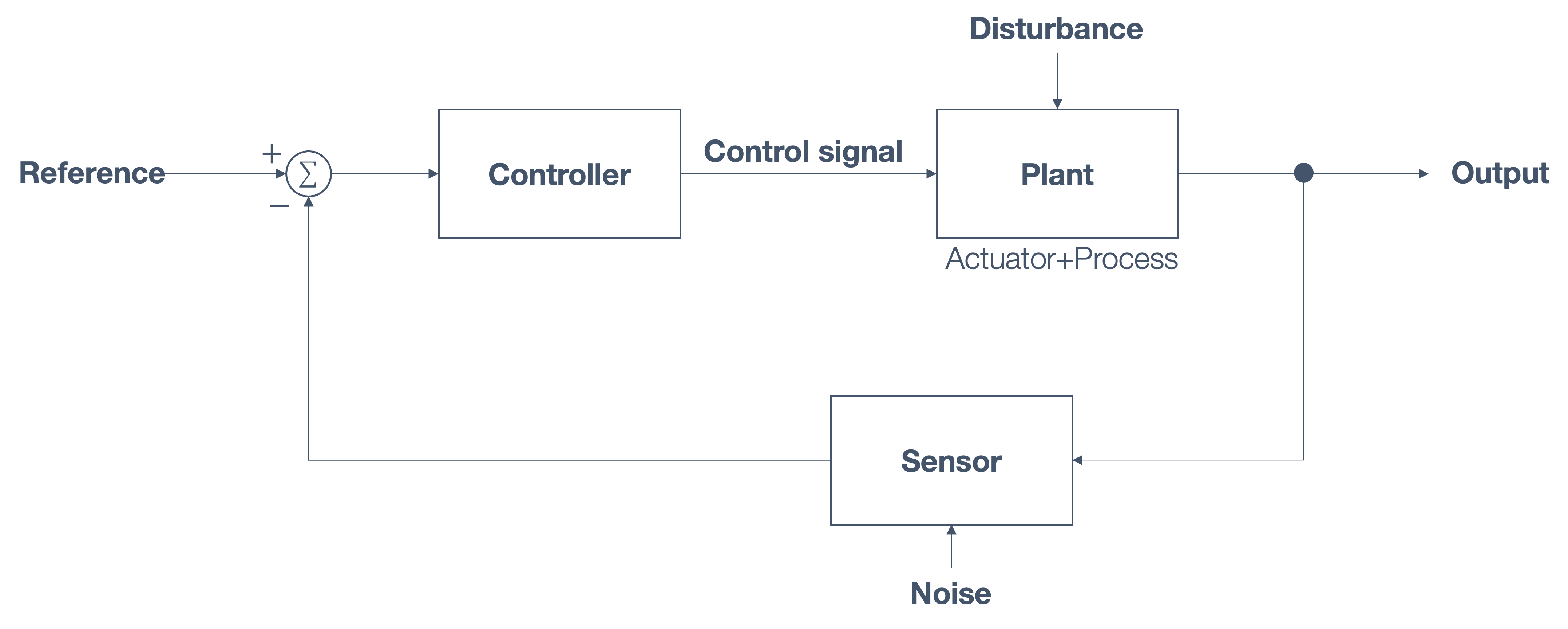

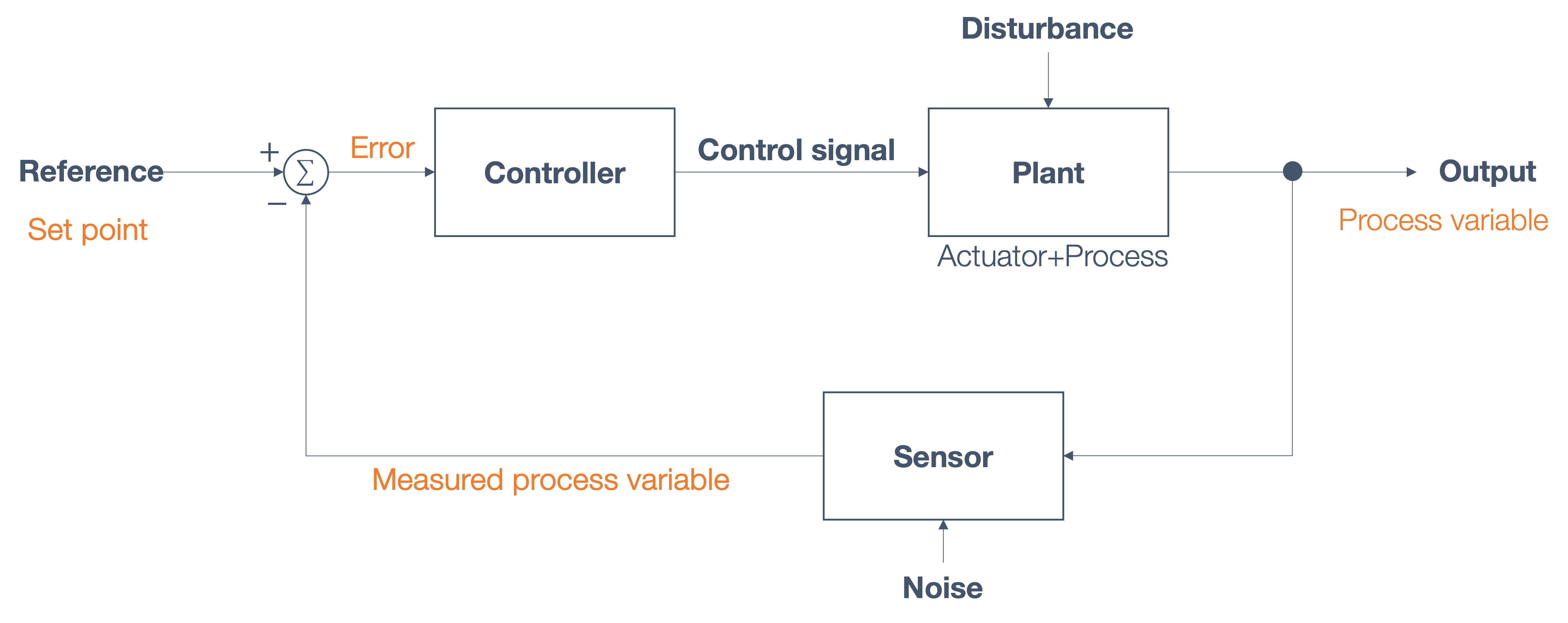

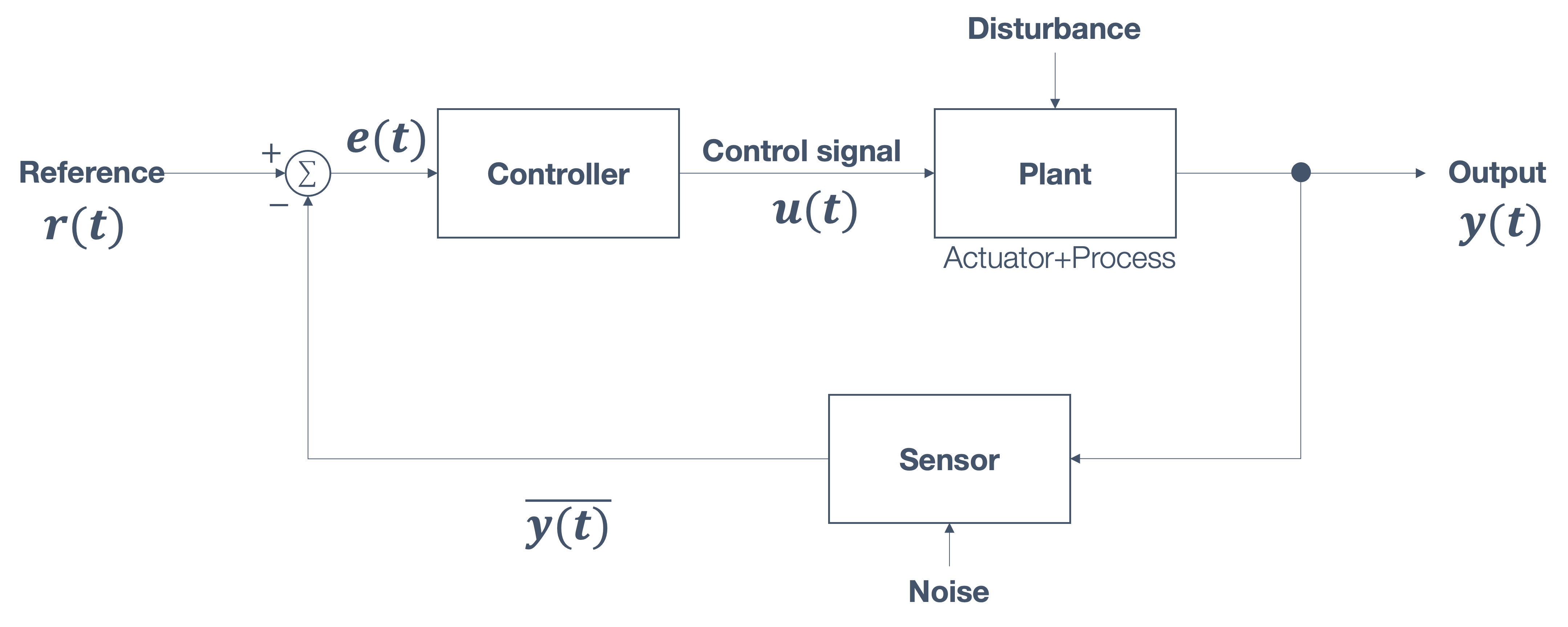

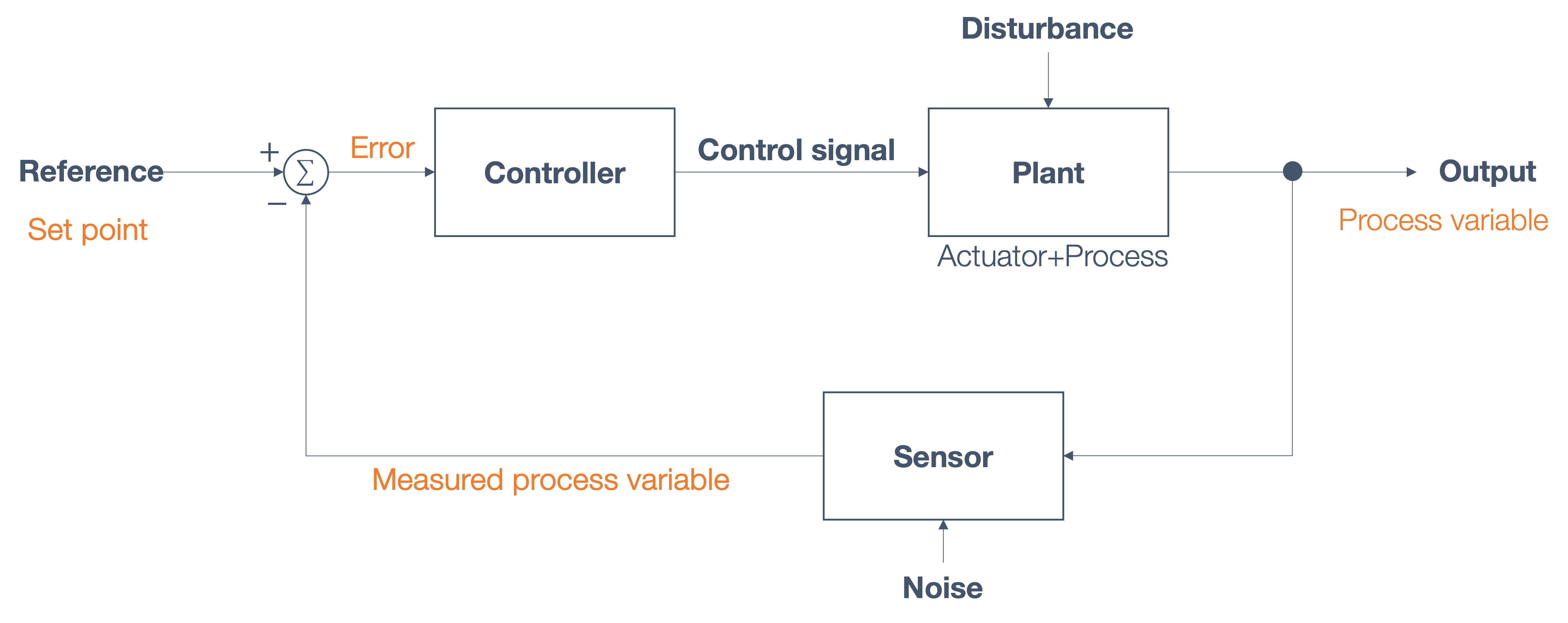

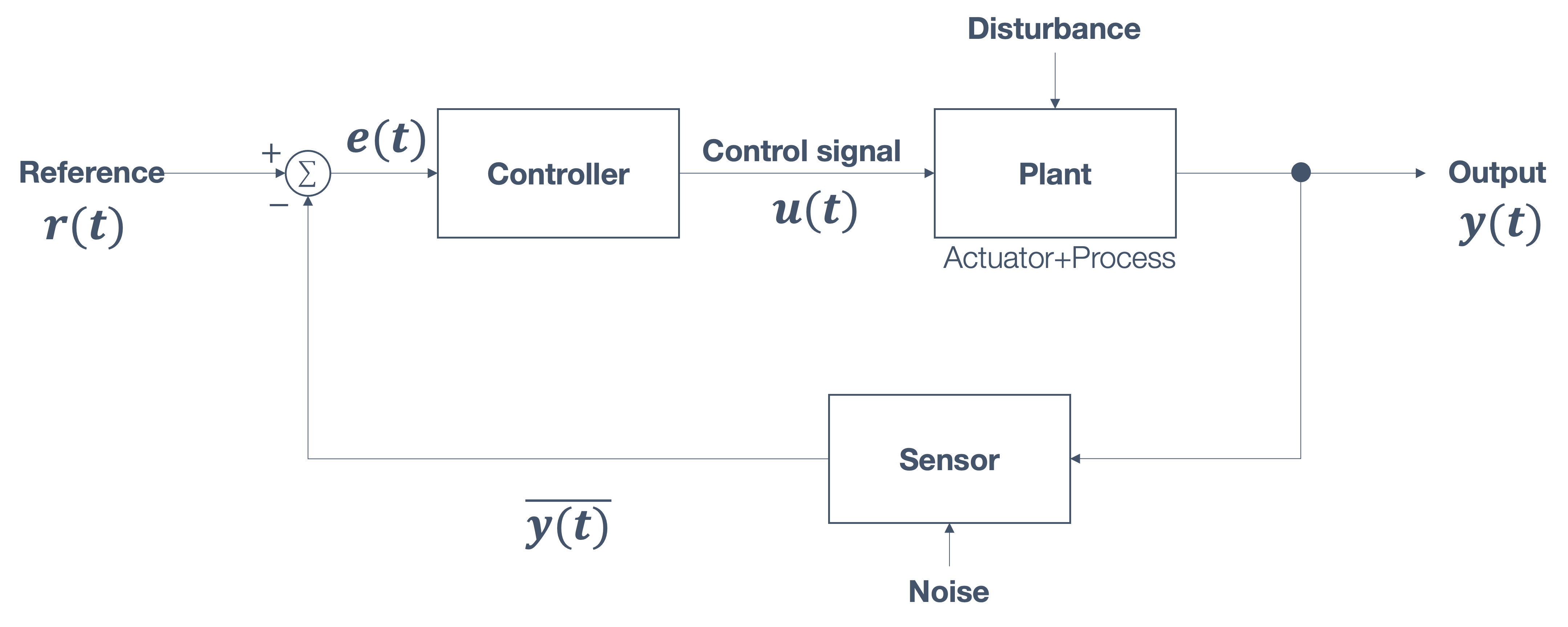

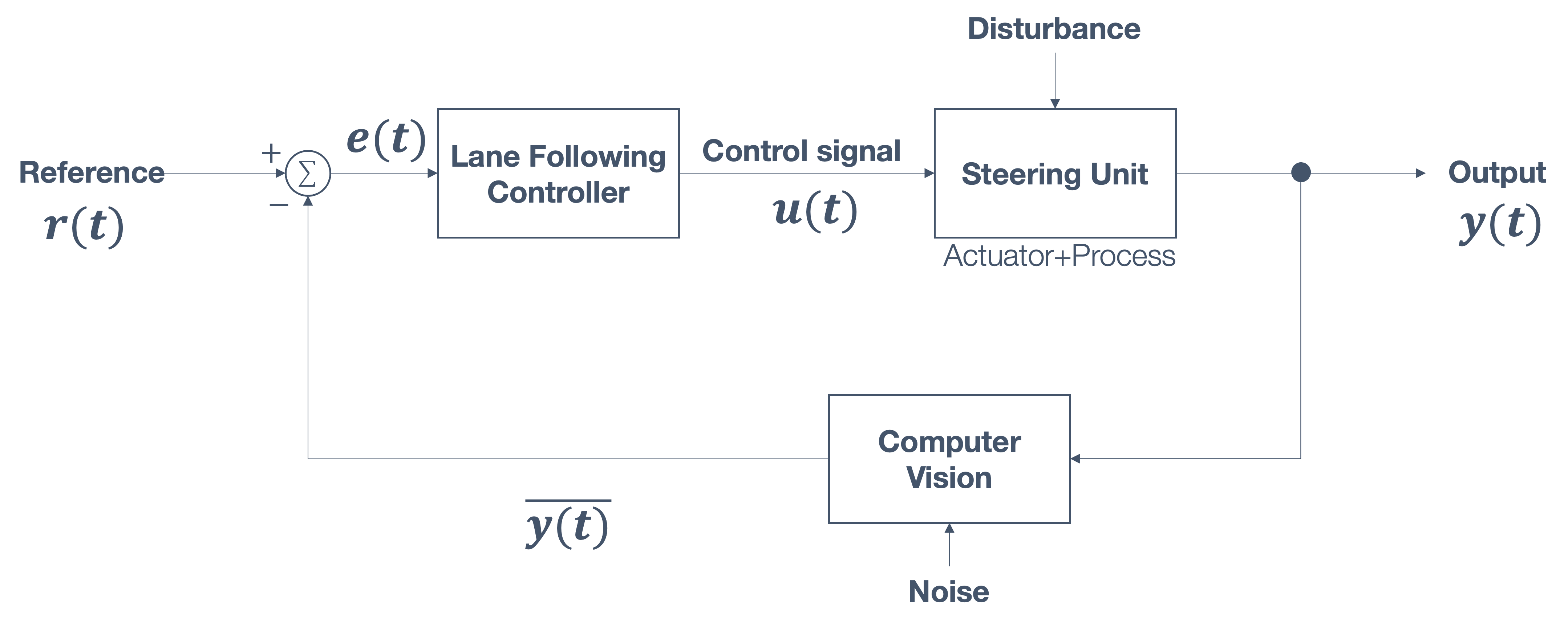

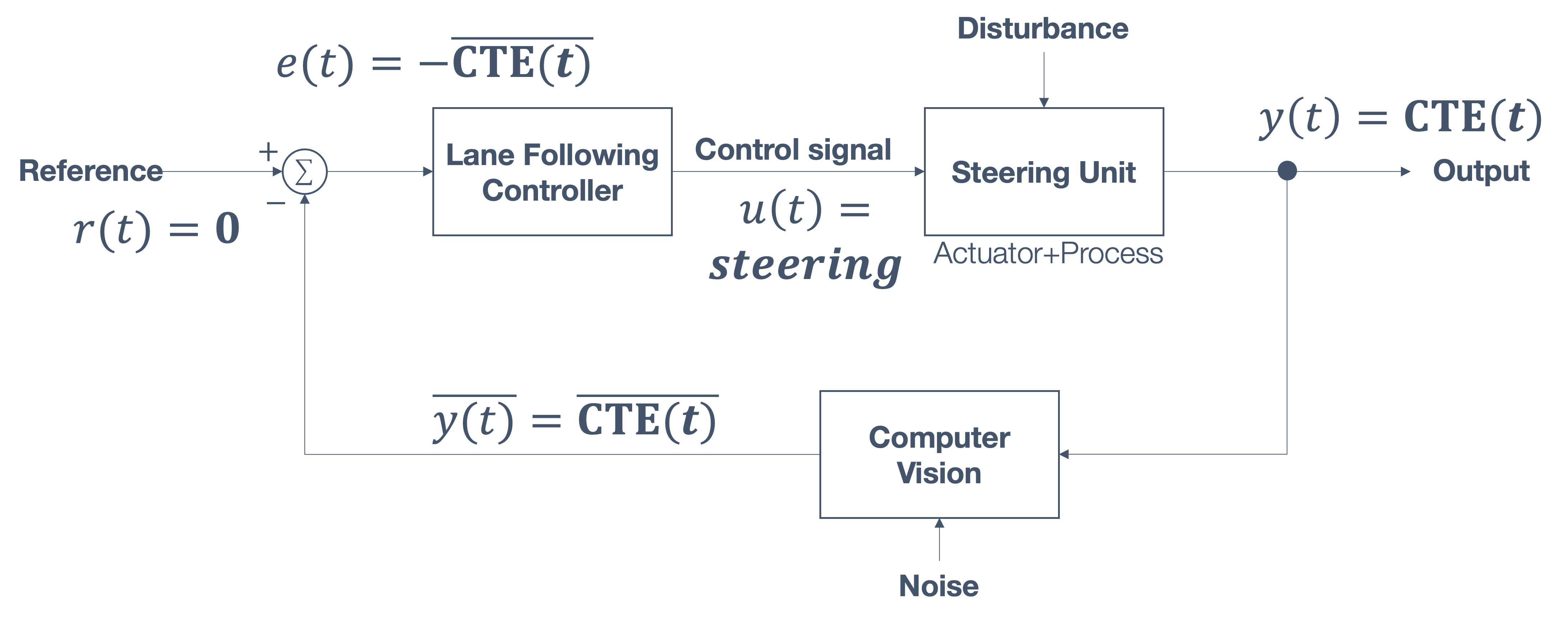

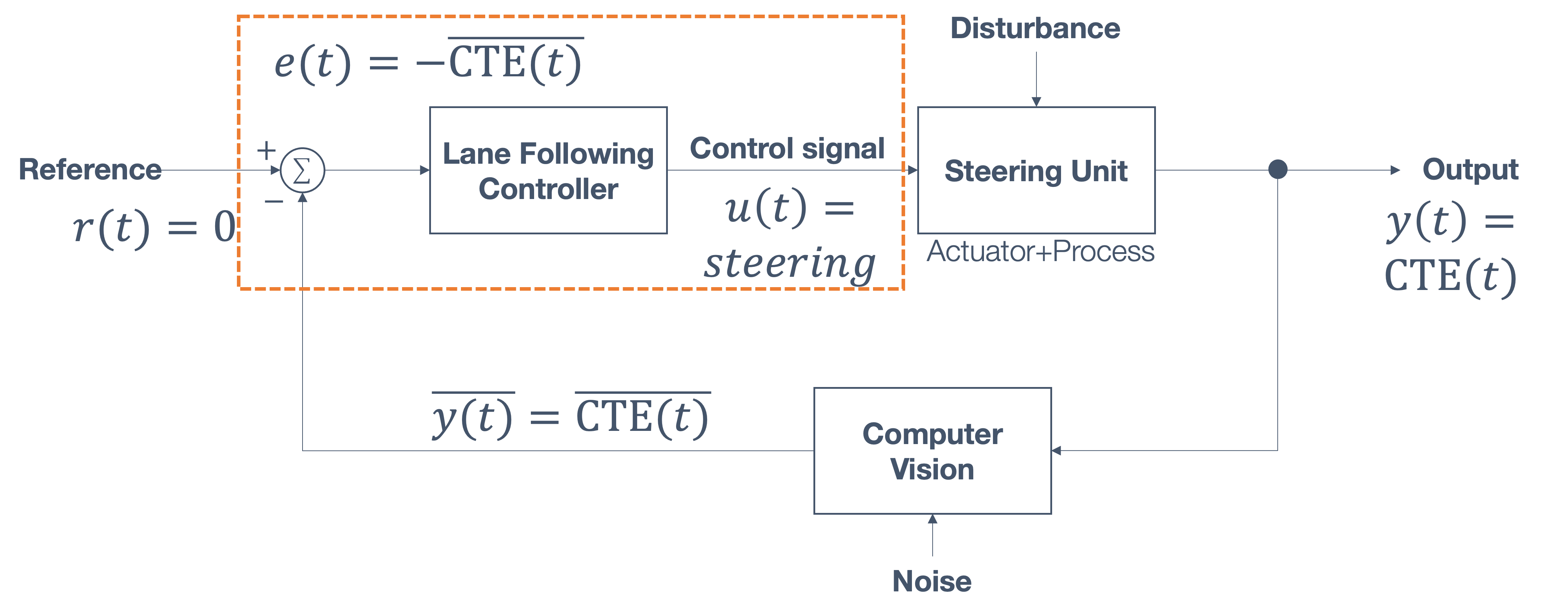

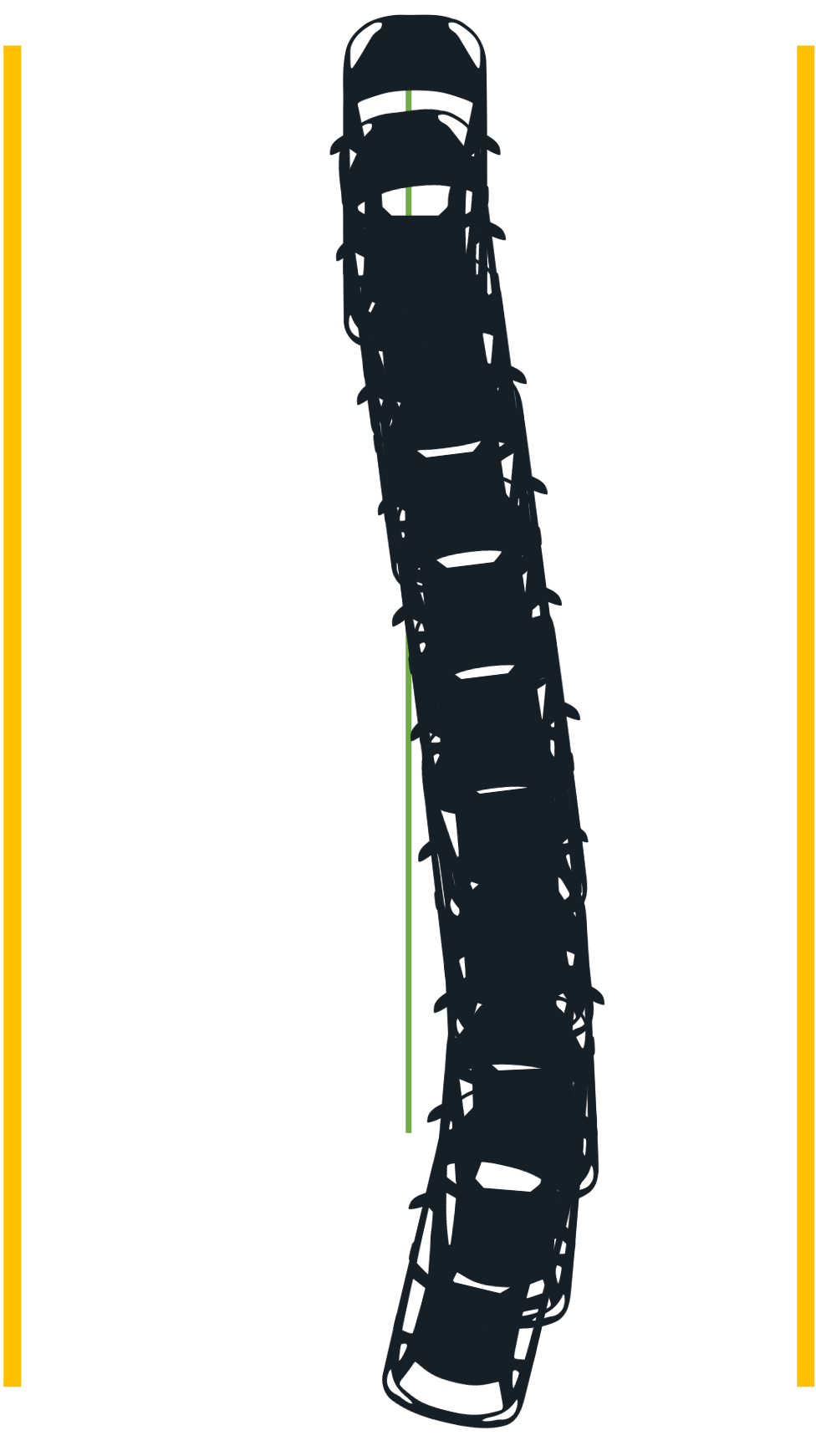

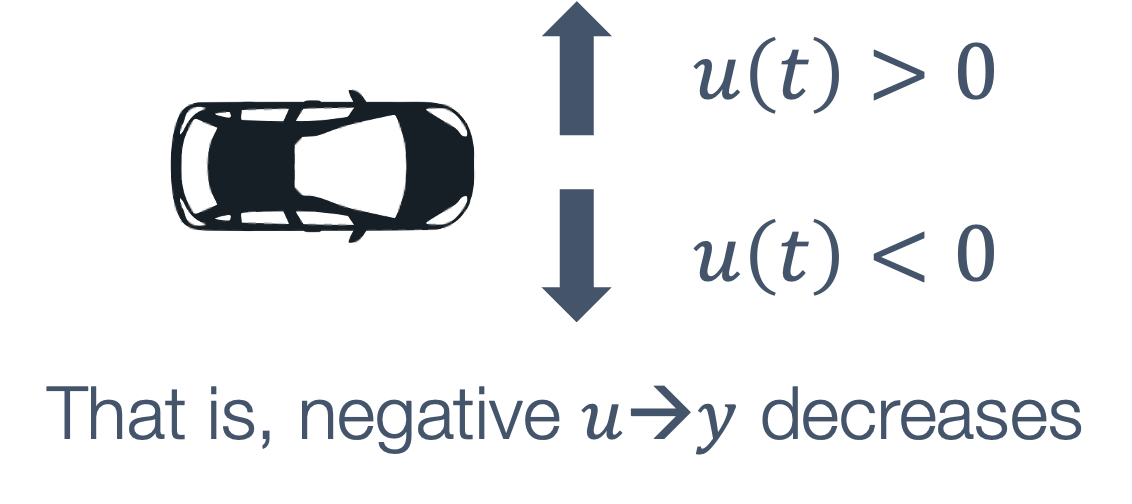

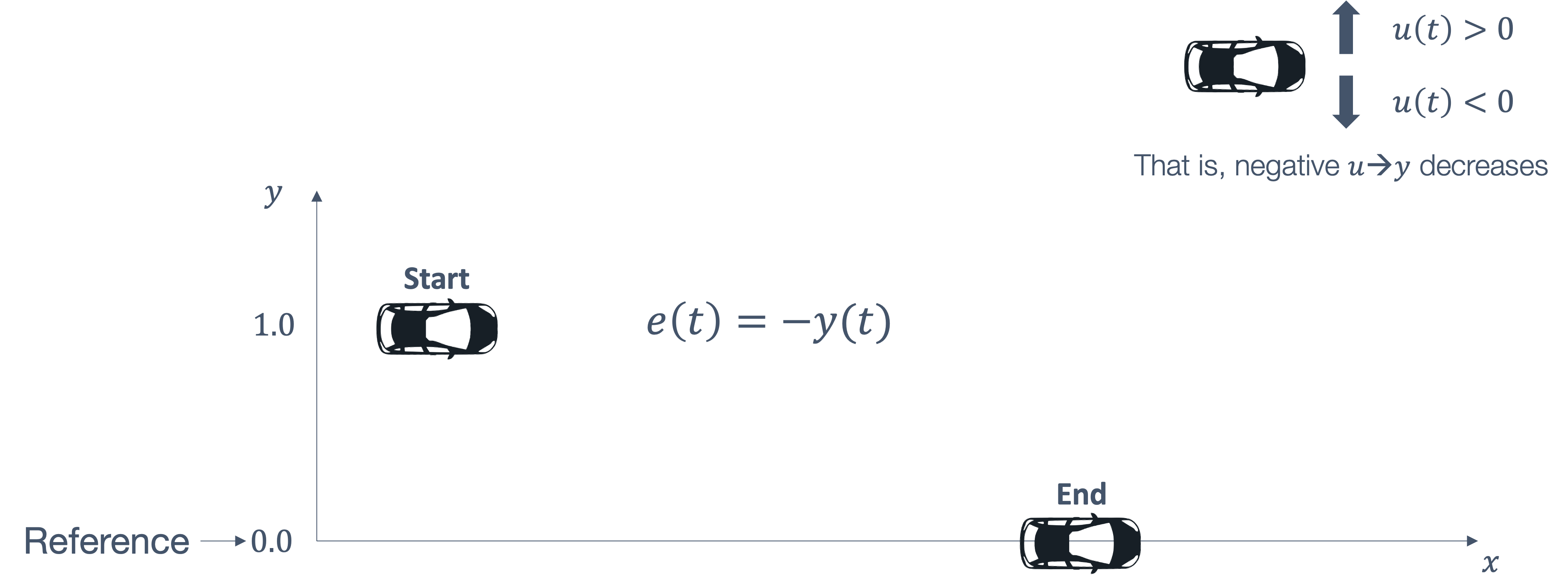

- 6 Control Theory

- 7 Actuation

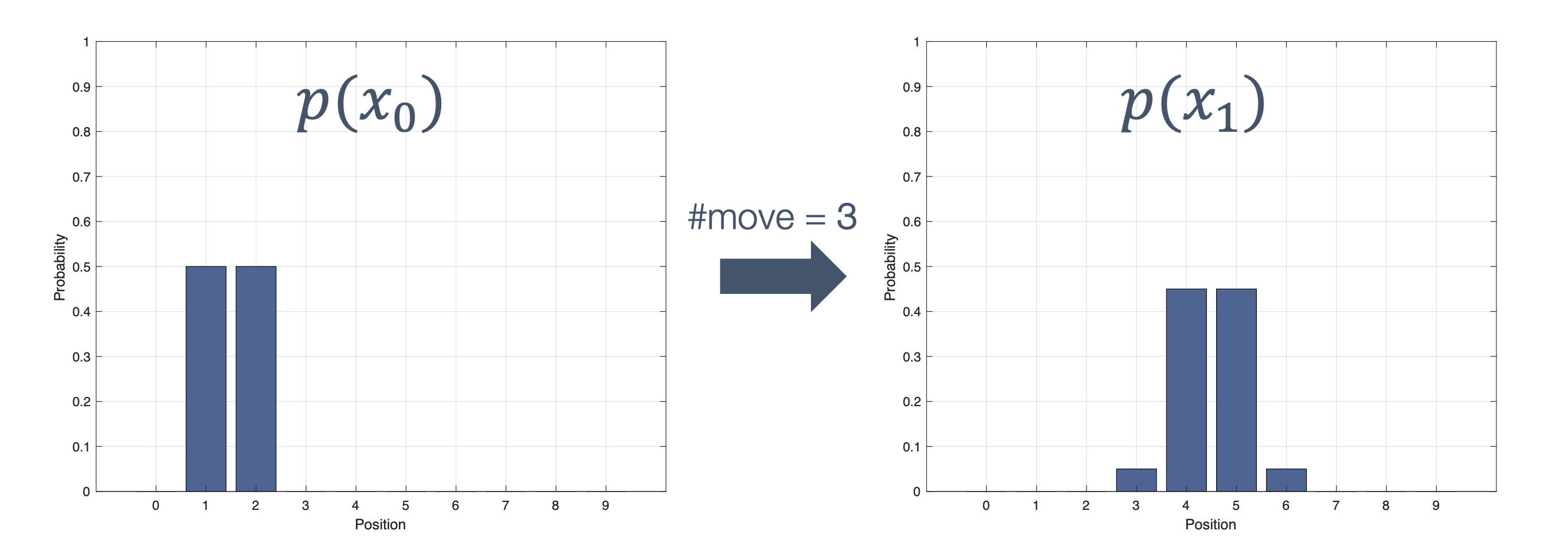

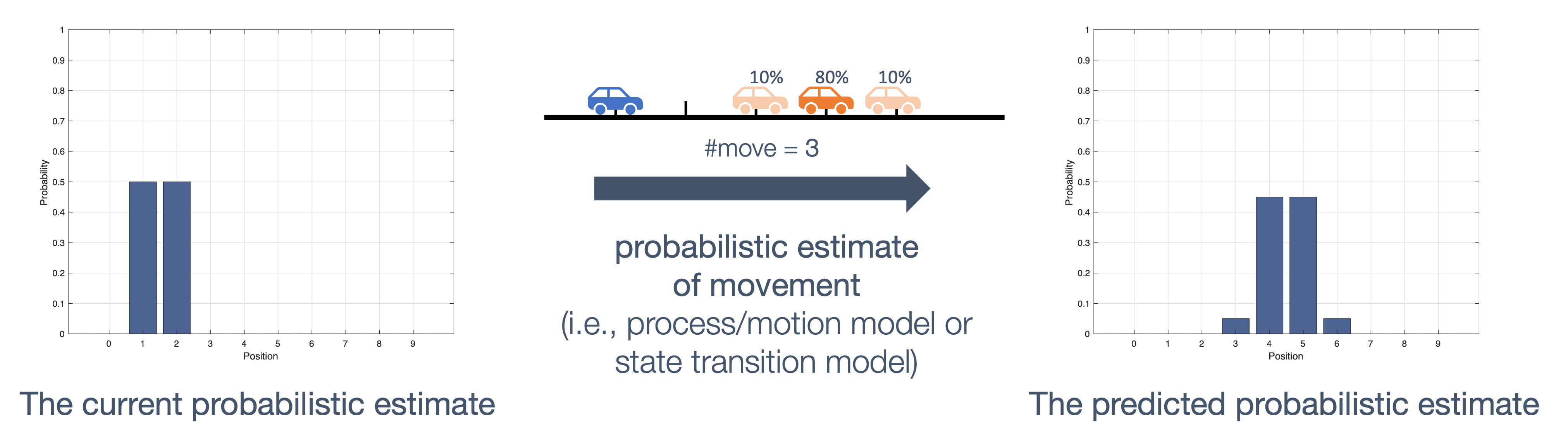

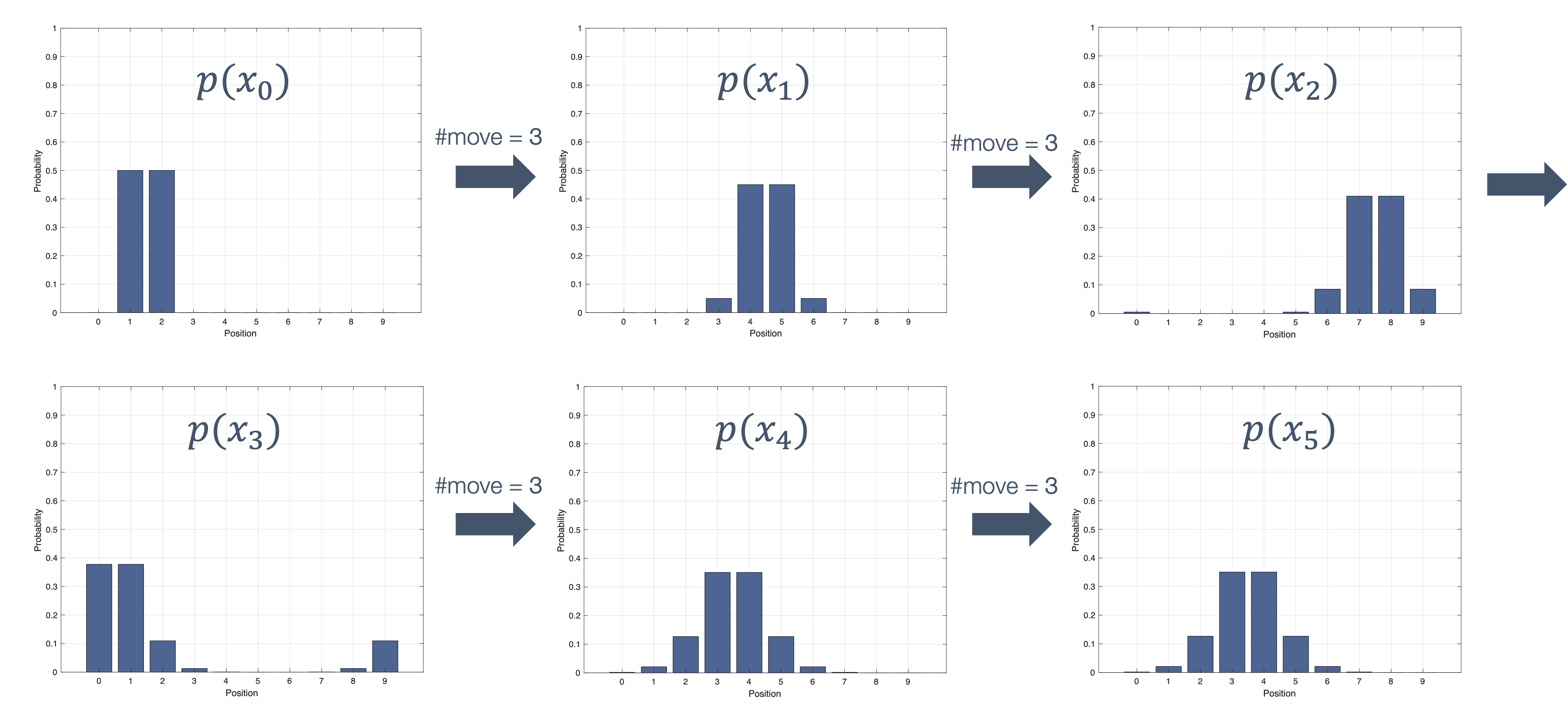

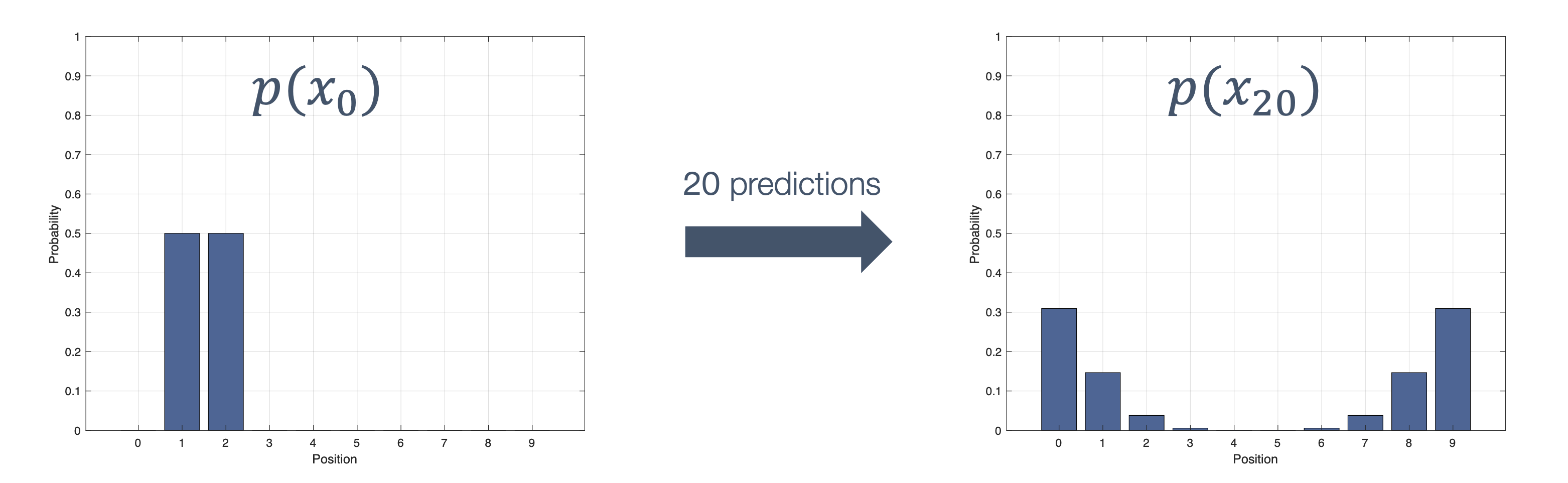

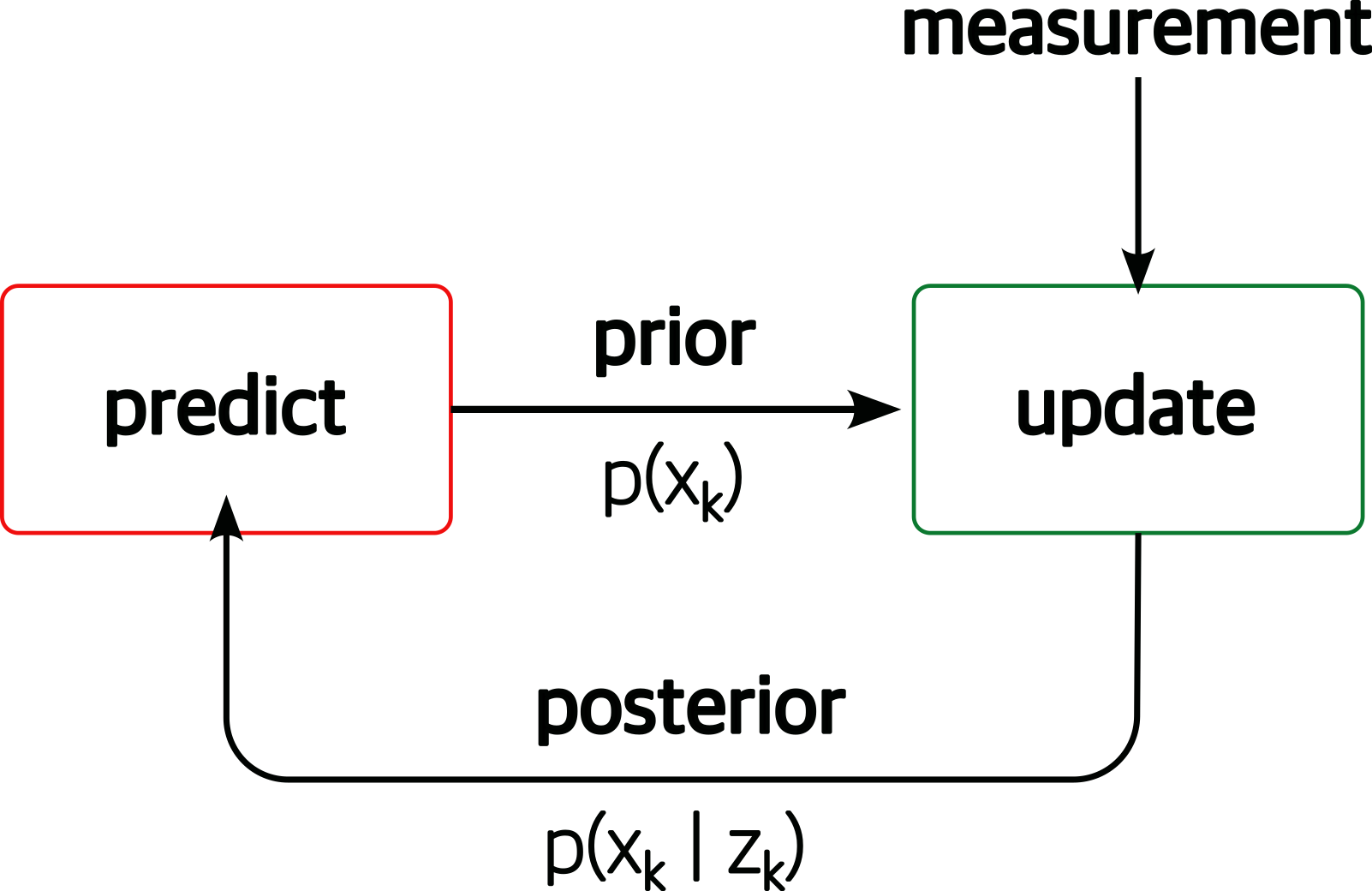

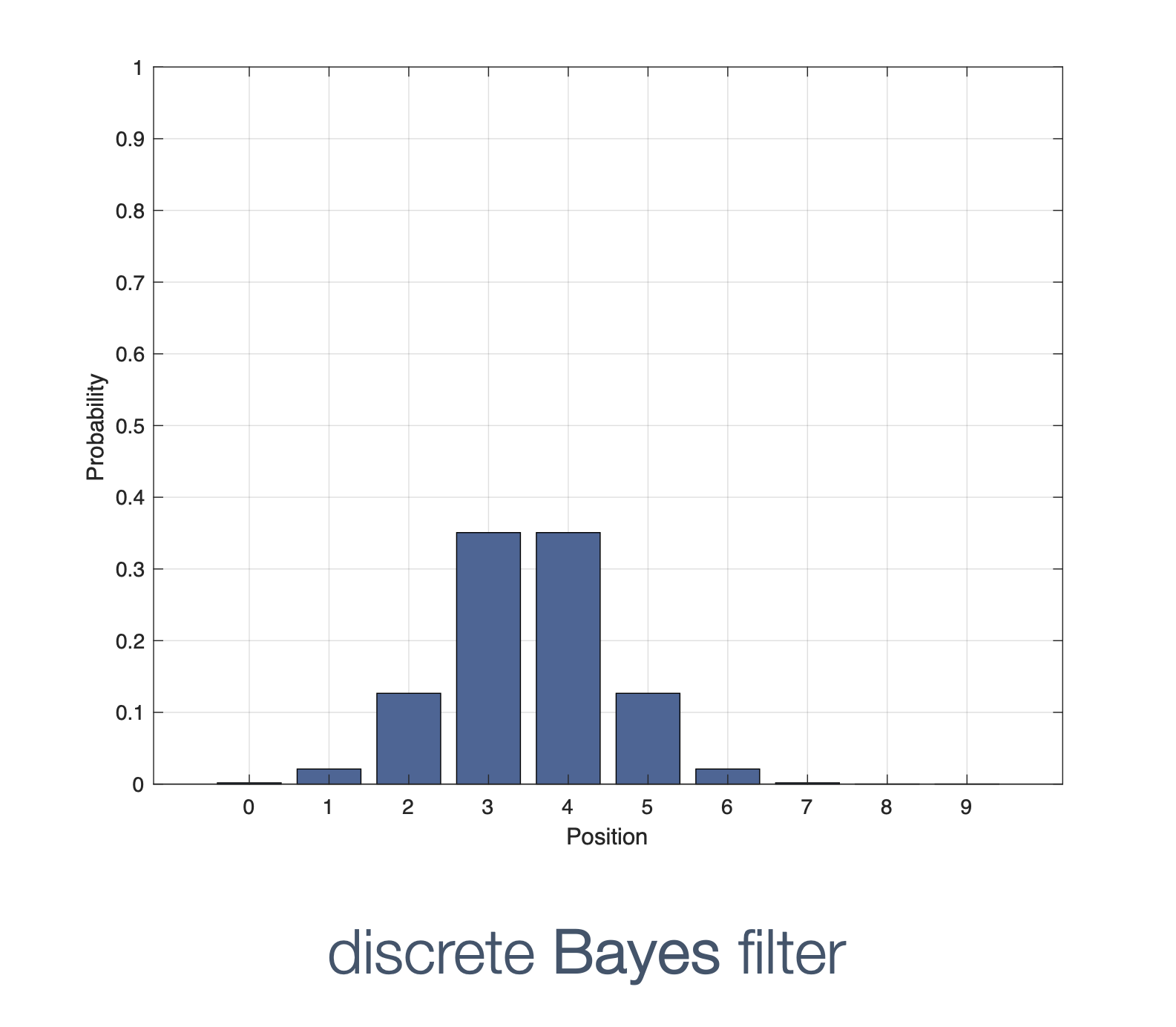

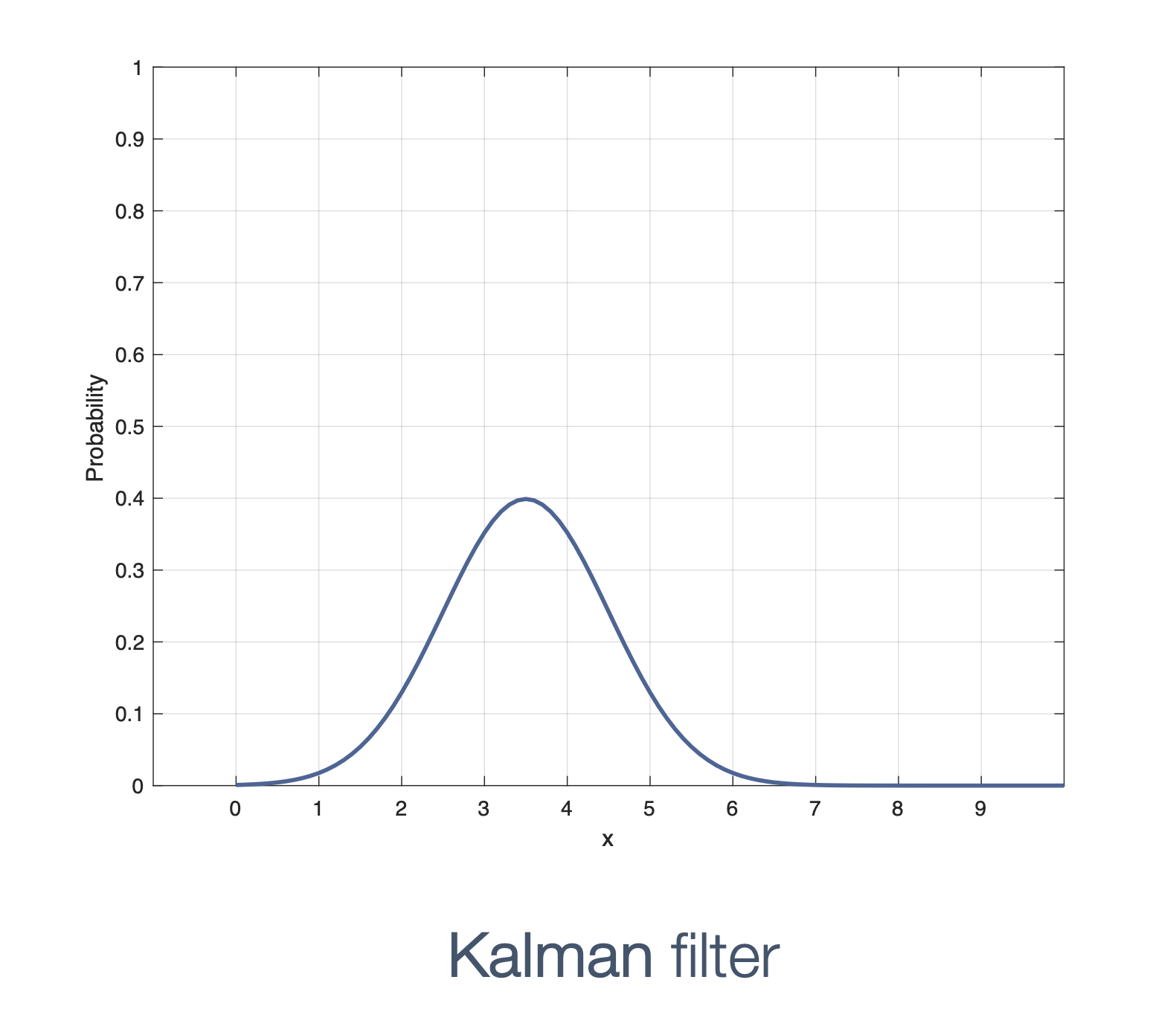

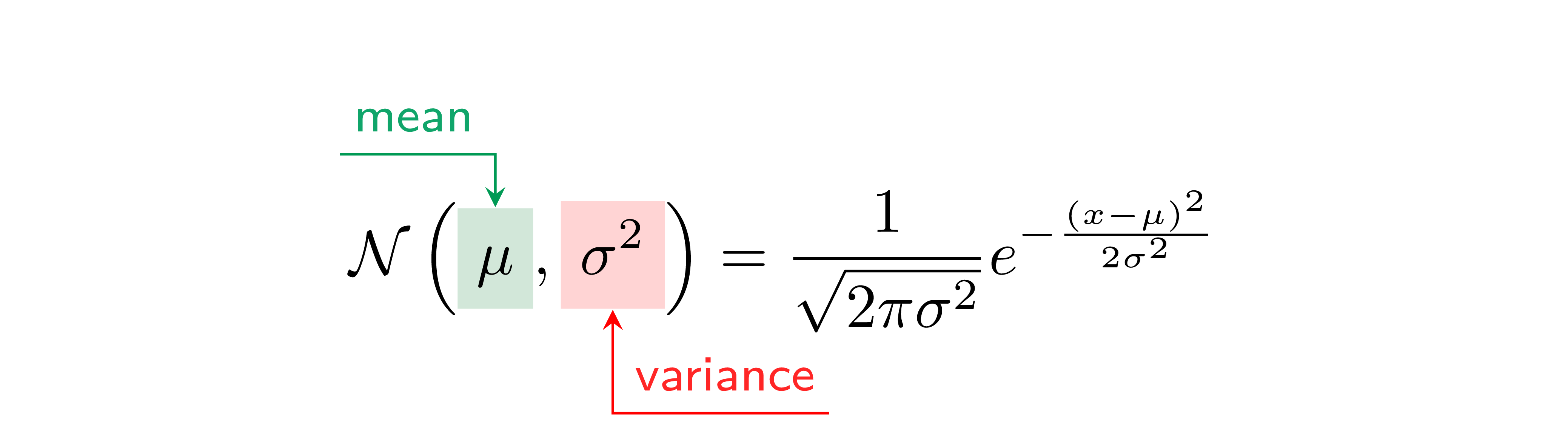

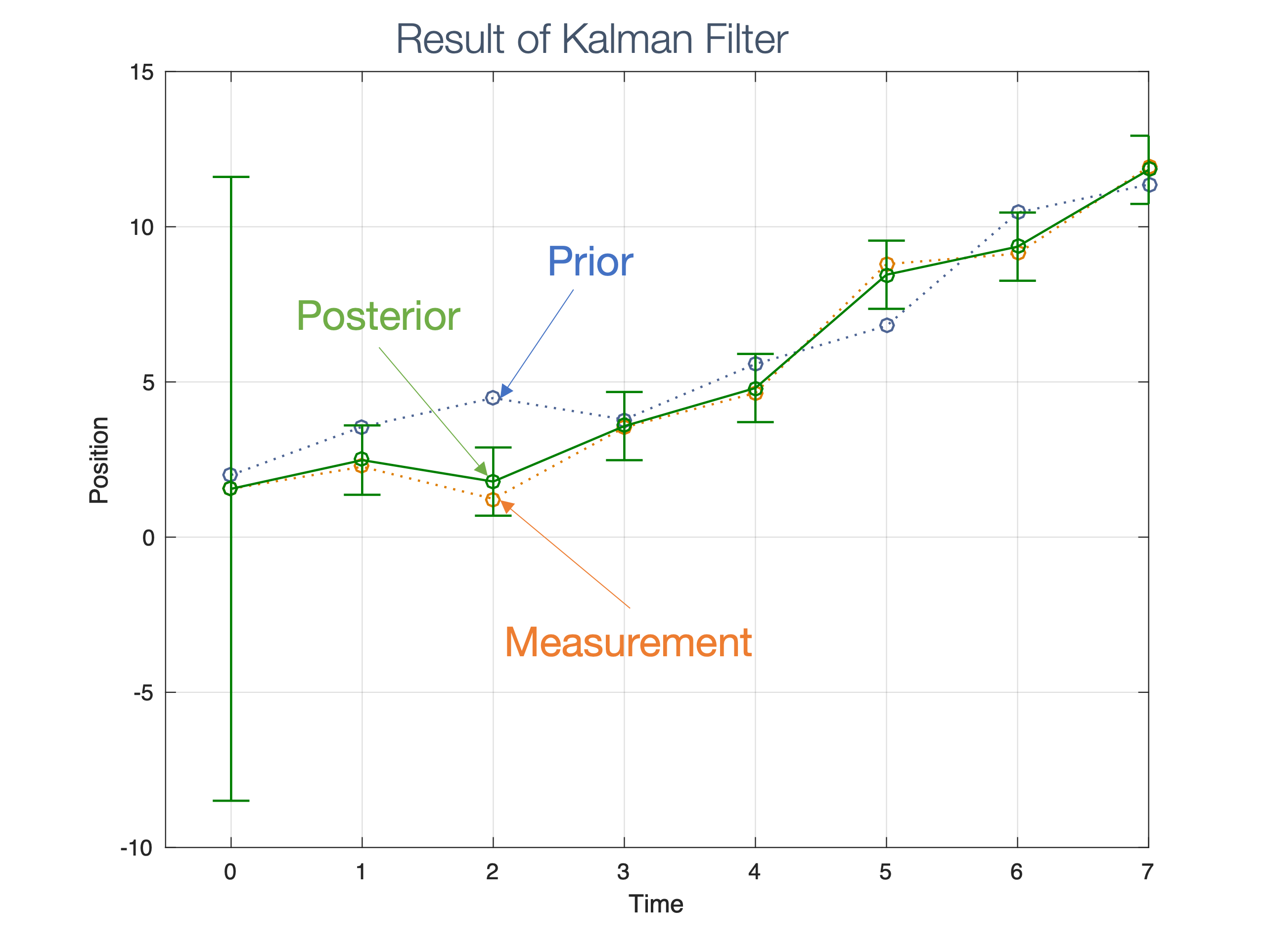

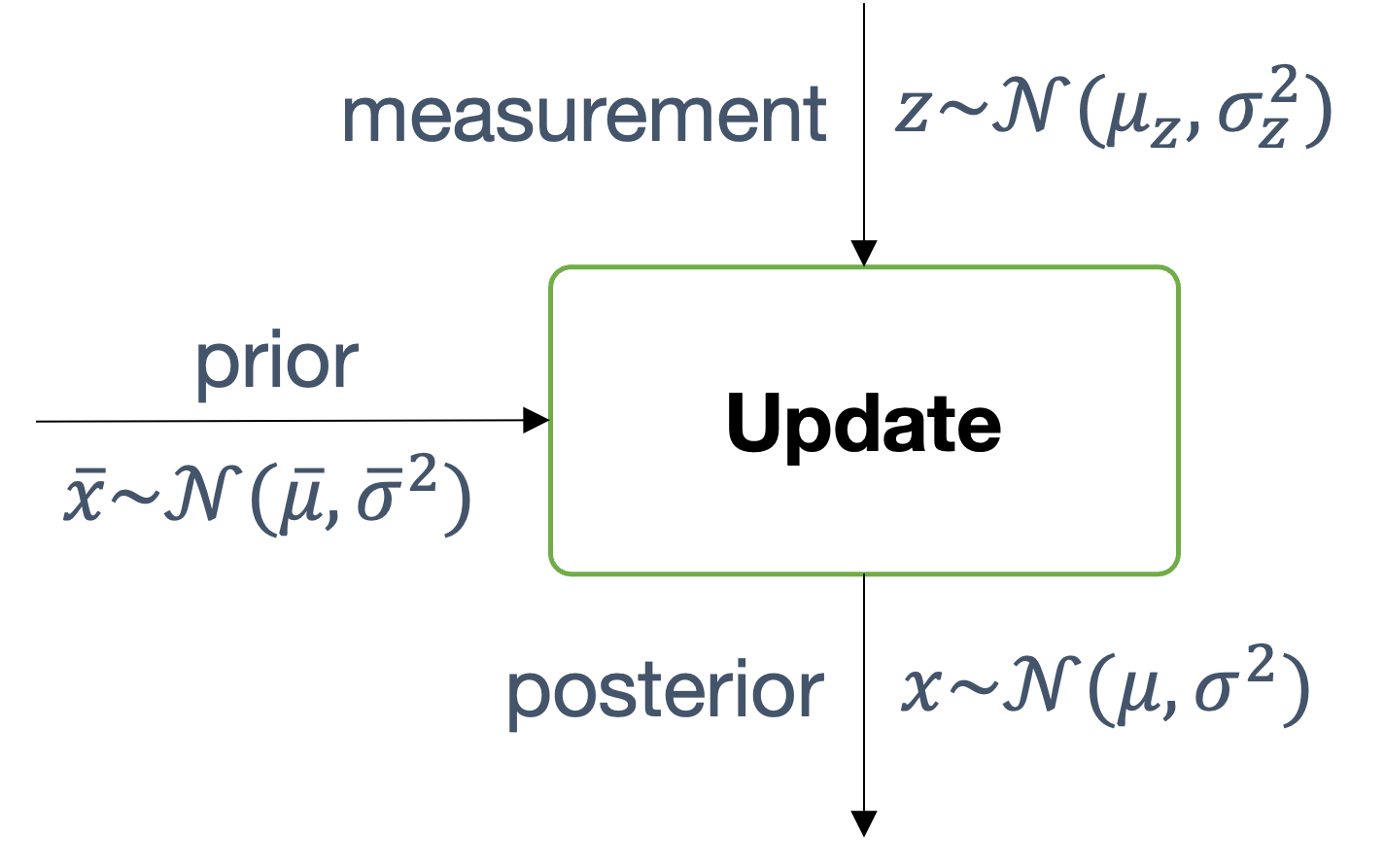

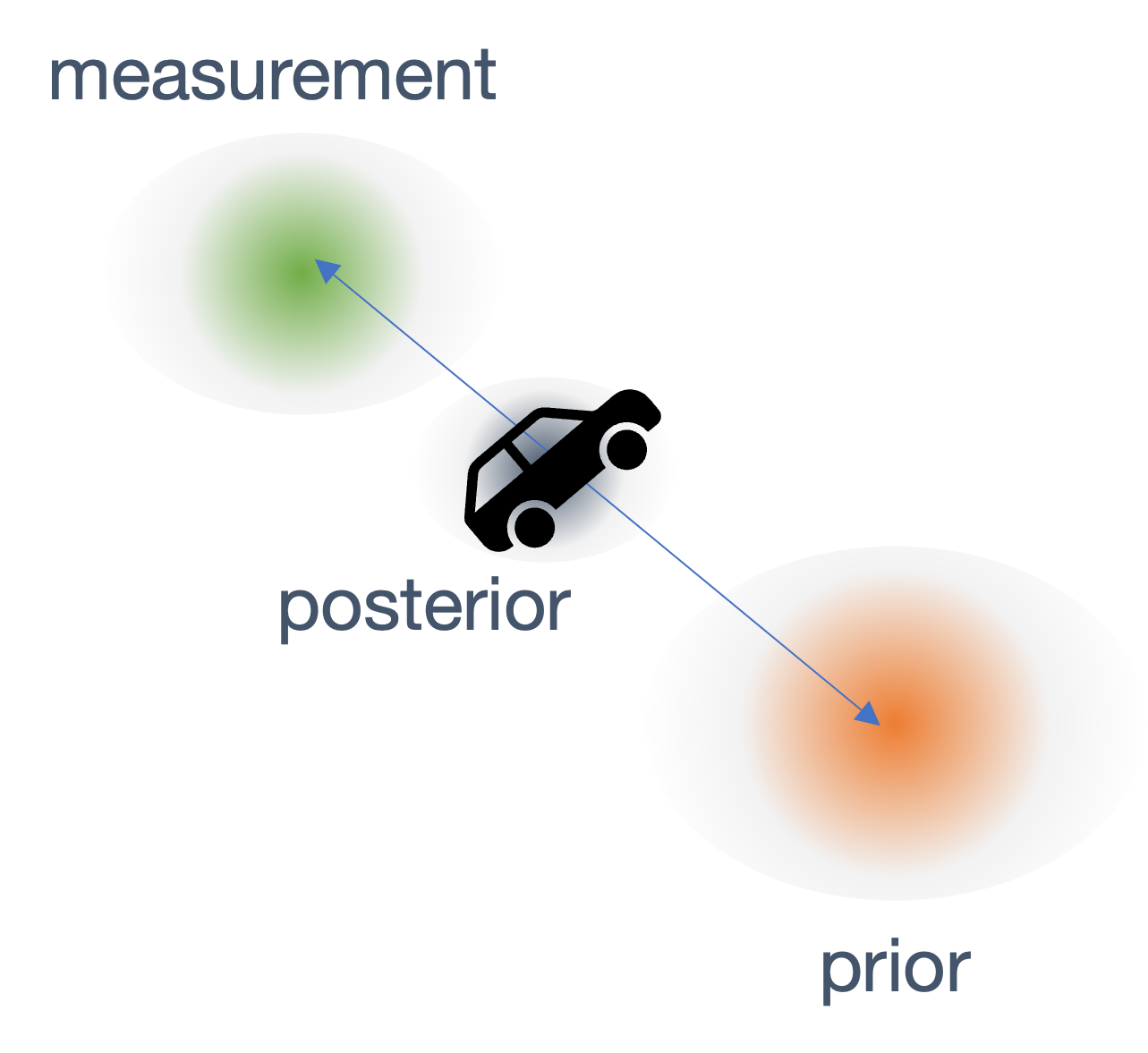

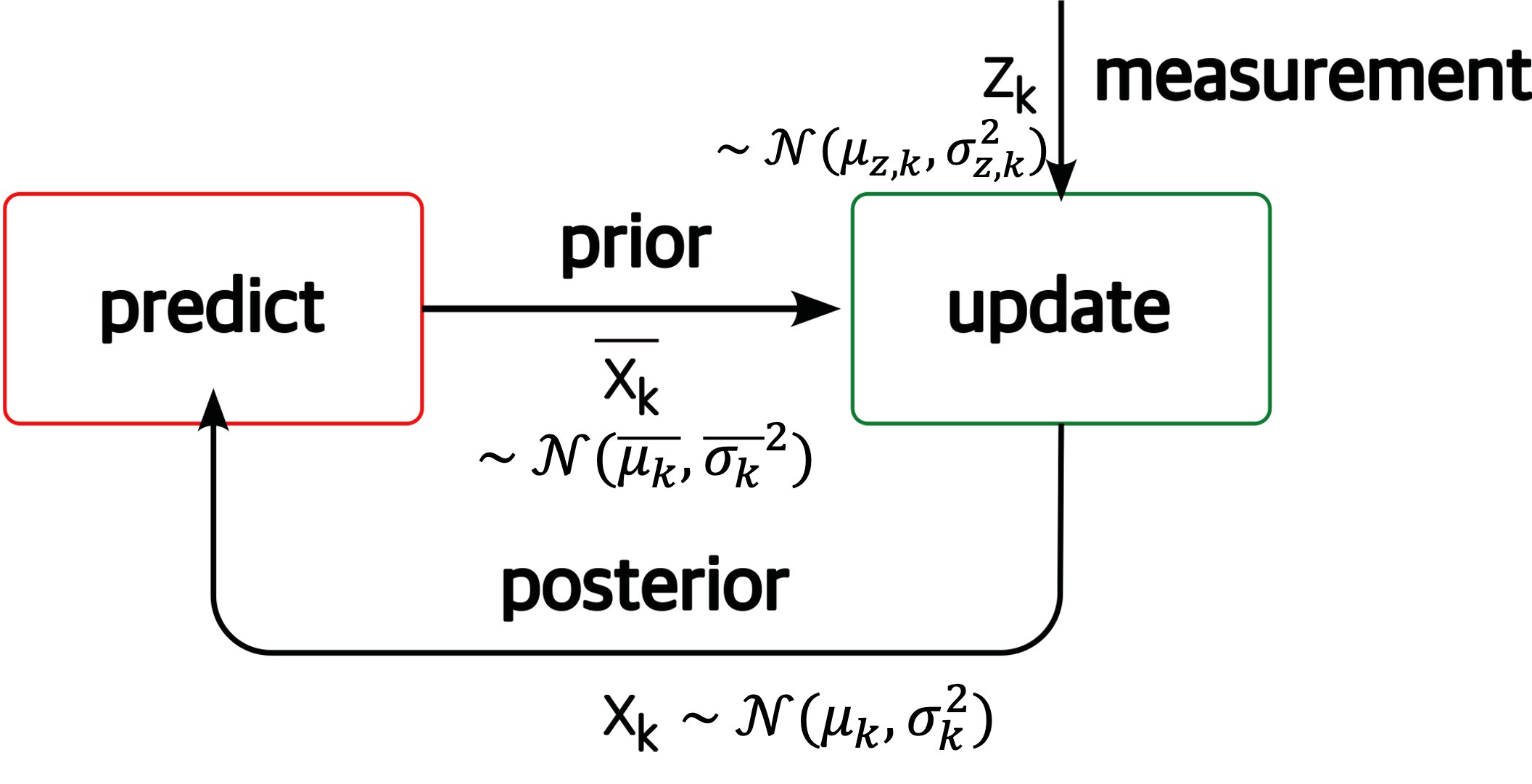

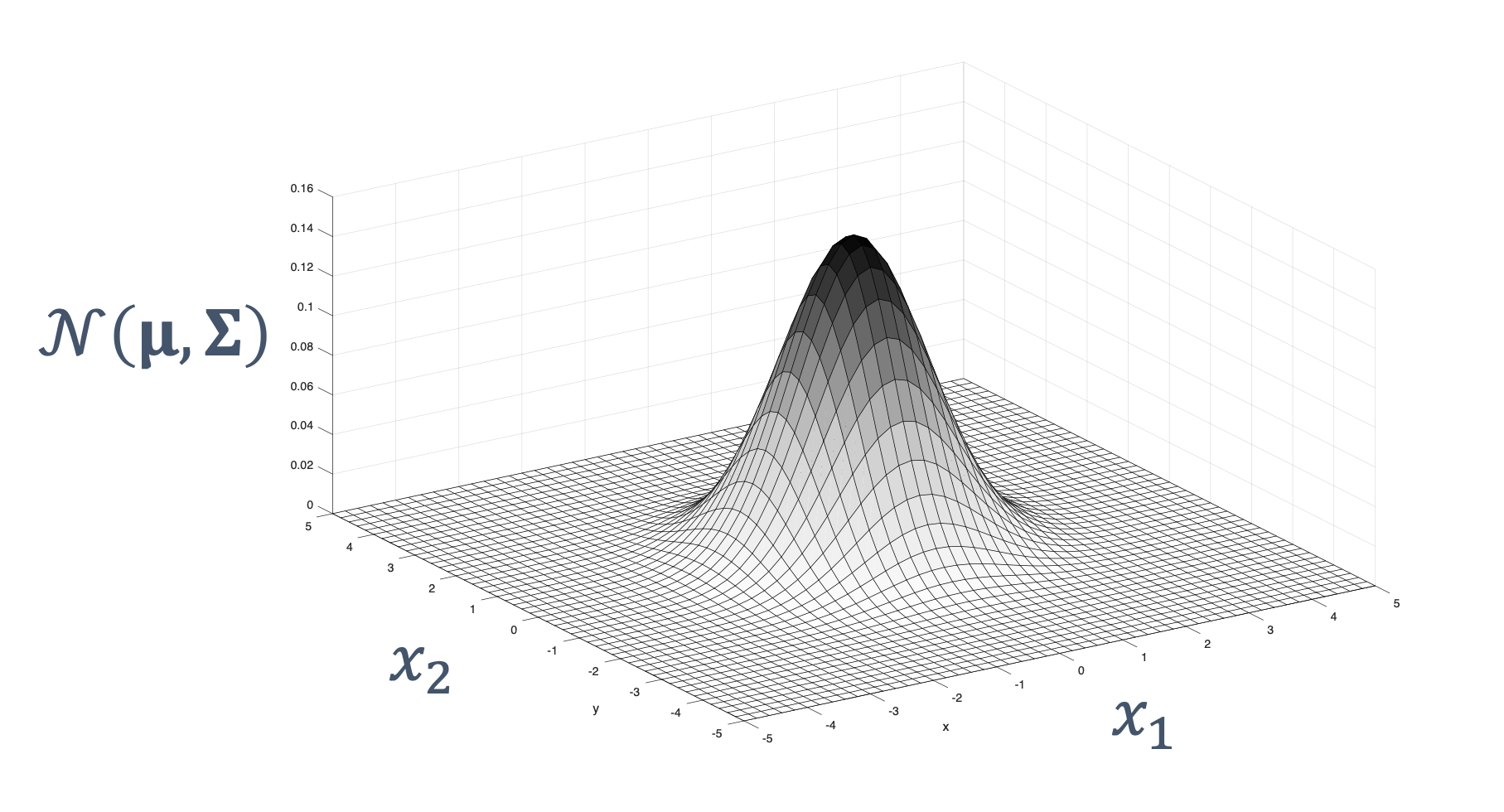

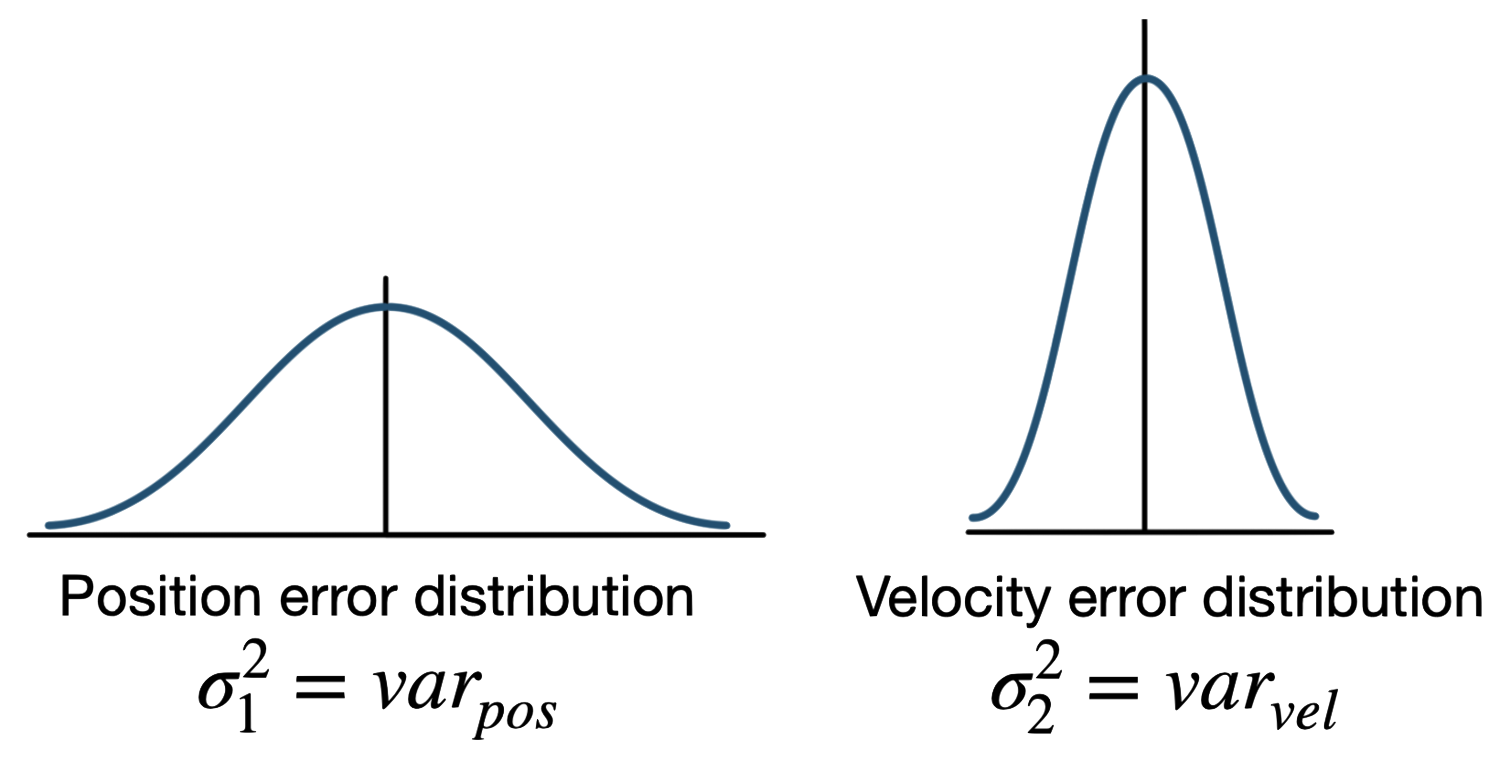

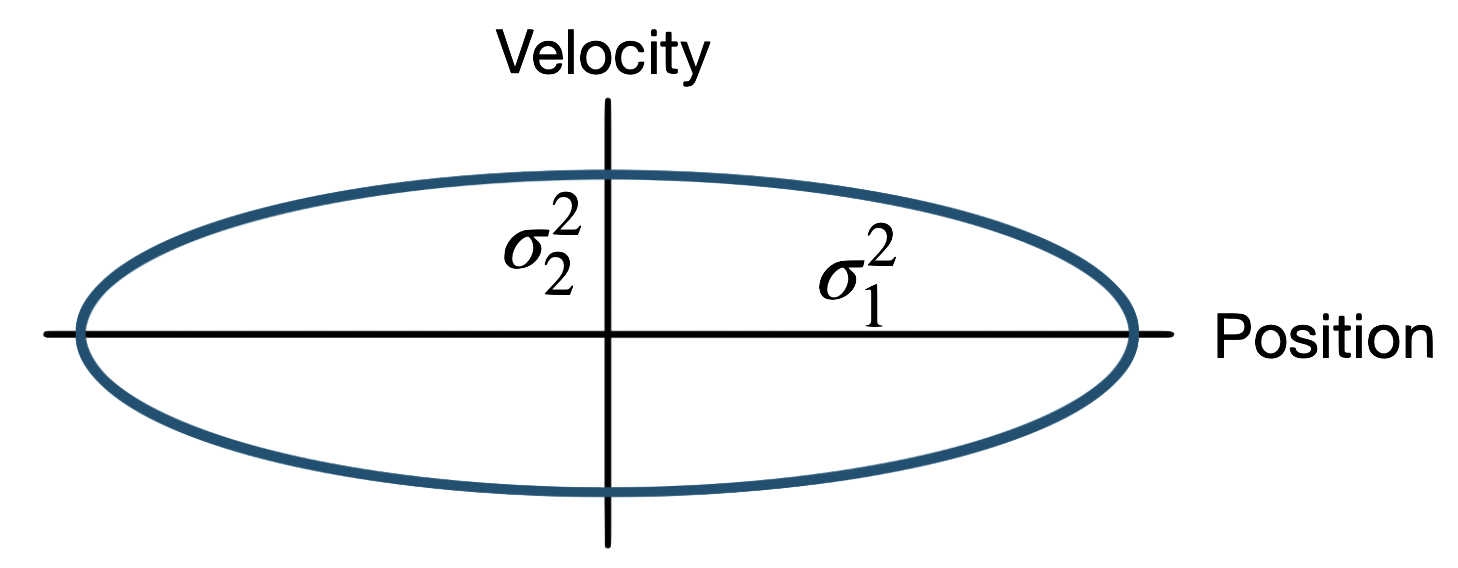

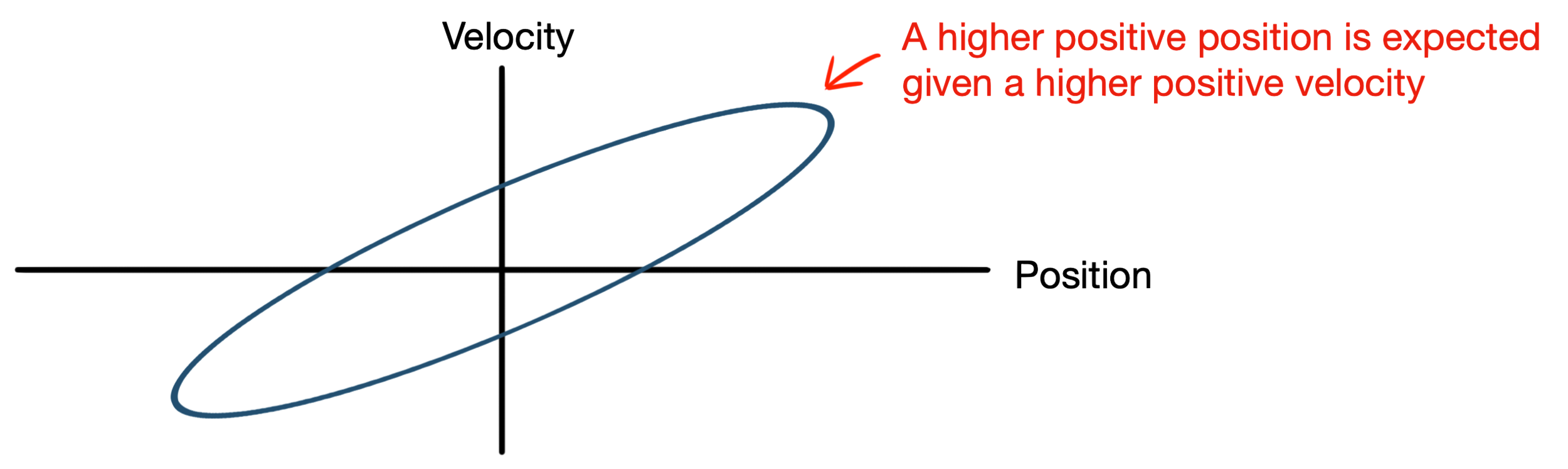

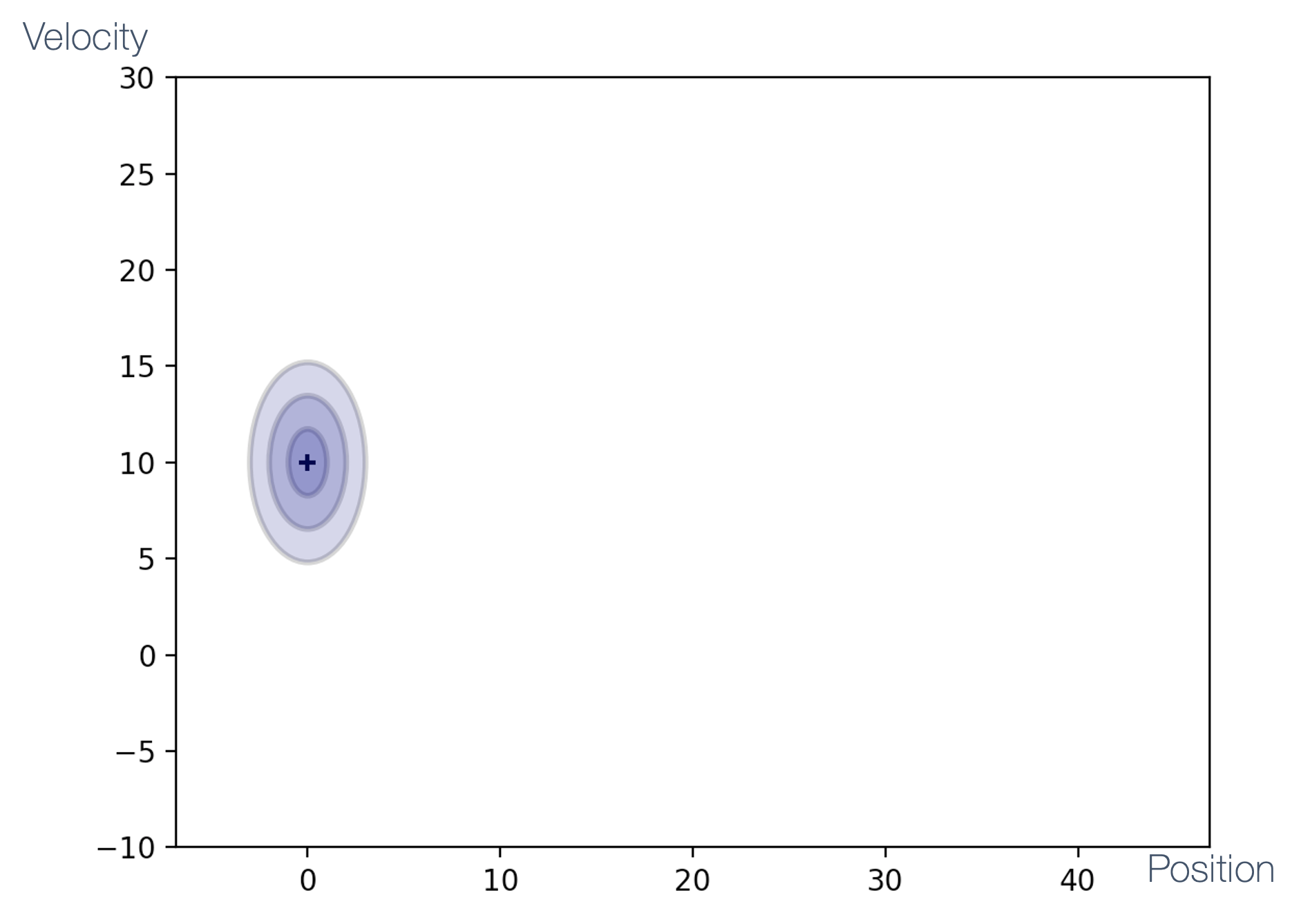

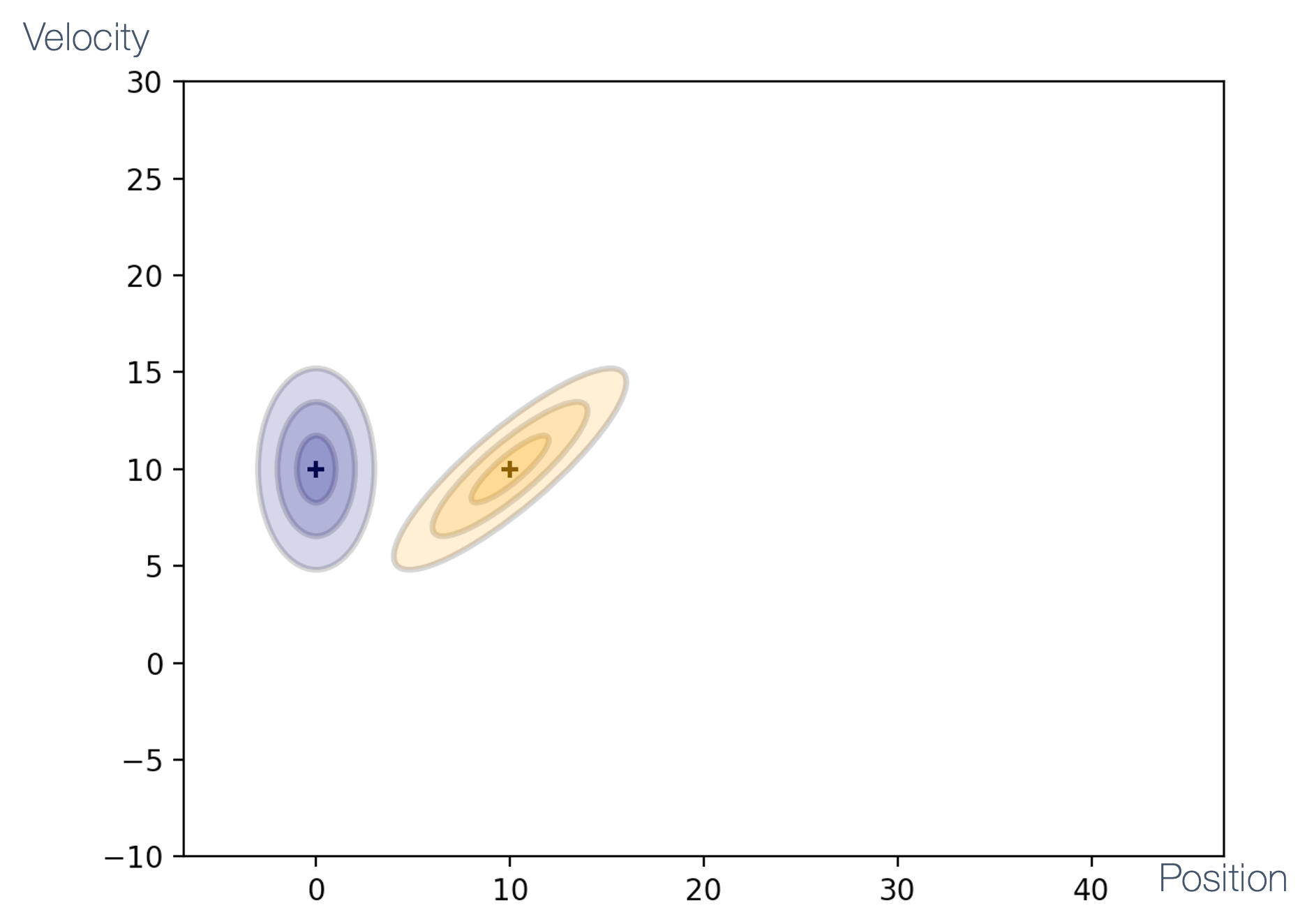

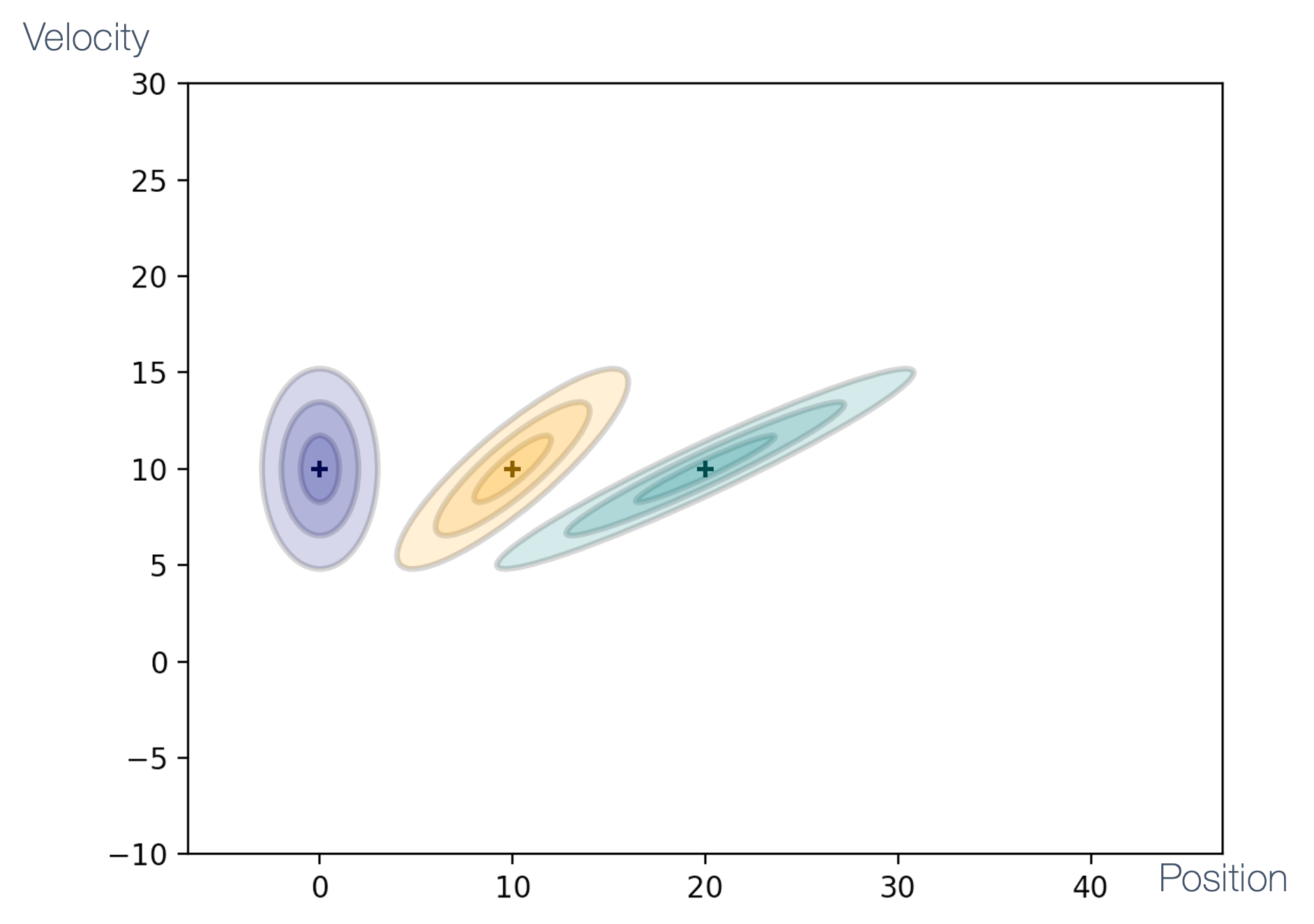

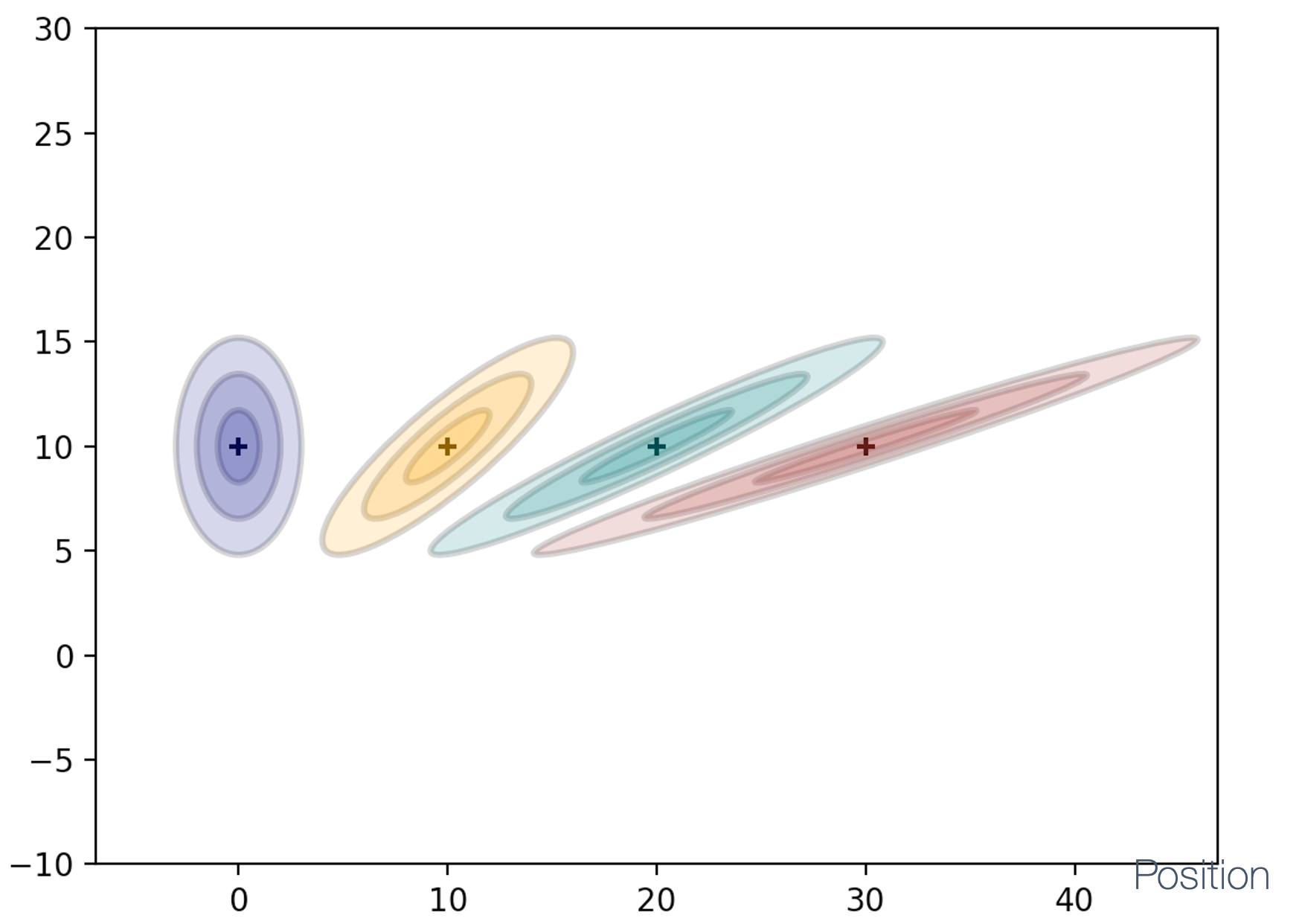

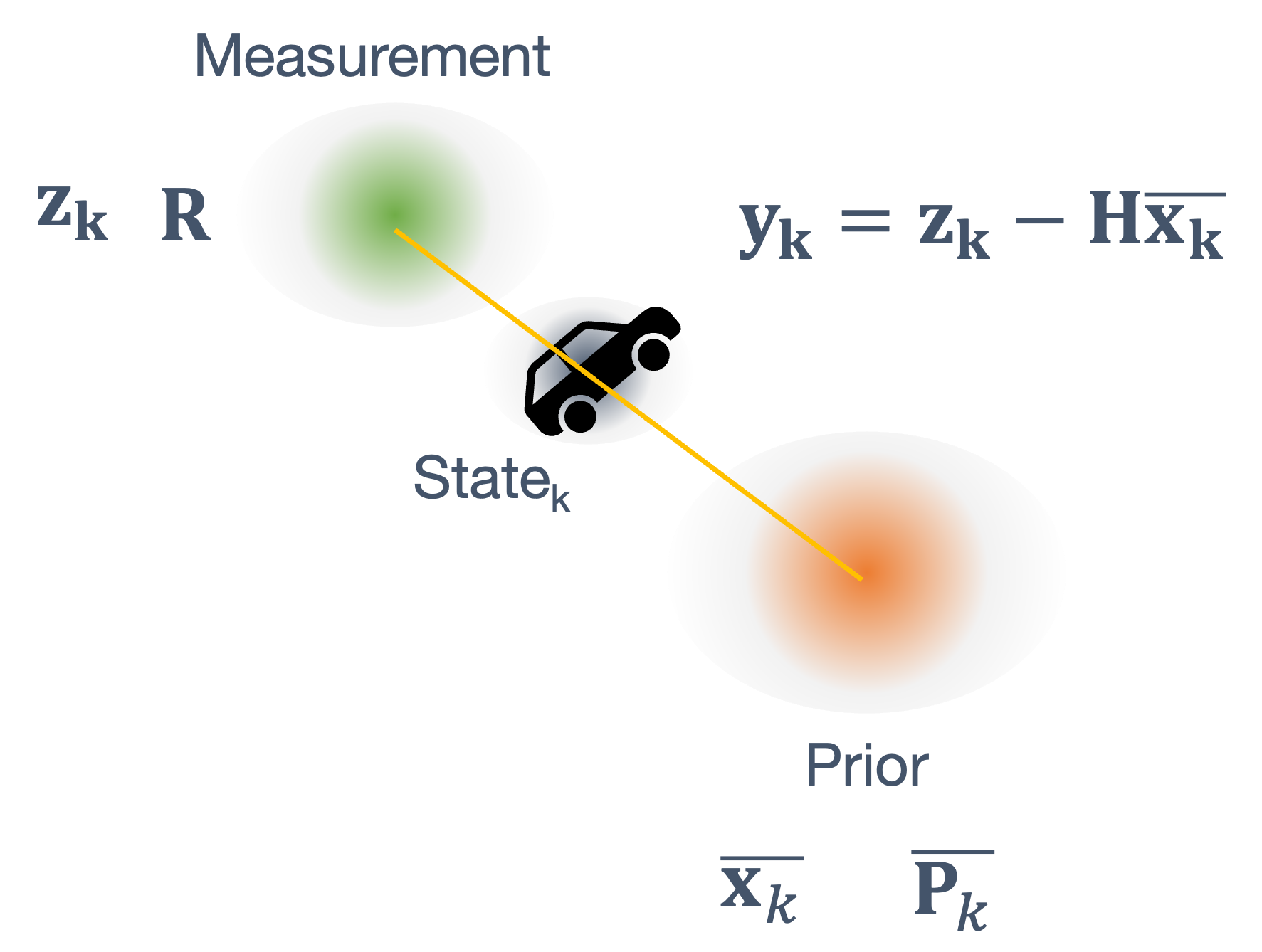

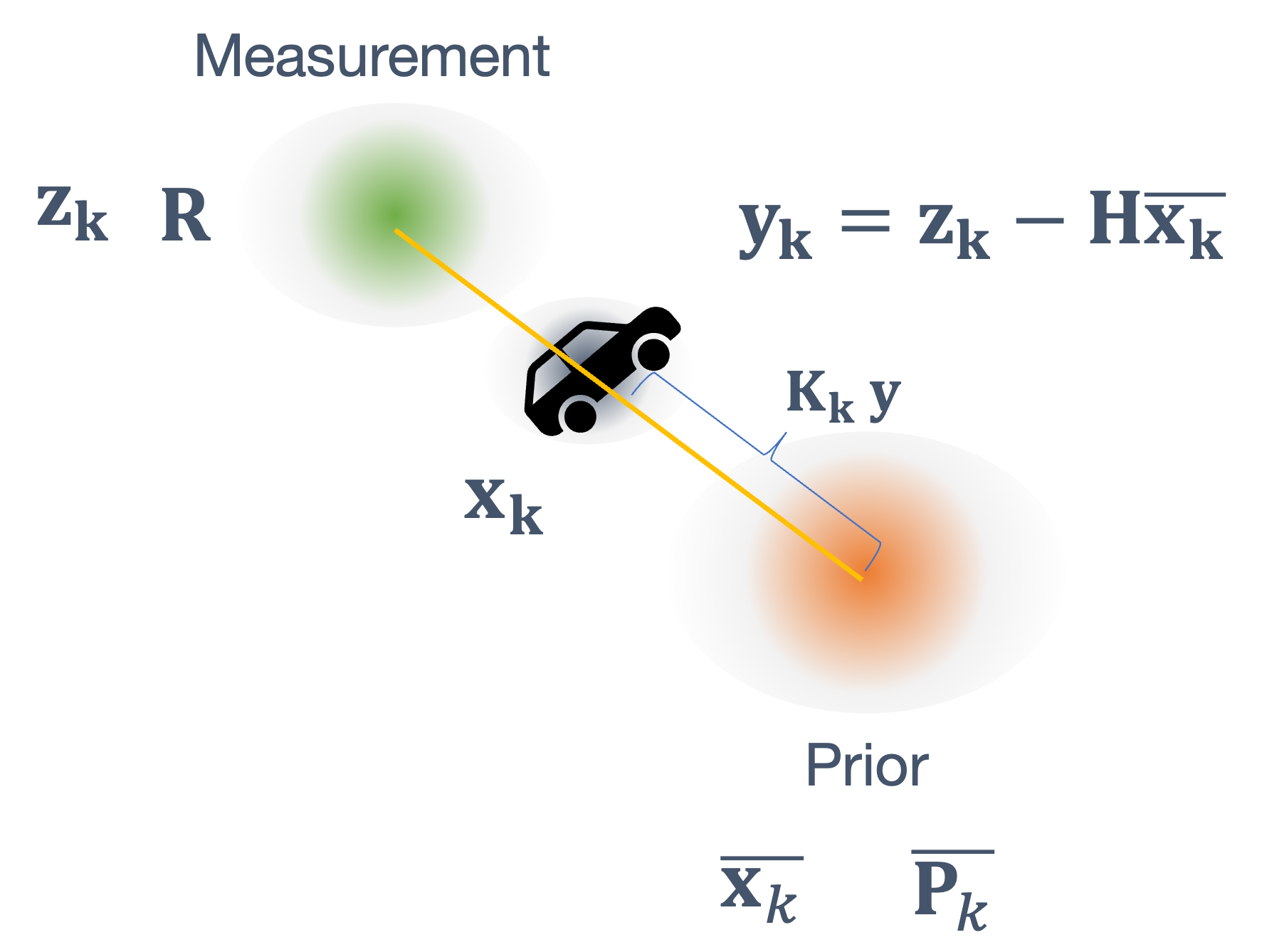

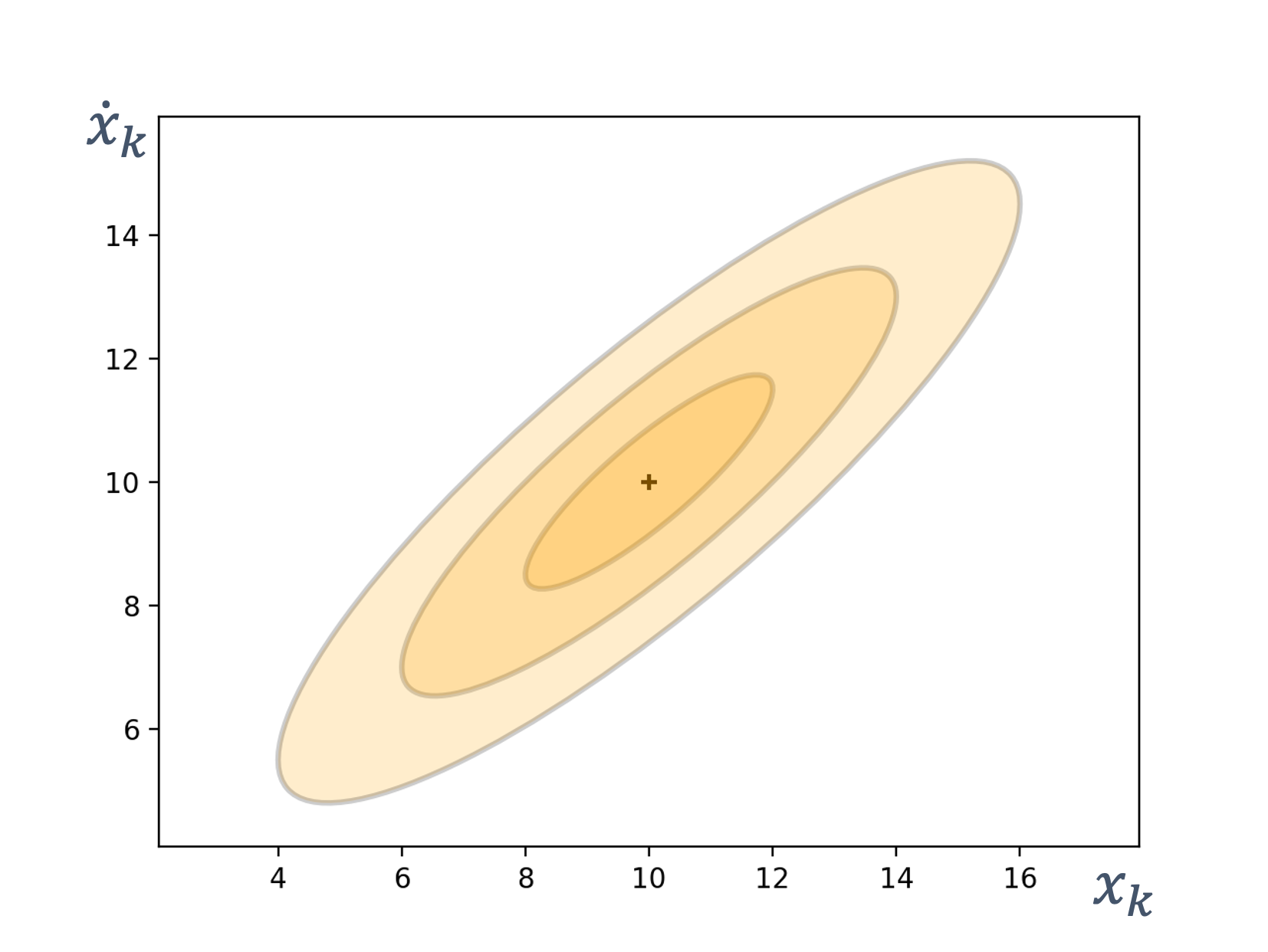

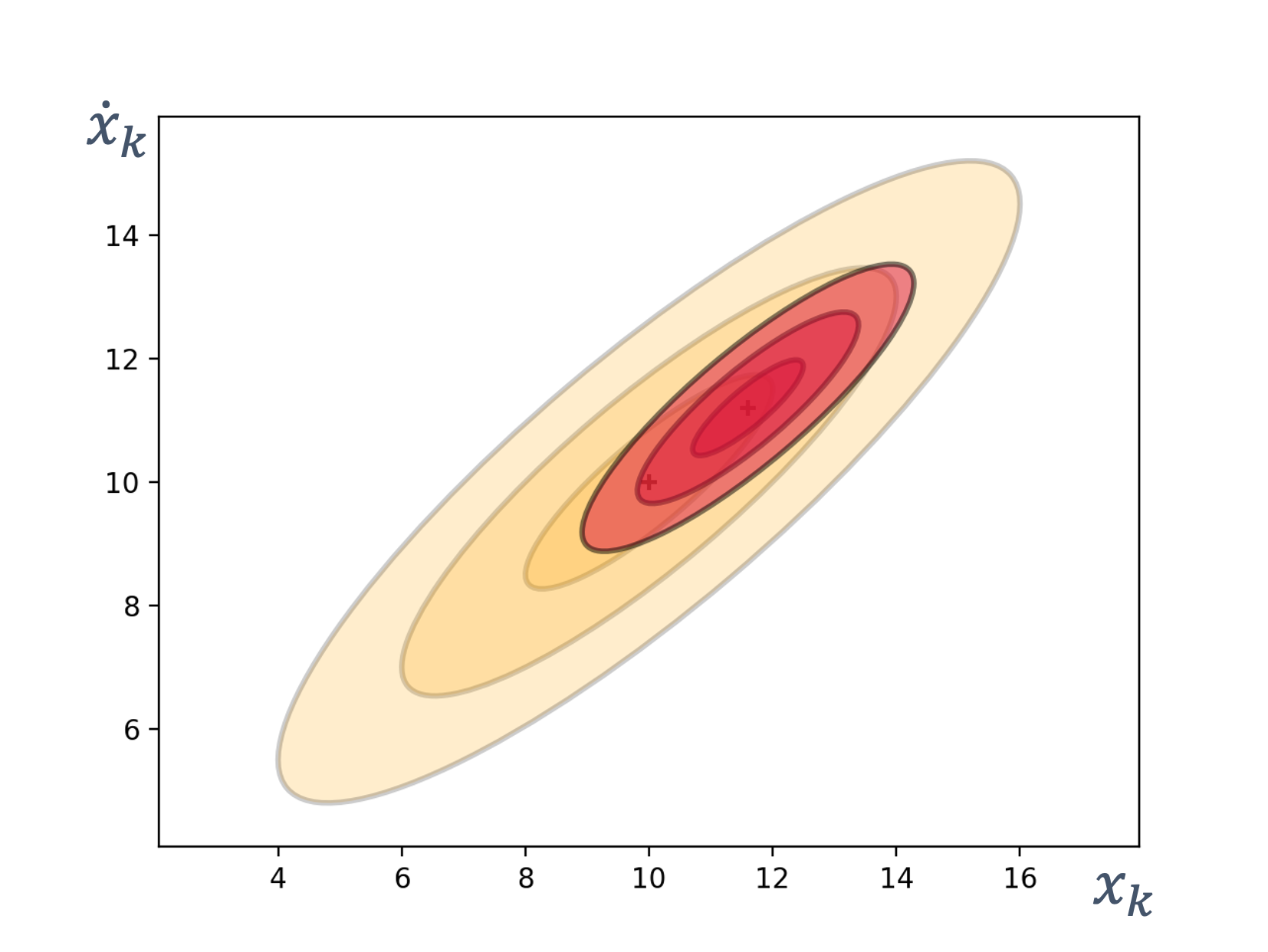

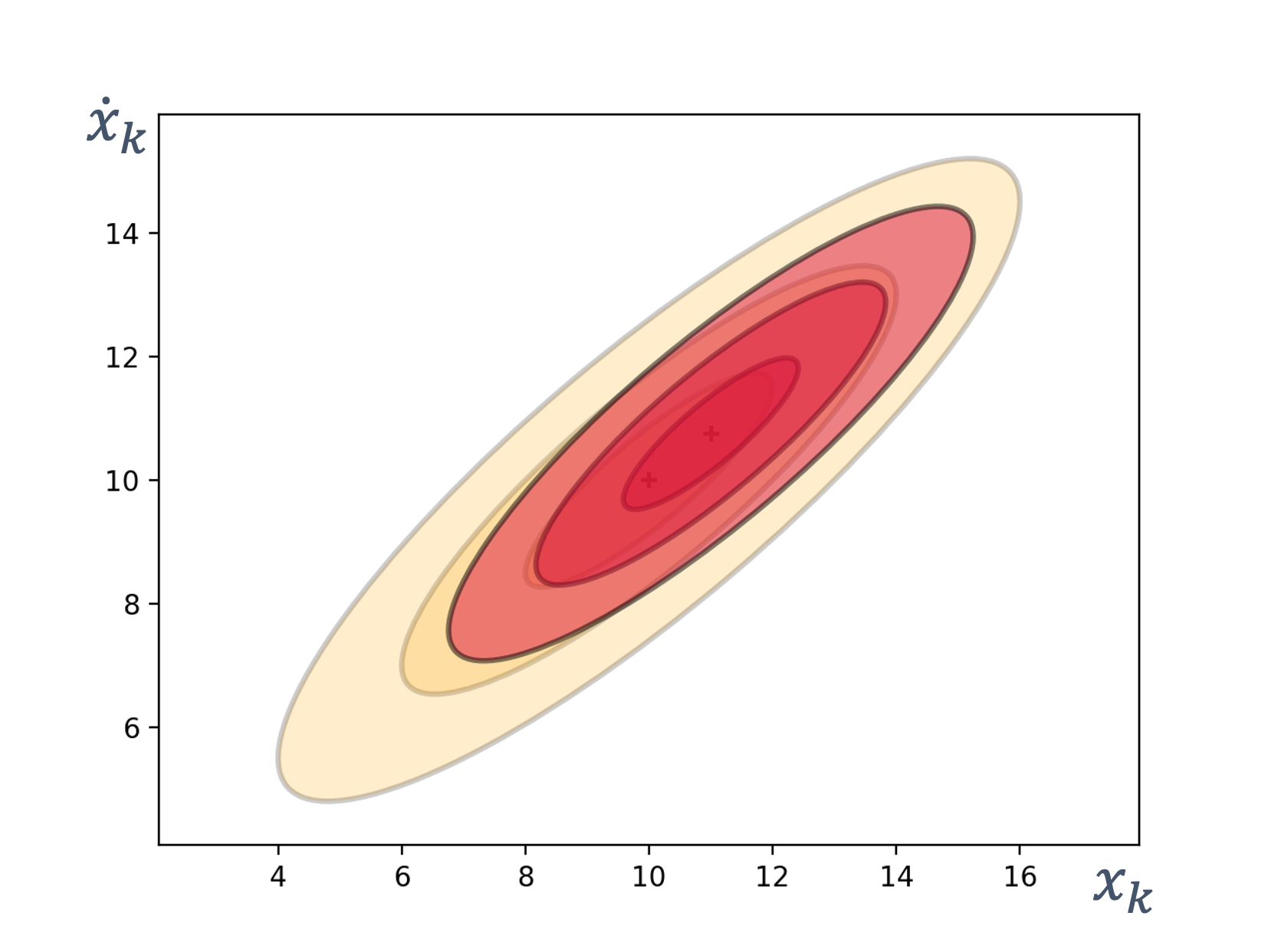

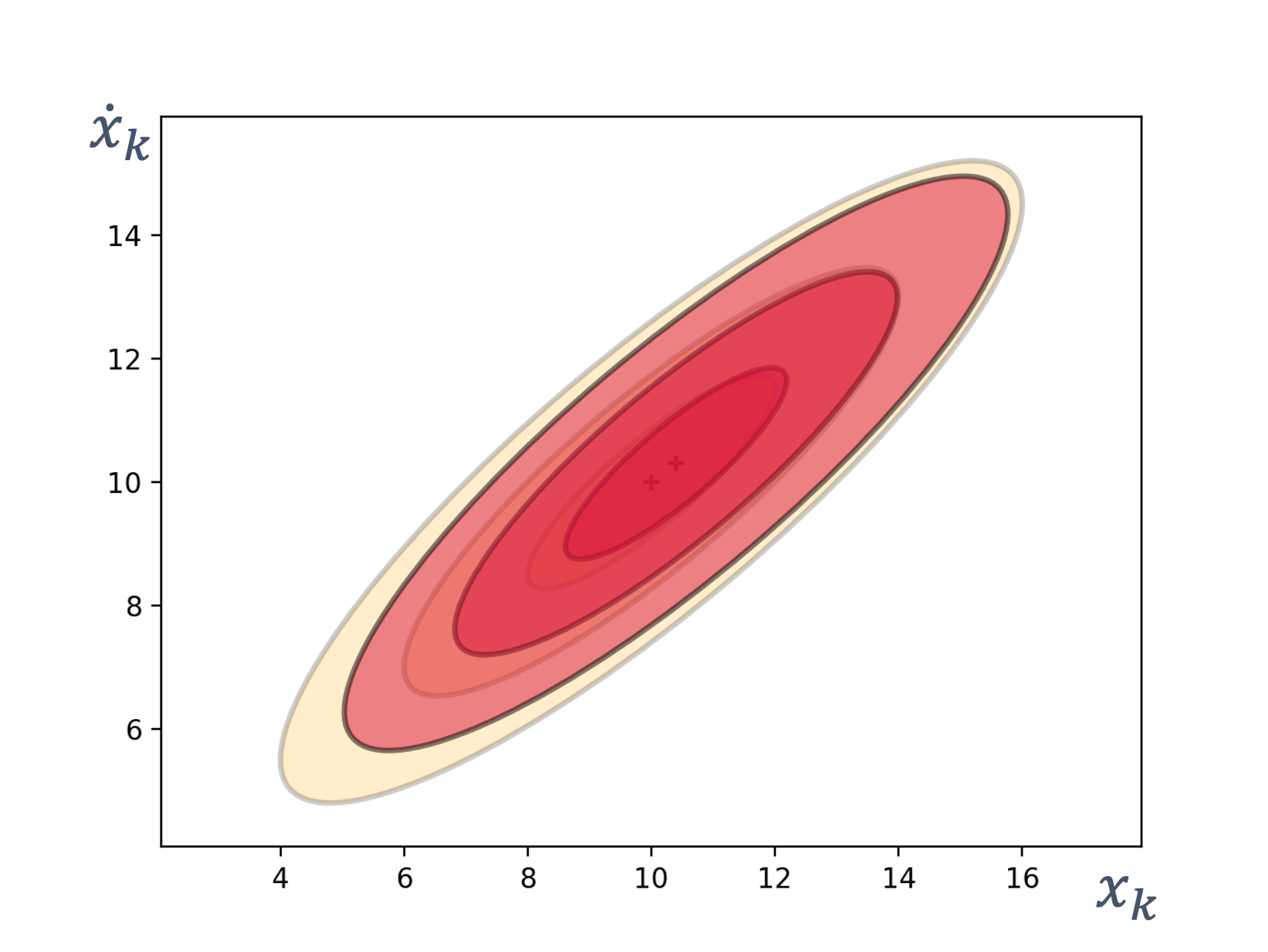

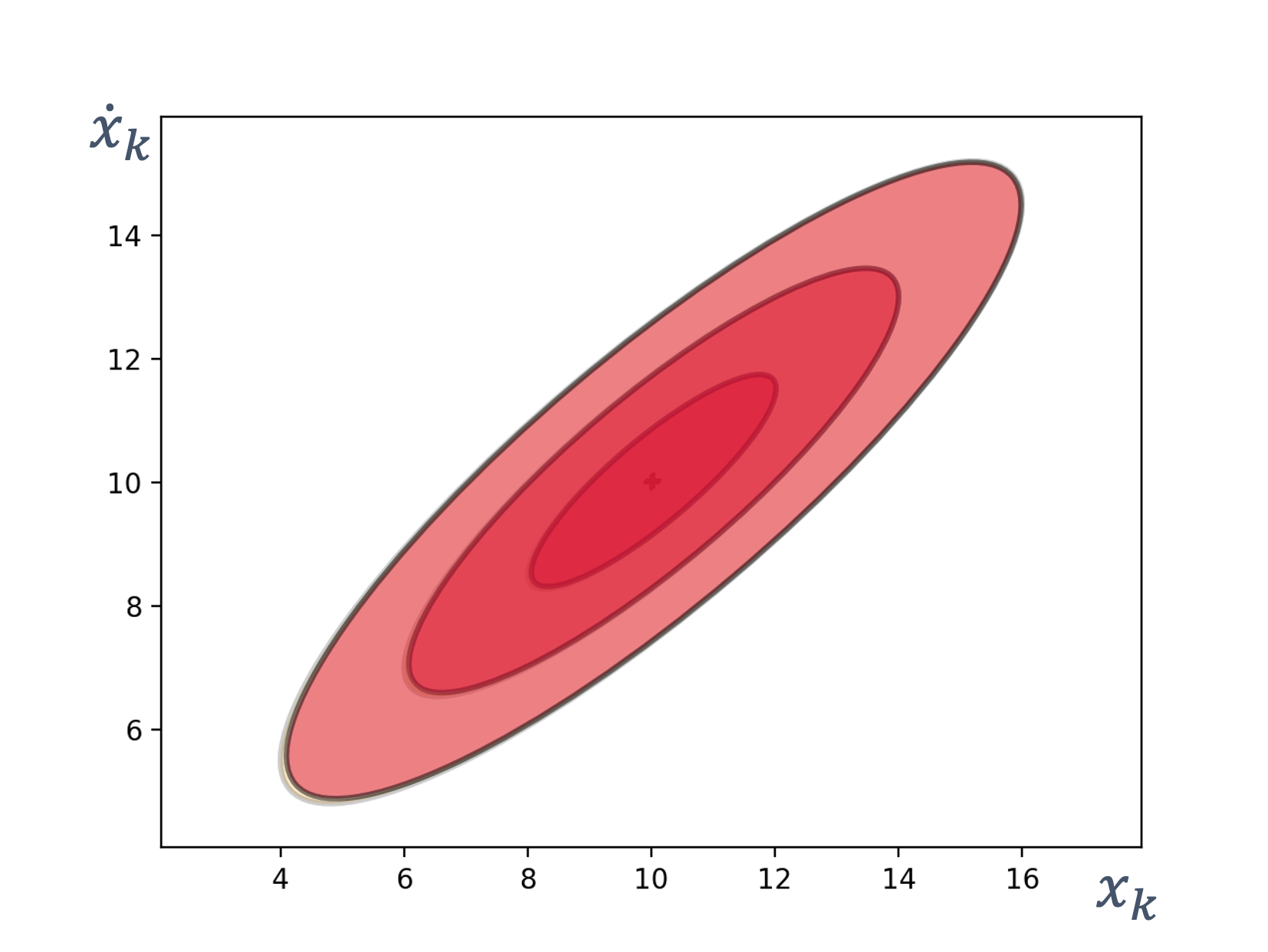

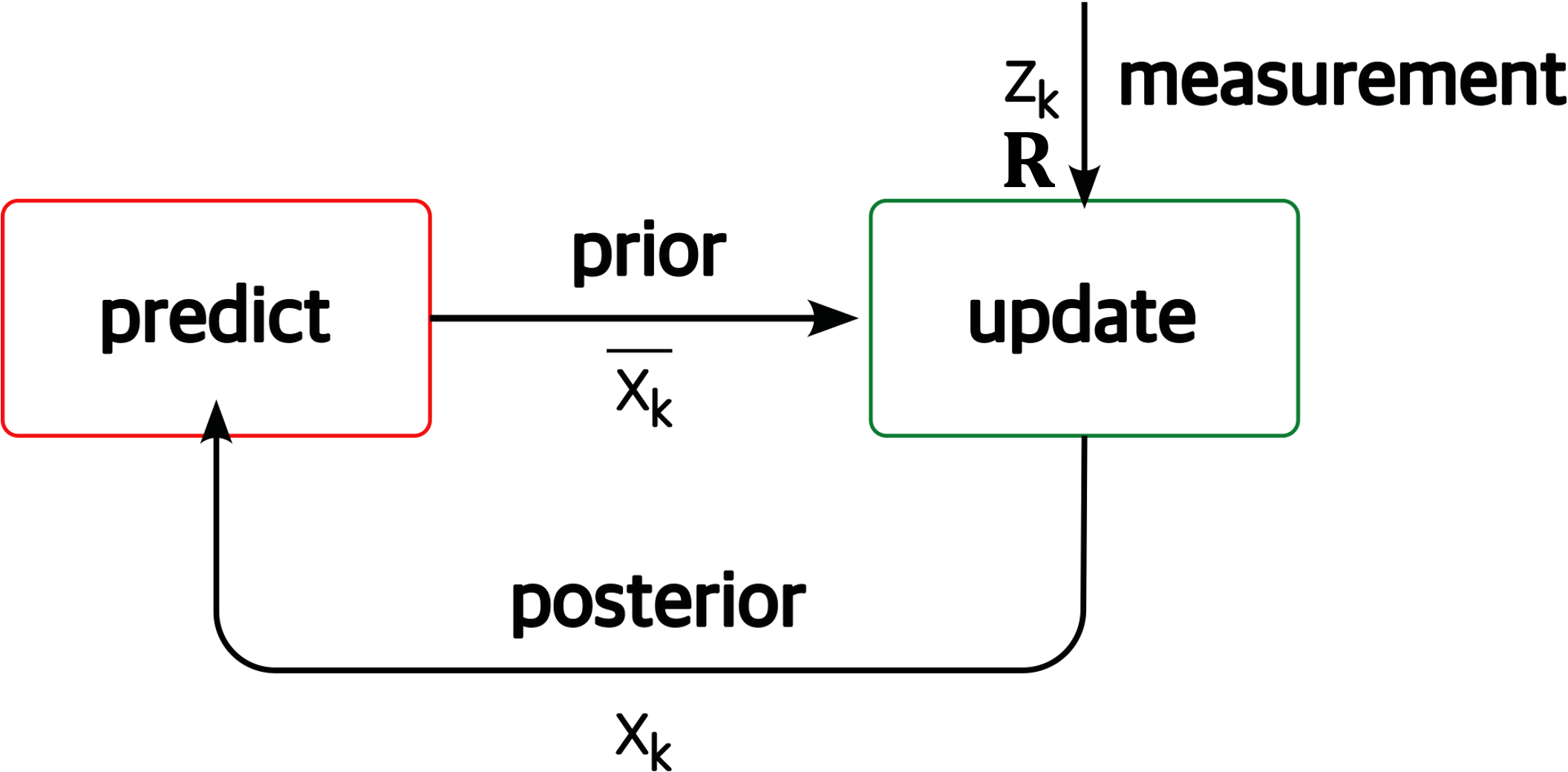

- 8 State Estimation

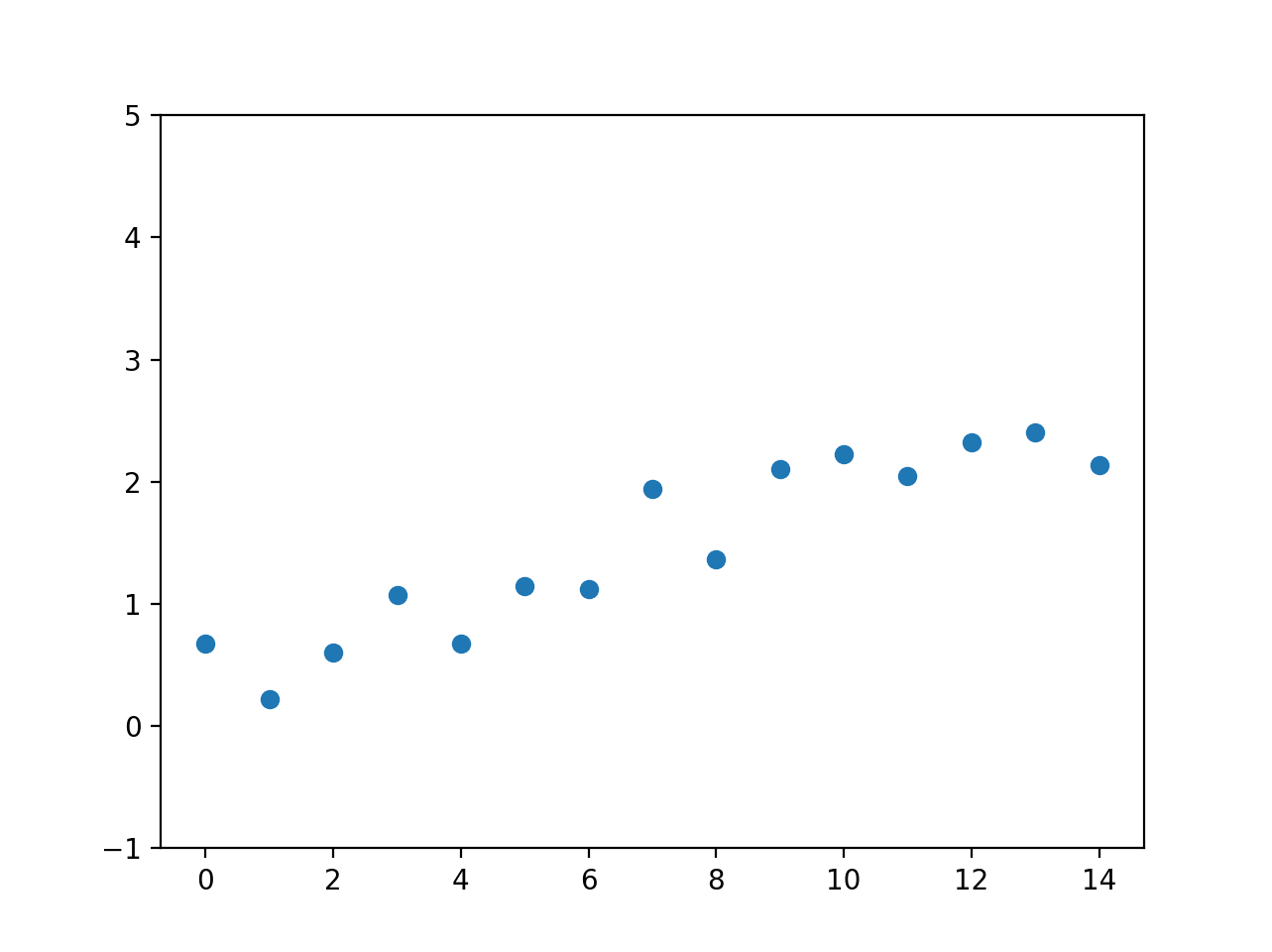

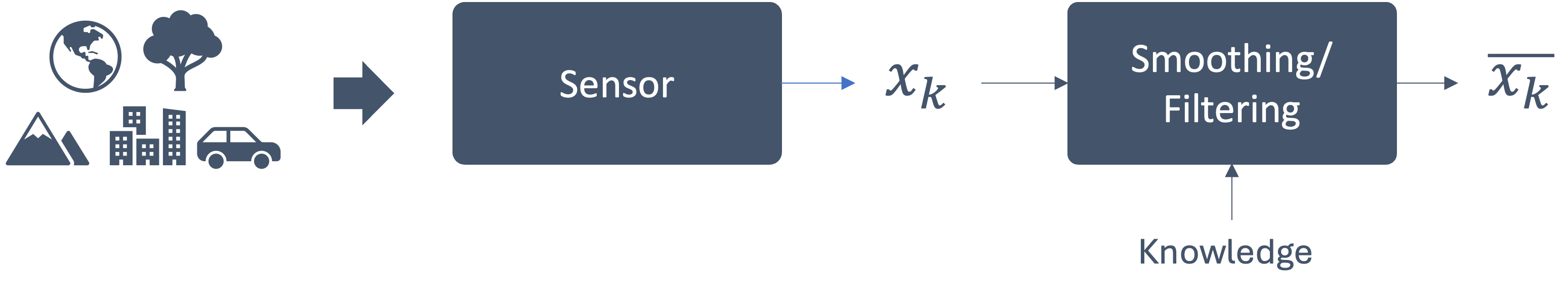

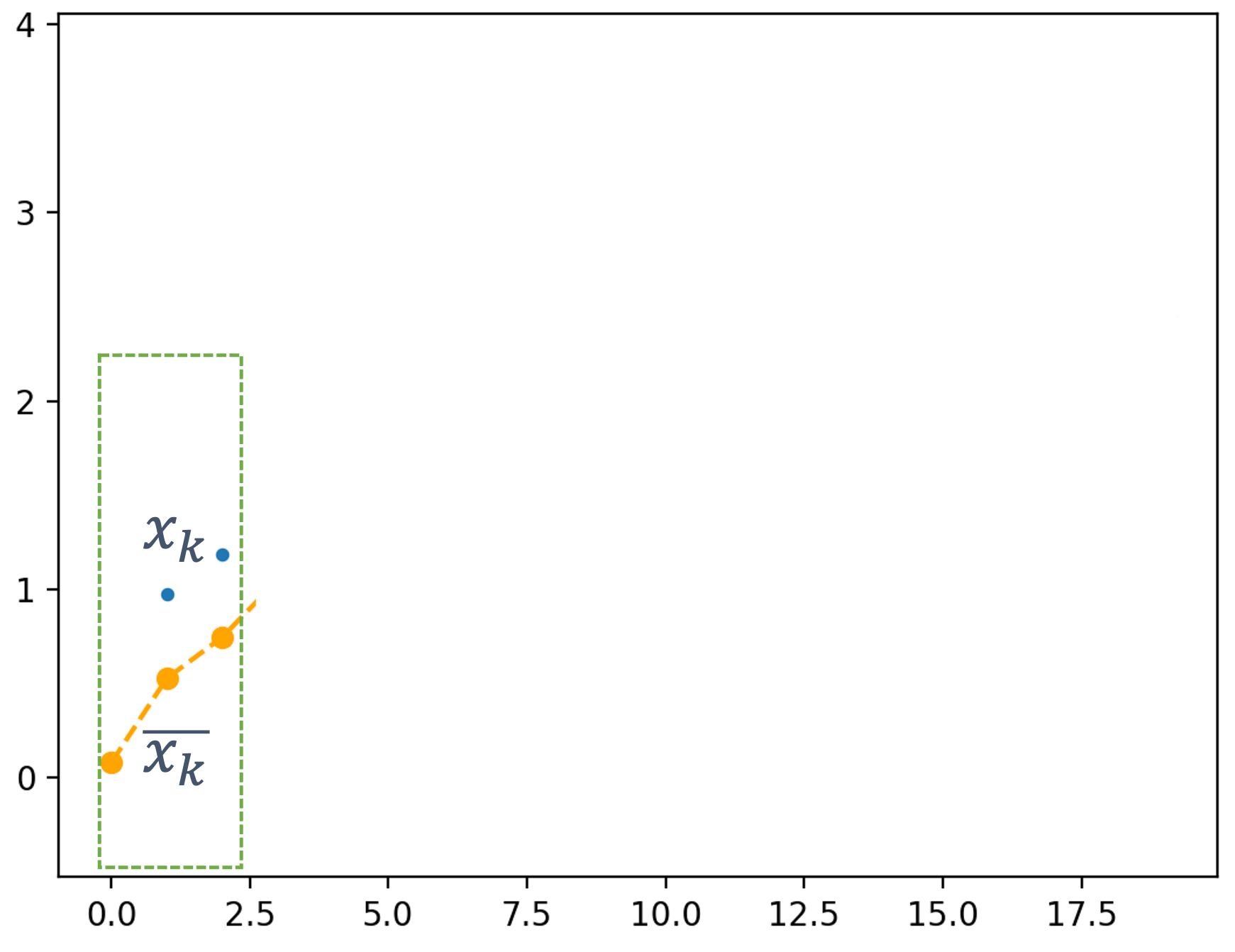

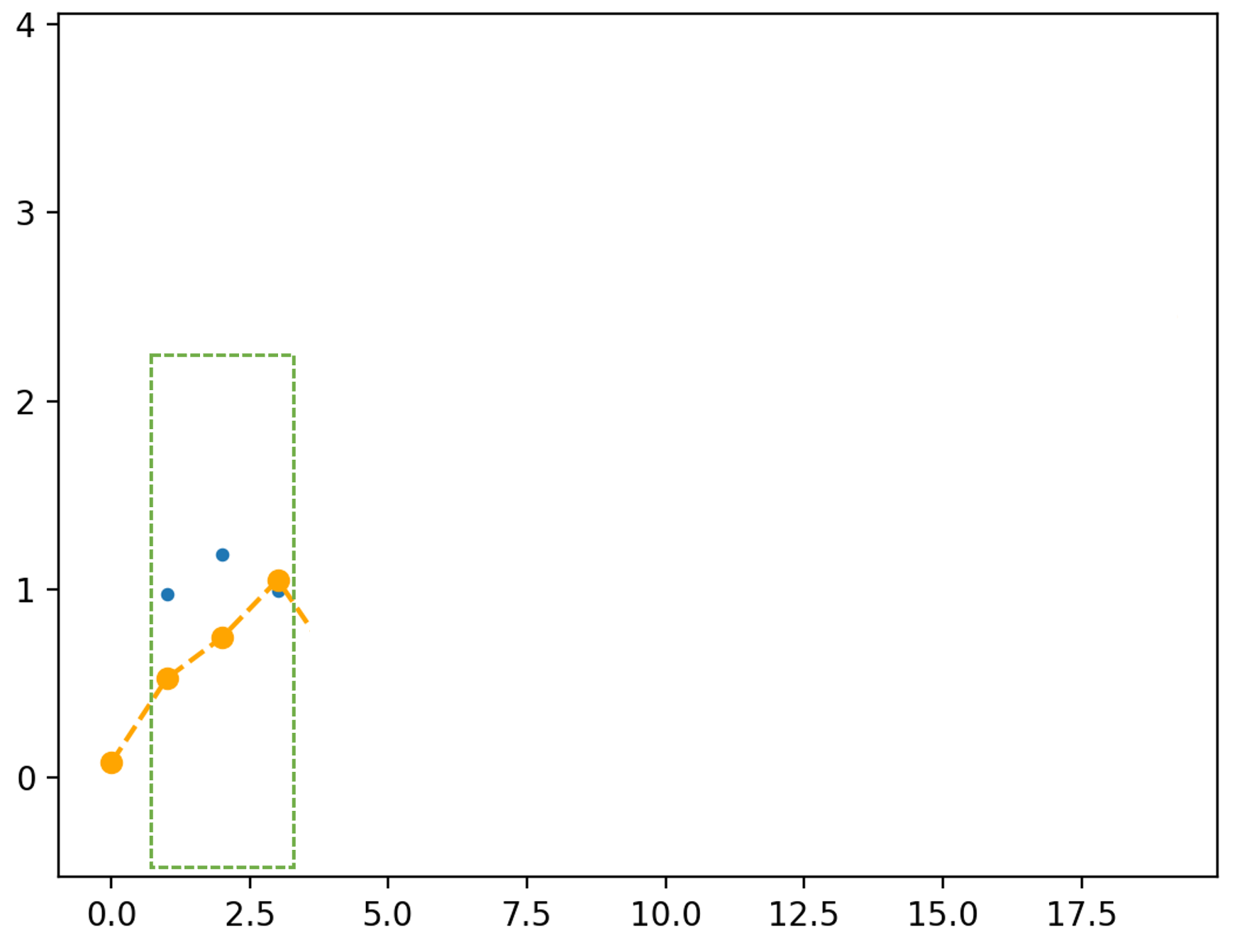

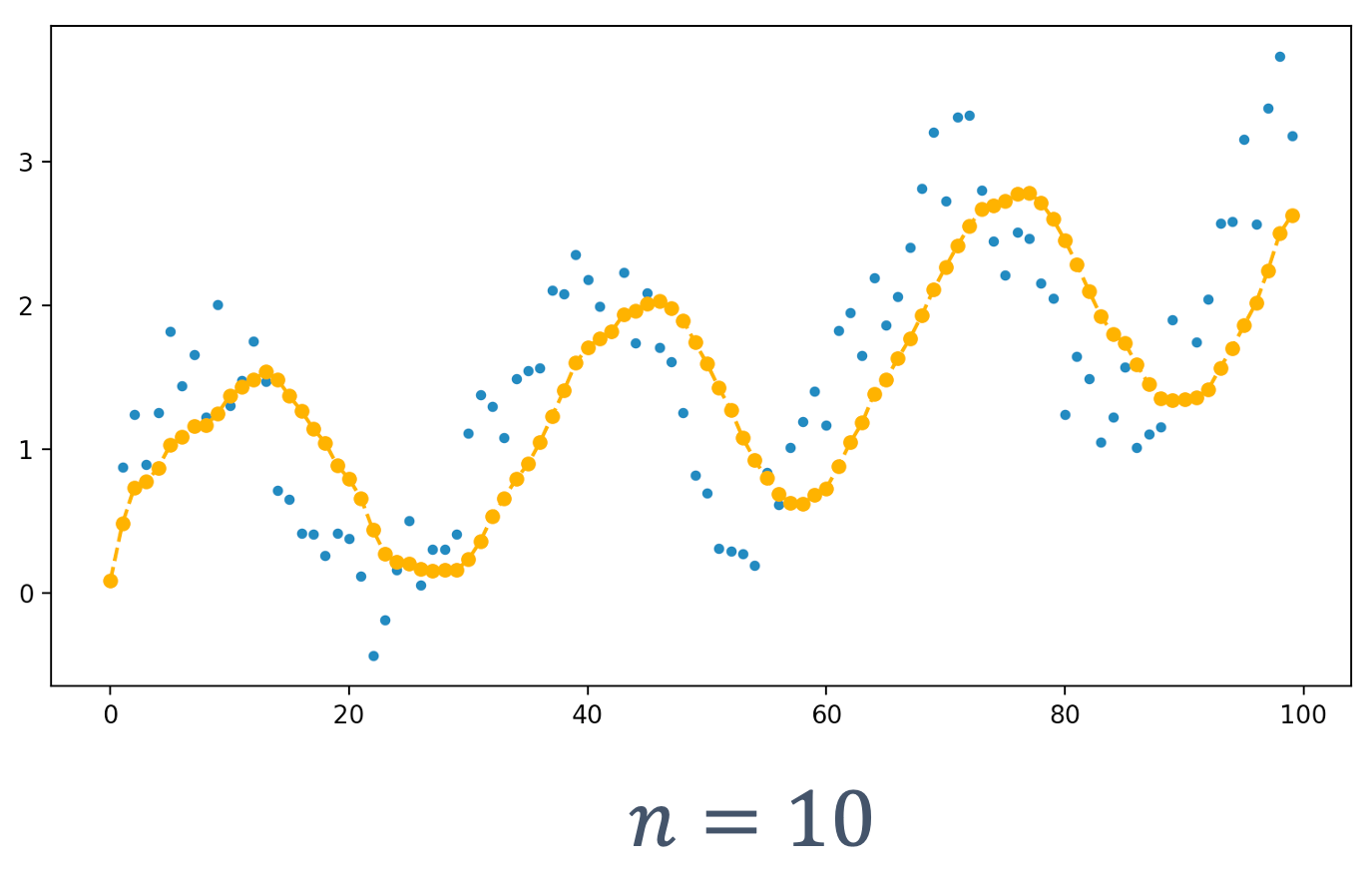

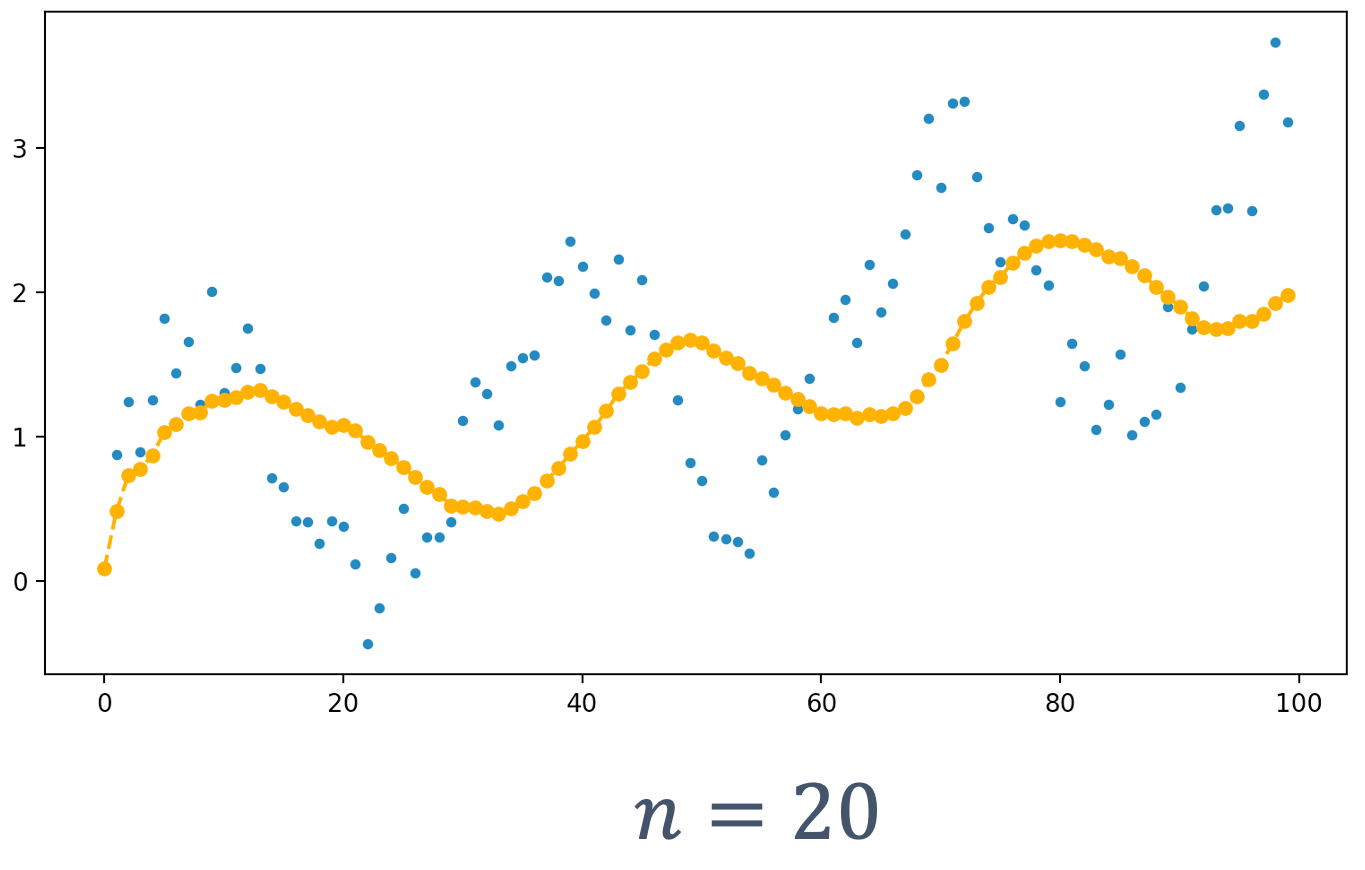

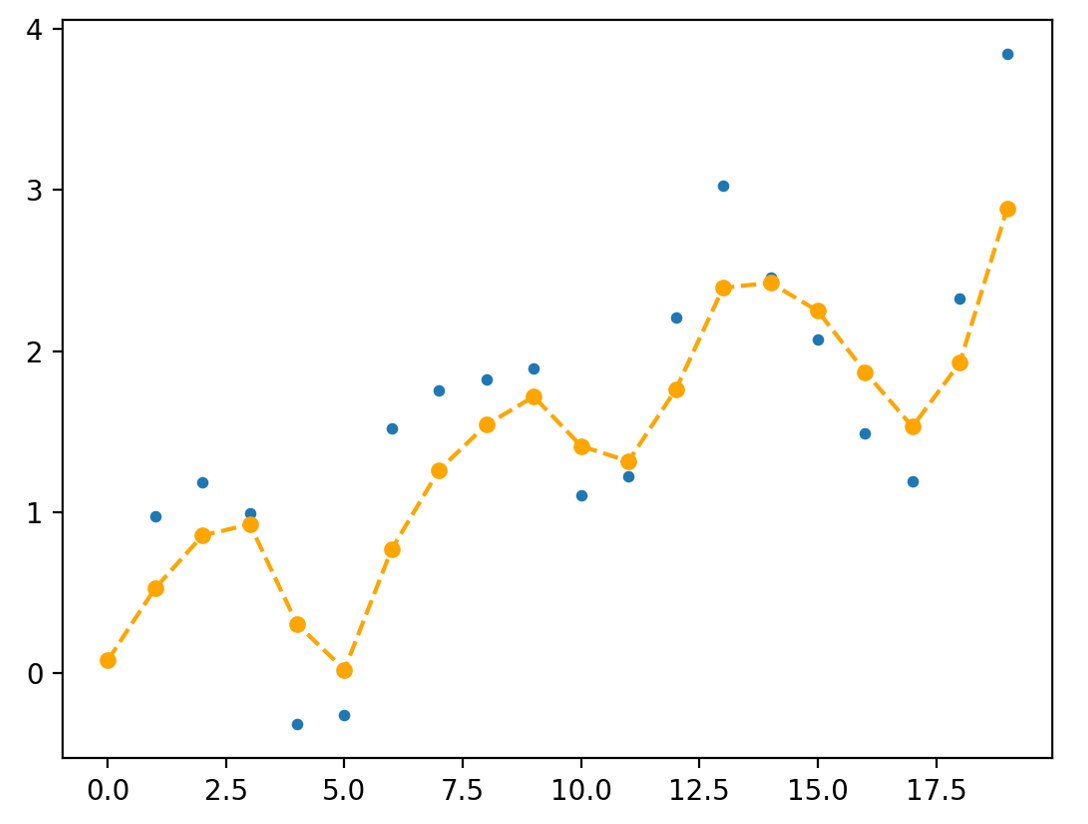

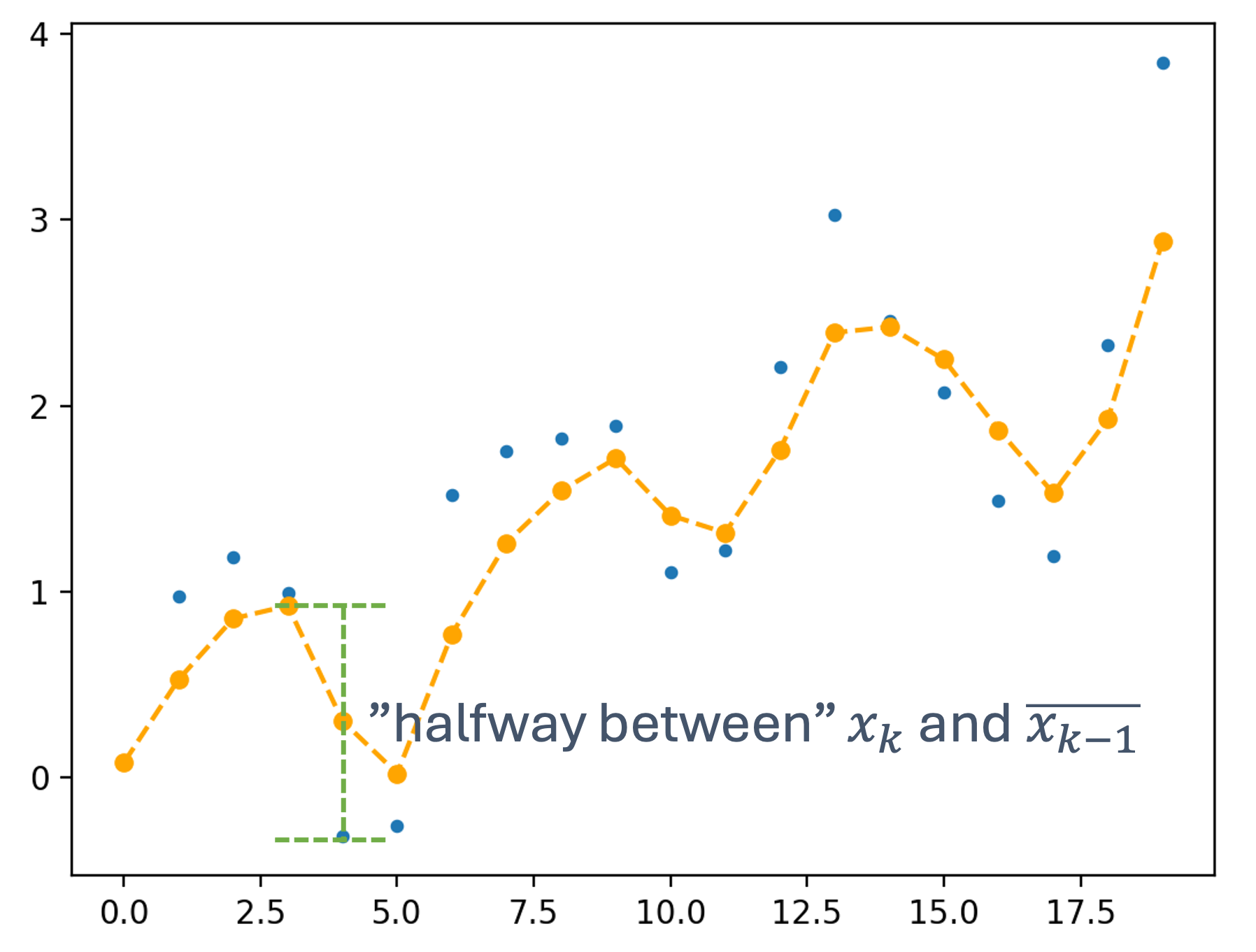

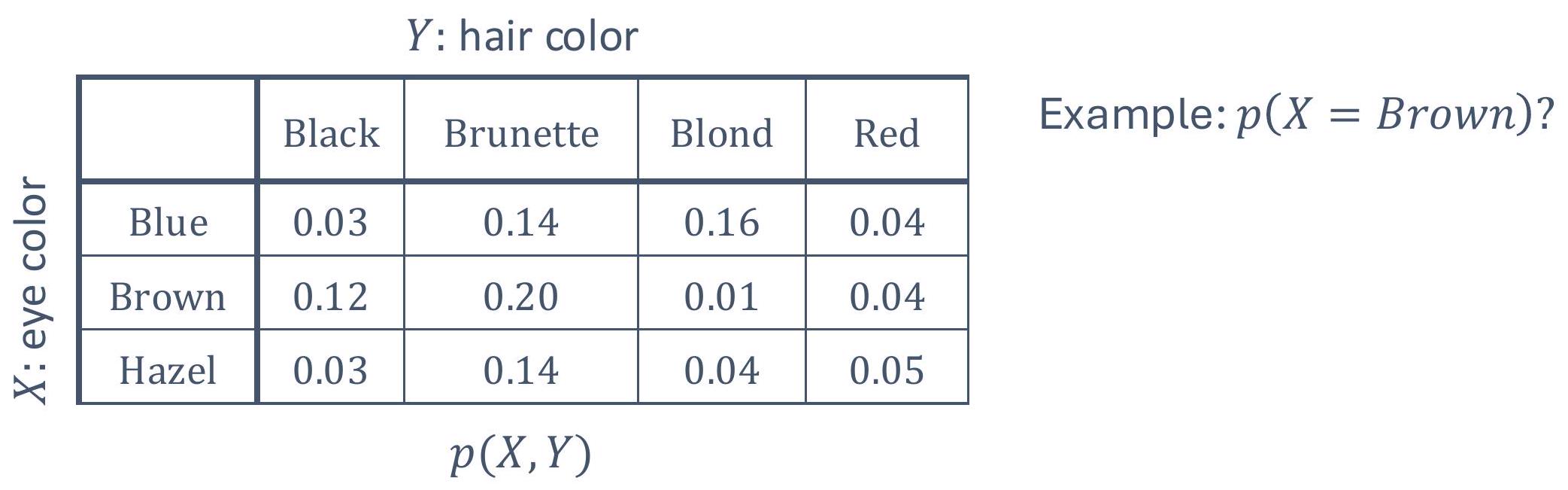

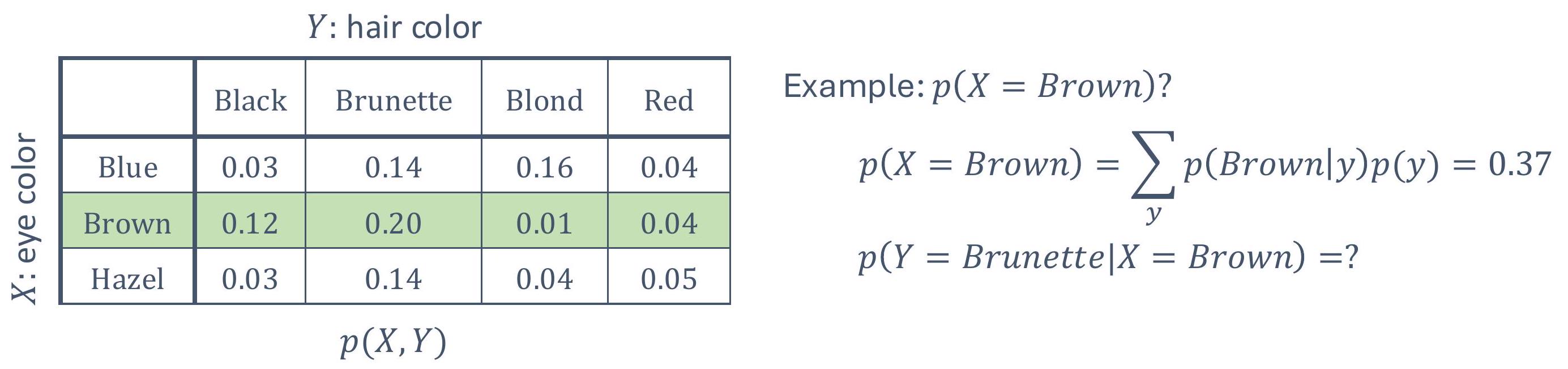

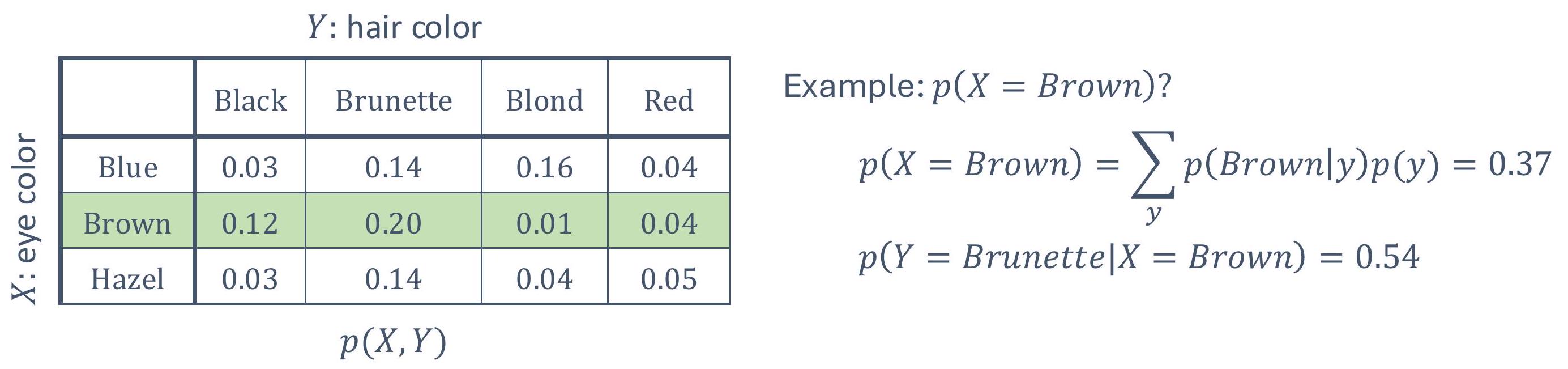

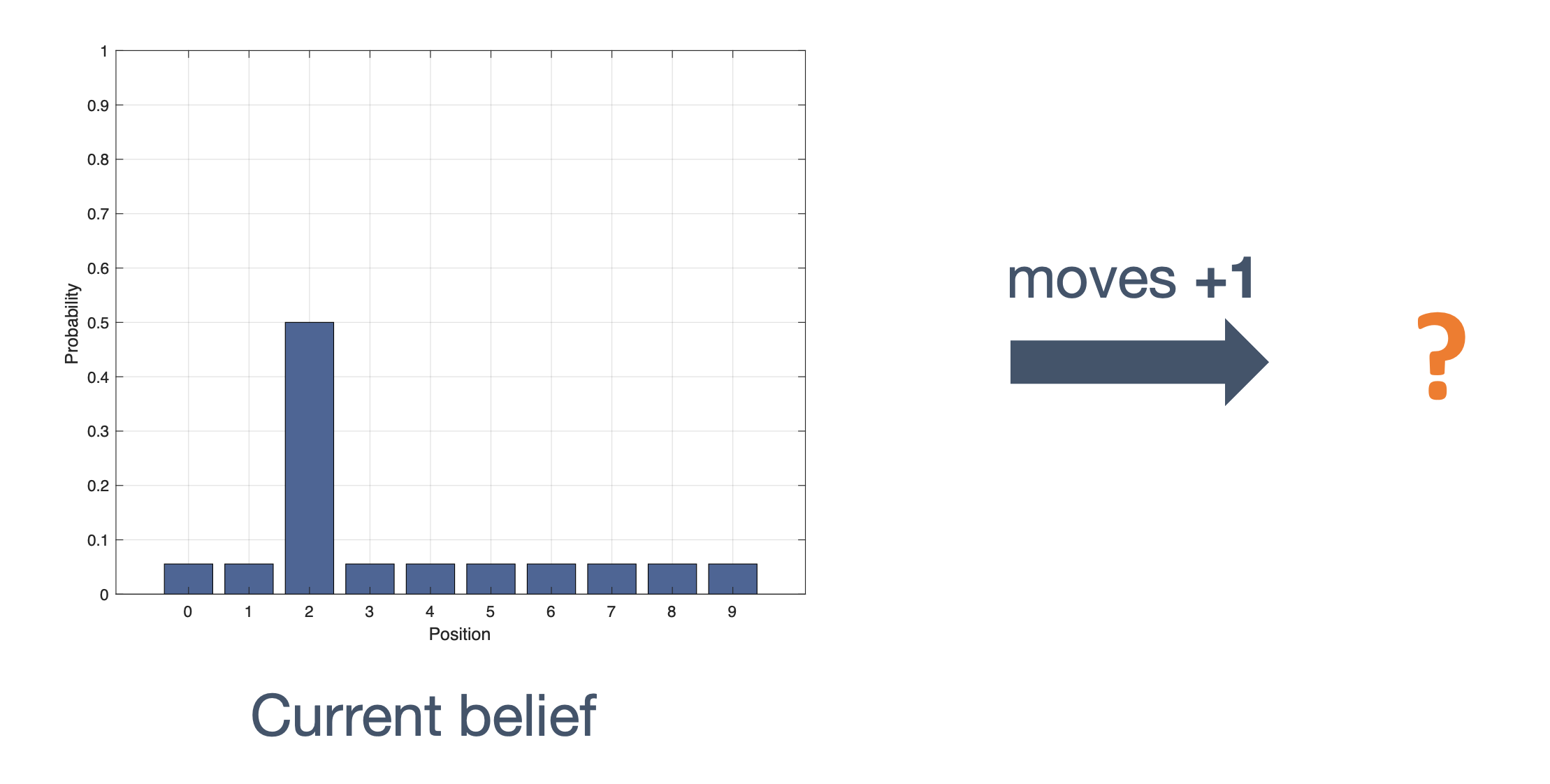

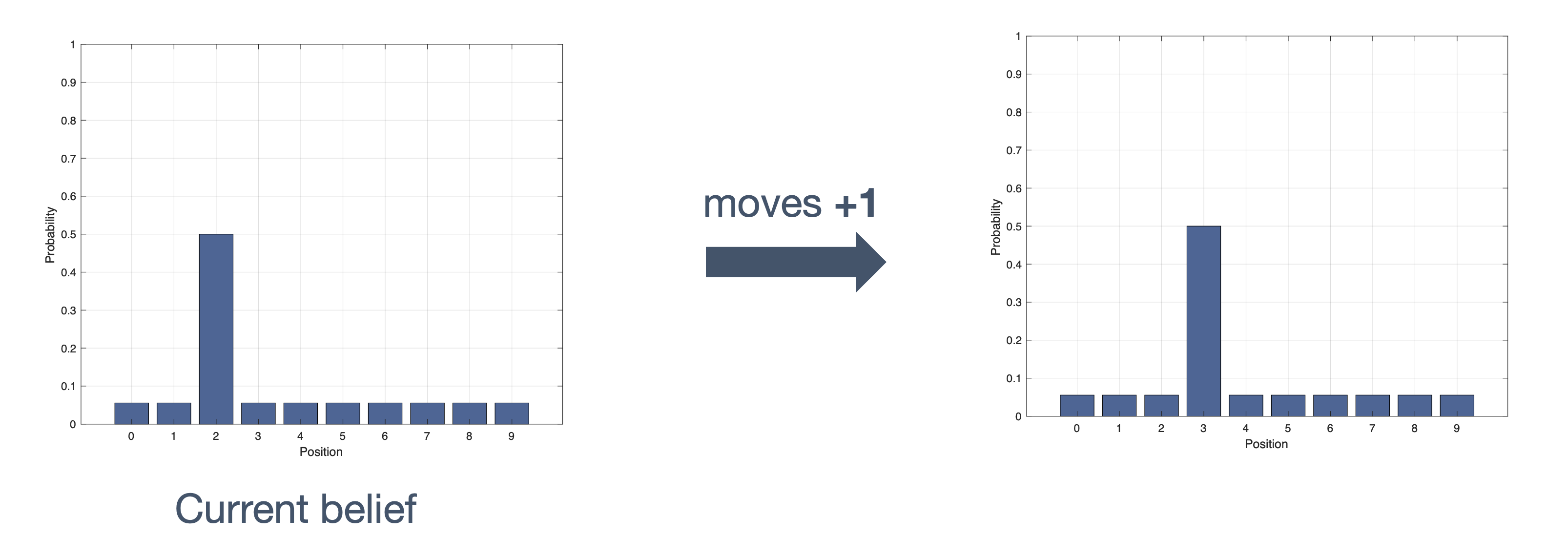

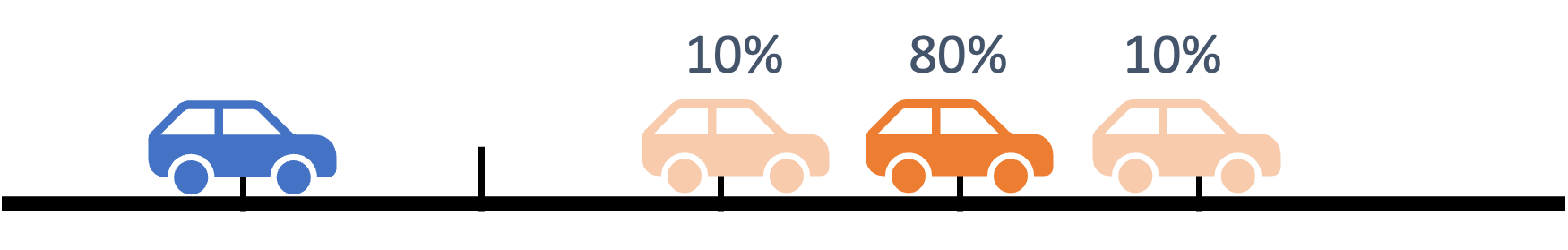

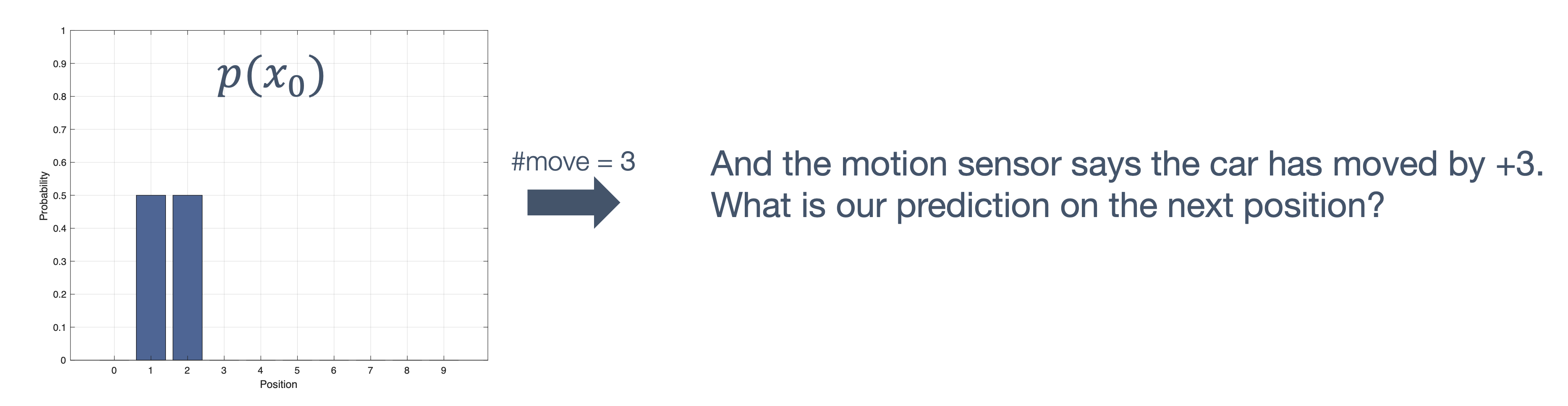

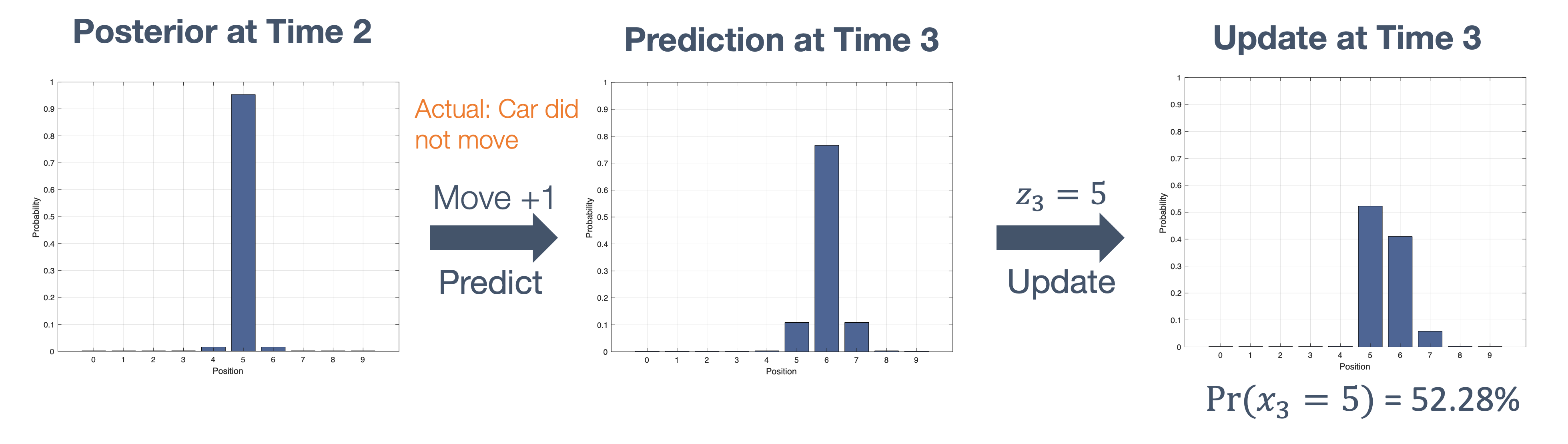

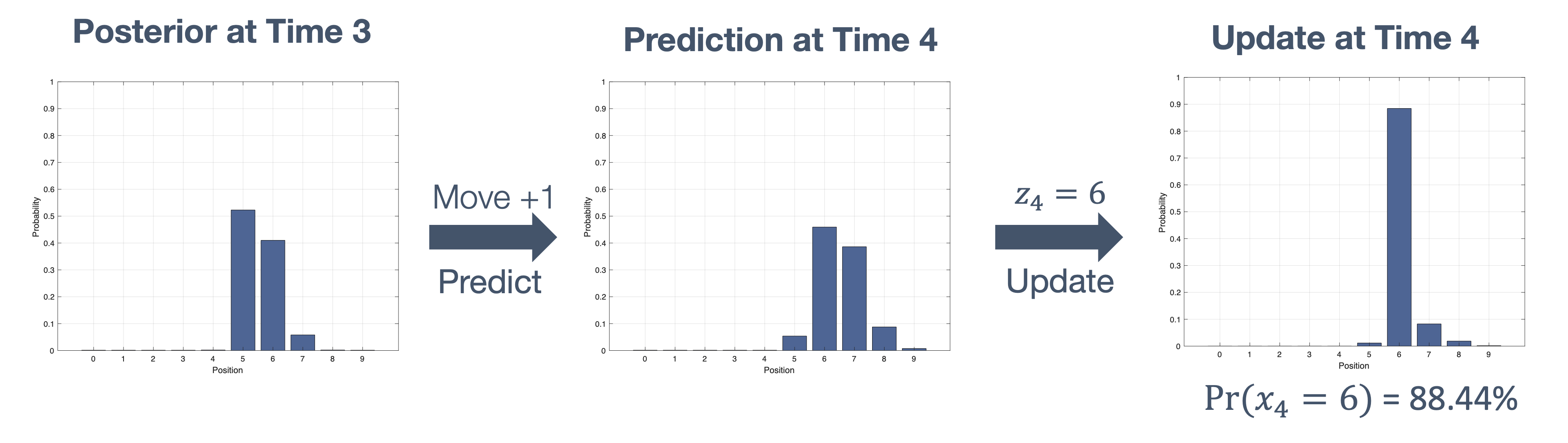

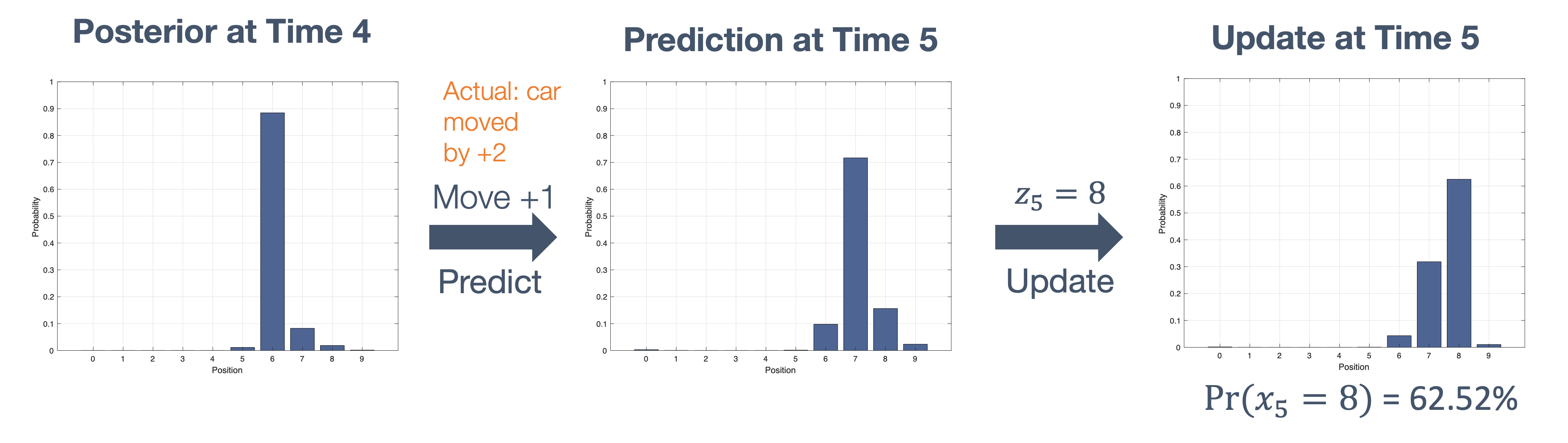

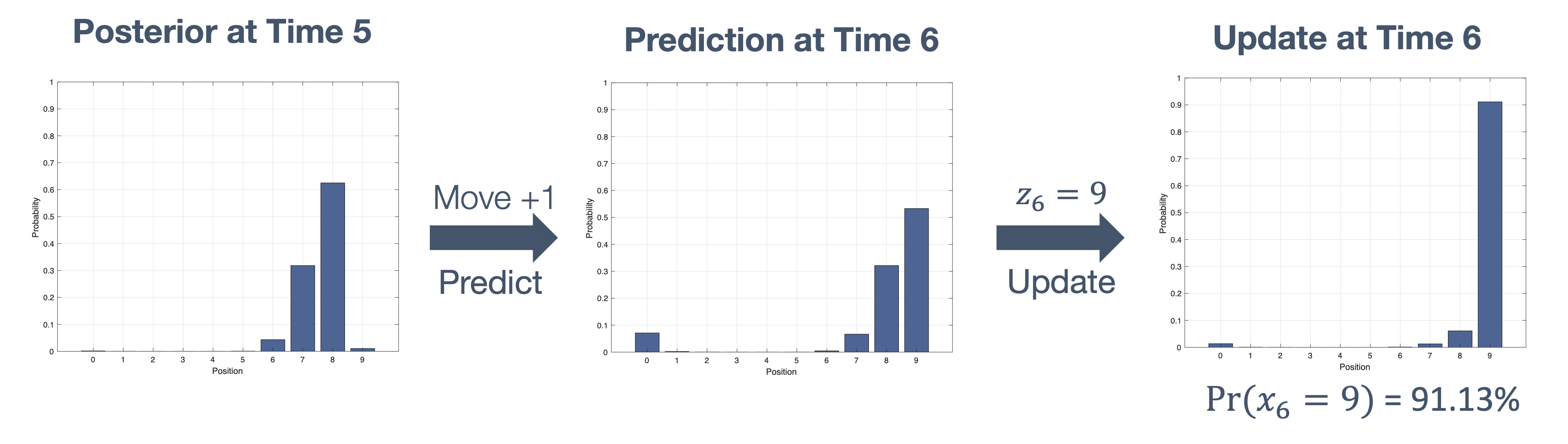

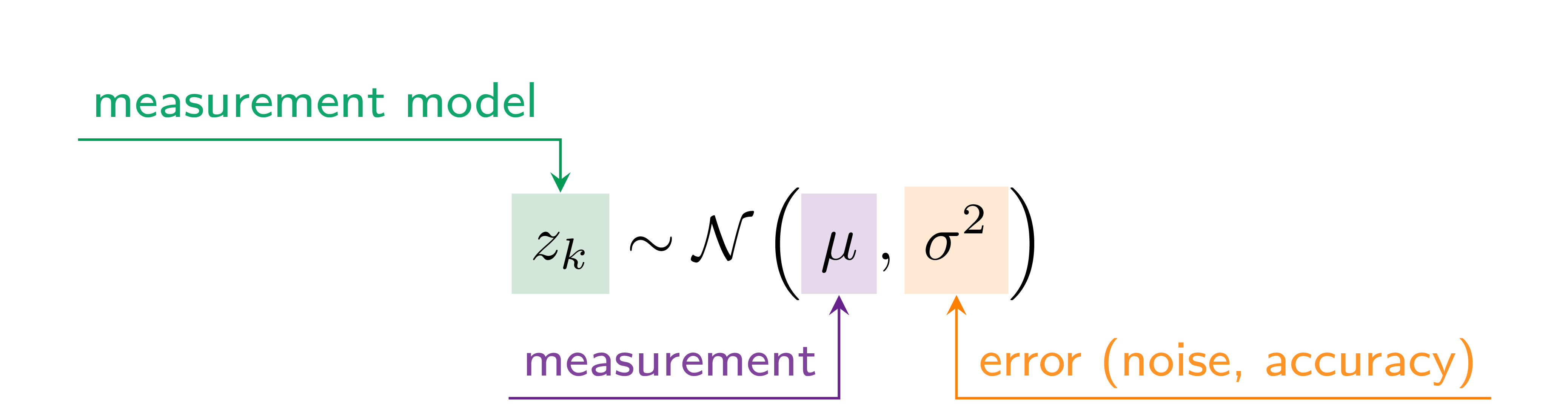

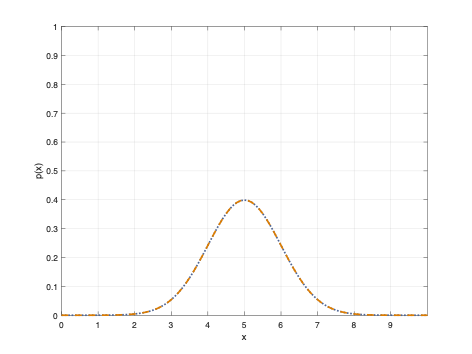

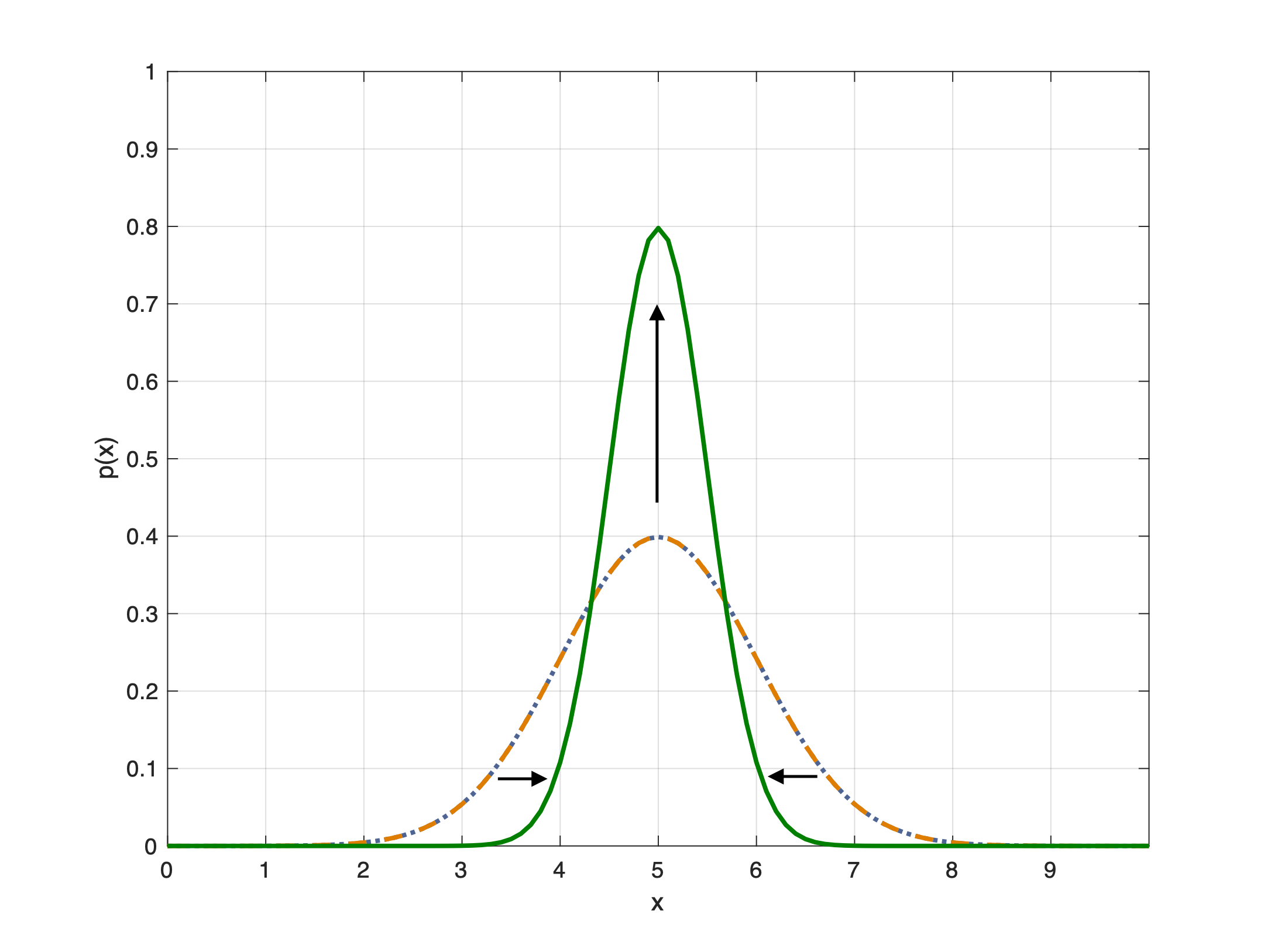

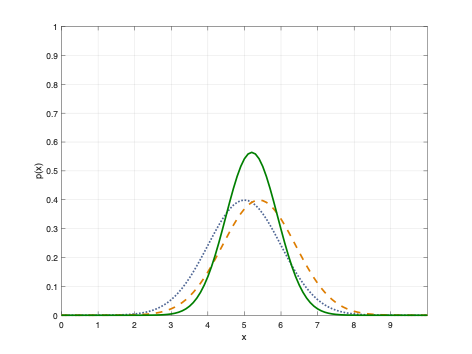

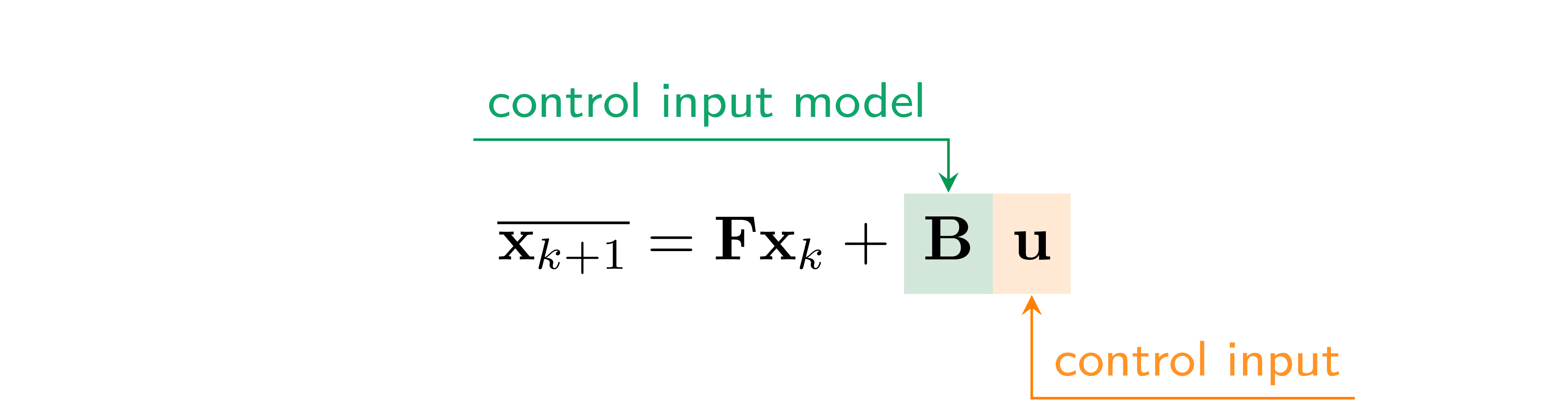

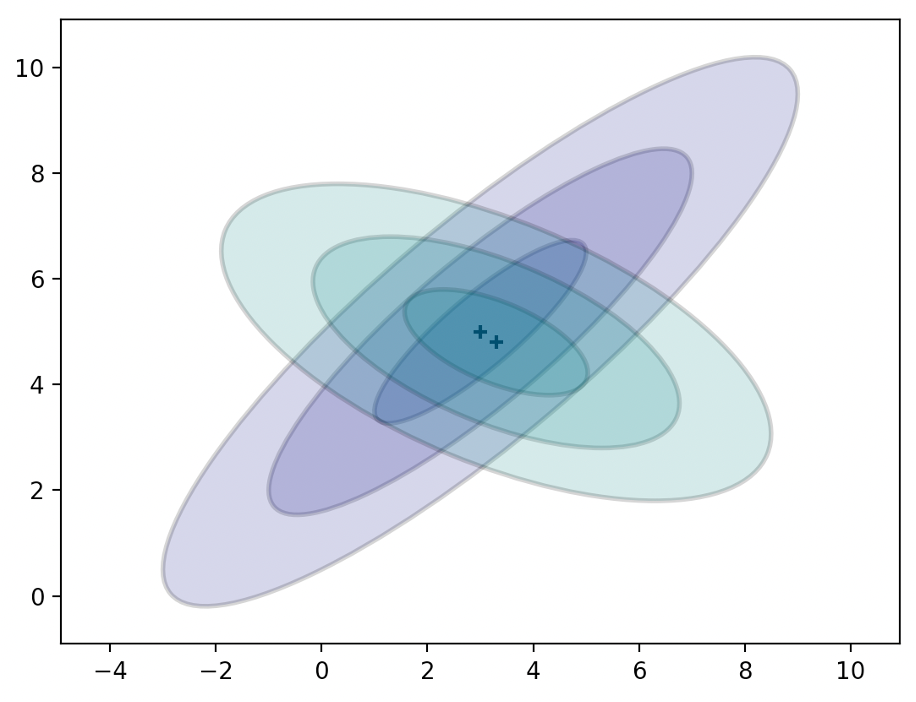

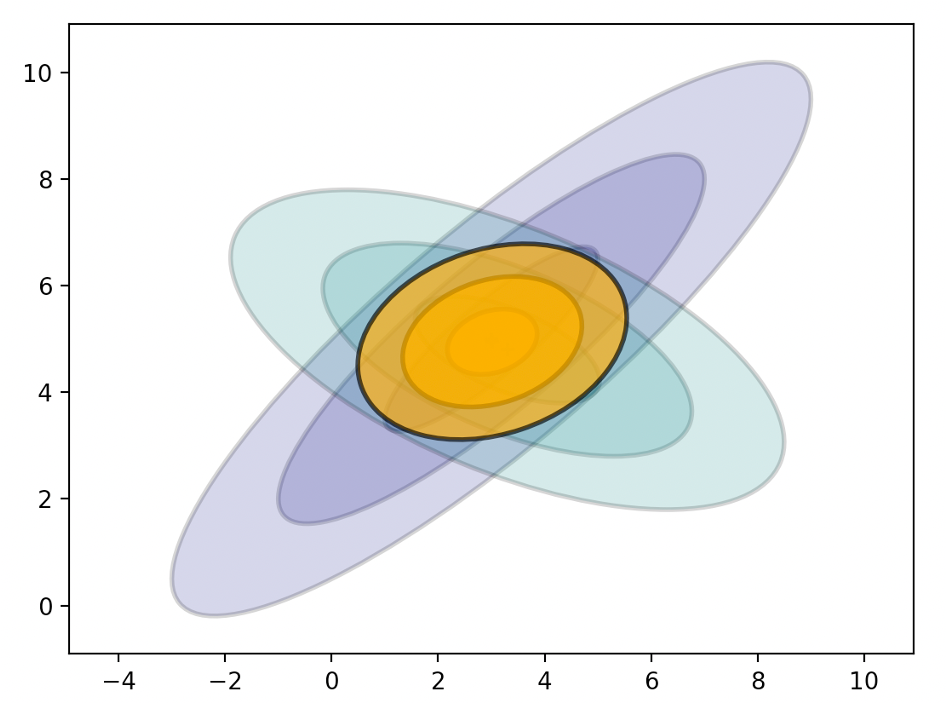

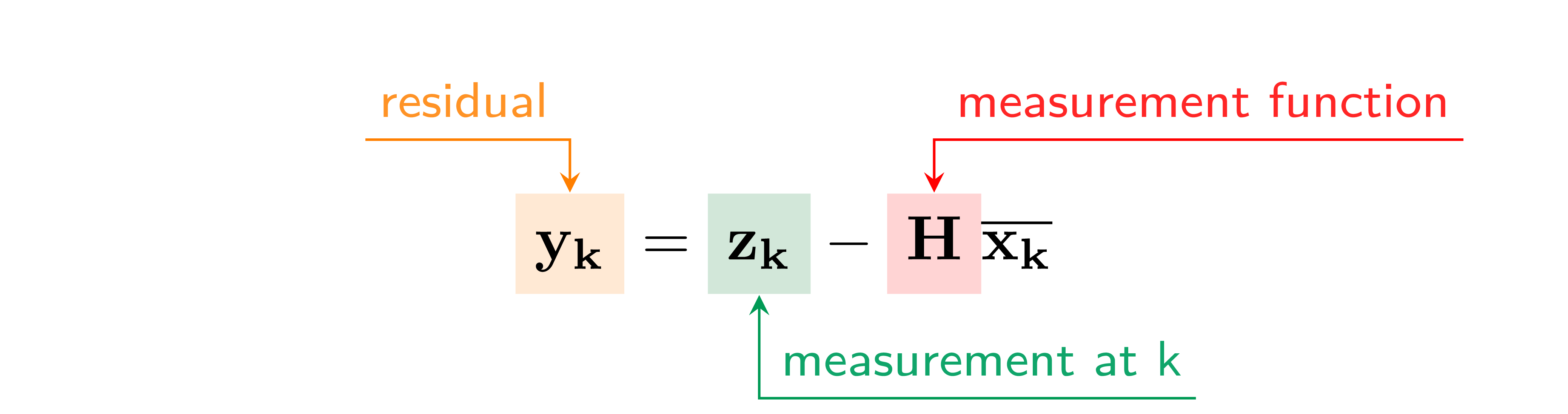

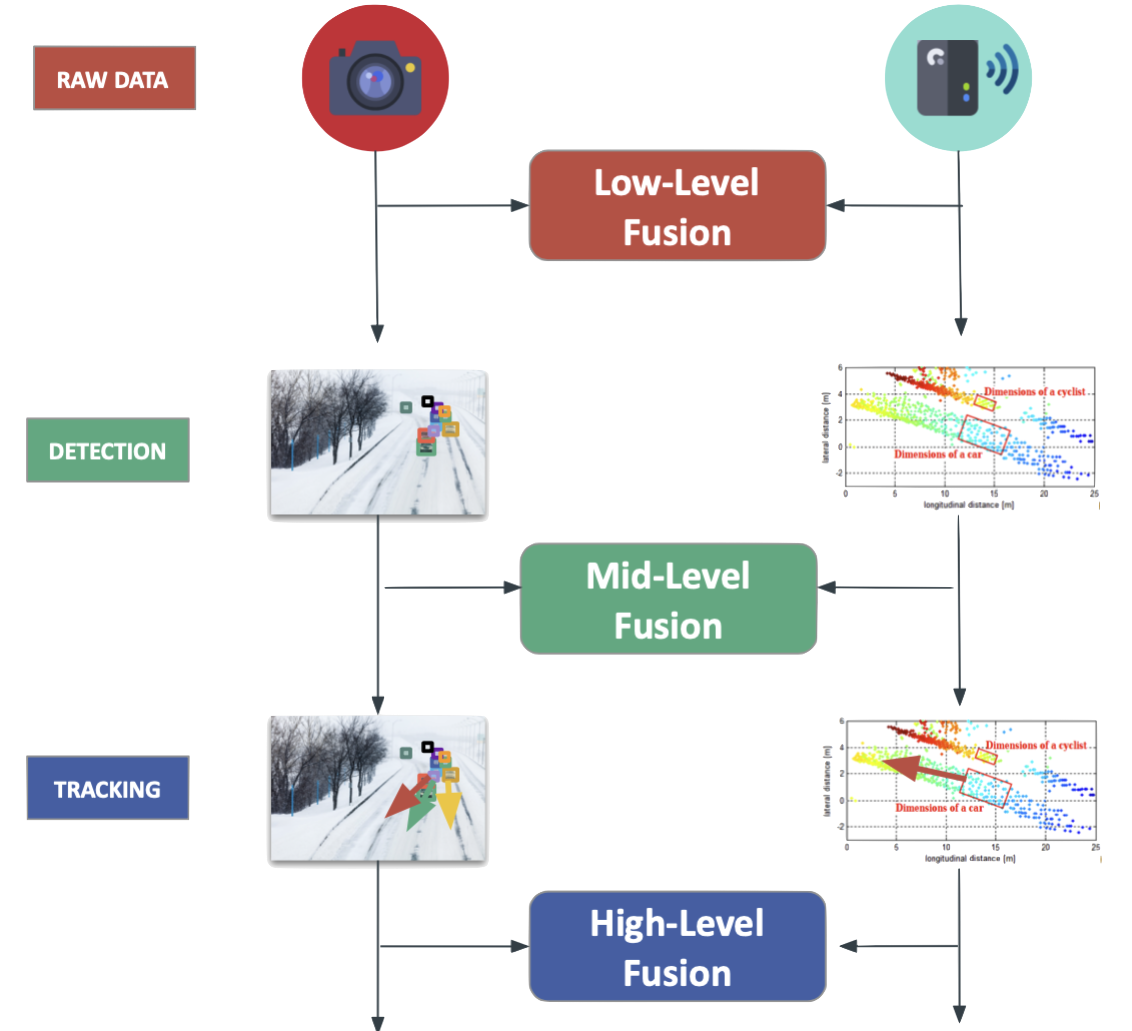

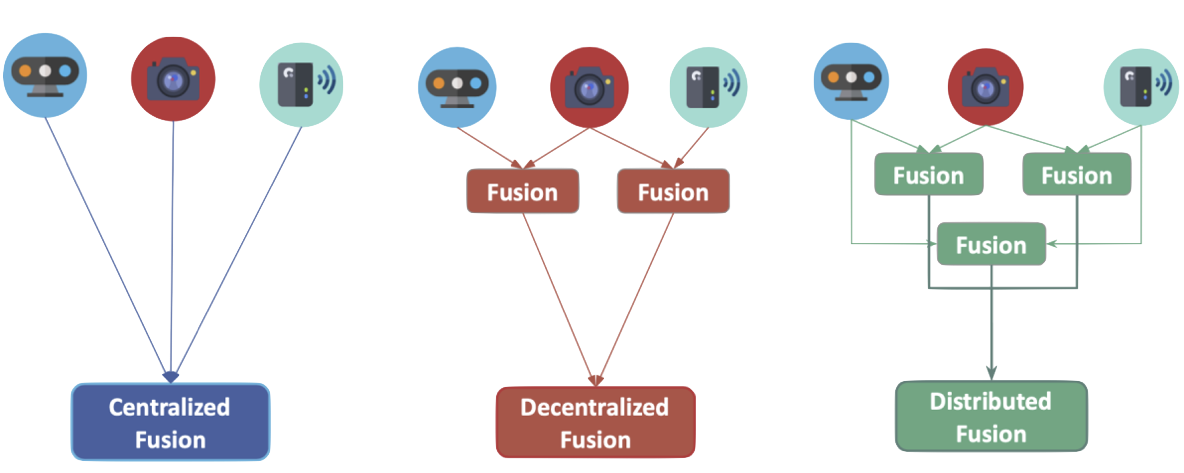

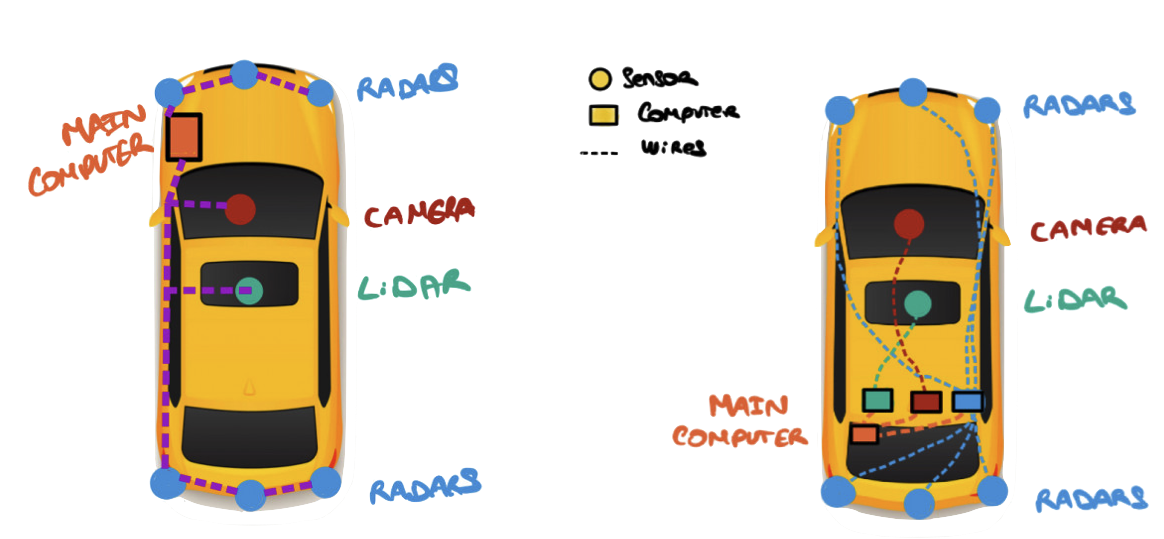

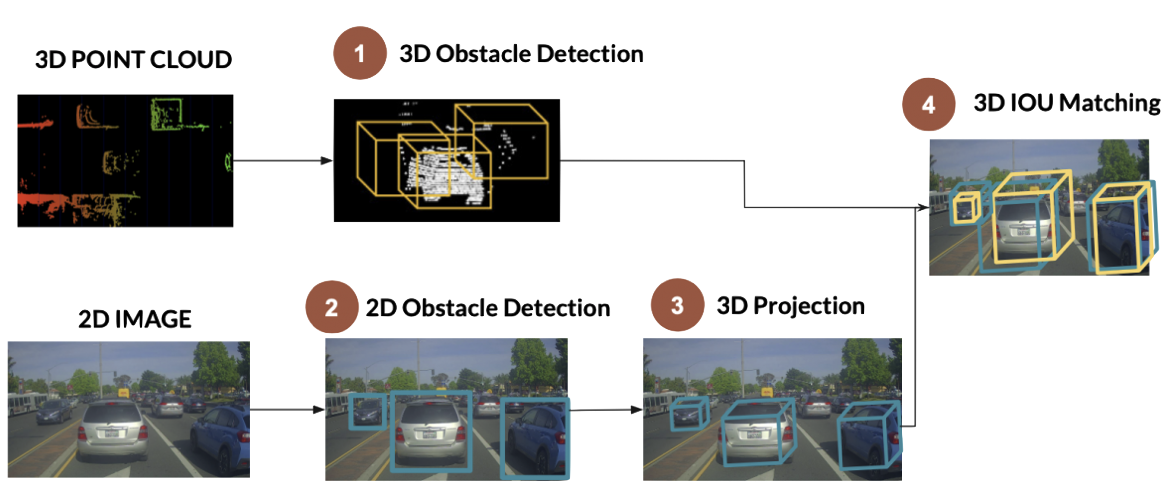

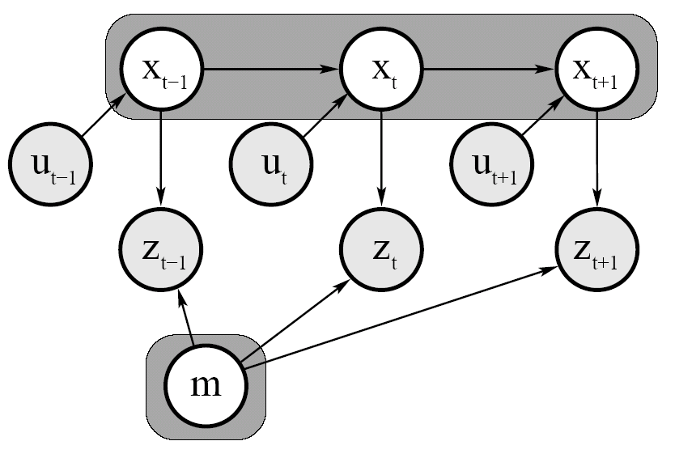

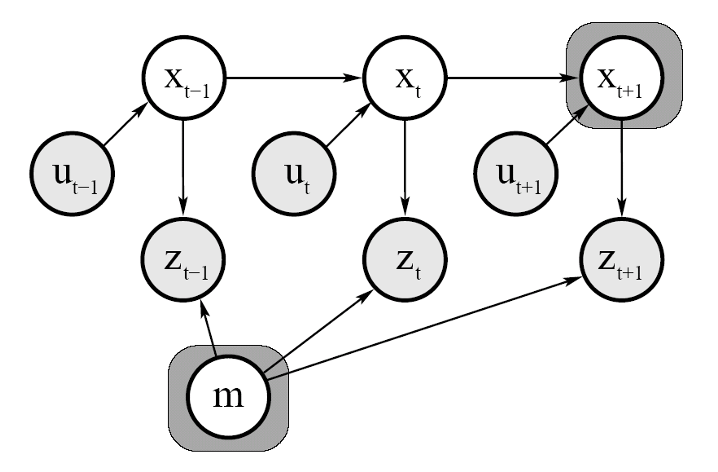

- 9 Sensor Fusion

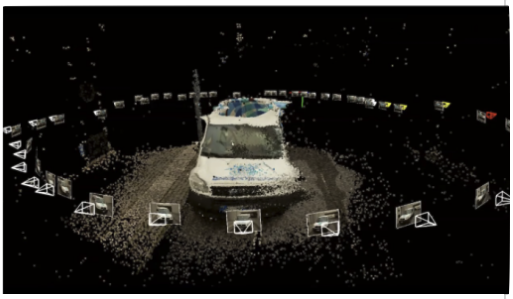

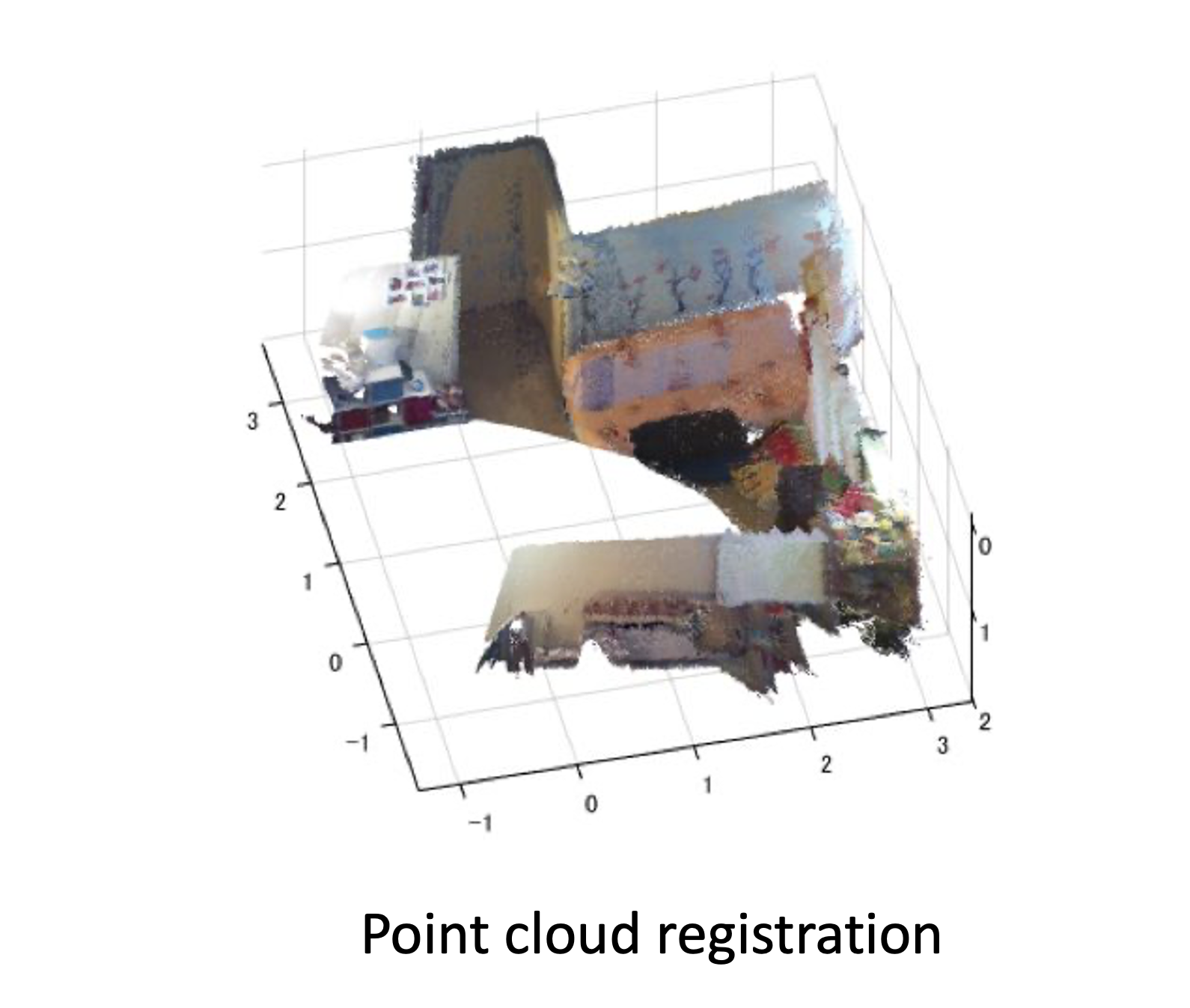

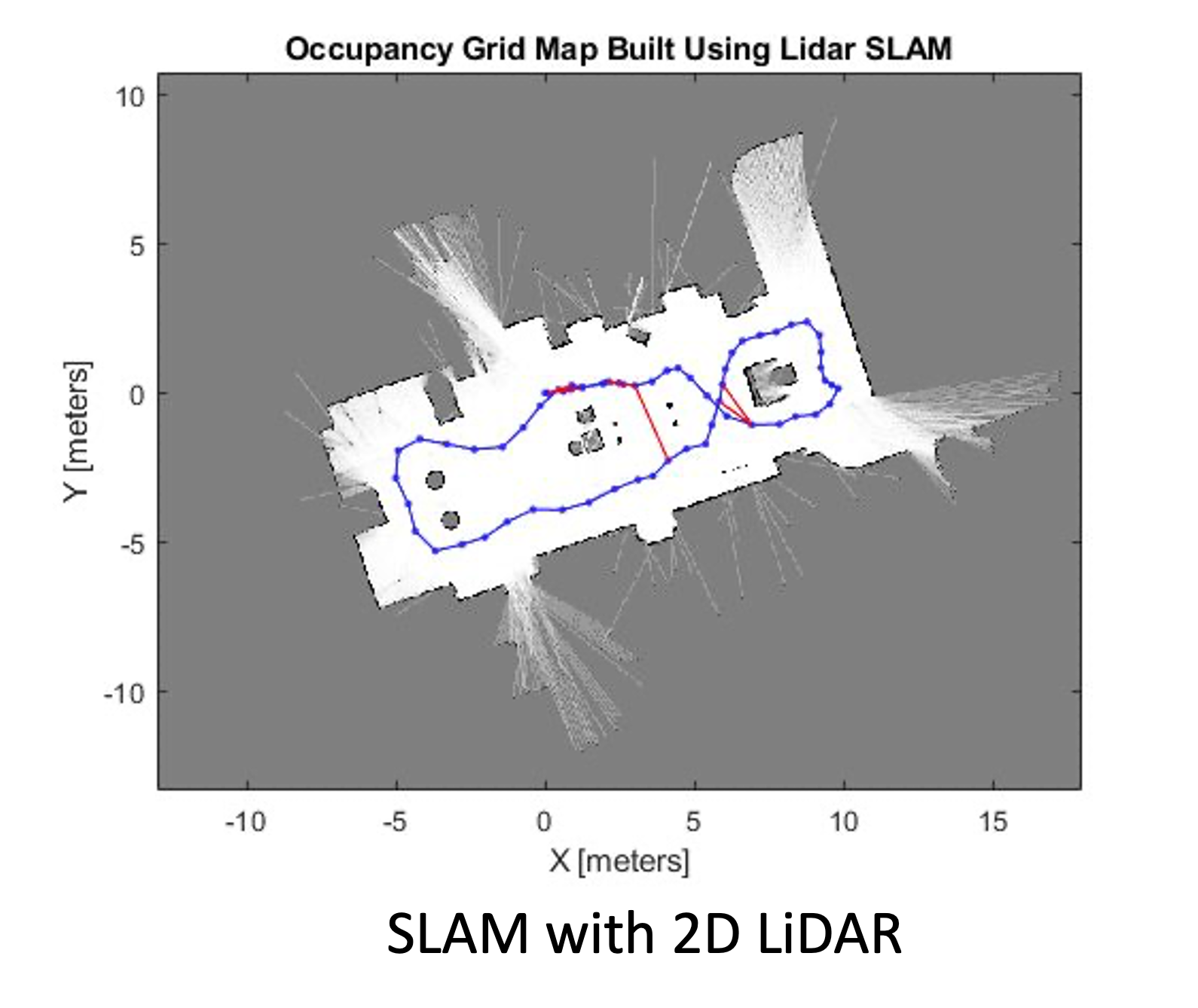

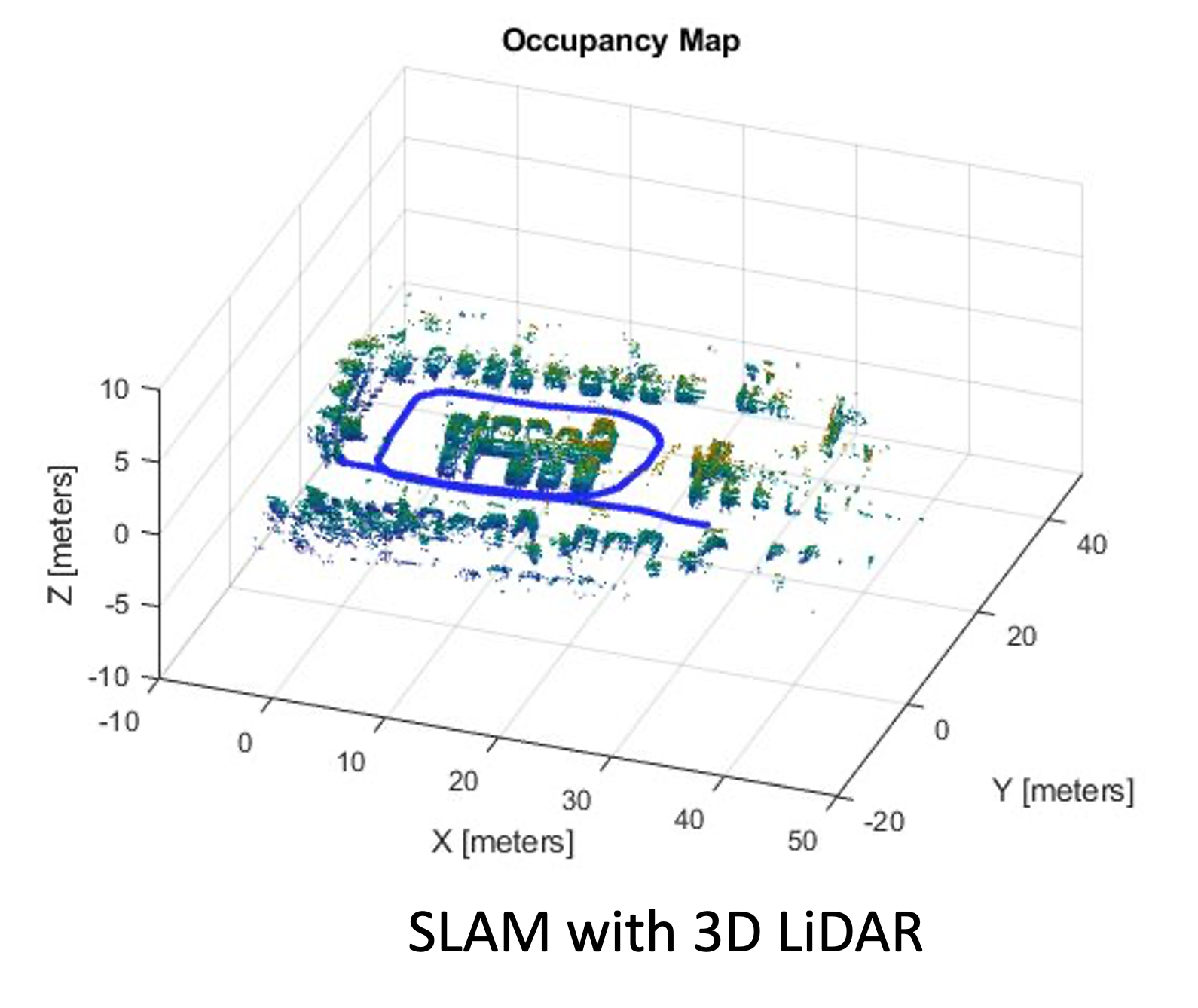

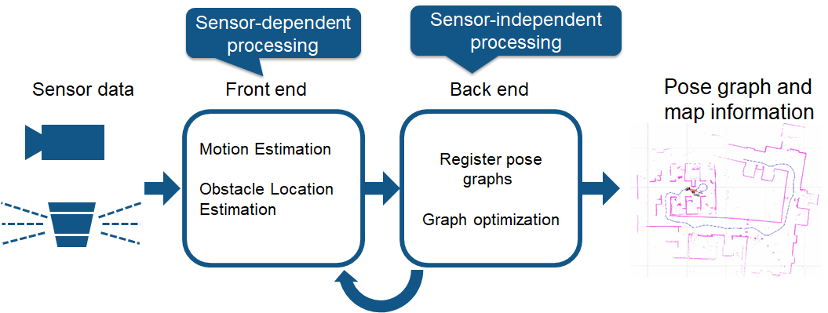

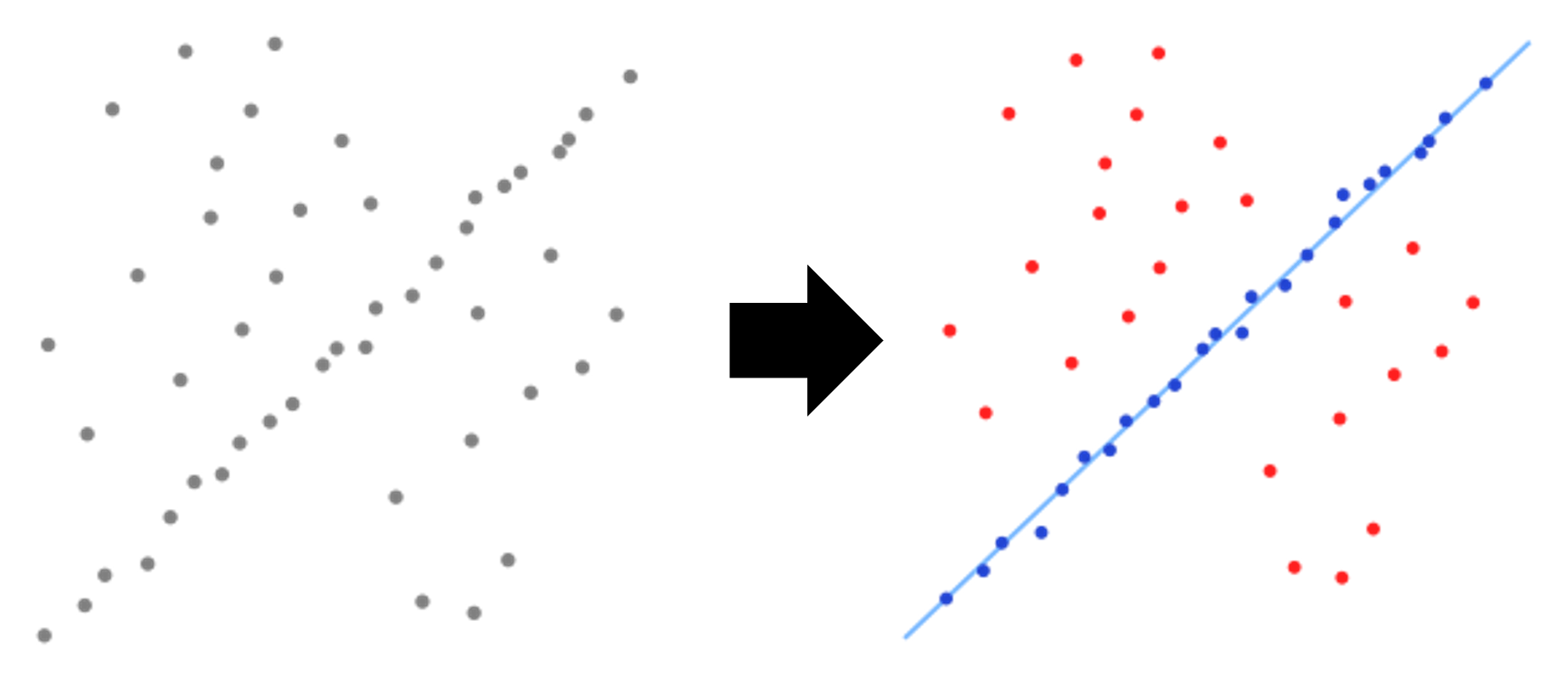

- 10 SLAM

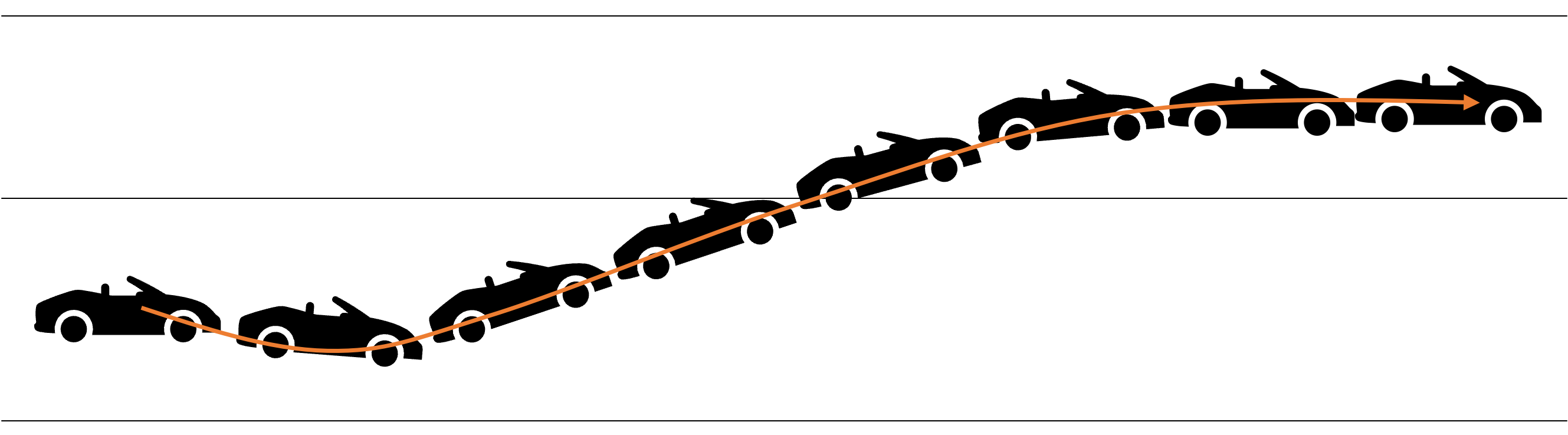

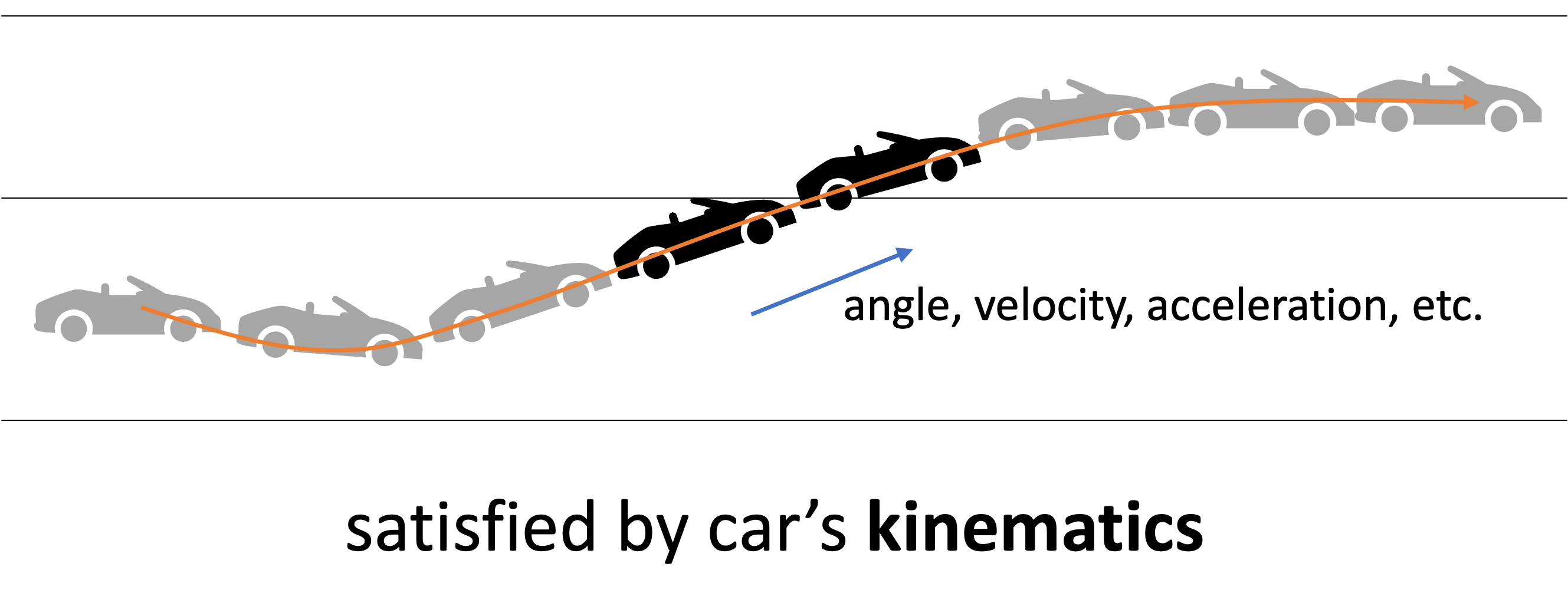

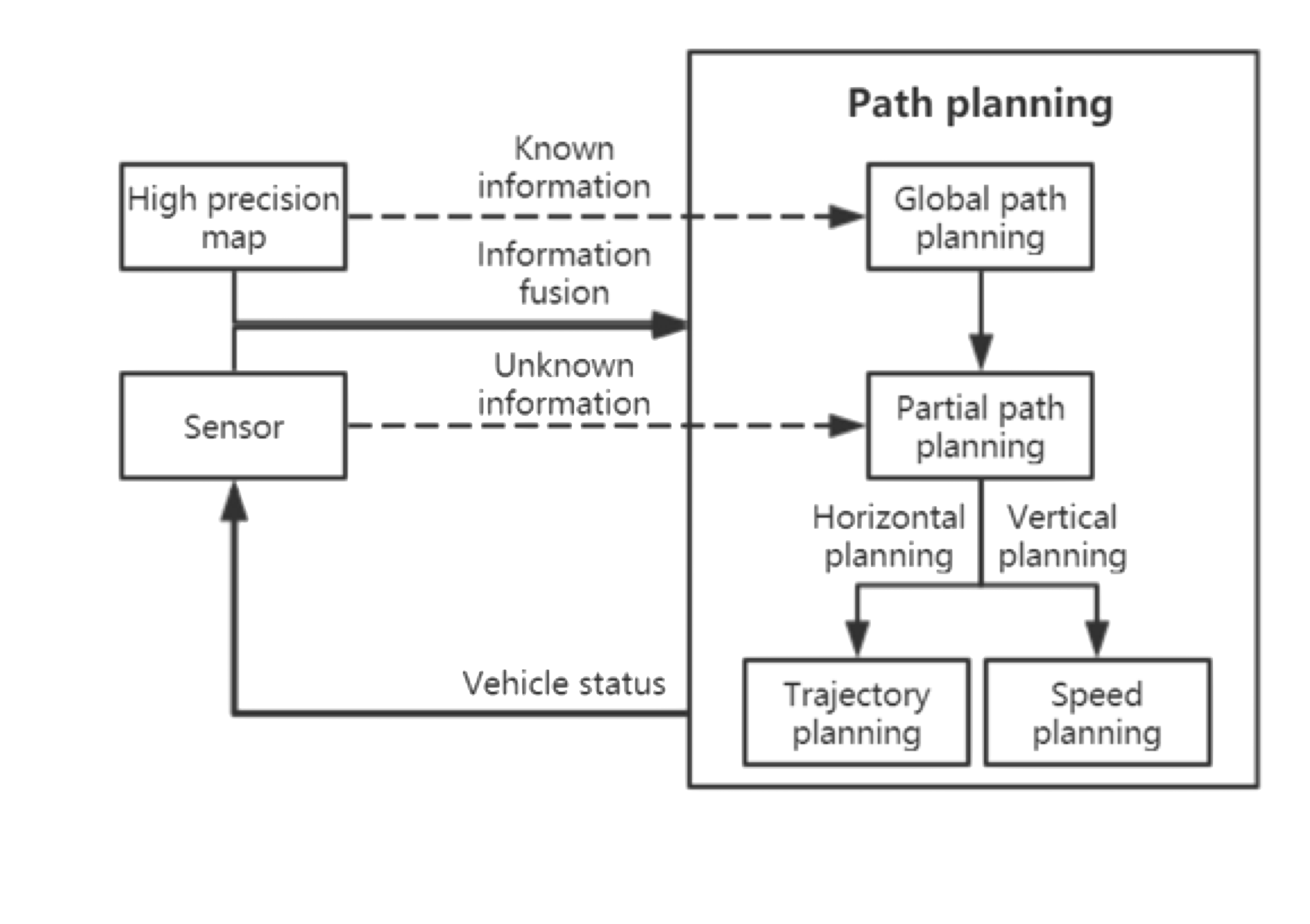

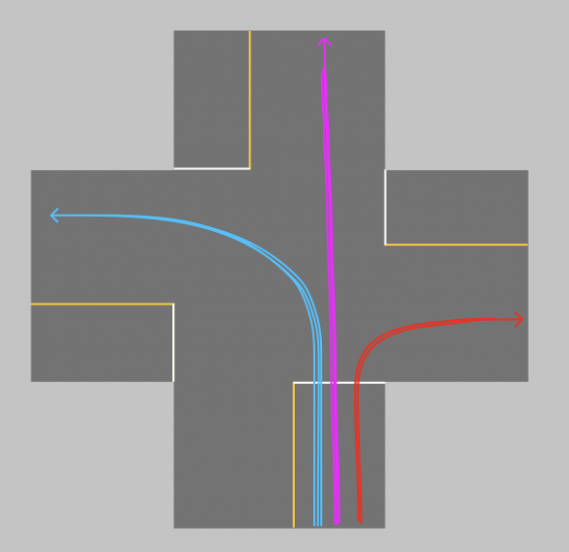

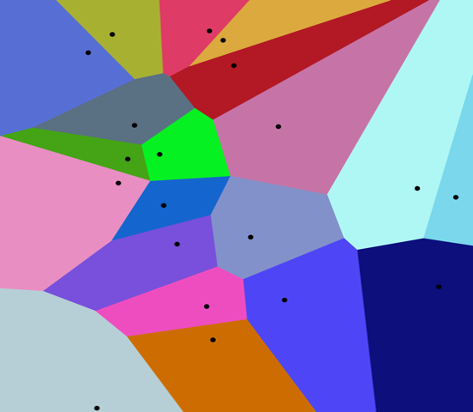

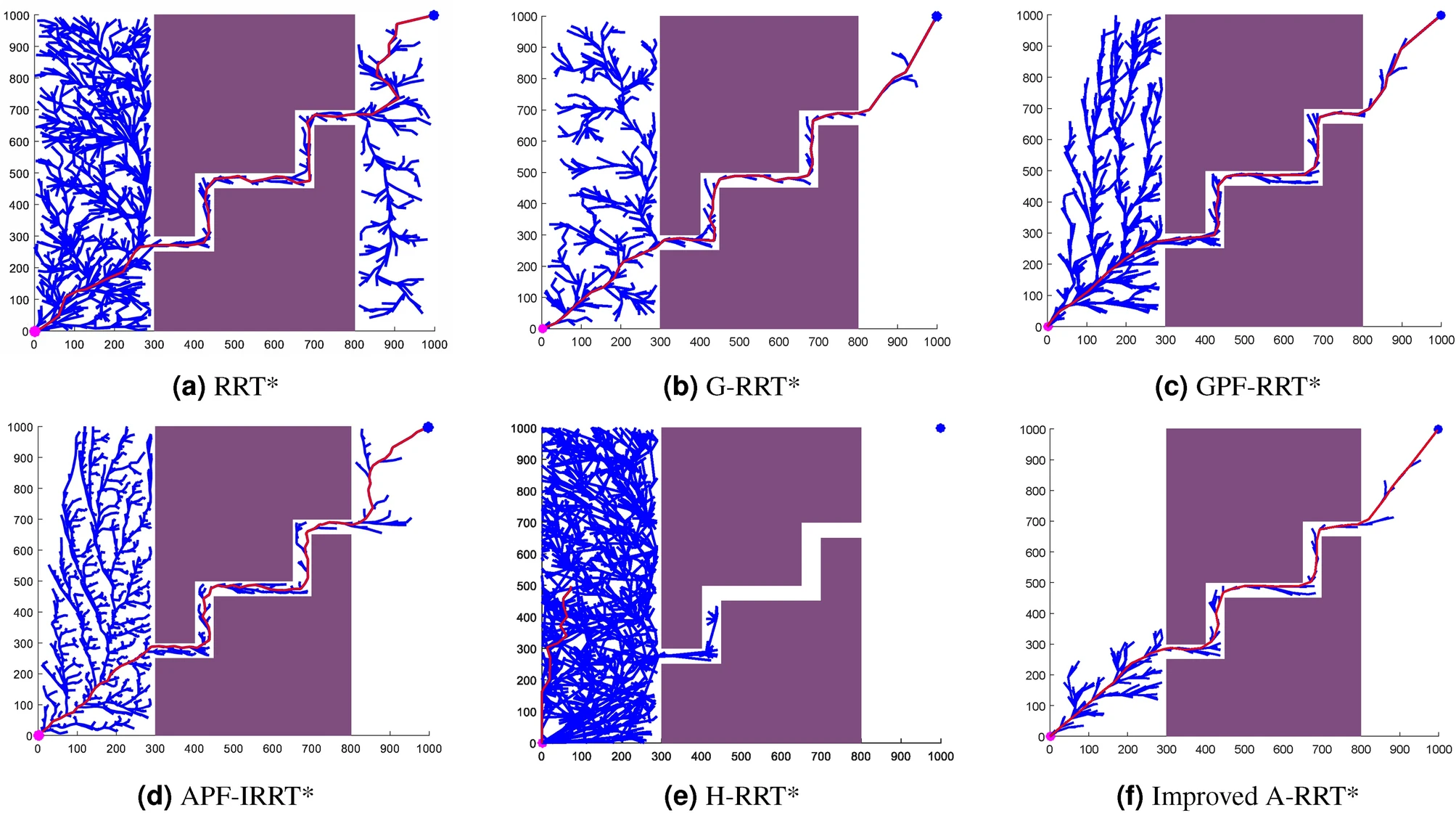

- 11 Path Planning

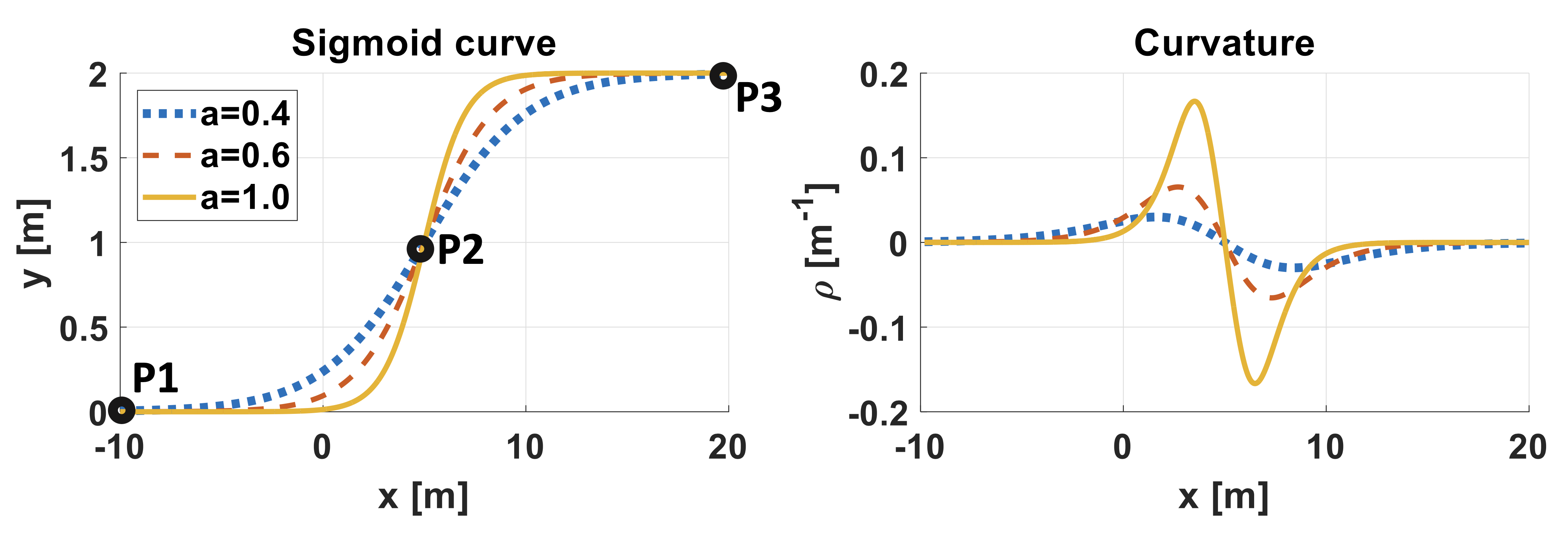

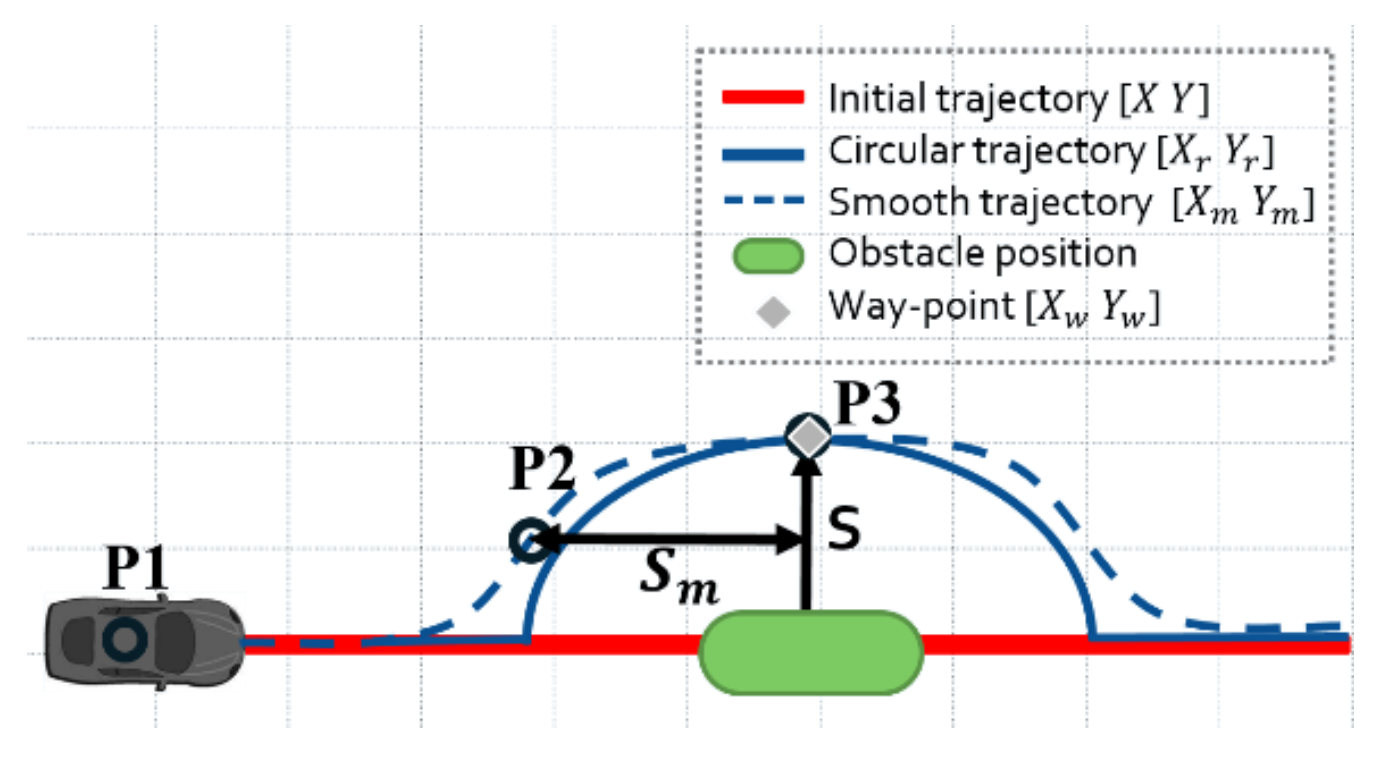

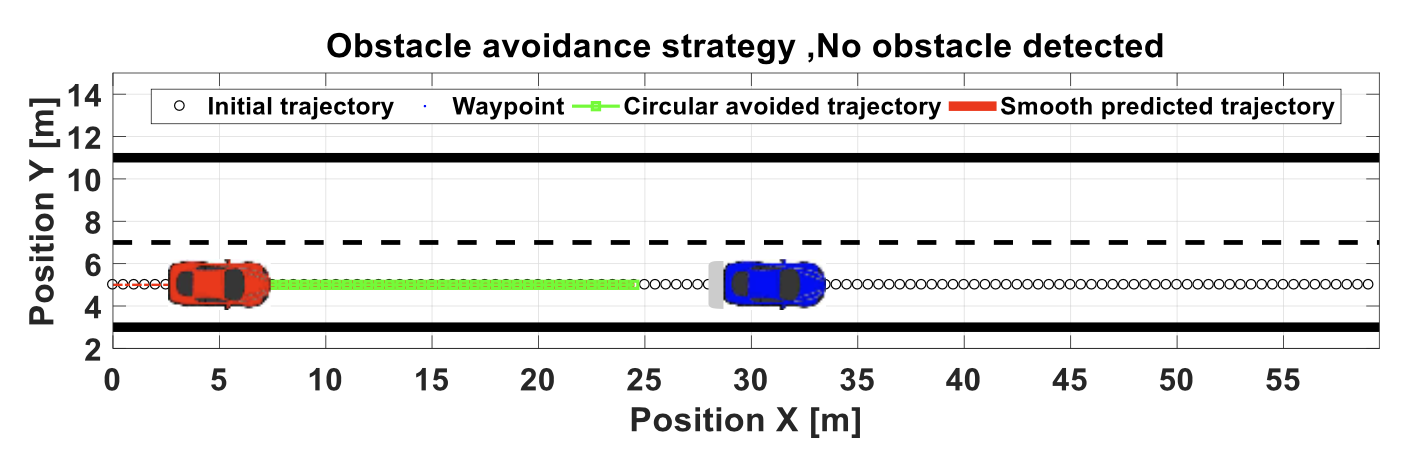

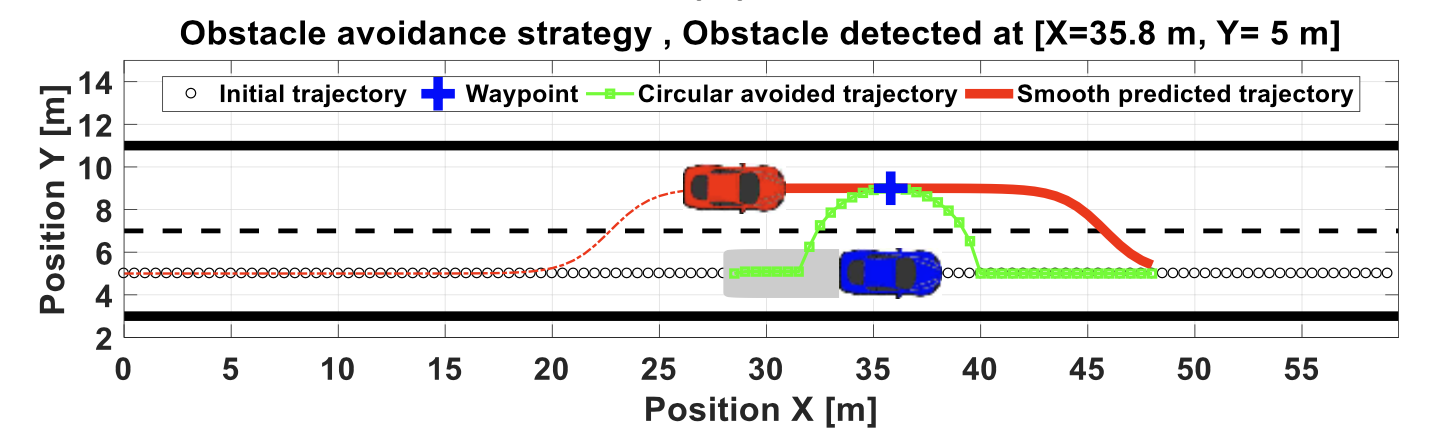

- 12 Object Detection and Avoidance

- 13 Safety and Standards

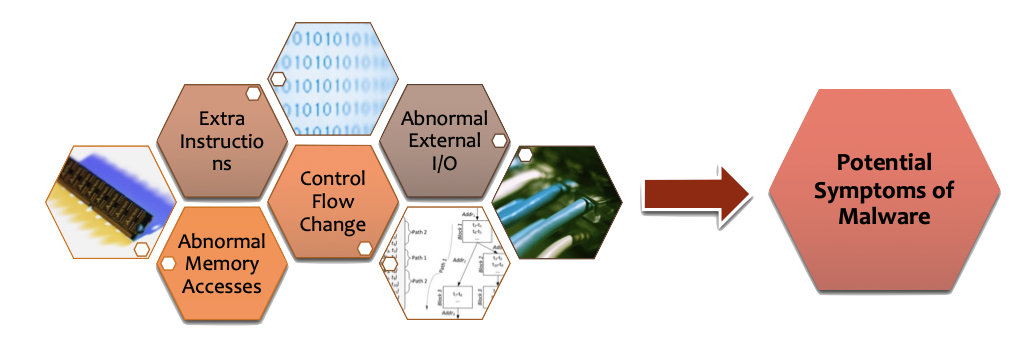

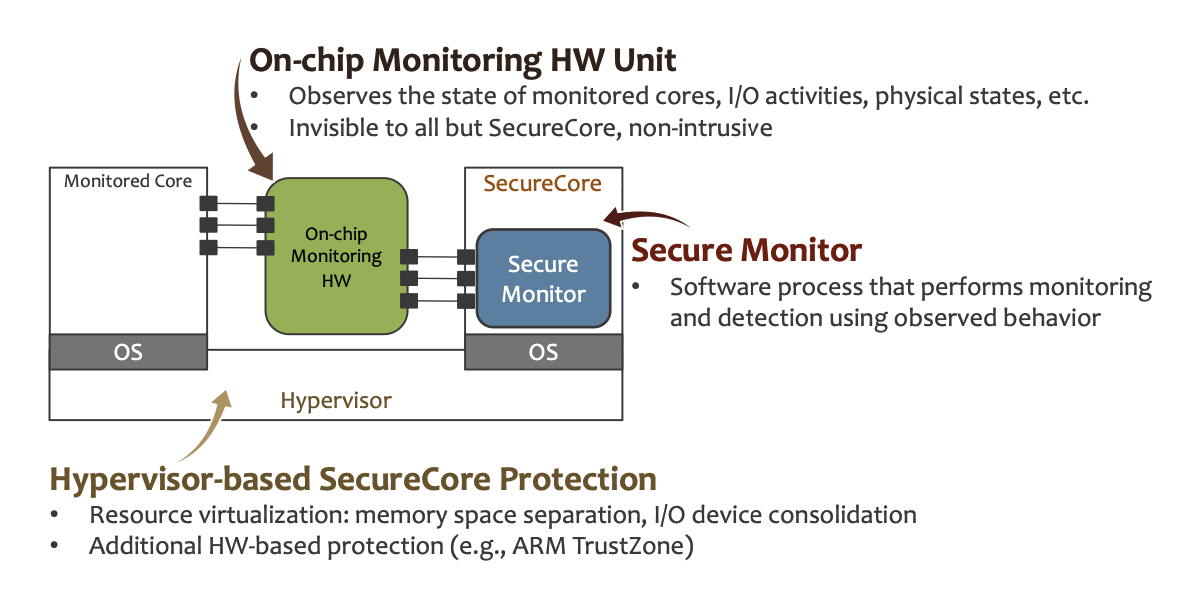

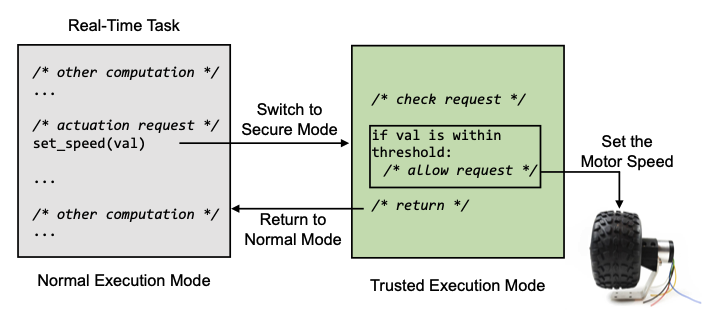

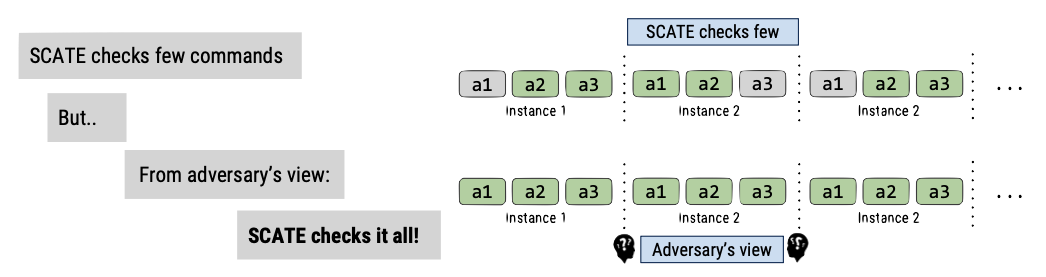

- 14 Security for Autonomous Systems

1 introduction

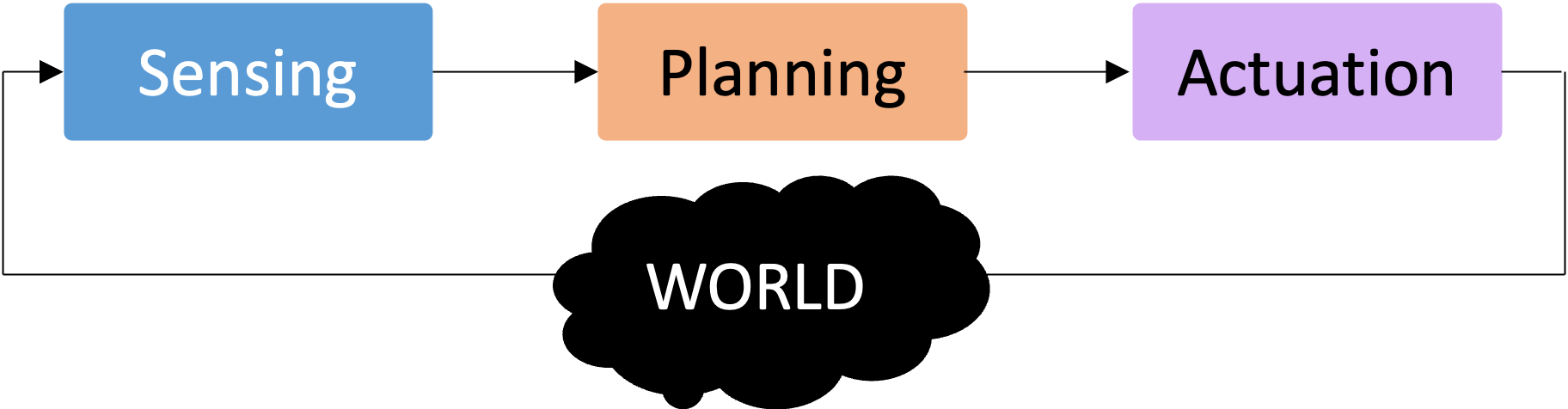

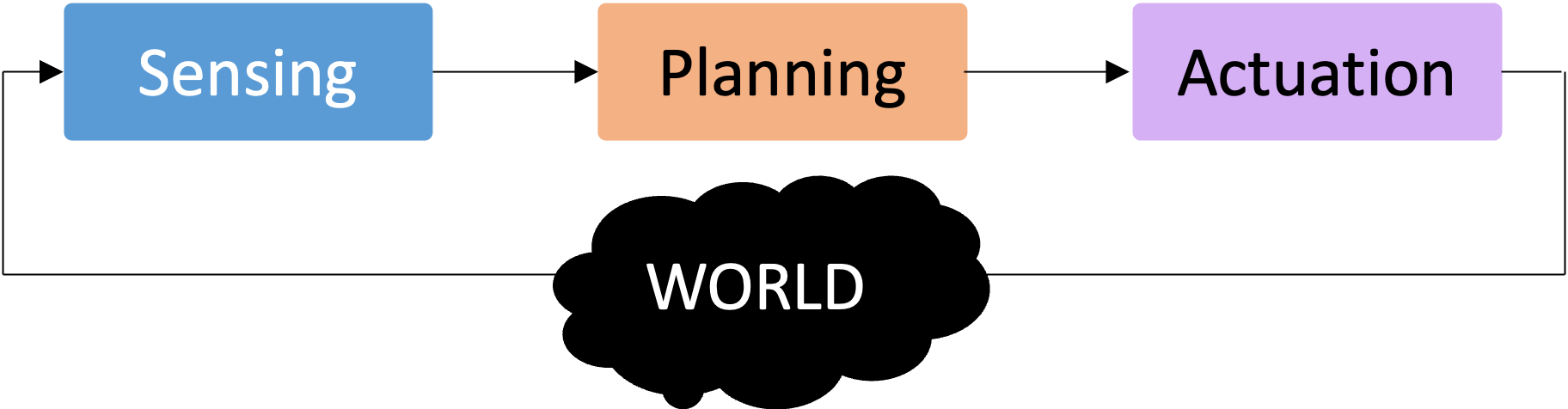

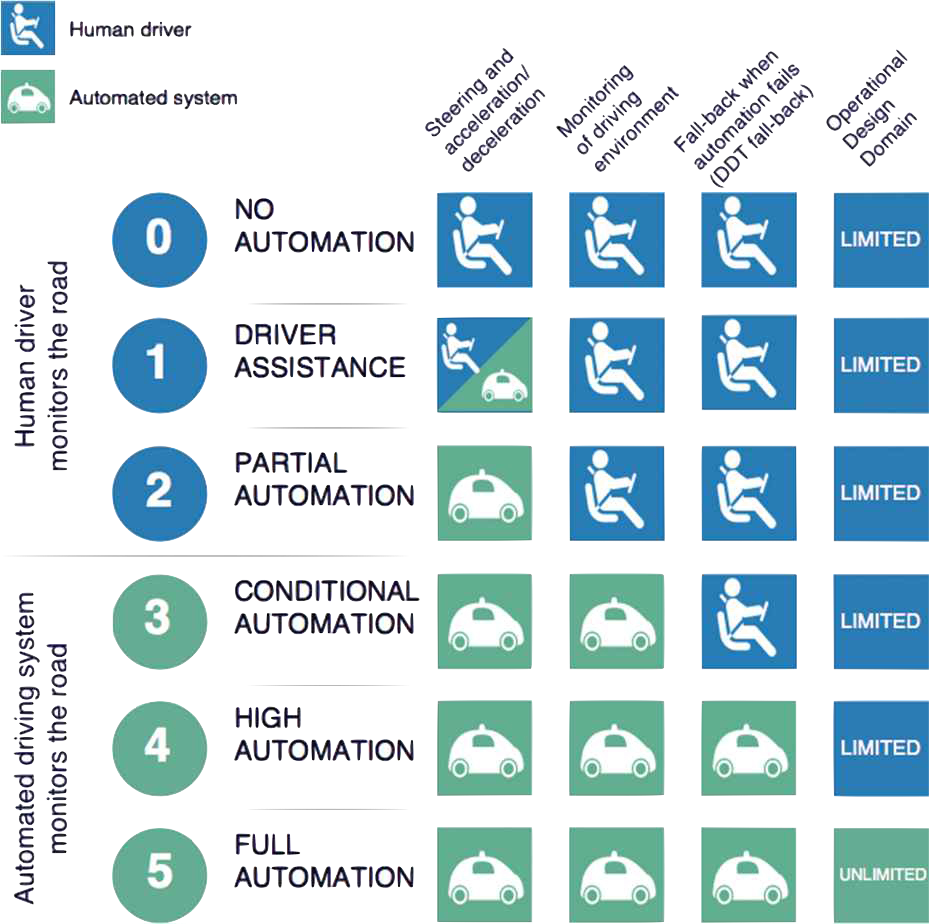

1.1 autonomy

what is “autonomy”?

we see various examples of it…

1.1.1 what are the aspects of autonomy?

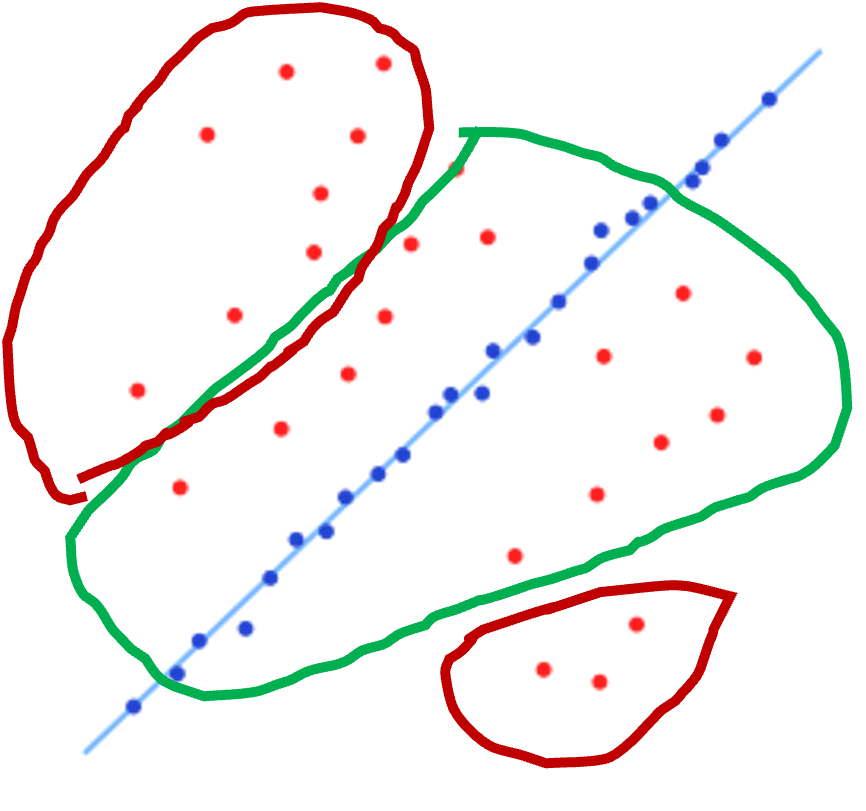

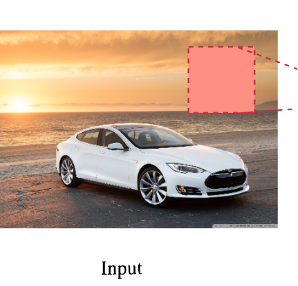

| perception | how do you “see” the world around you? |

| sensing | various ways to perceive the world around you (e.g, camera, LiDar) |

| compute | what do you “do” with the information about the world? |

| motion | do your computations result in any “physical” changes? |

| actuation | what “actions”, if any, do you take for said physical changes? |

| planning | can you do some “higher order” thinking (i.e., not just your immediate next move) |

1.2 let us define autonomy

| Autonomy is the ability to perform given tasks based on the systems perception |

|

1.3 autonomous systems

| cyber |  |

|

|

| physical |  |

|

|

hence, they fall under the class of systems → cyber-physical systems

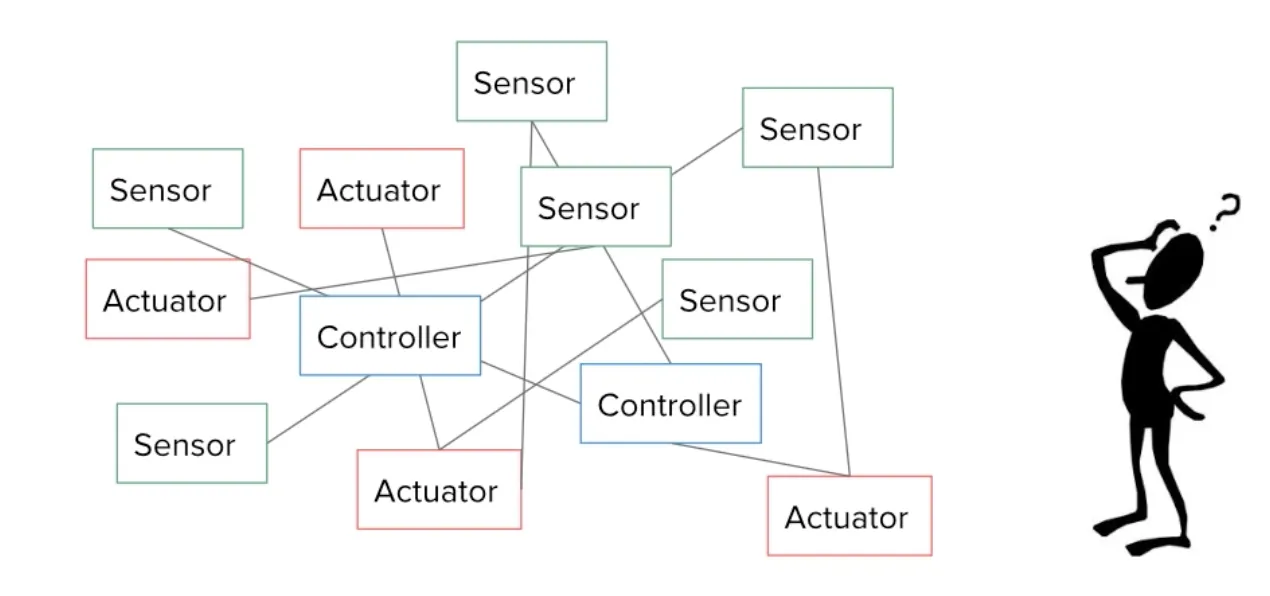

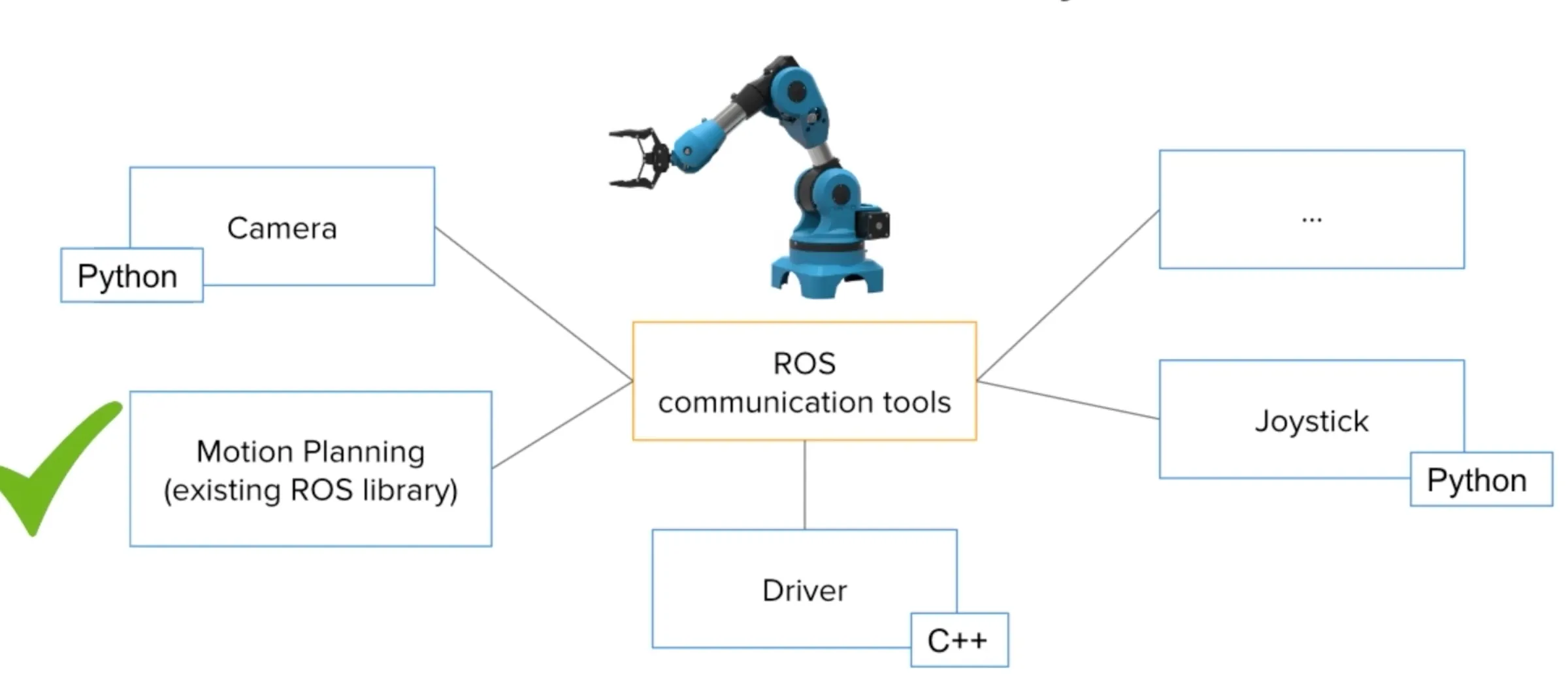

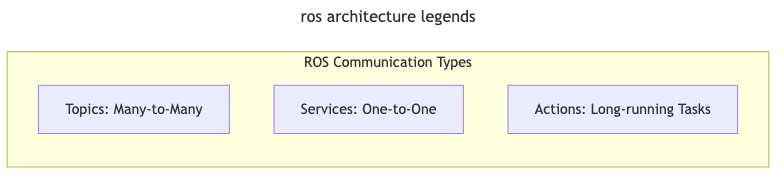

1.4 sensors and actuators…

|

|

…are everywhere!

the embedded components → interactions with the real world

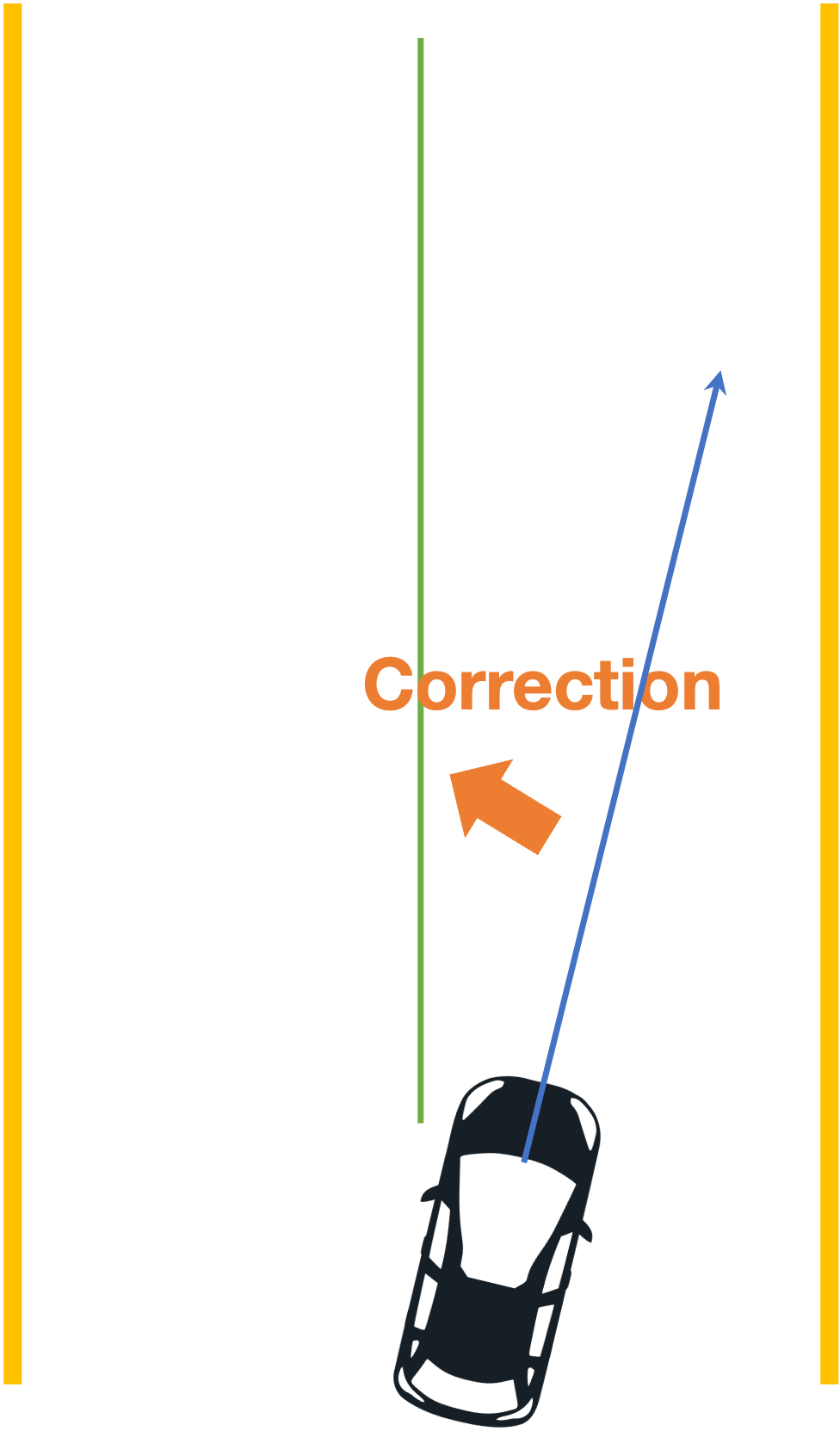

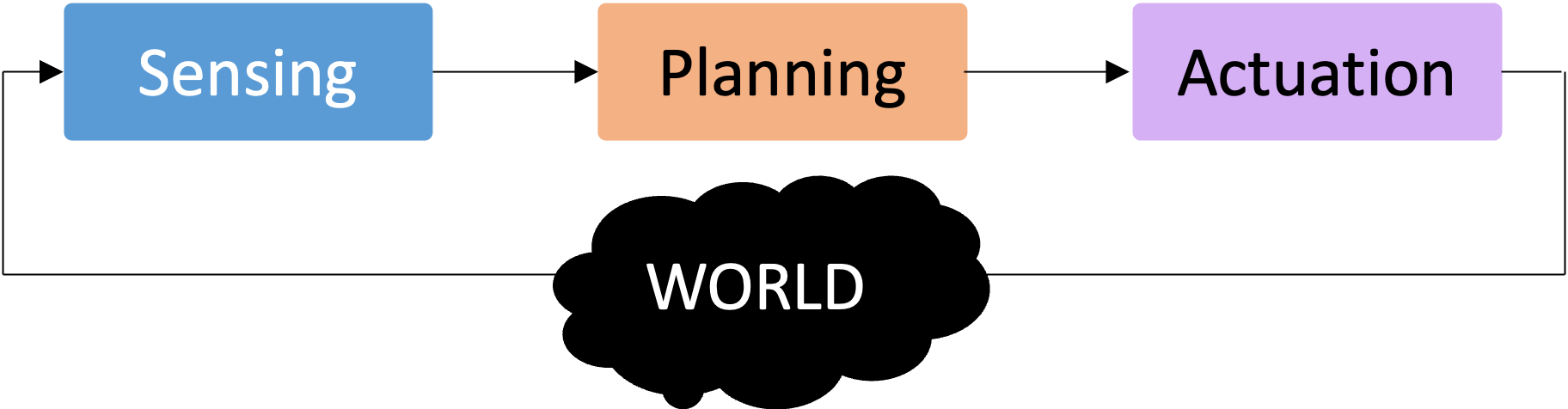

1.5 sensing and actuation in the real world

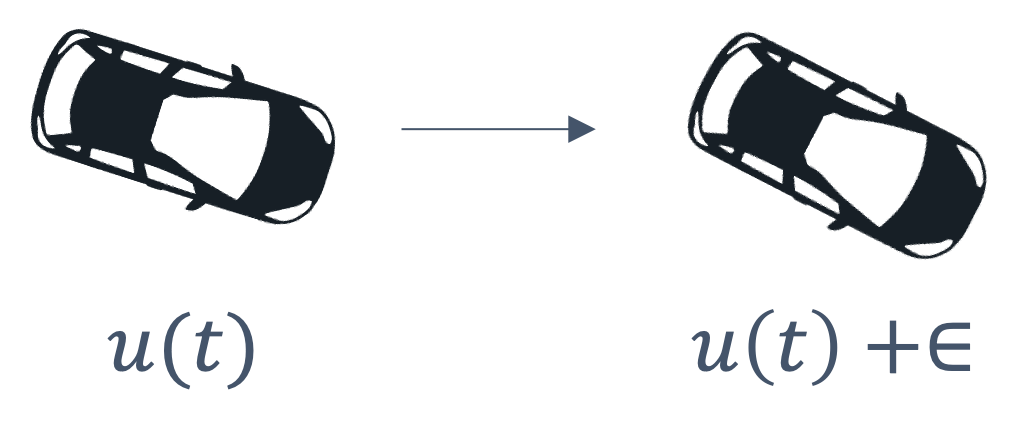

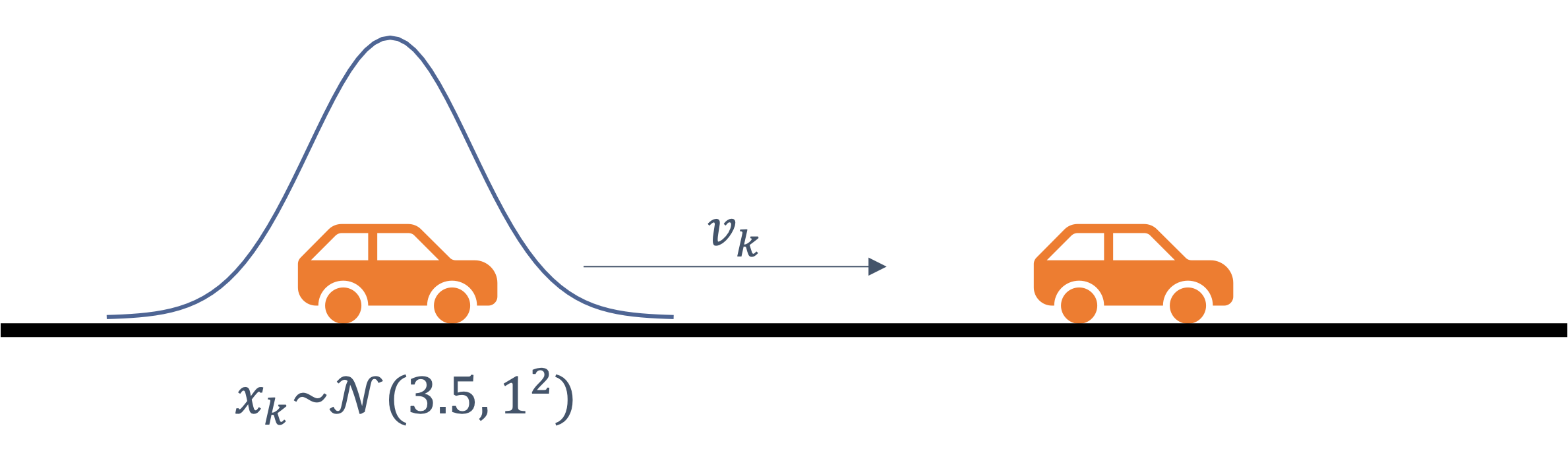

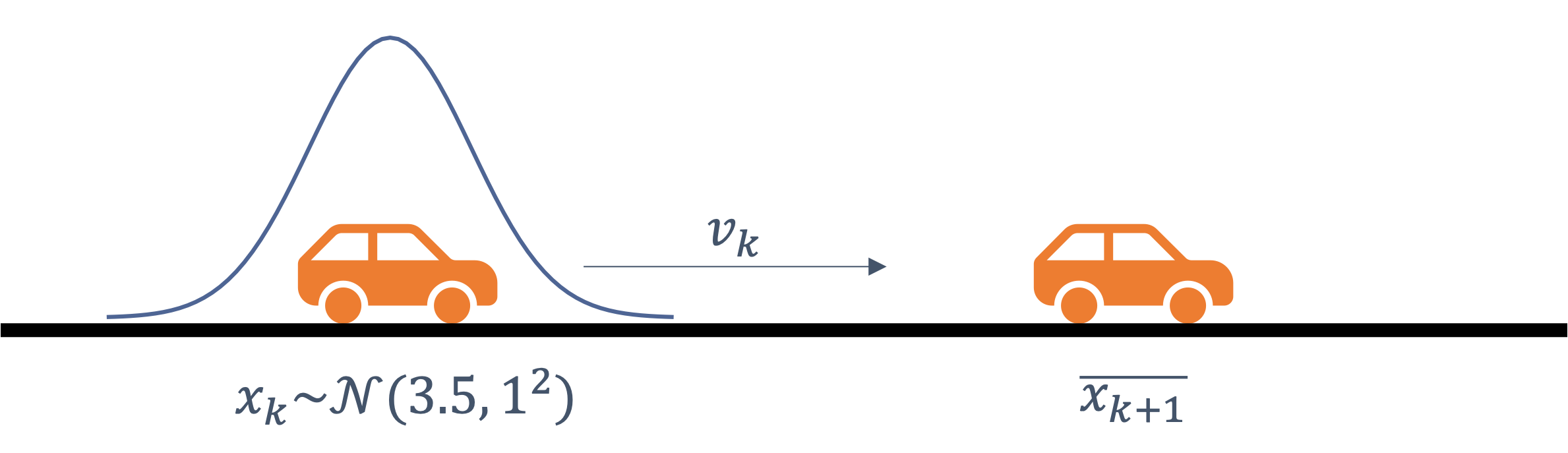

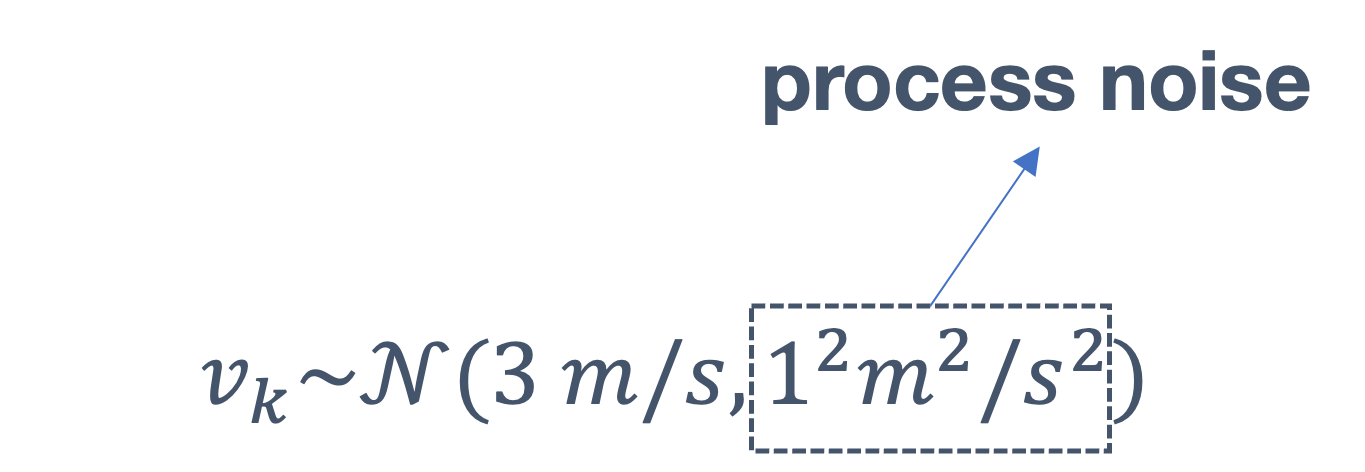

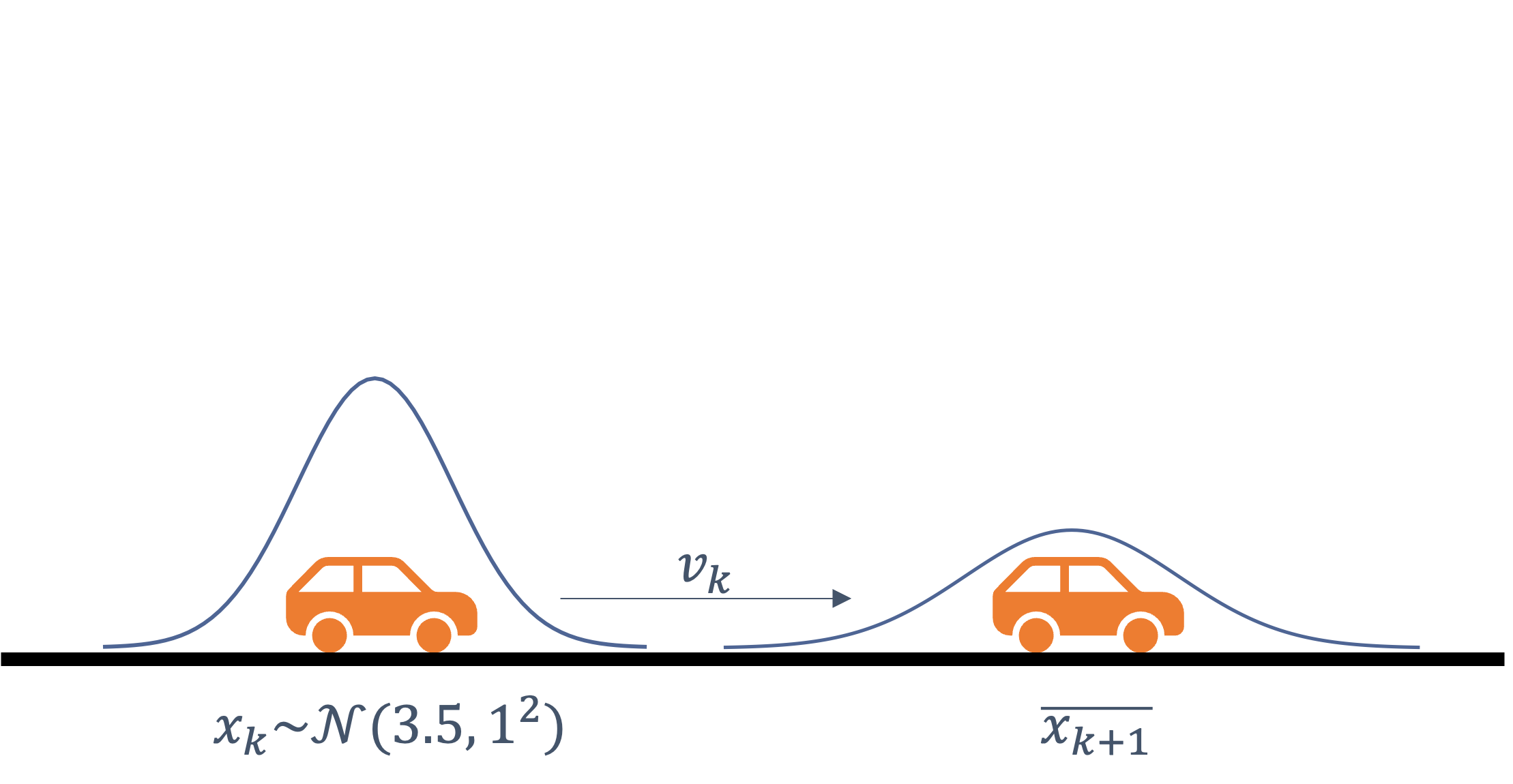

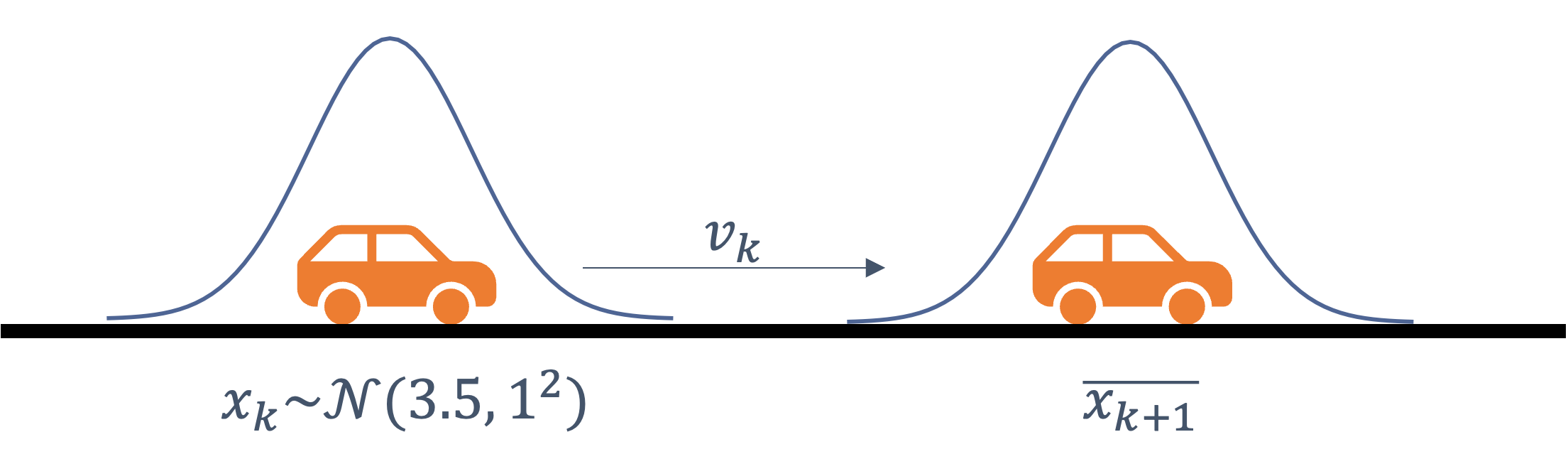

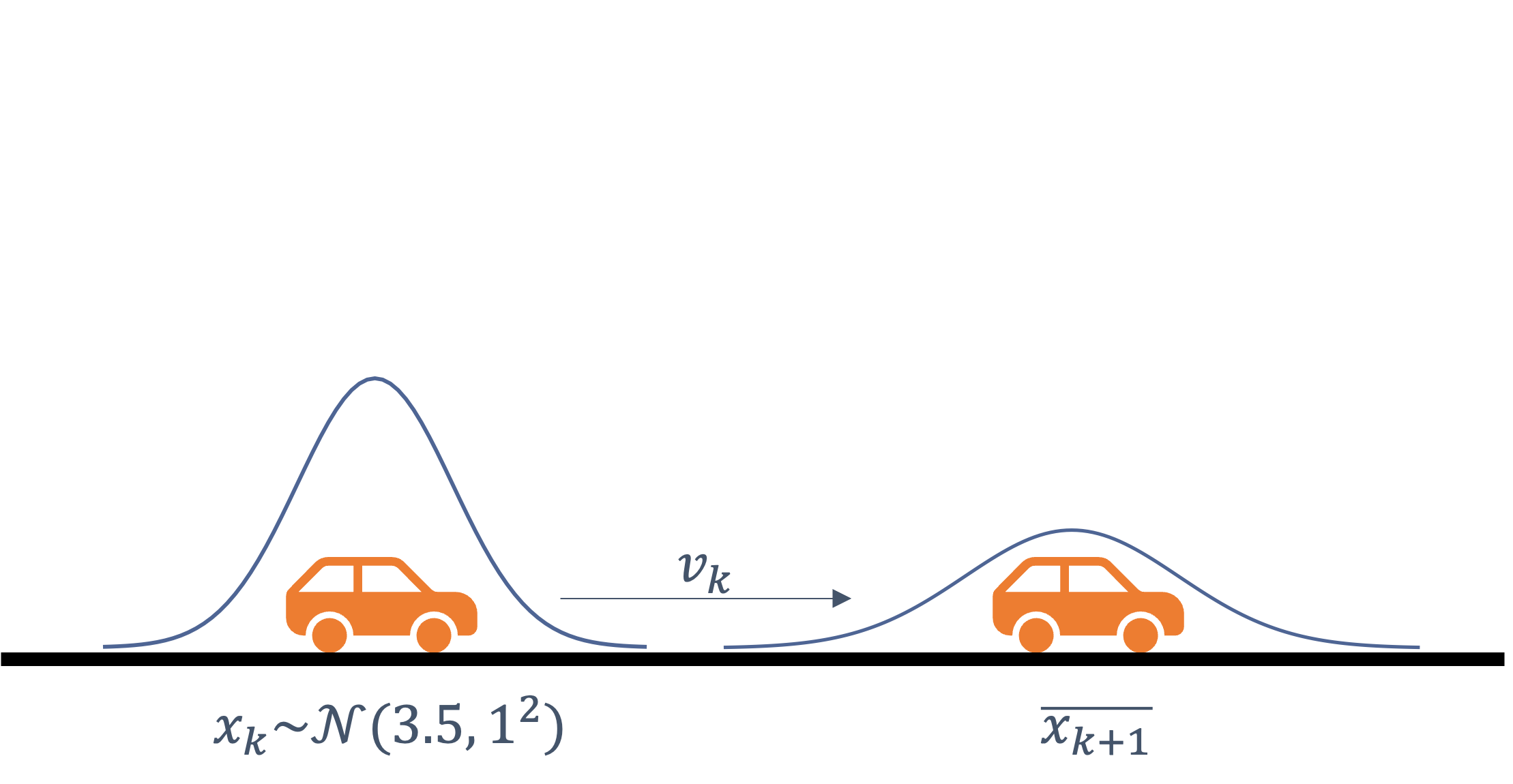

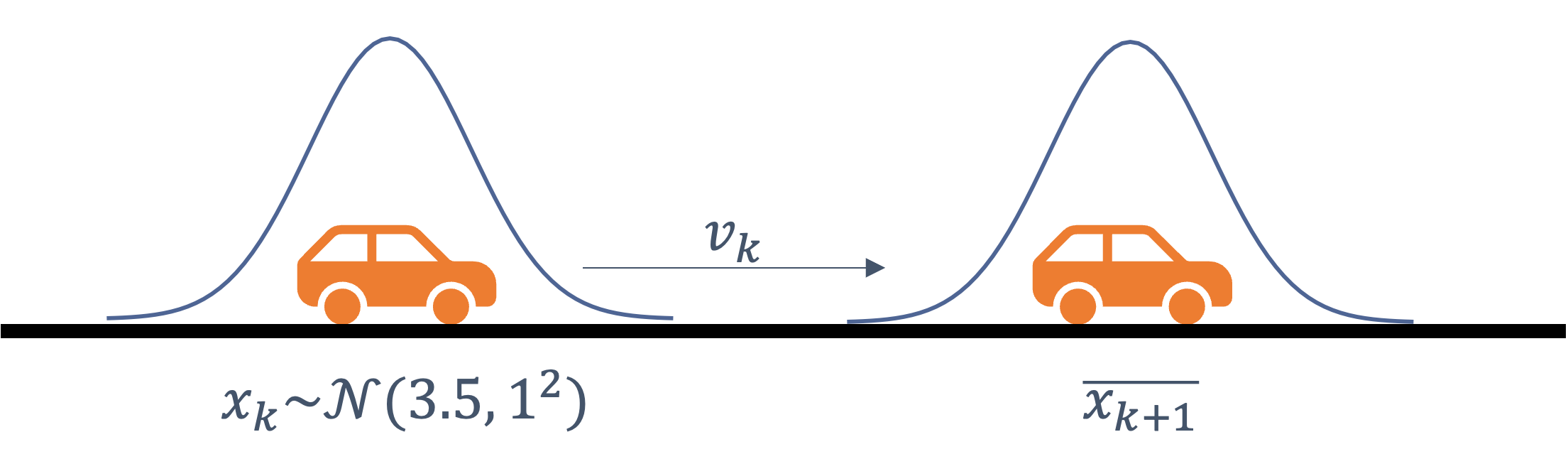

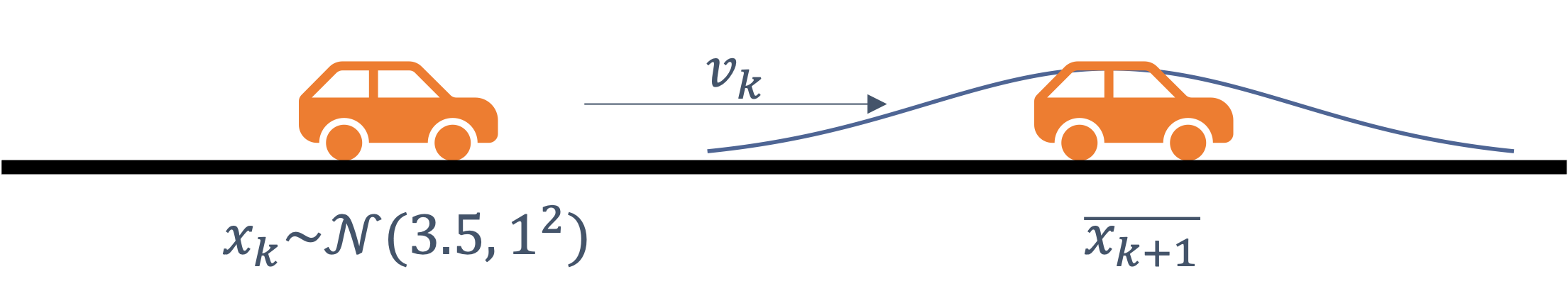

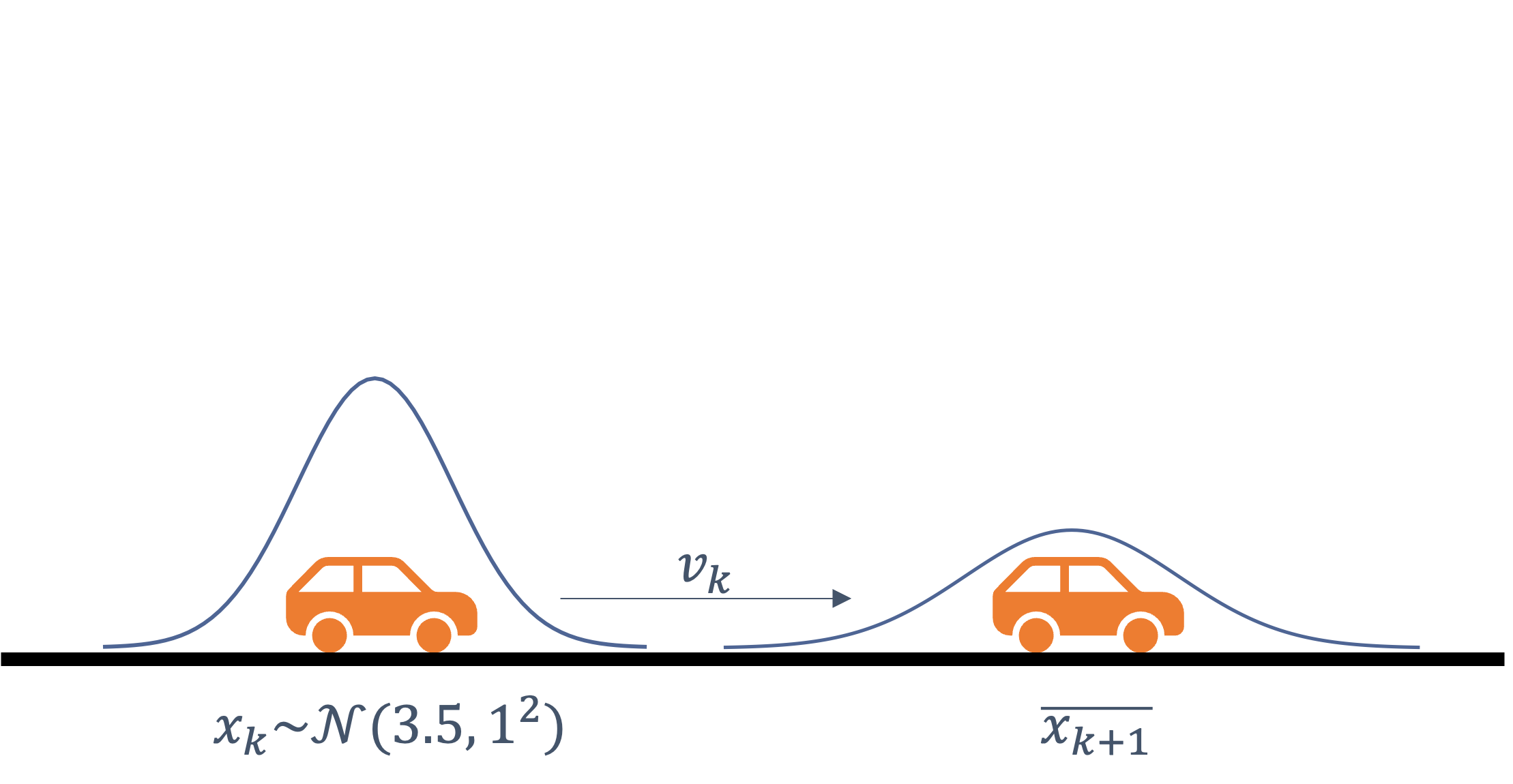

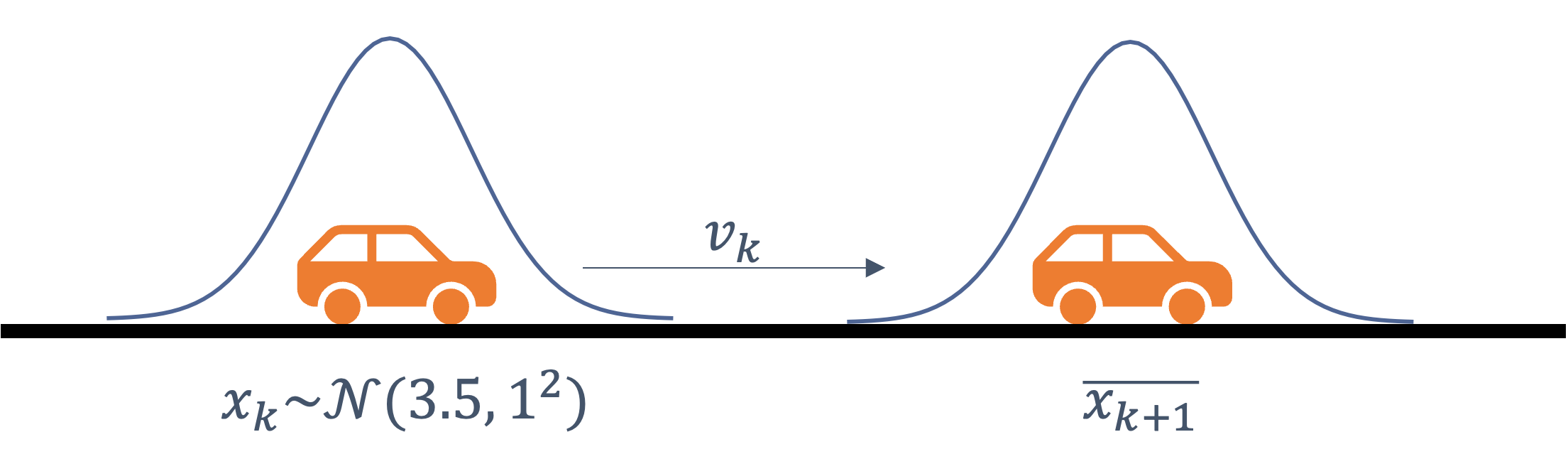

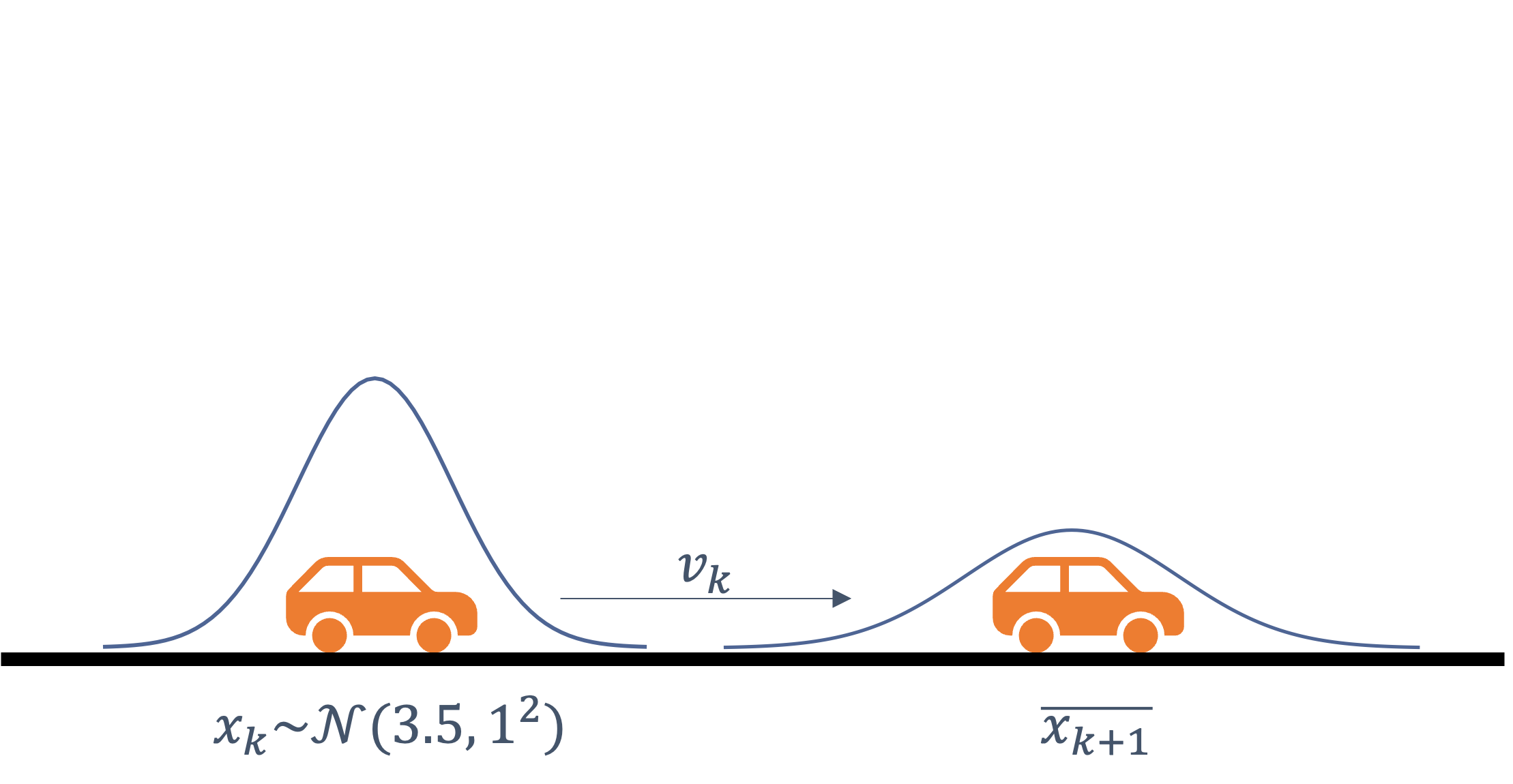

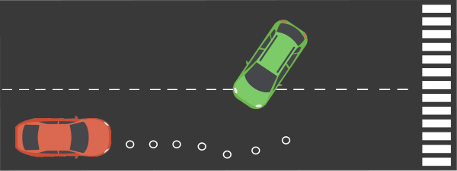

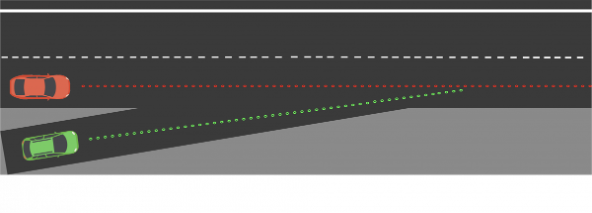

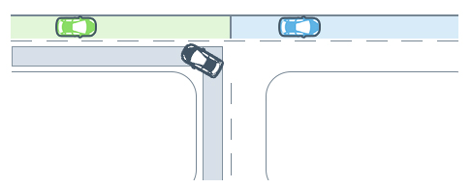

consider the following example of two cars…

the second car is approaching the first

sensors → constantly gathering data/sensing

- periodic sensing

on detection (of other car) → quickly compute what to do

- periodic sensing

- computation

take physical action (actuation) → say by braking in time

- periodic sensing

- computation

- actuation

- periodic sensing

- computation

- actuation

“control”

Remember this → on detection (of other car) →

“quickly” compute → complete computation/actuation → before a deadline

This is a real-time system.

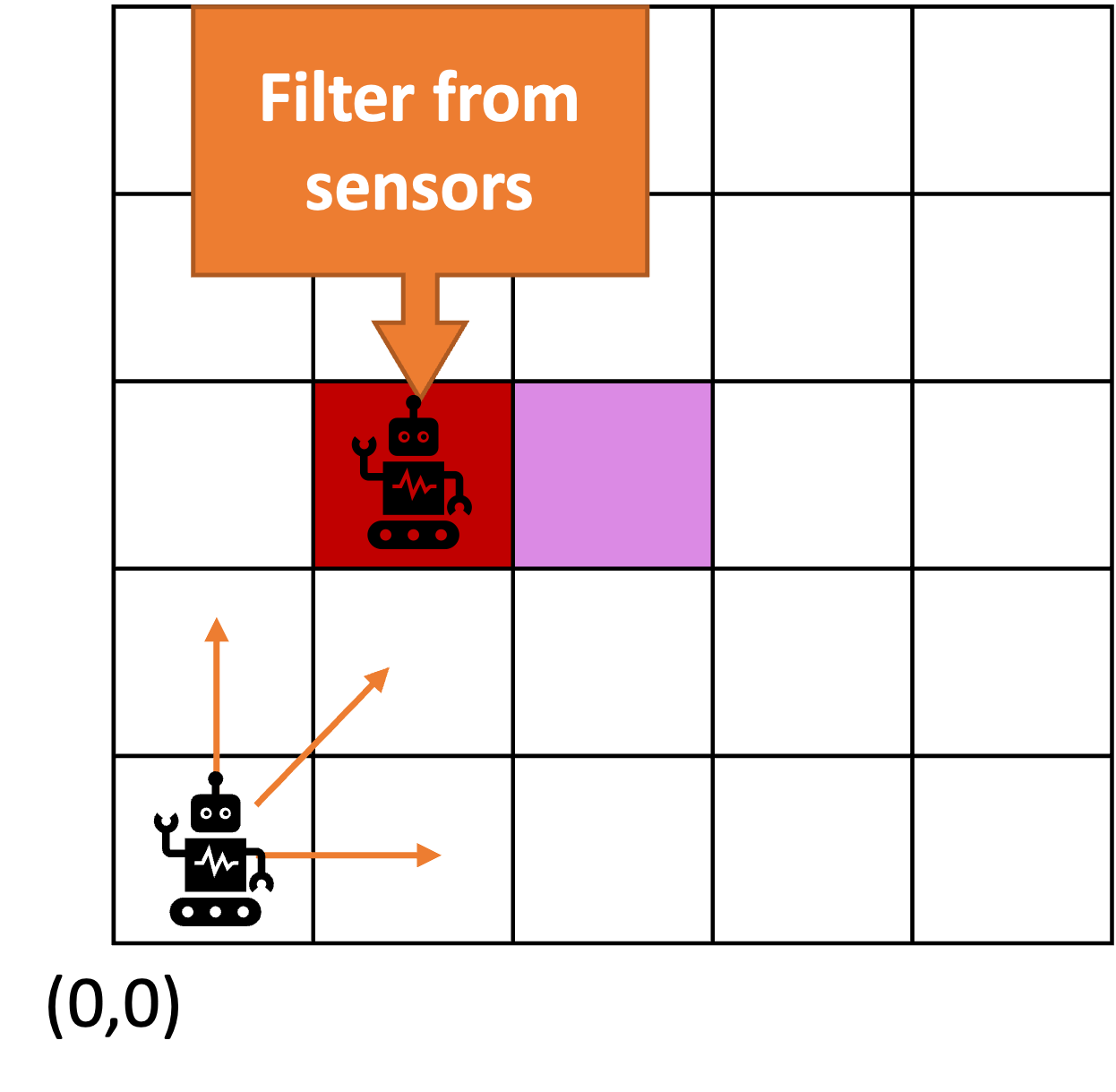

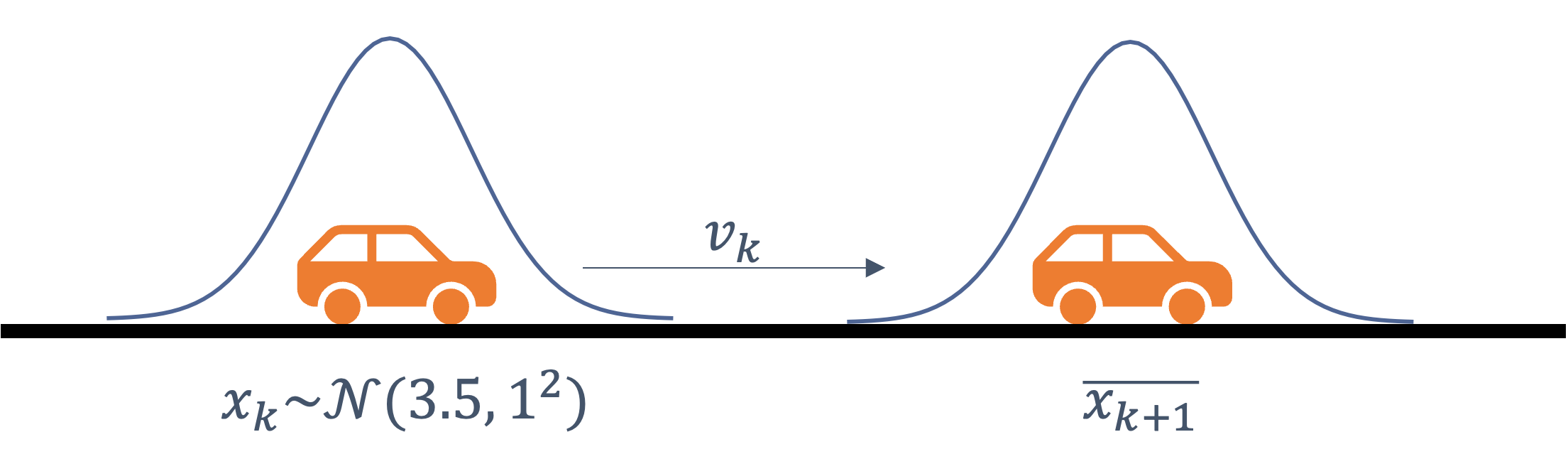

1.5.1 Come back to sensing

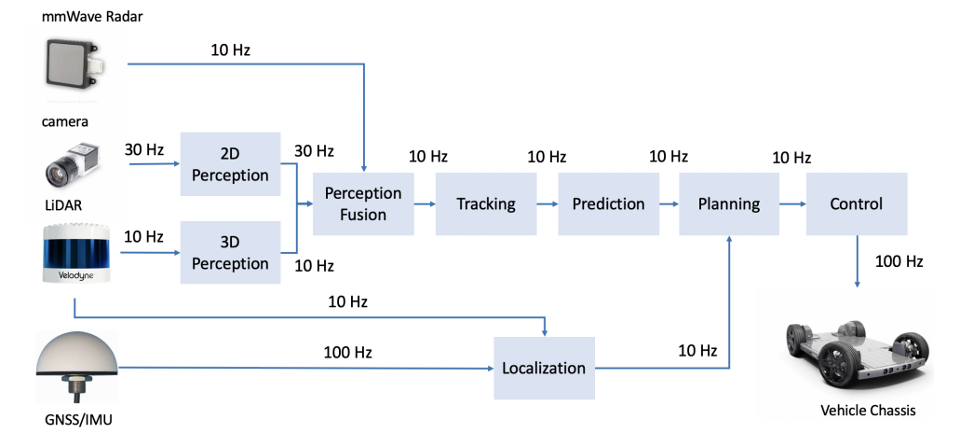

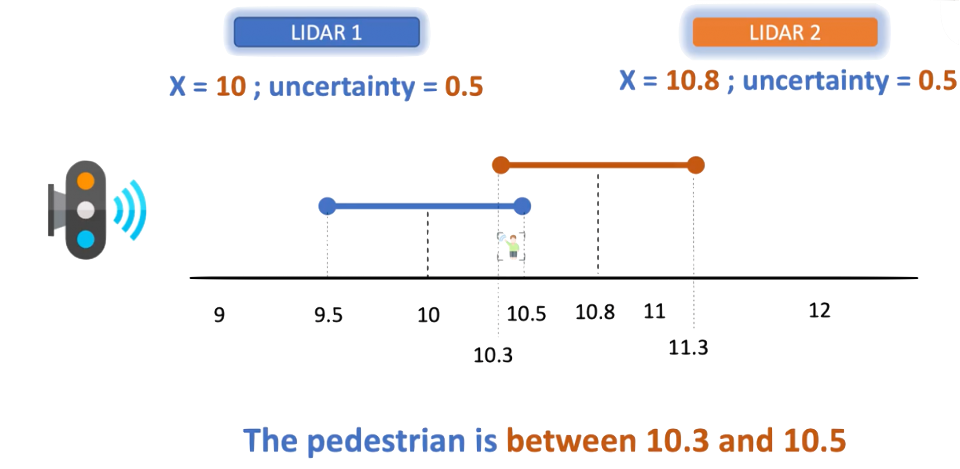

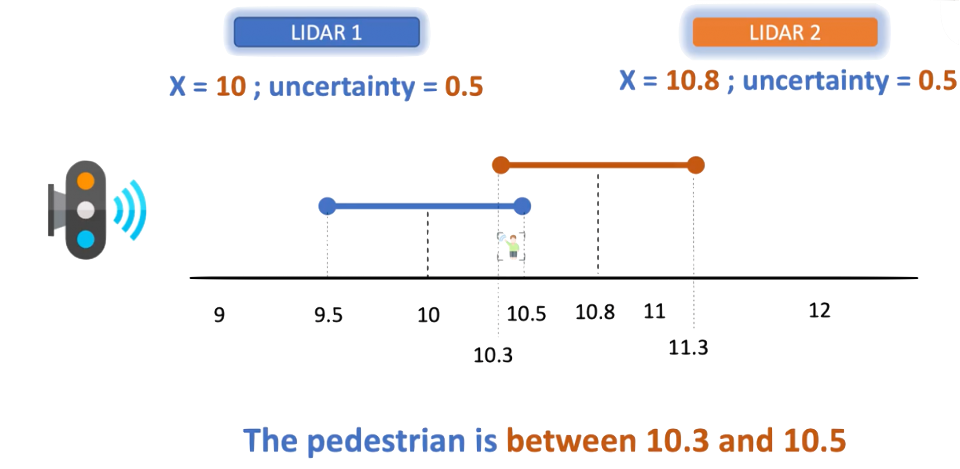

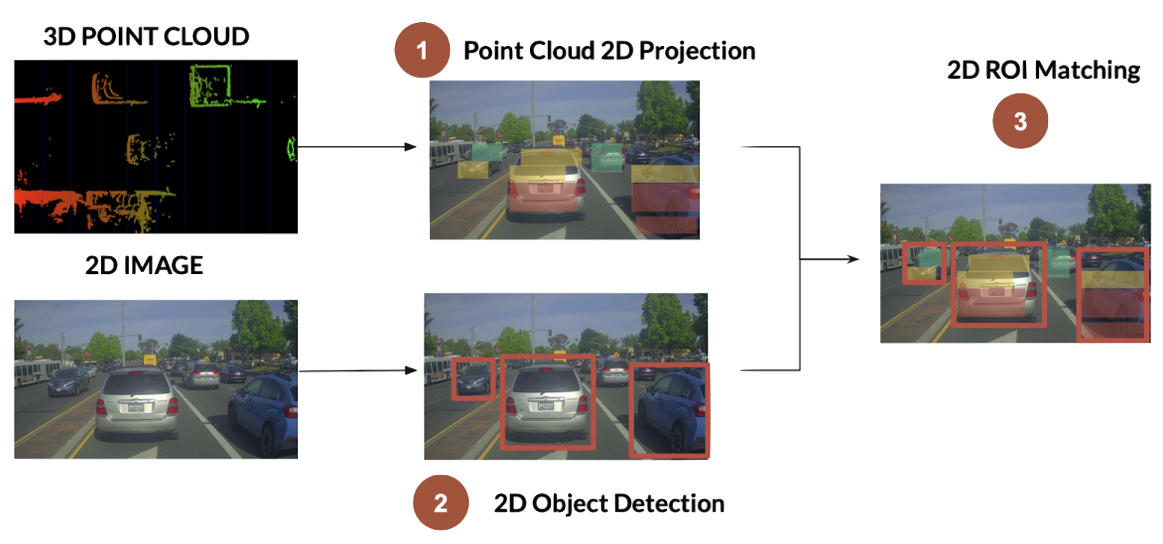

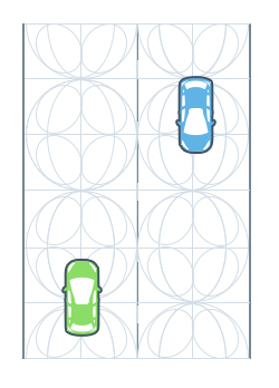

Multiple sensors in an autonomous vehicle → need to combine them somehow

sensor fusion

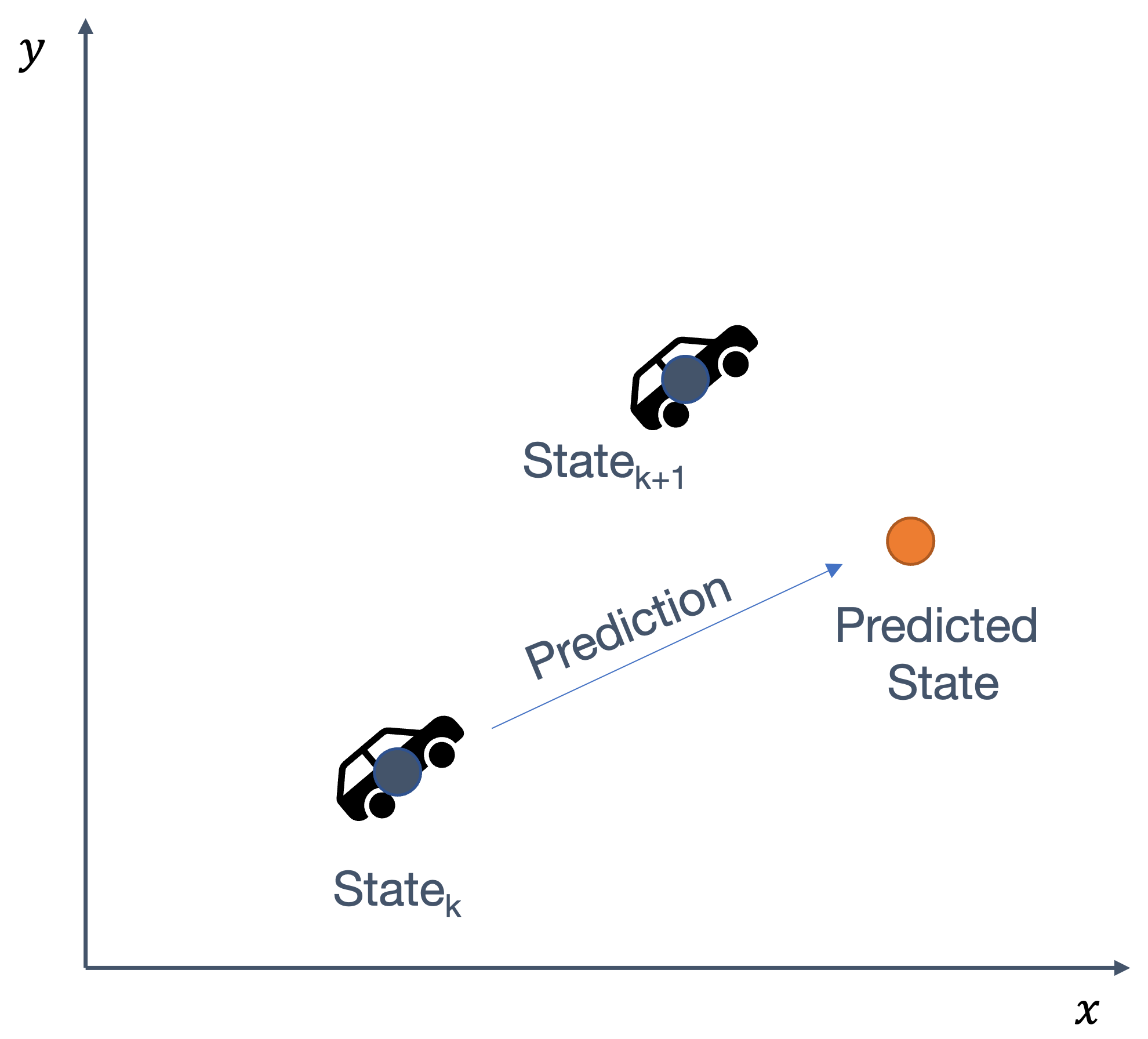

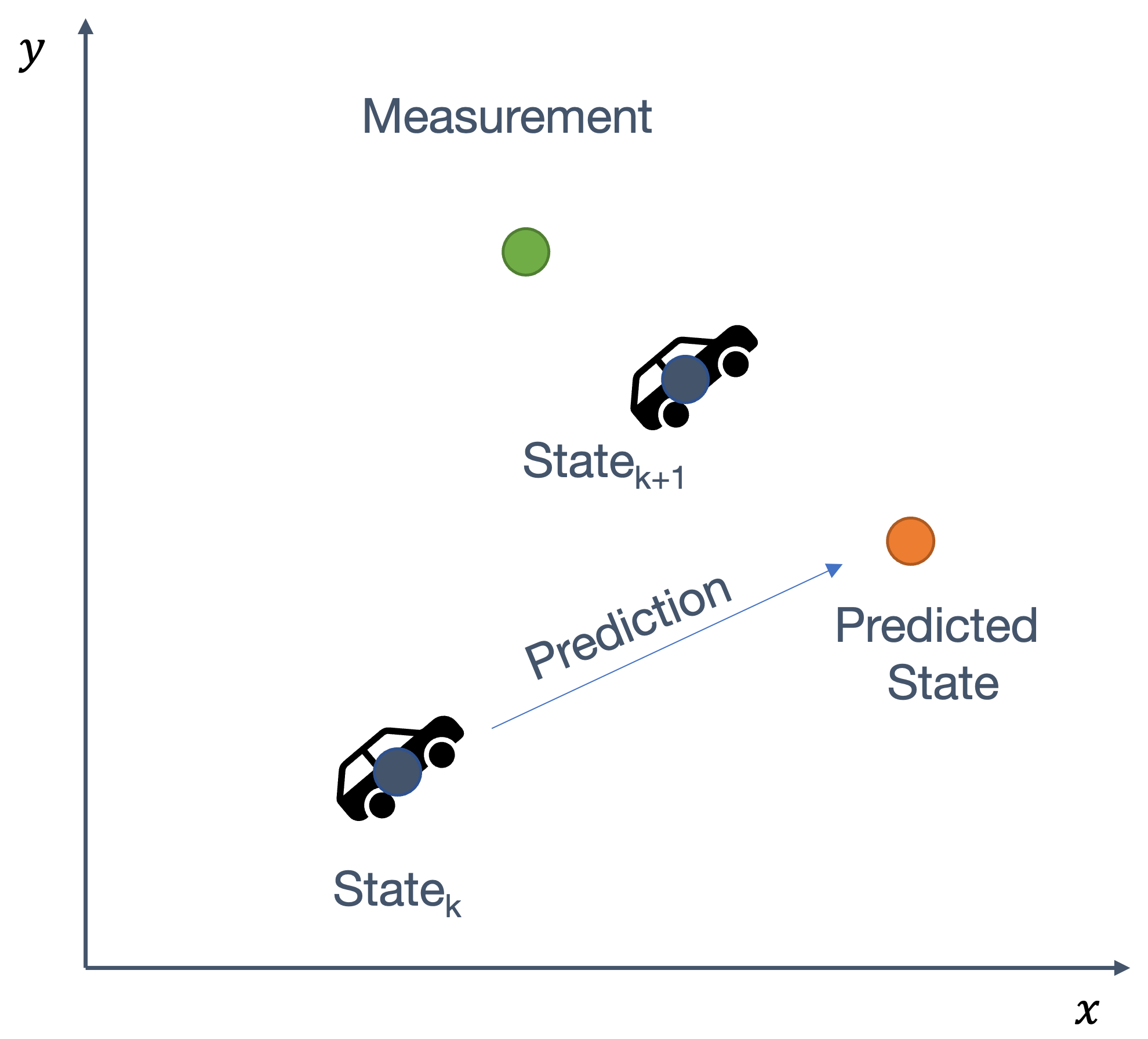

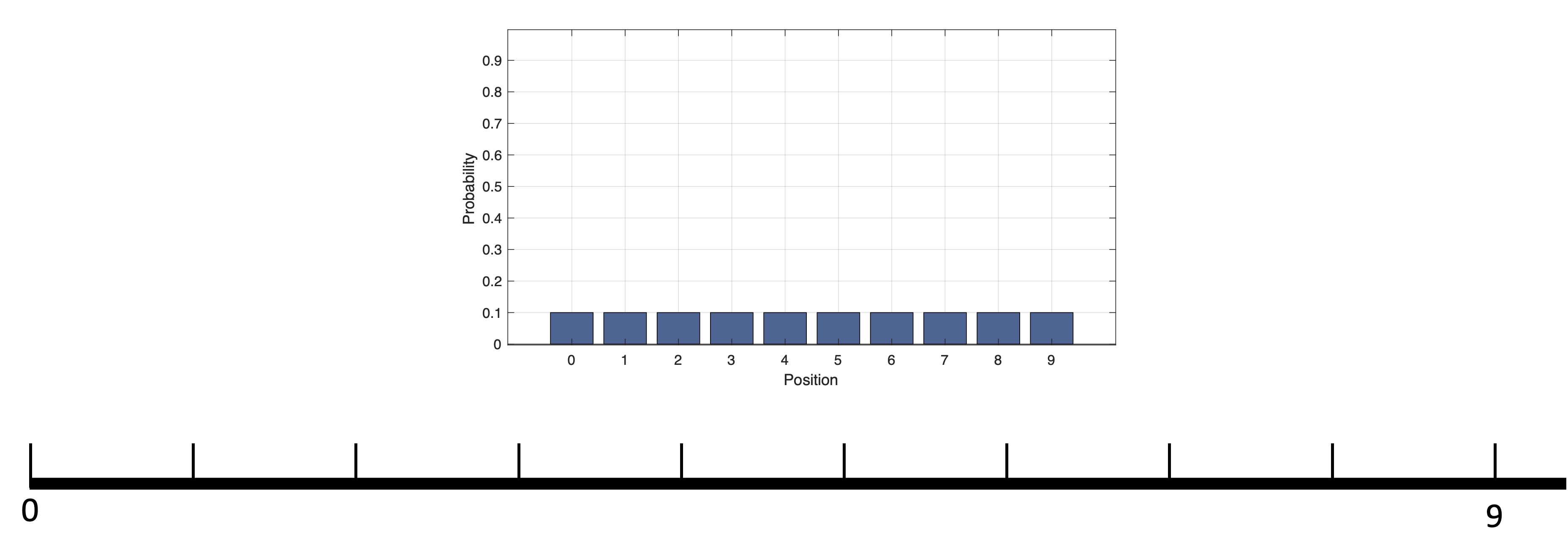

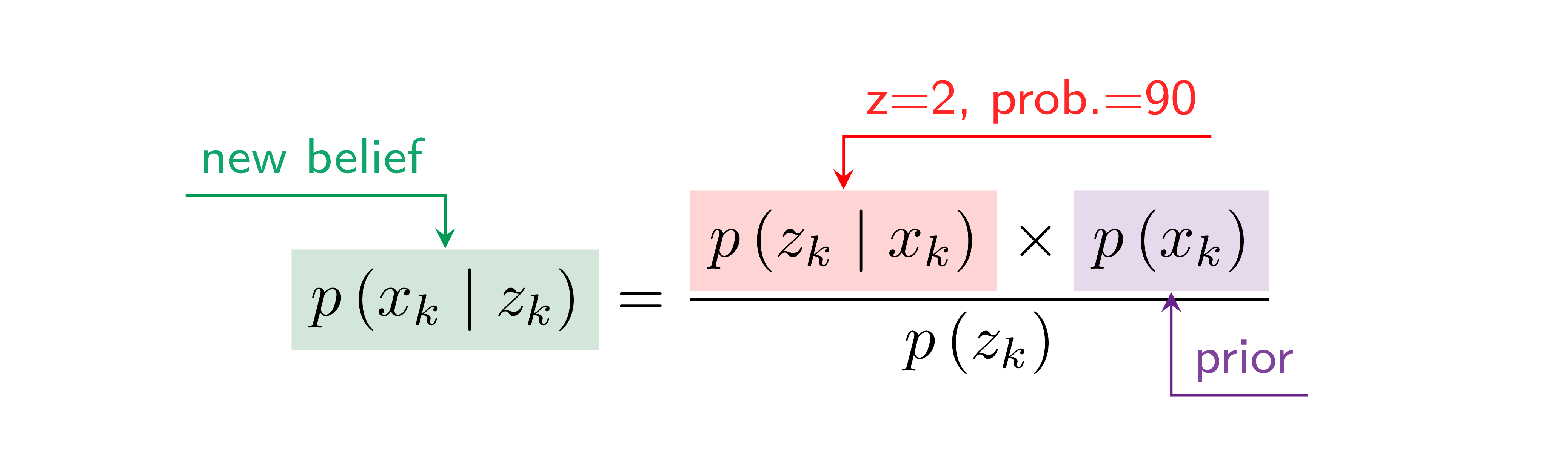

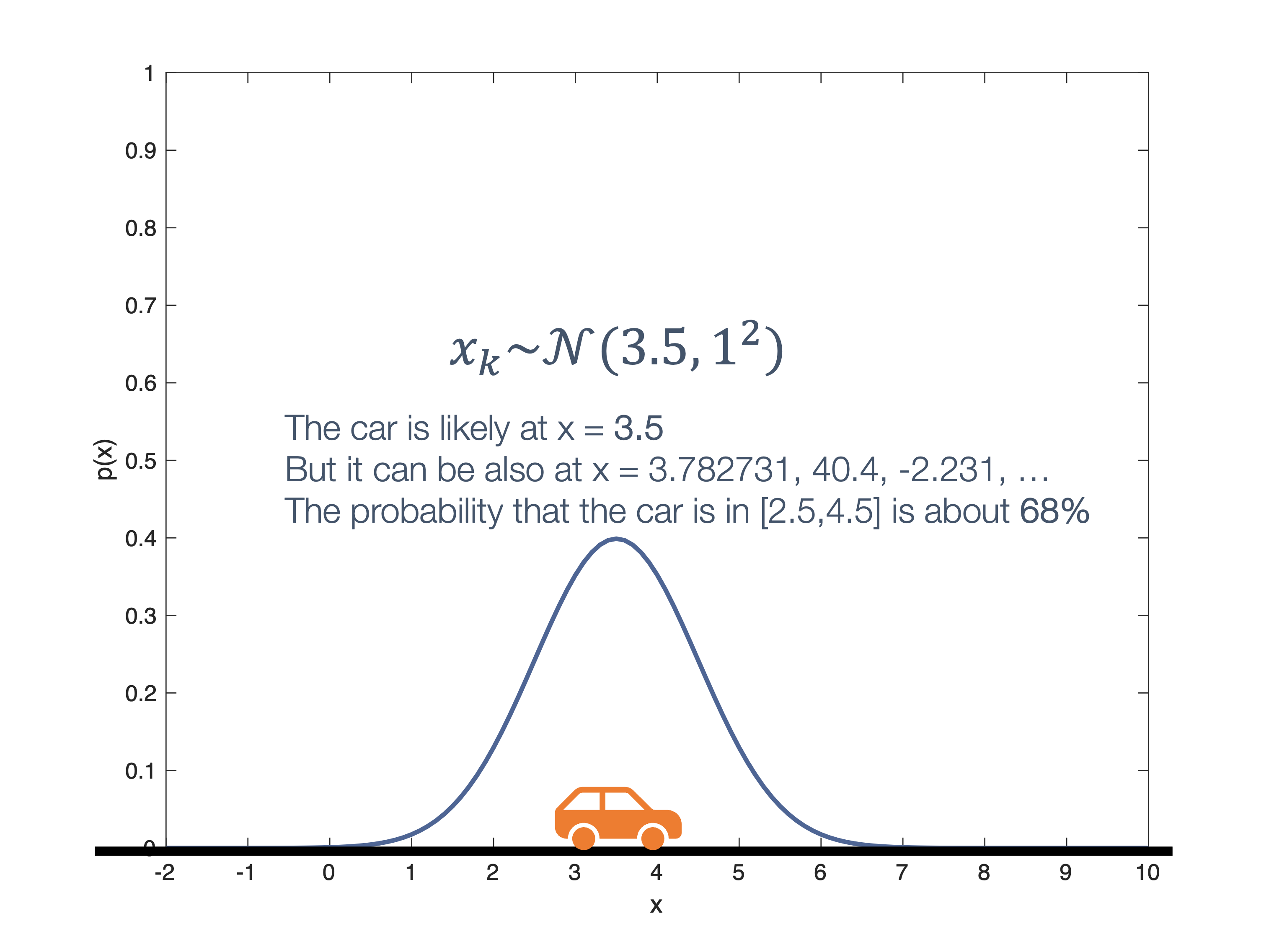

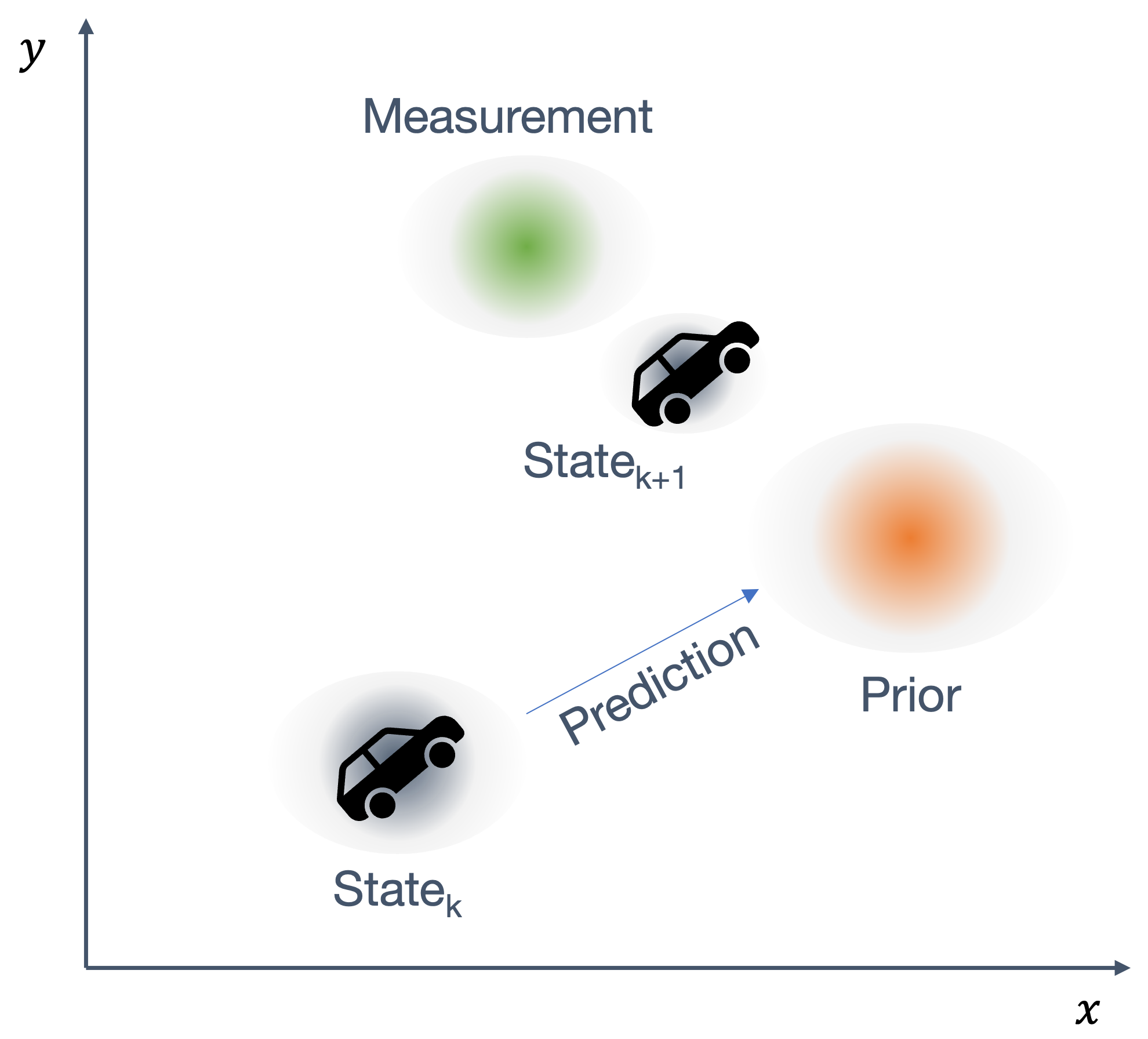

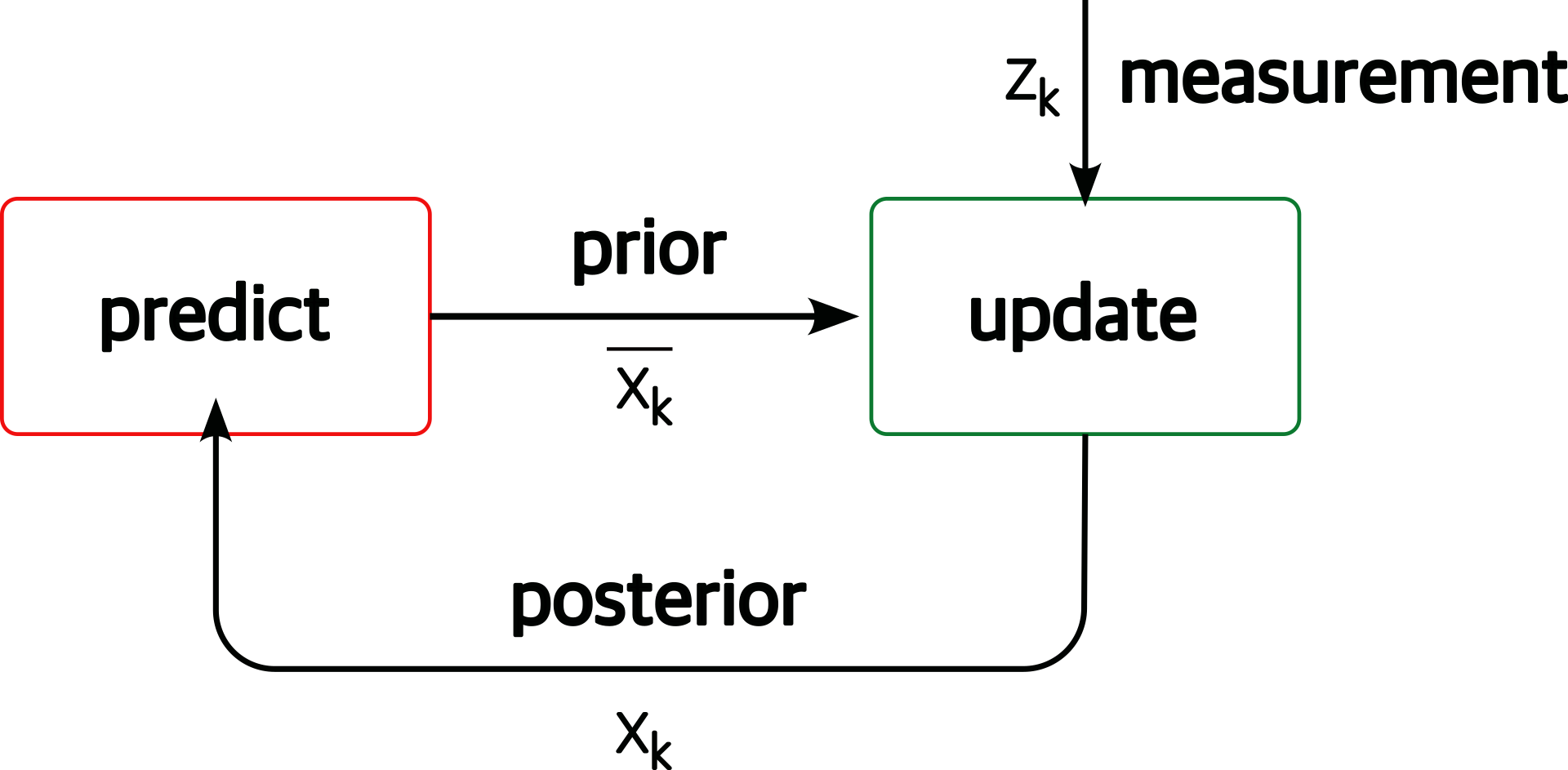

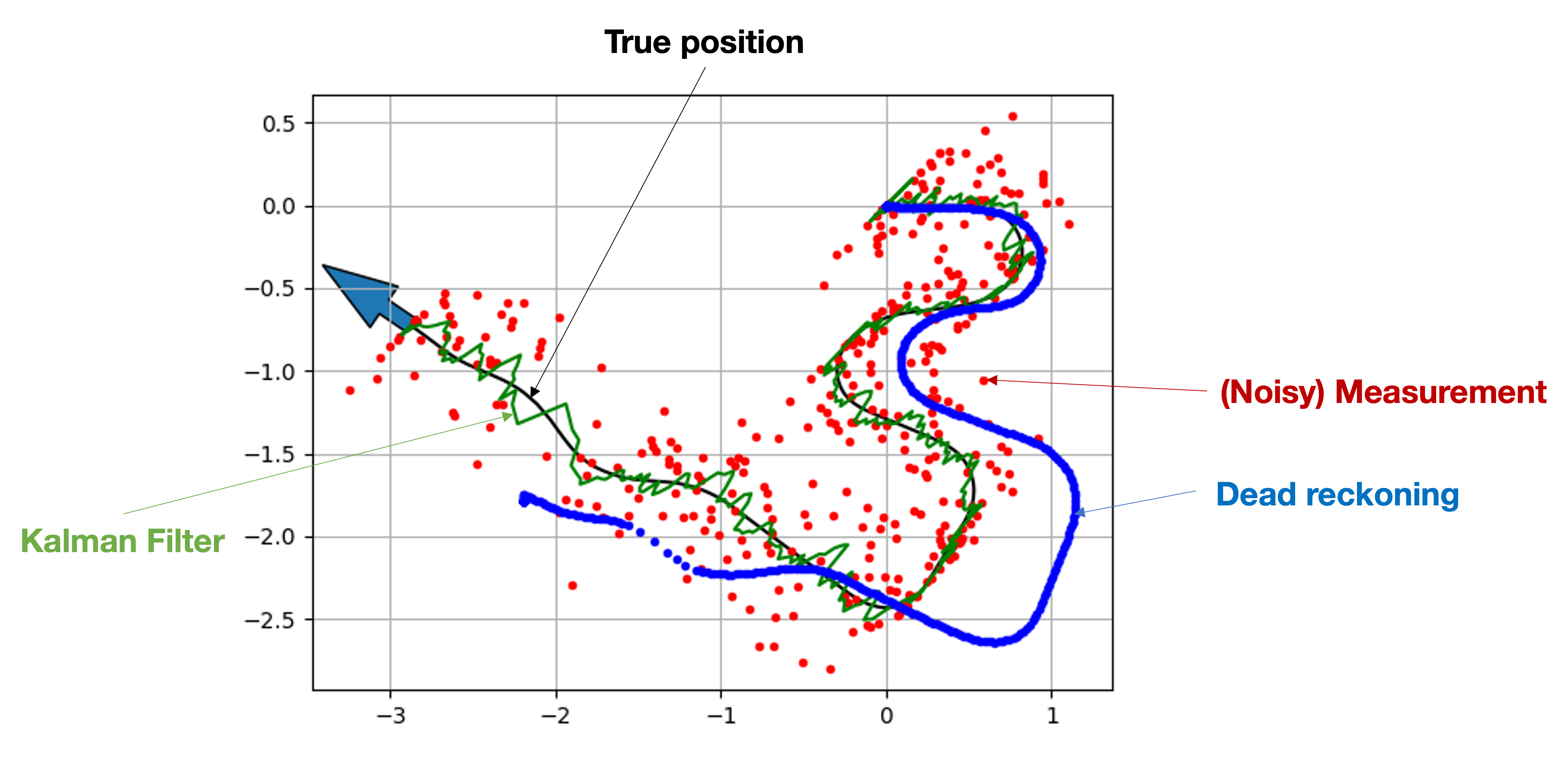

Once we have information from the sensors (fused or otherwise)…

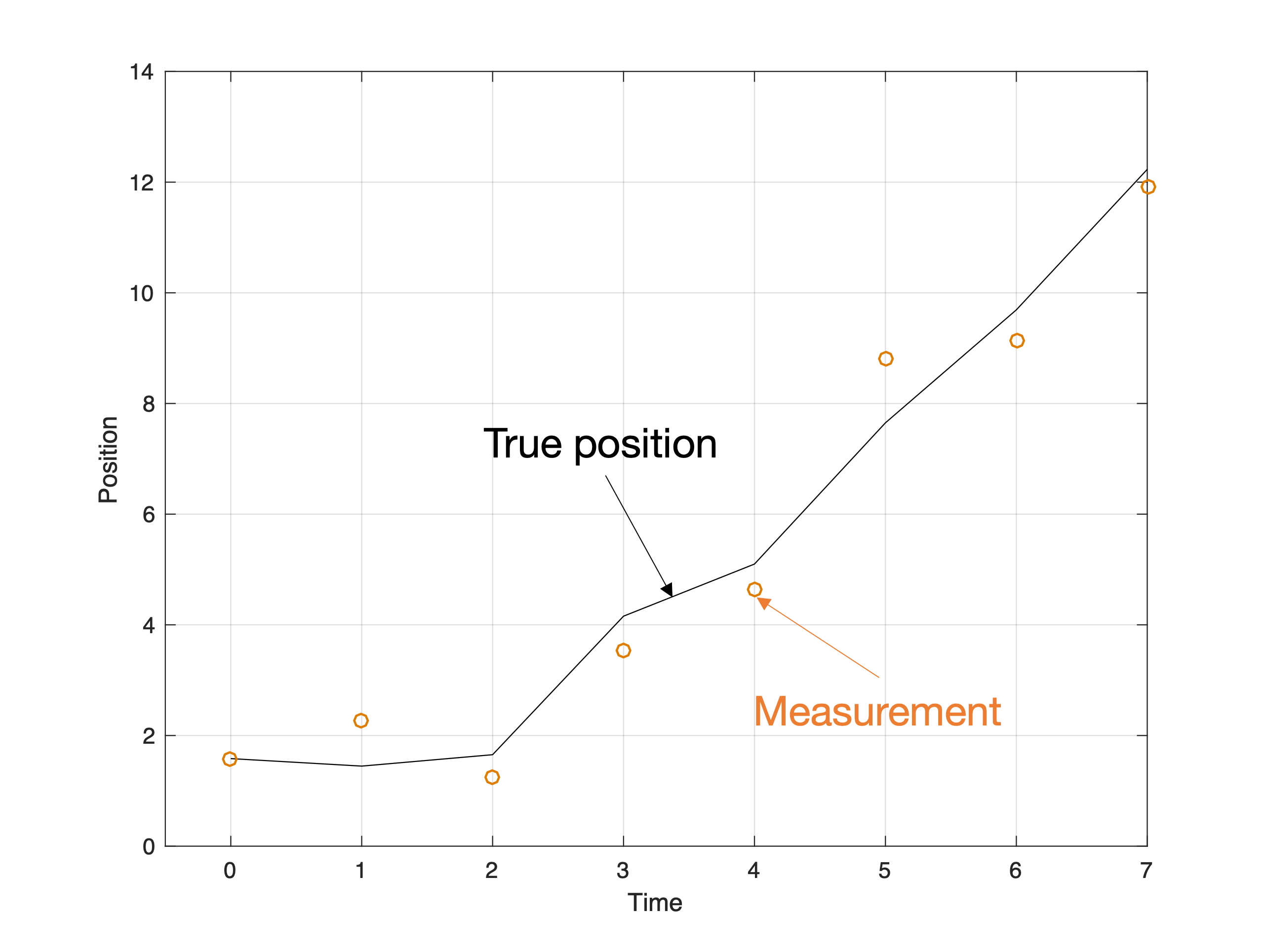

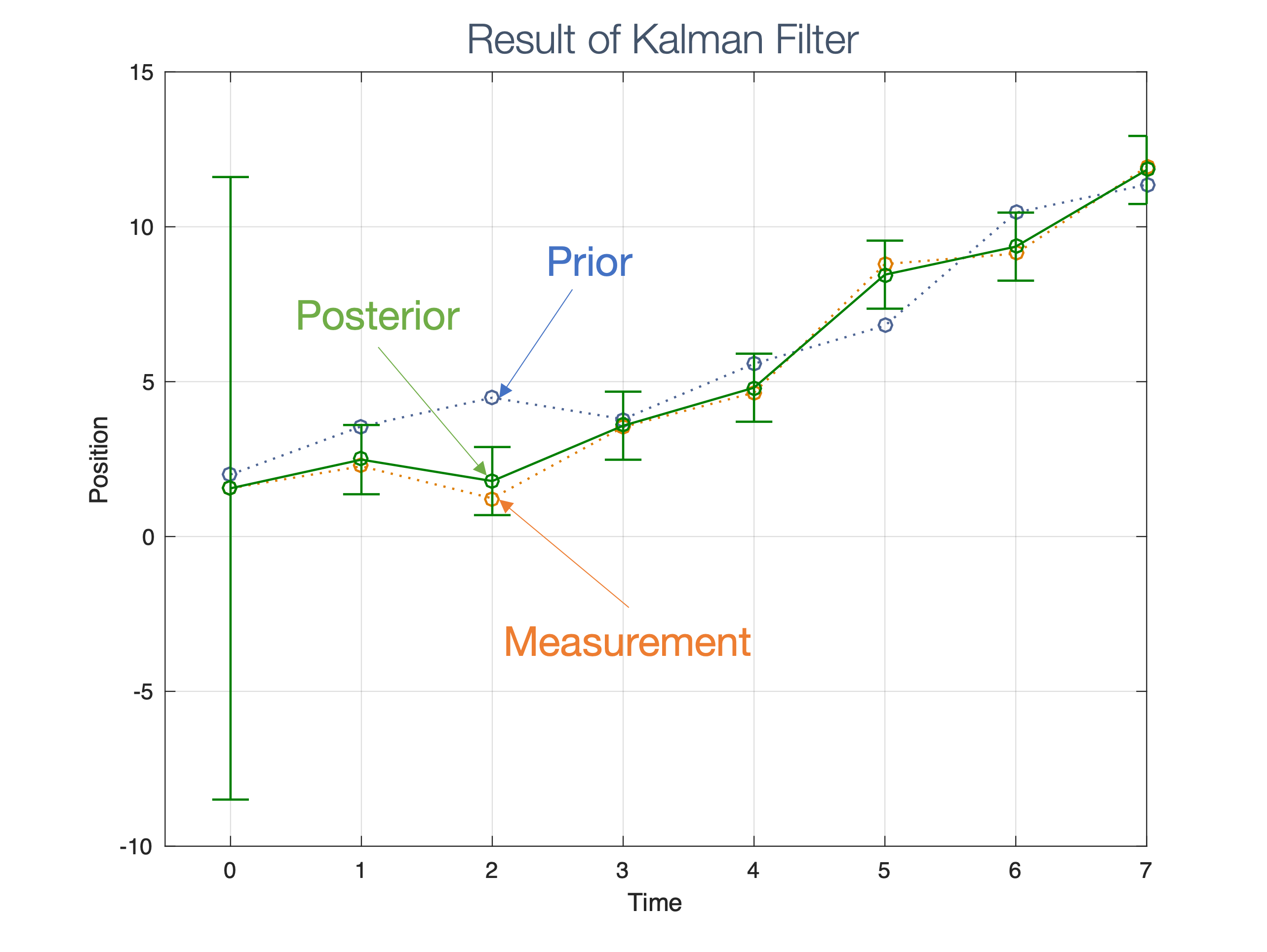

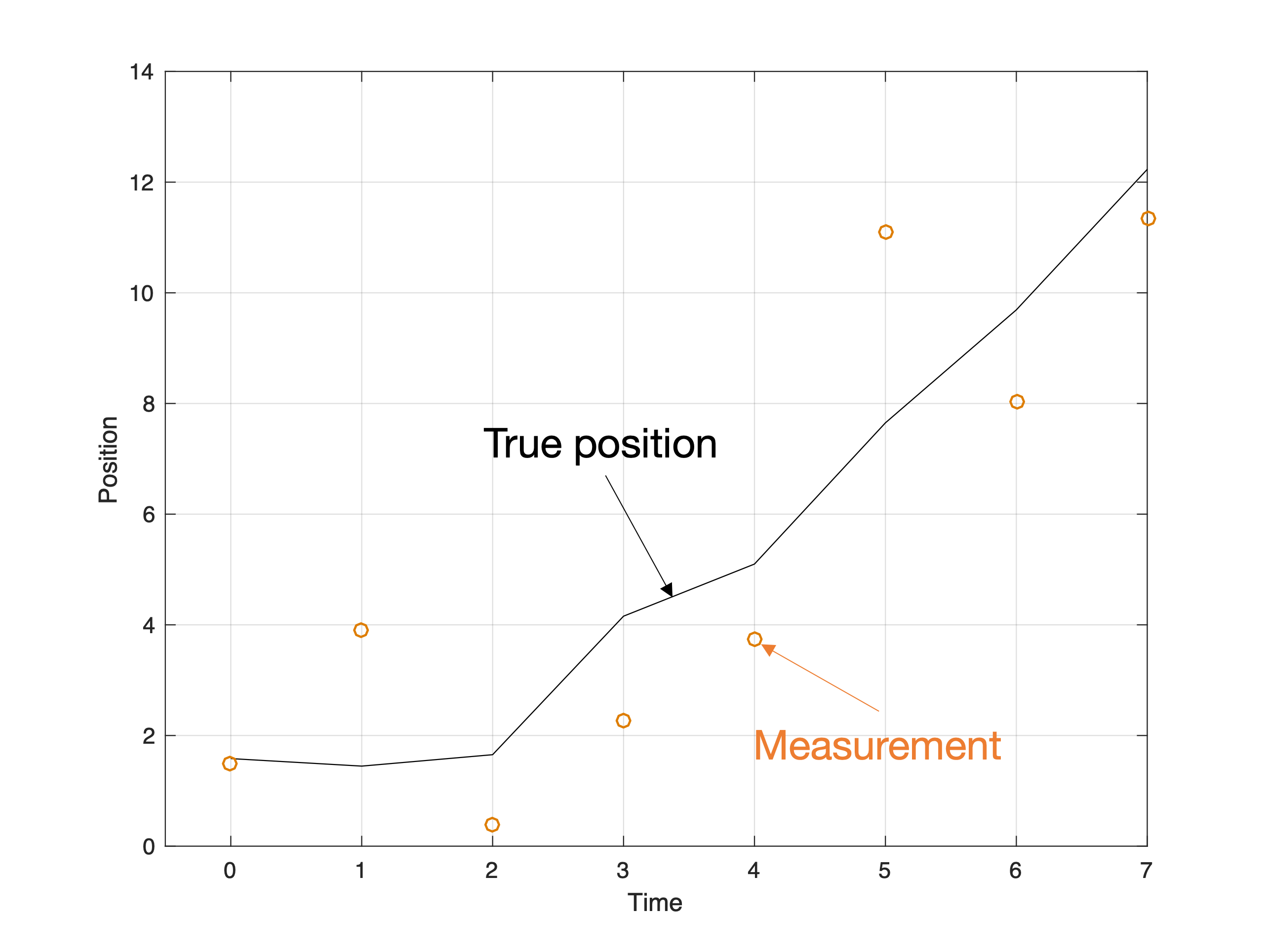

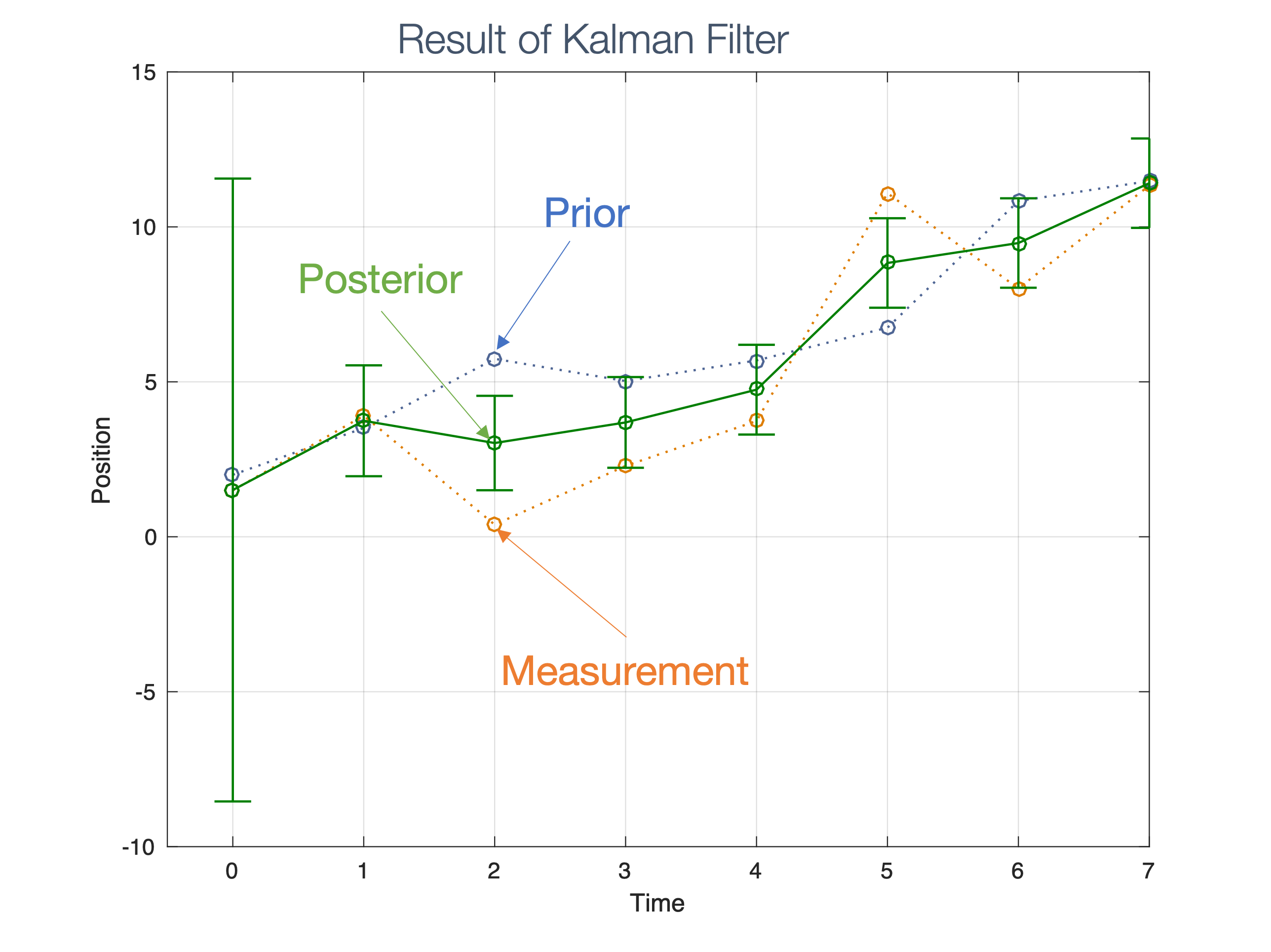

We need state estimation (kalman filter, ekf).

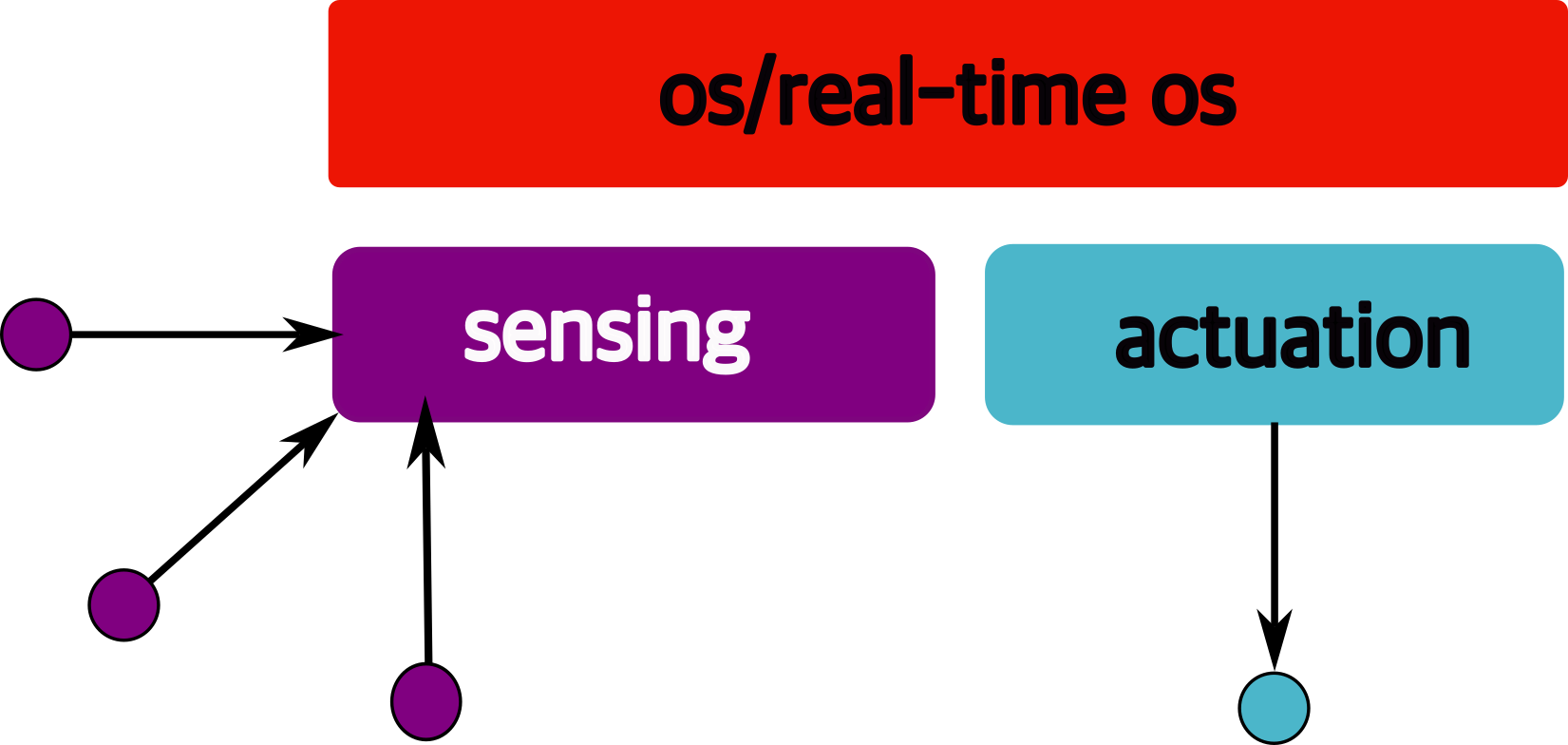

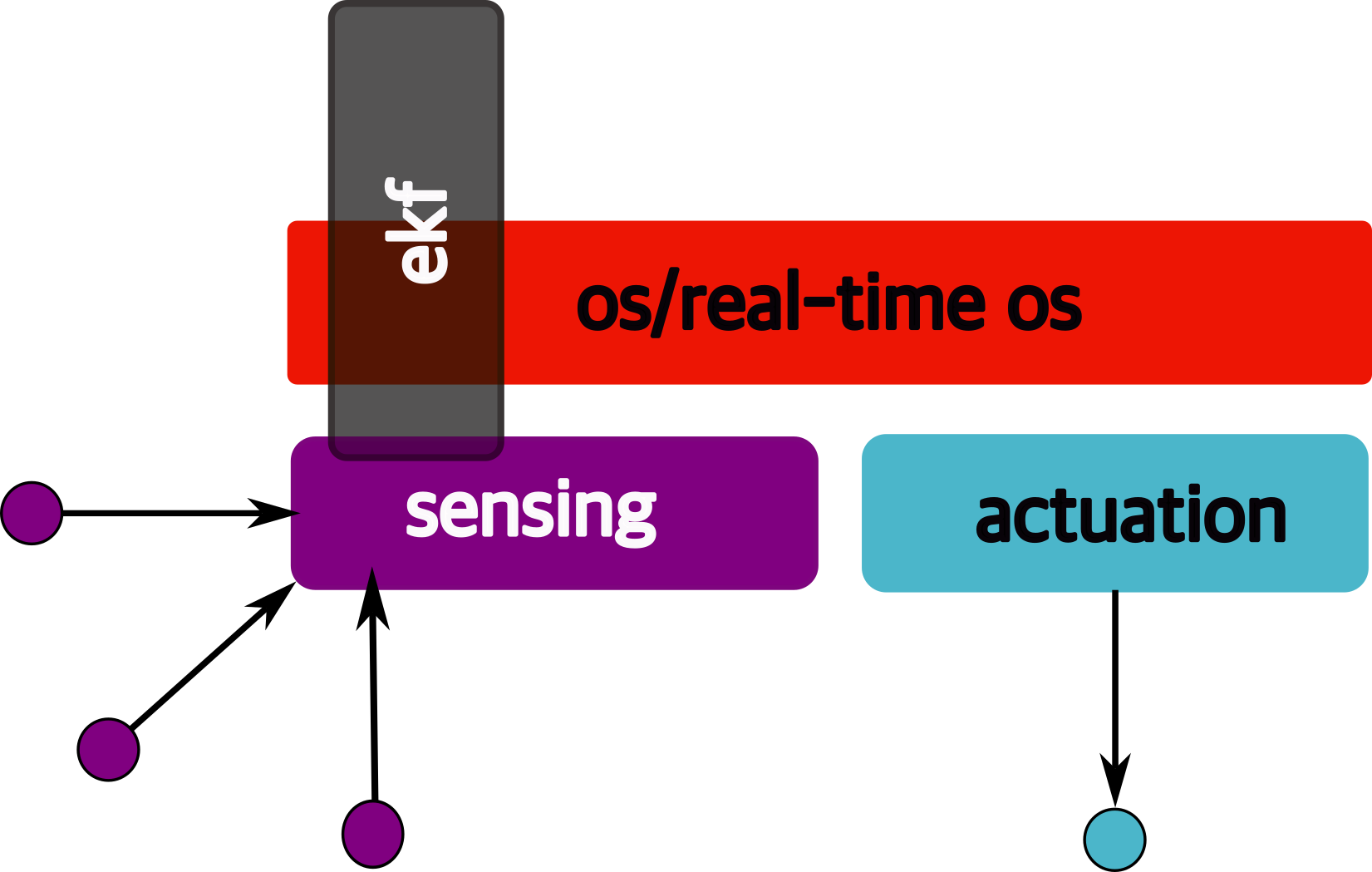

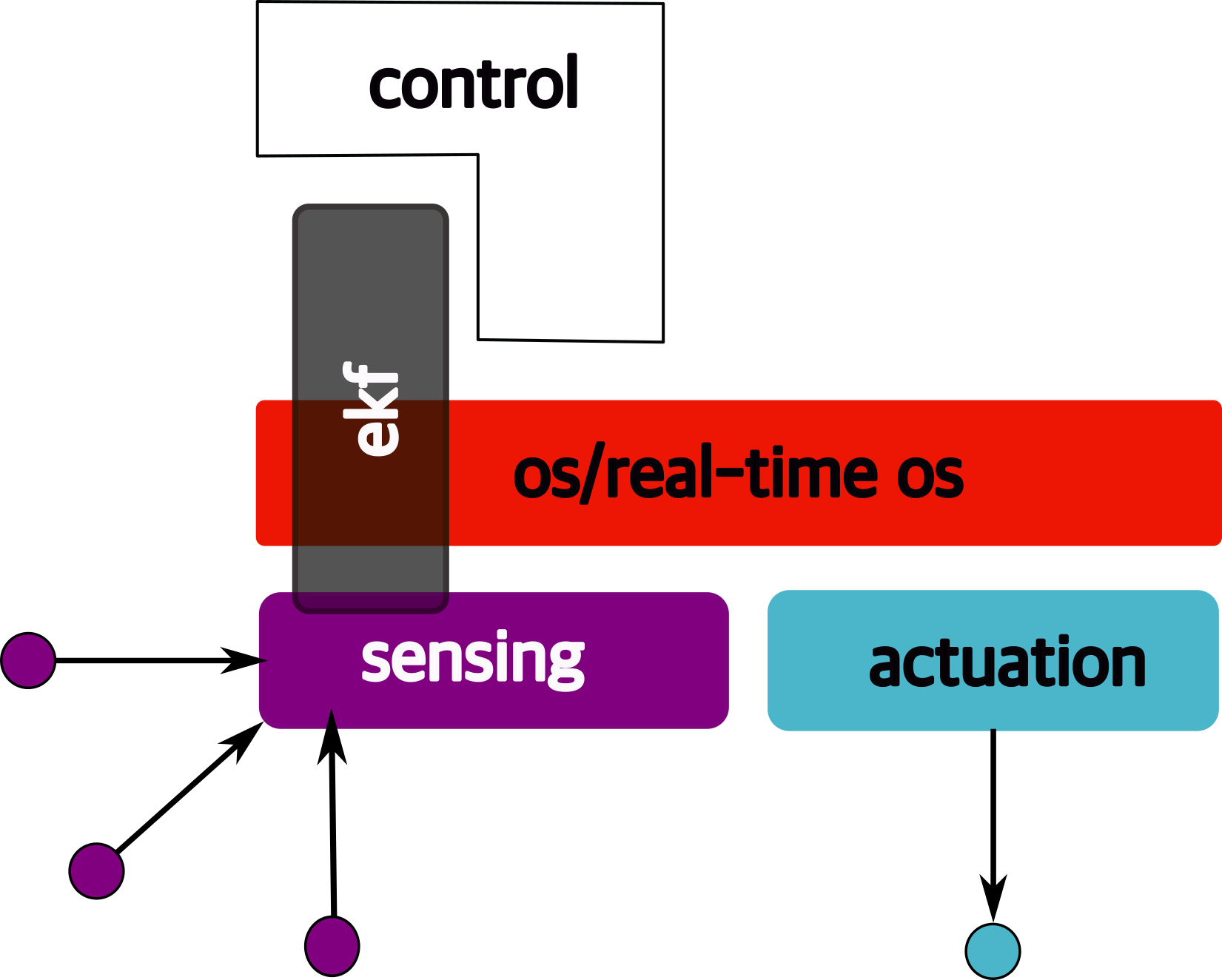

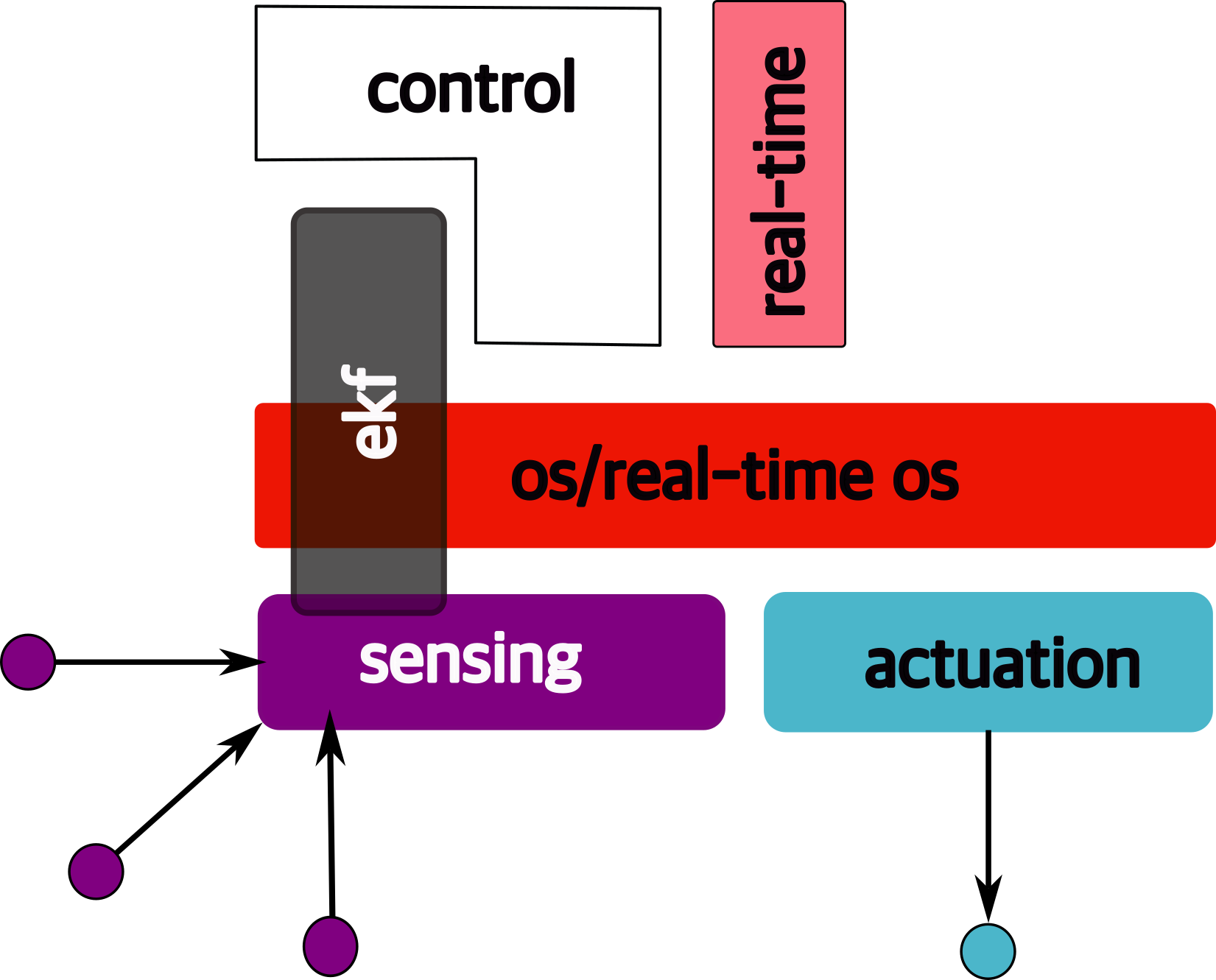

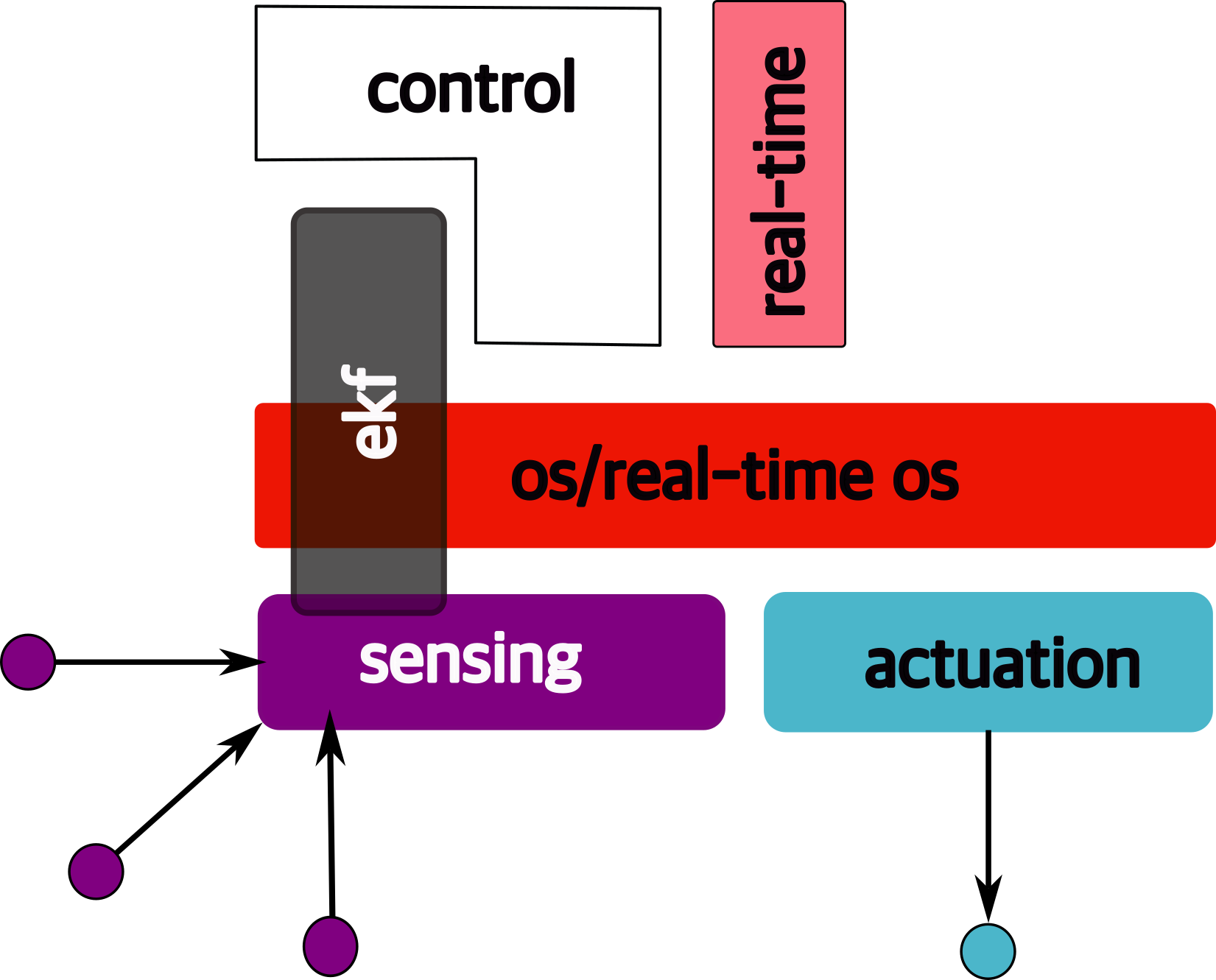

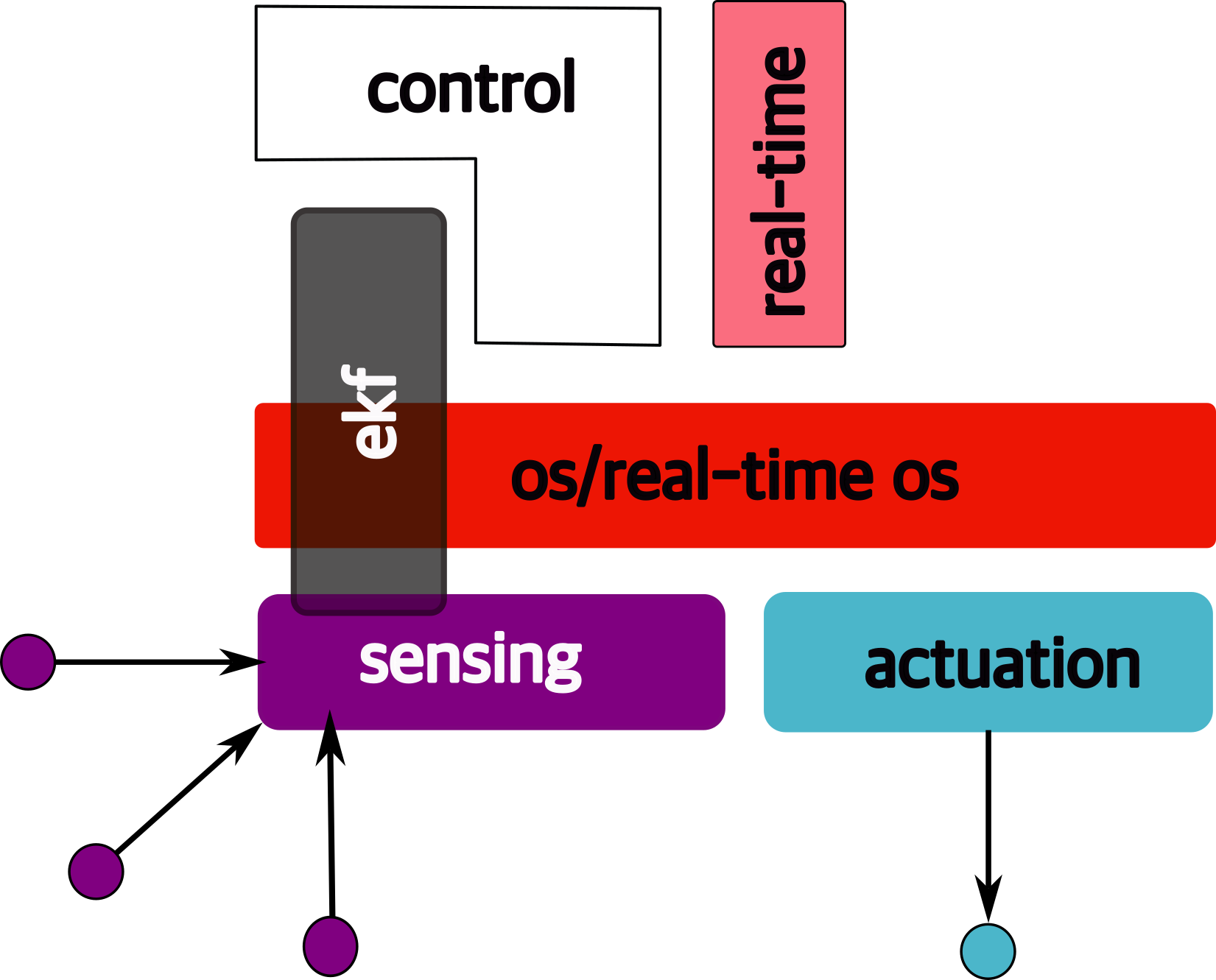

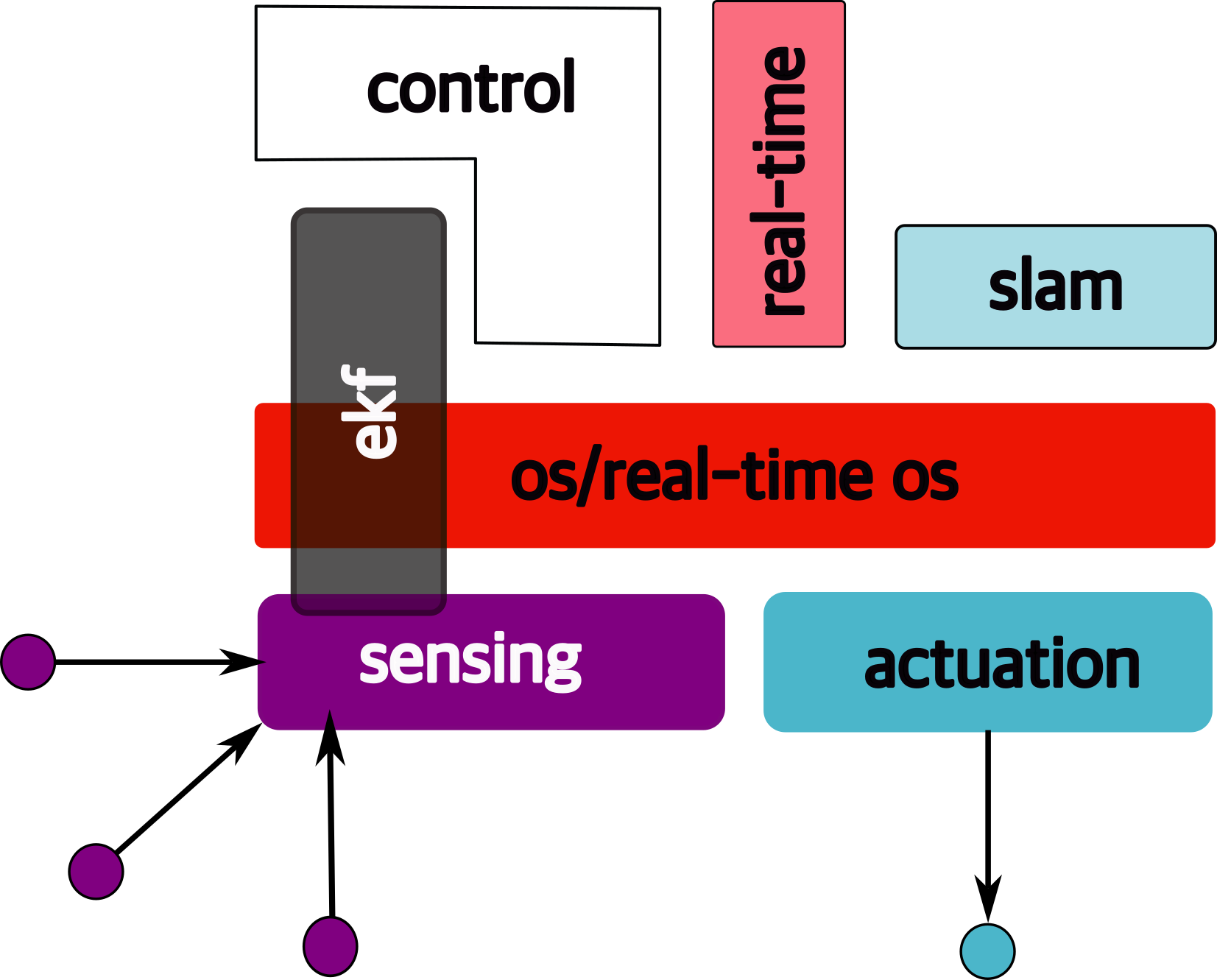

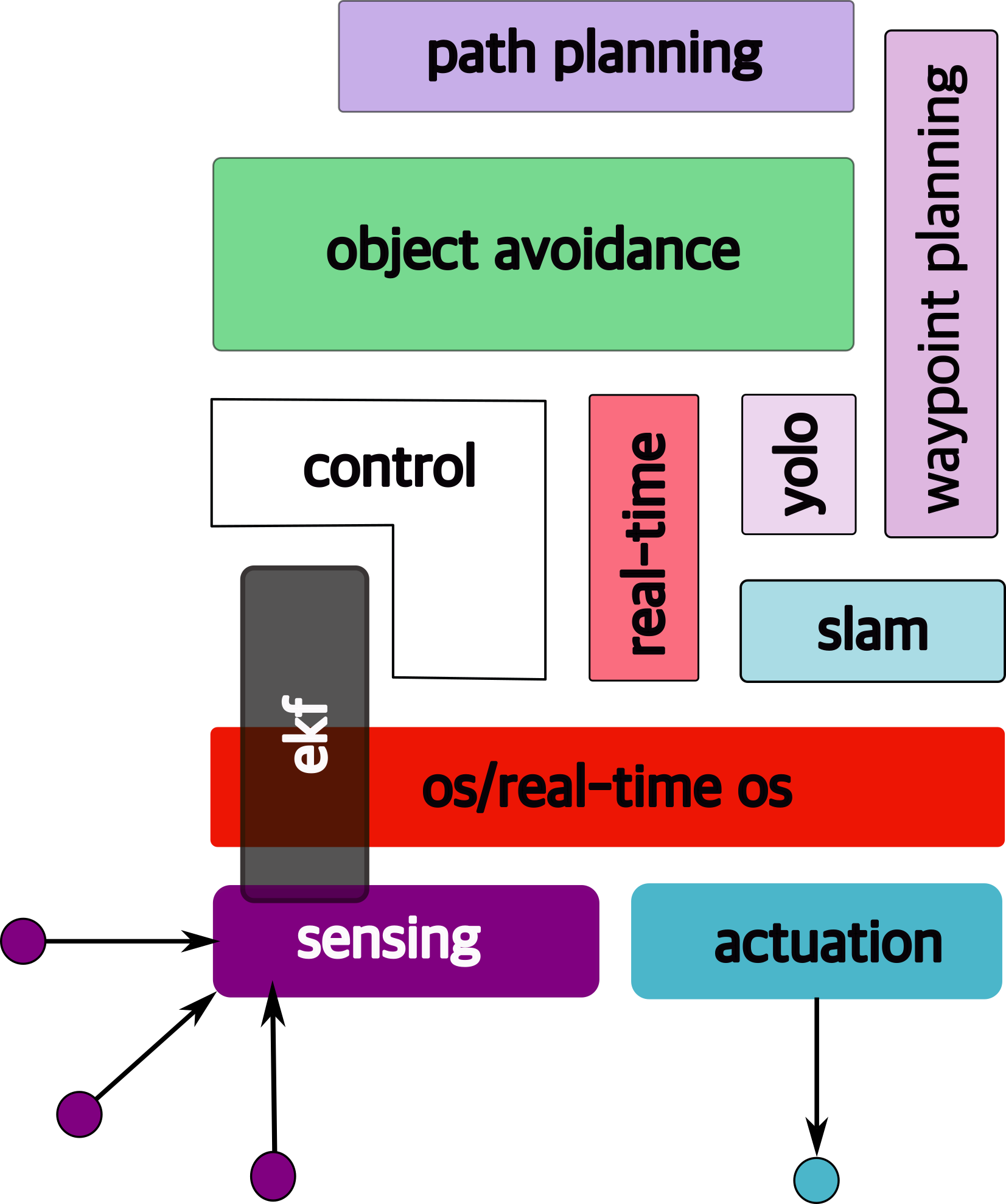

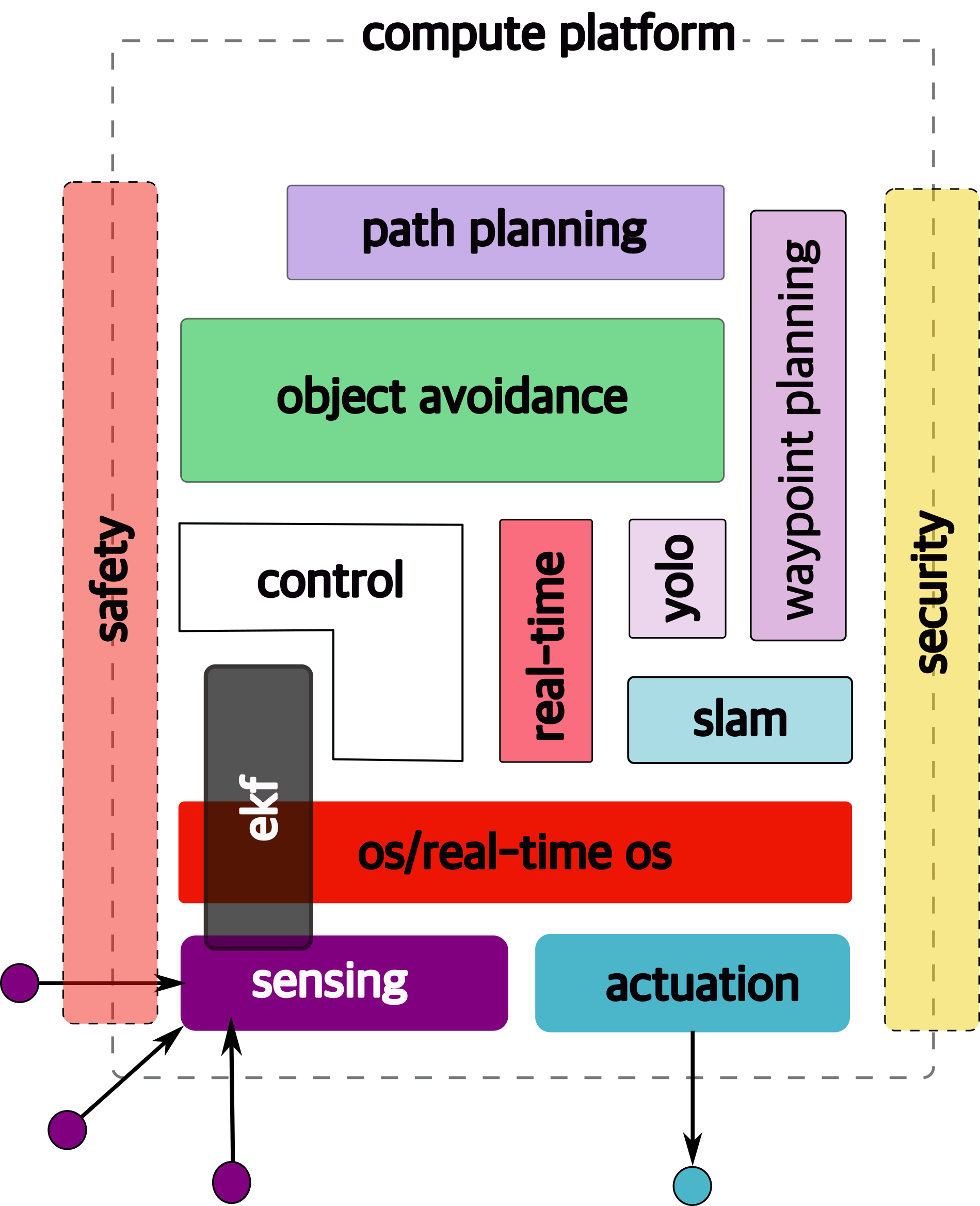

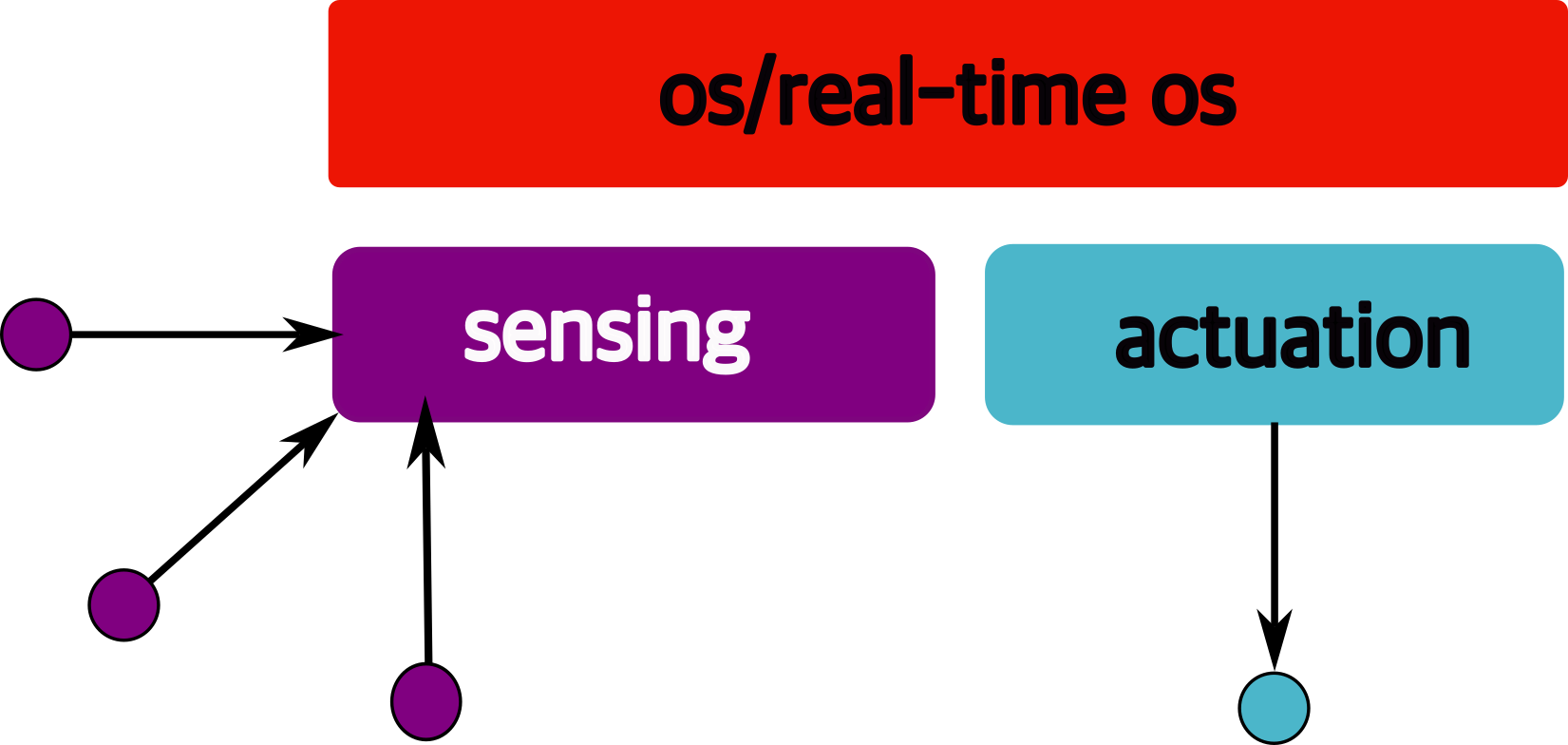

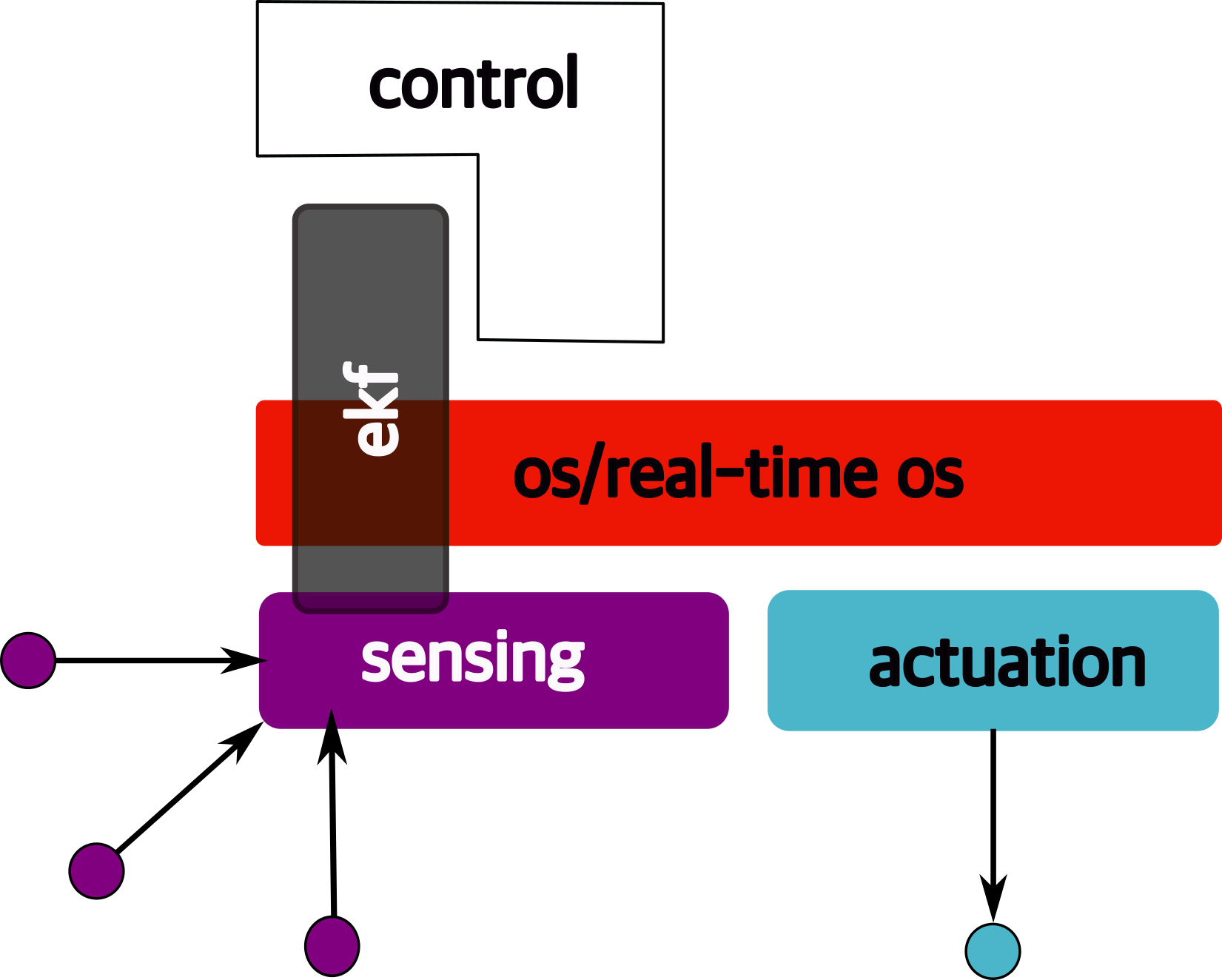

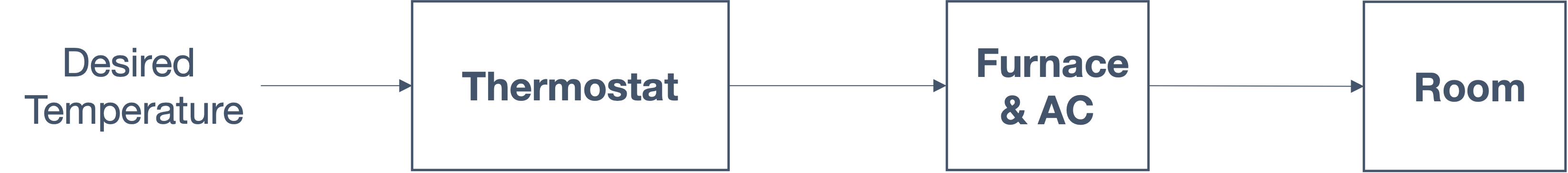

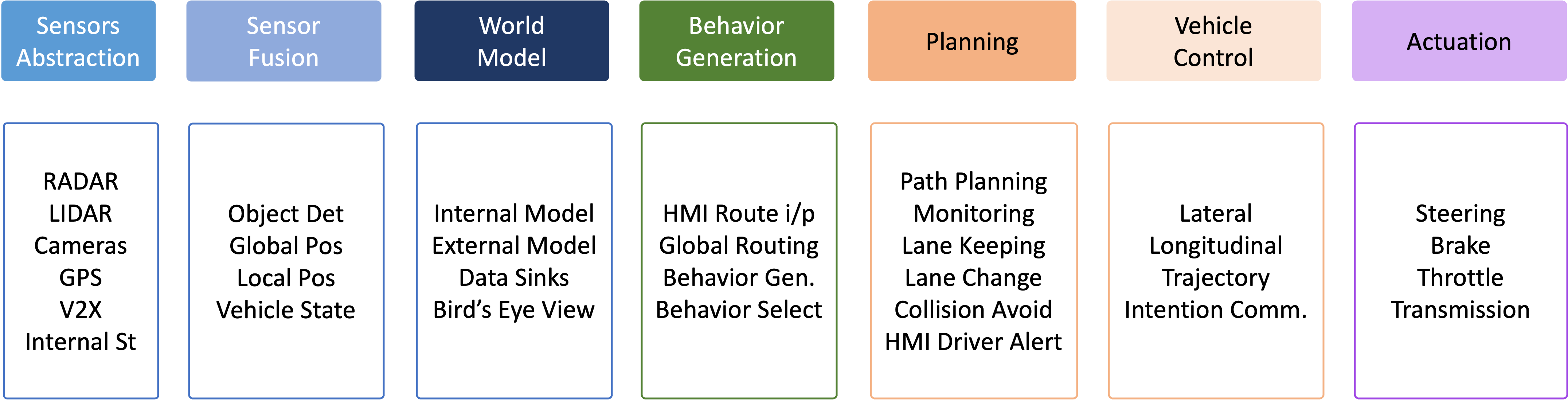

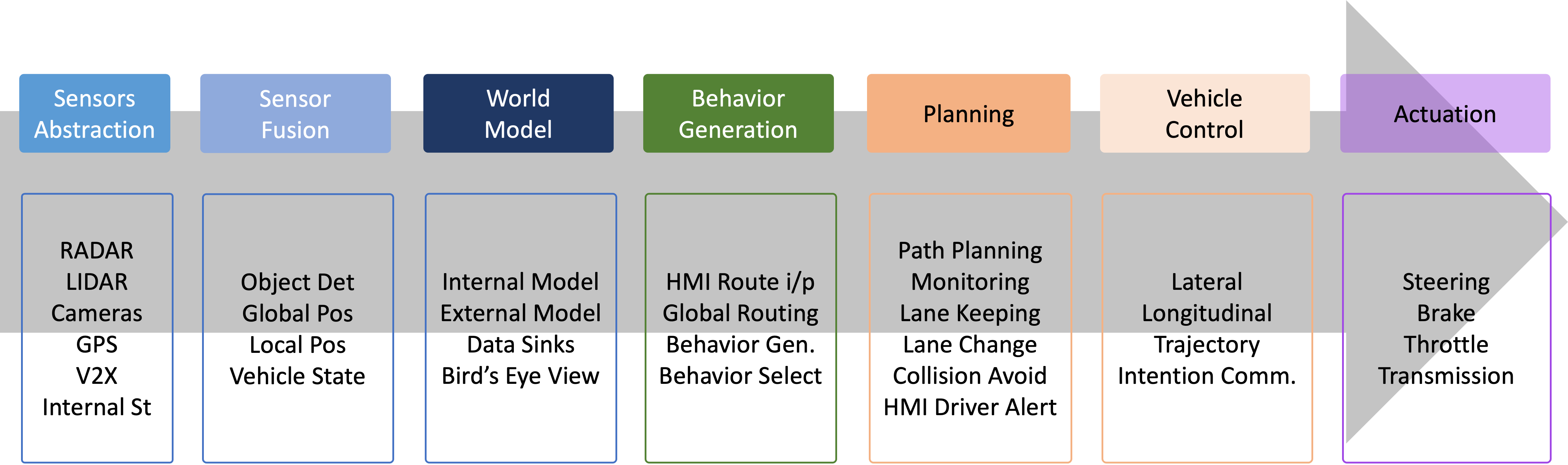

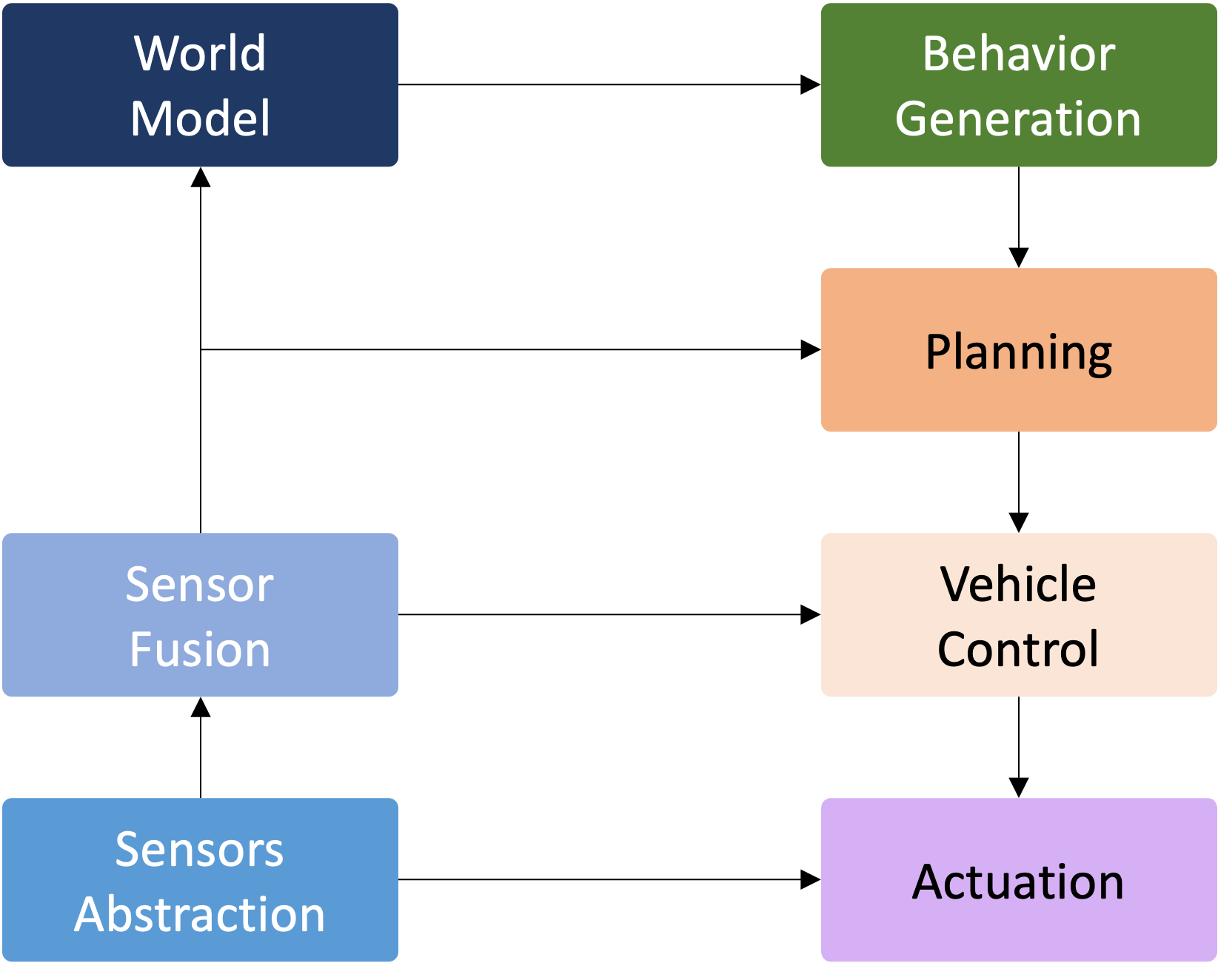

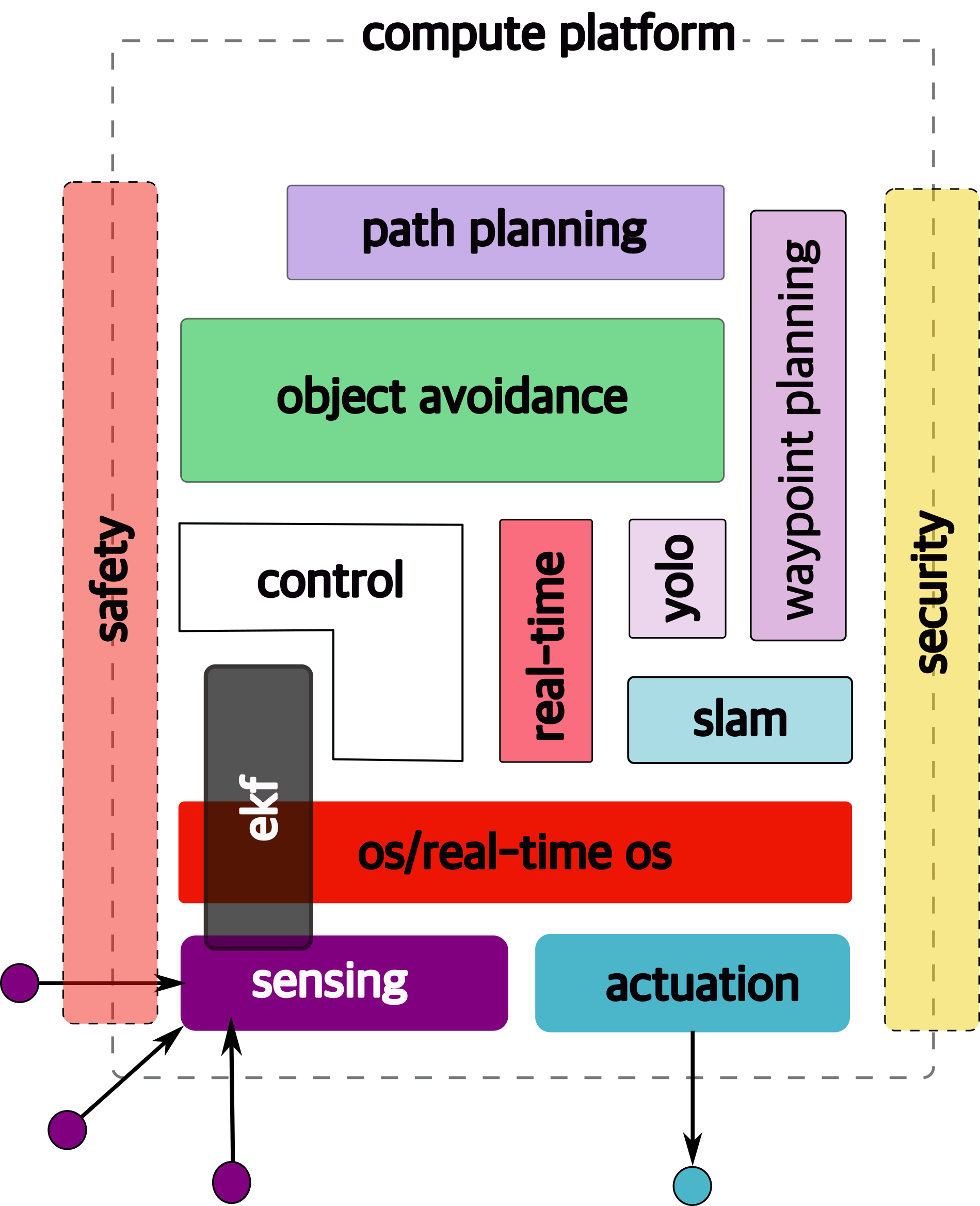

1.6 Overview/Architecture of Autonomous Systems

So far, we have (briefly) talked about…

Sensing:

Actuation:

But the system includes…an operating system (OS) in there

and it includes real-time mechanisms.

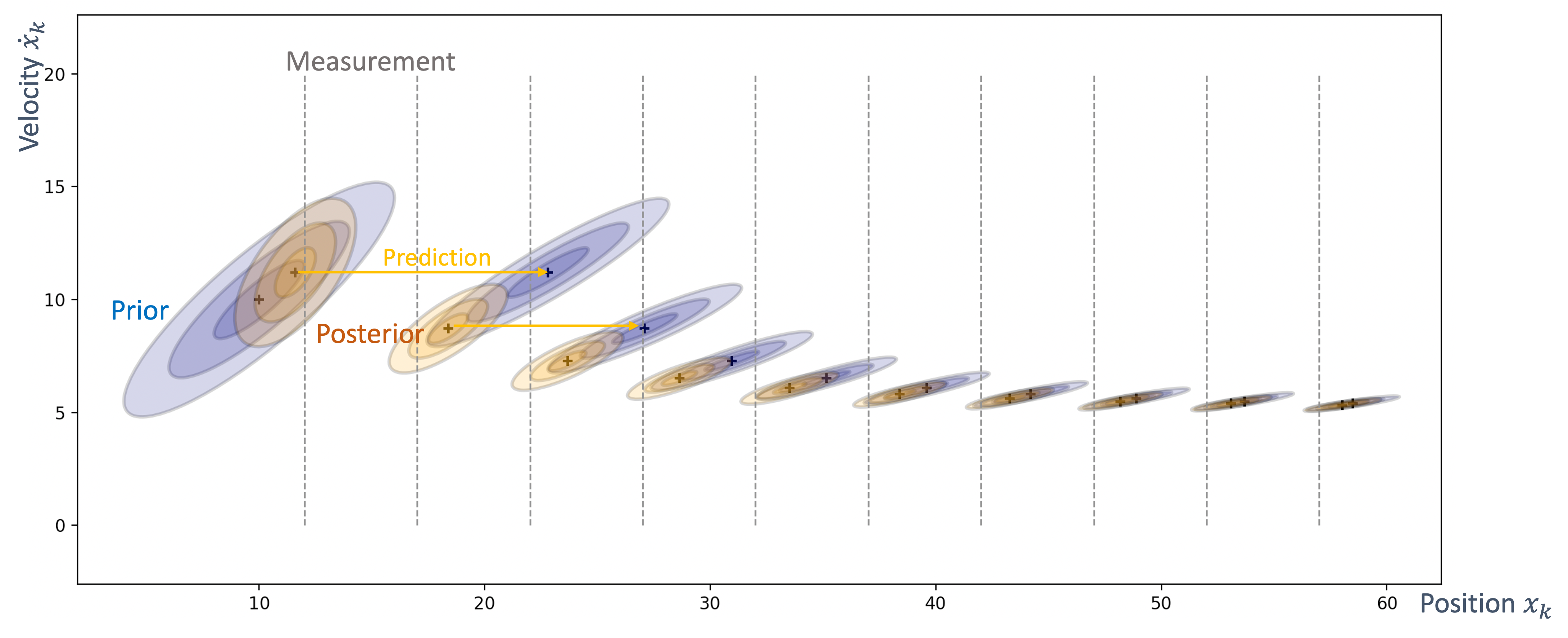

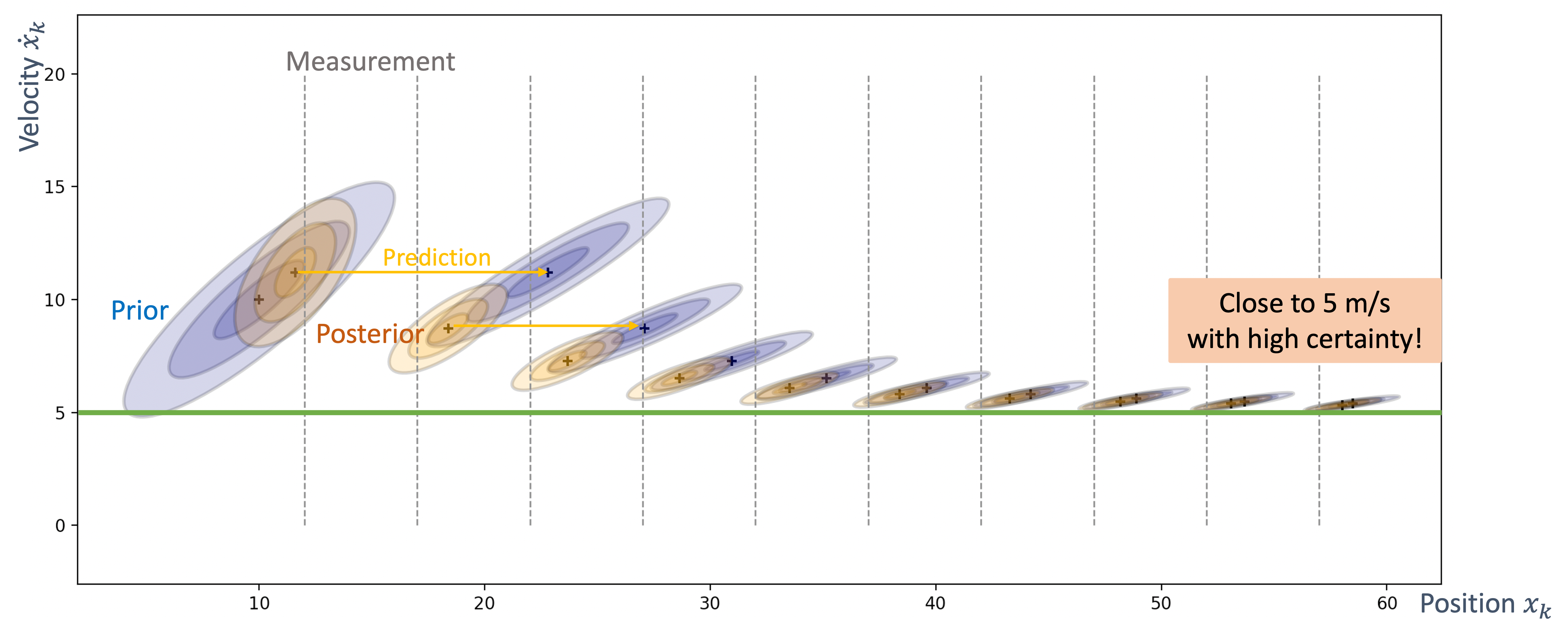

We have briefly discussed, EKF:

note: ekf is versatile; can be used for sensor fusion, slam, etc.

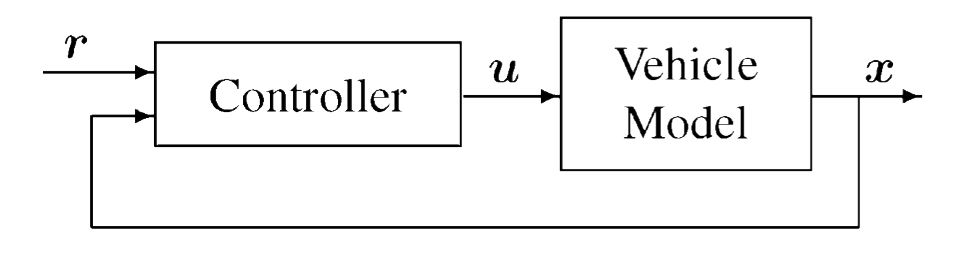

All of it integrates with…control:

There are some real-time functions in there…

like braking, engine control.

Question: if we design such a system…

is it “autonomous”?

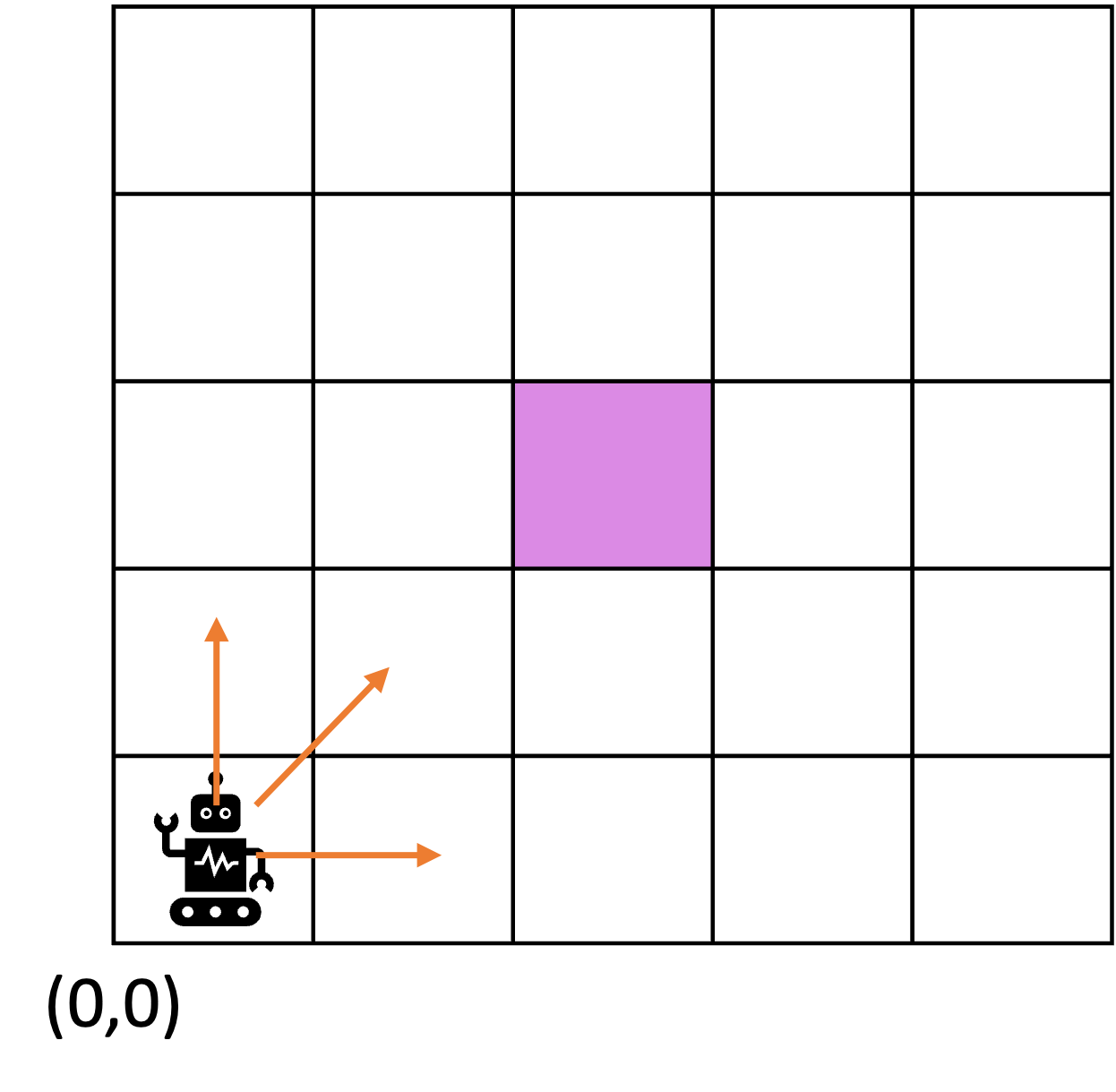

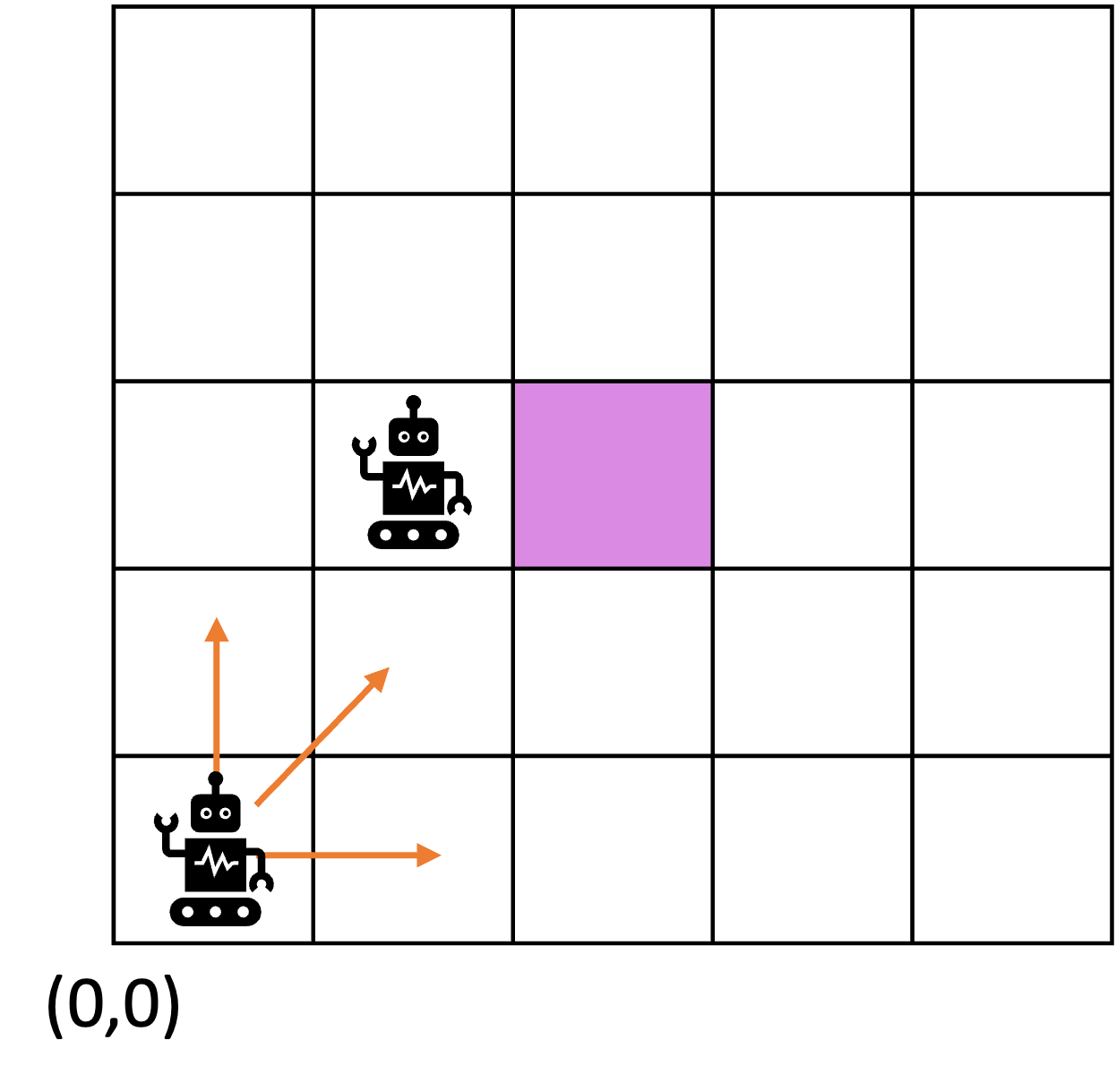

We are missing some “higher order” functionss from the perspective of the autonomous system:

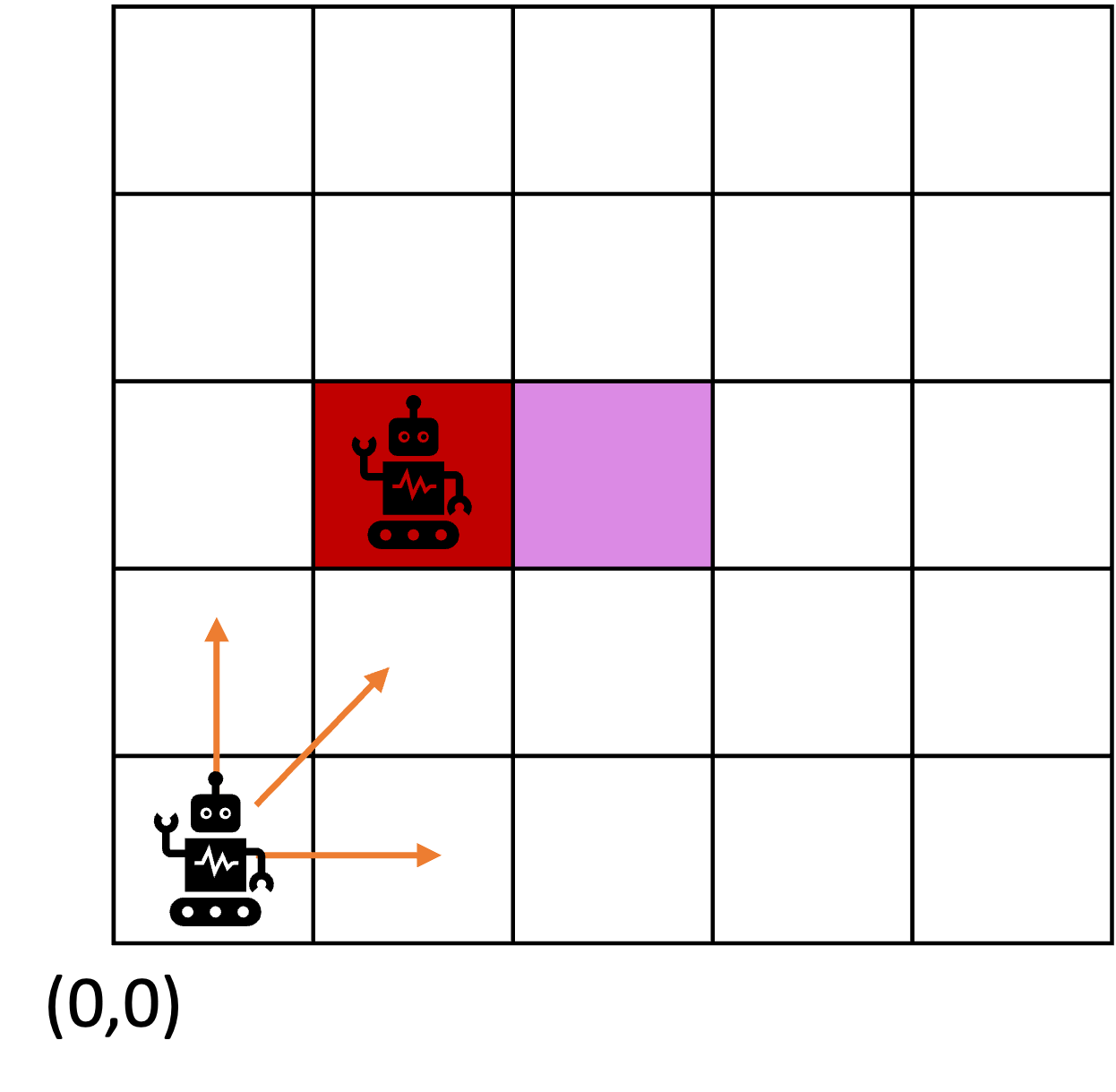

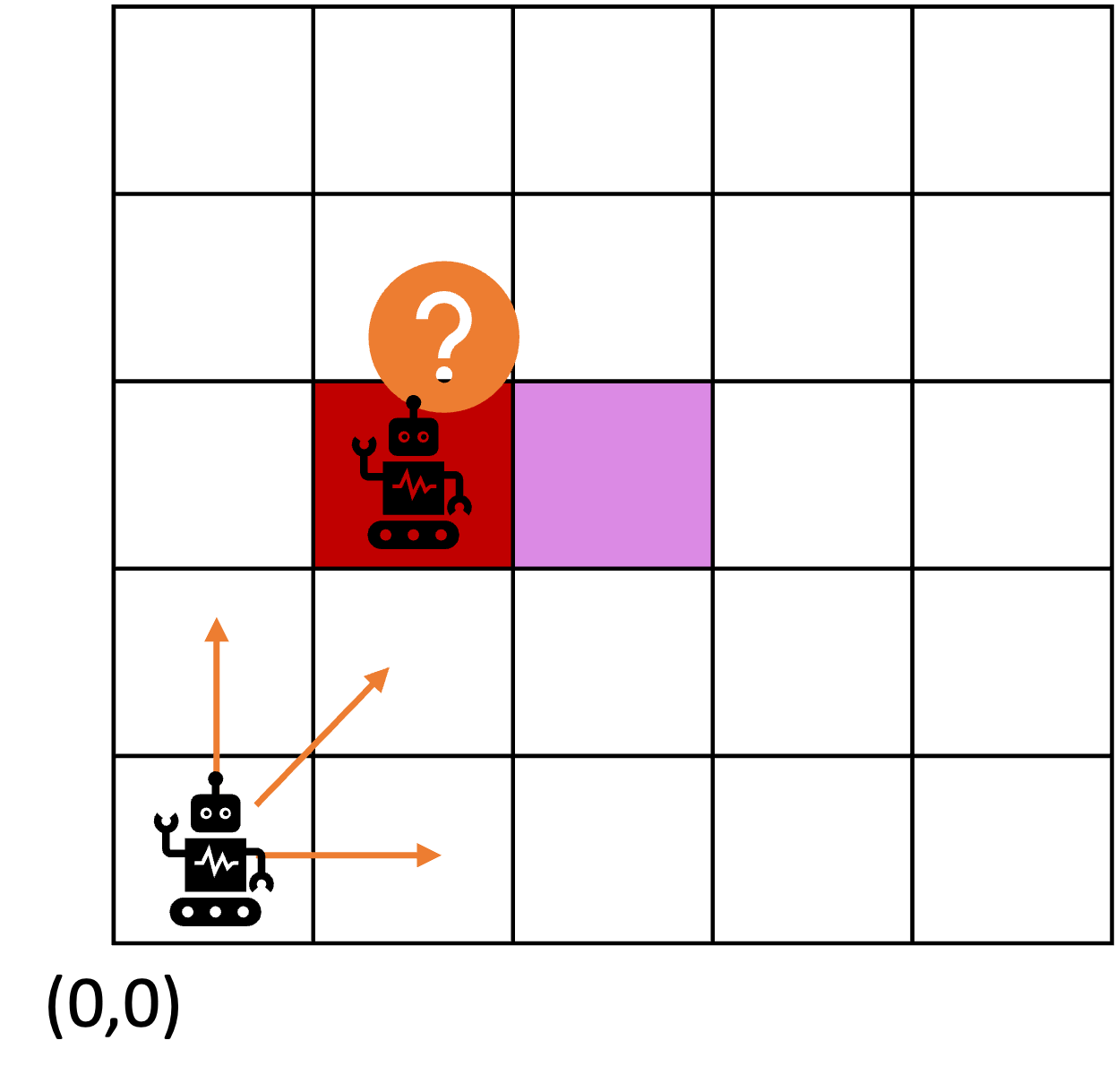

- where am I?

- where do I need to go?

- how do I get there?

- what obstacles may I face?

- how do I avoid them?

let us not forget the most important question of all…

why is gamora?

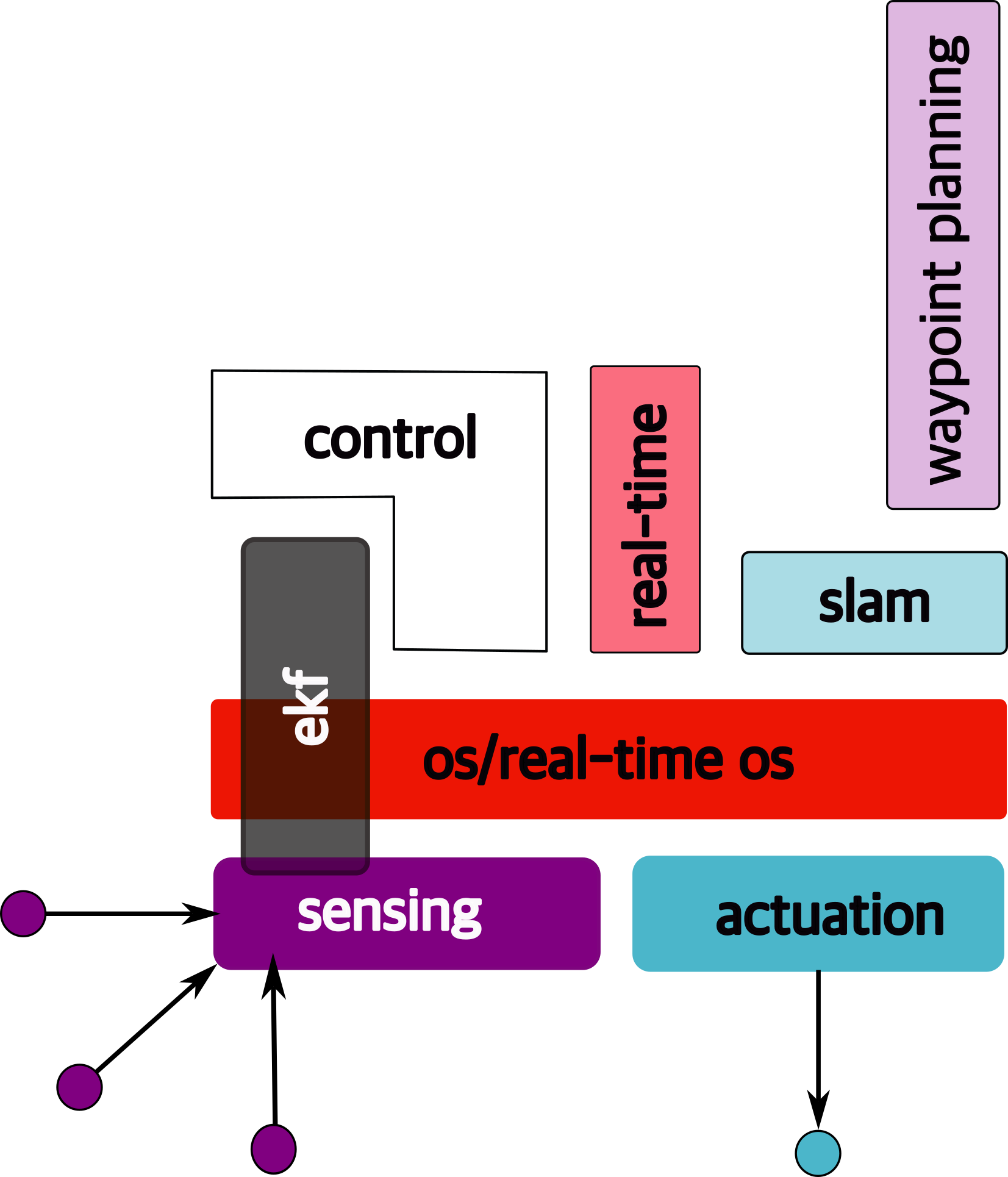

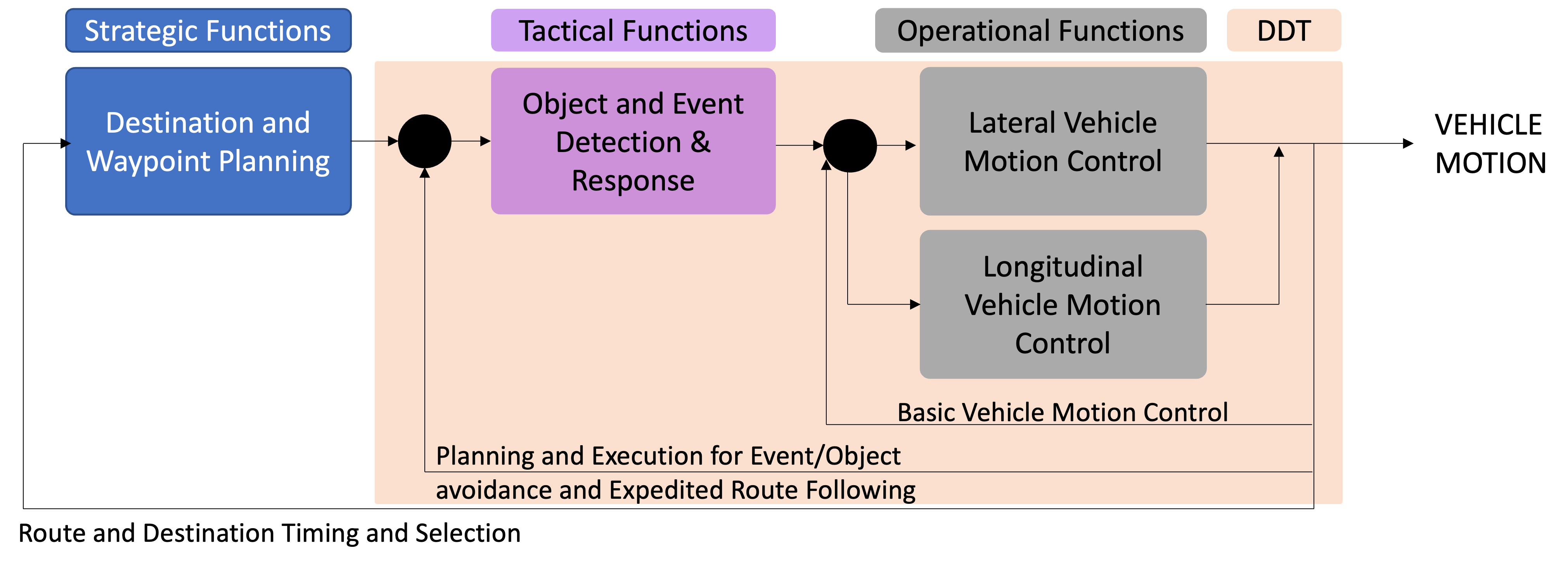

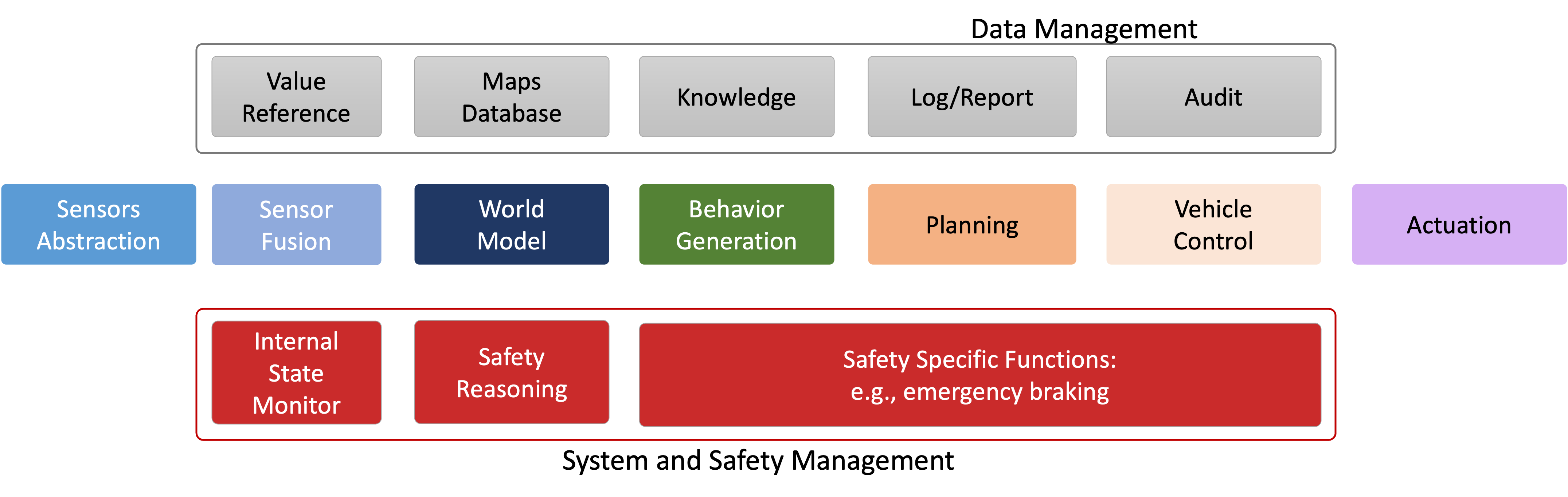

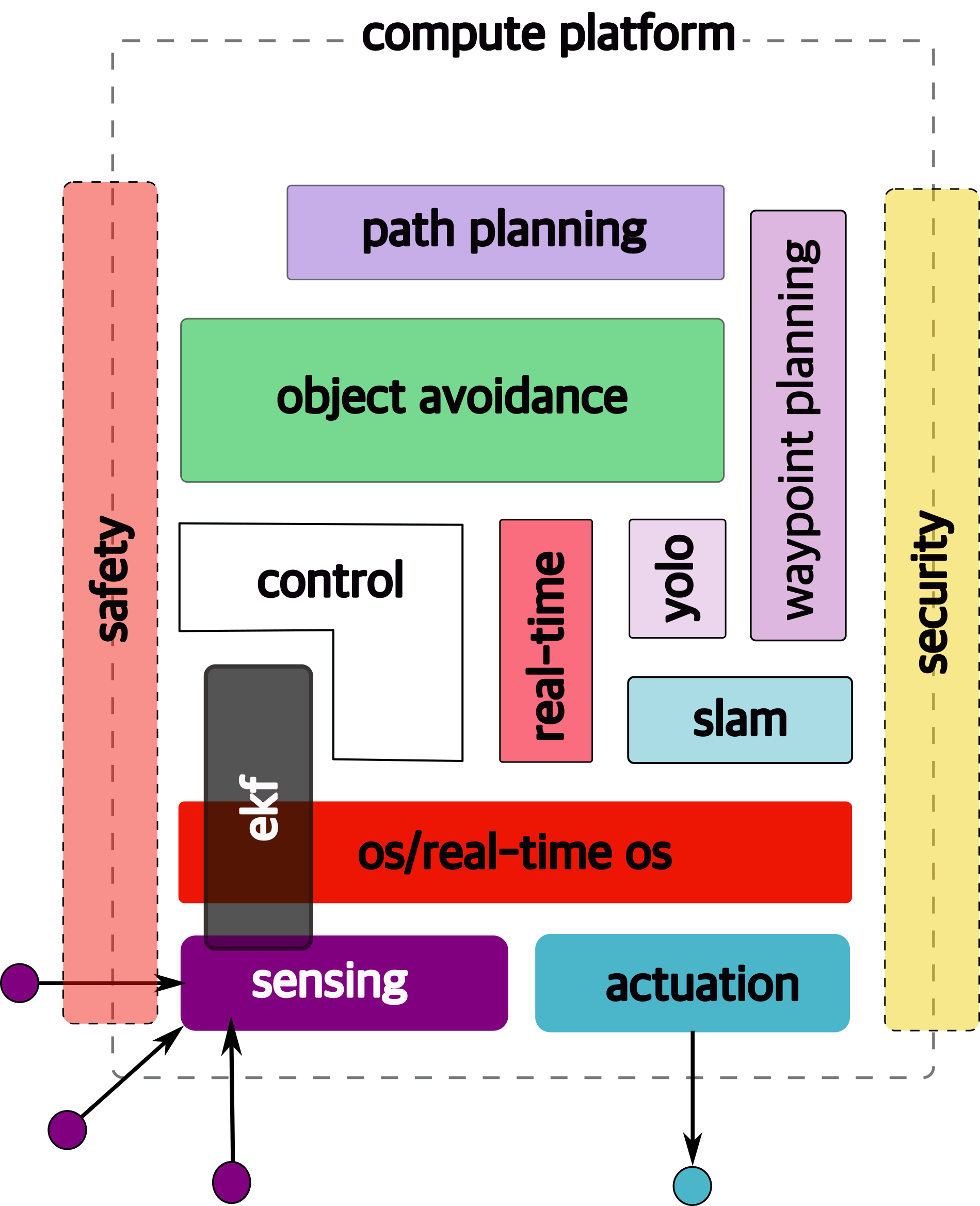

1.6.1 high-order functions

In order to answer the following, we need additional functionality. Let us go through what that might be.

|

|

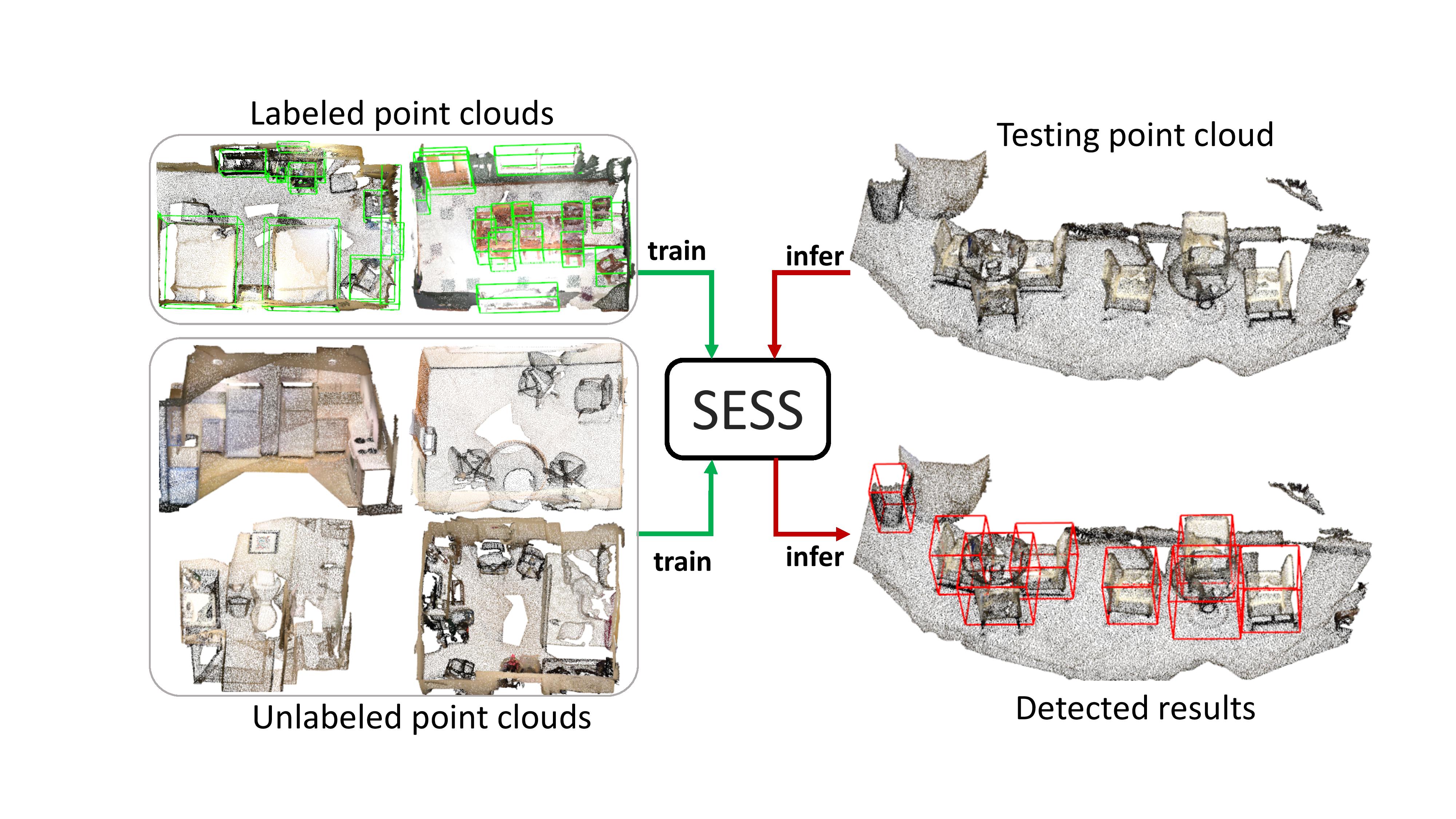

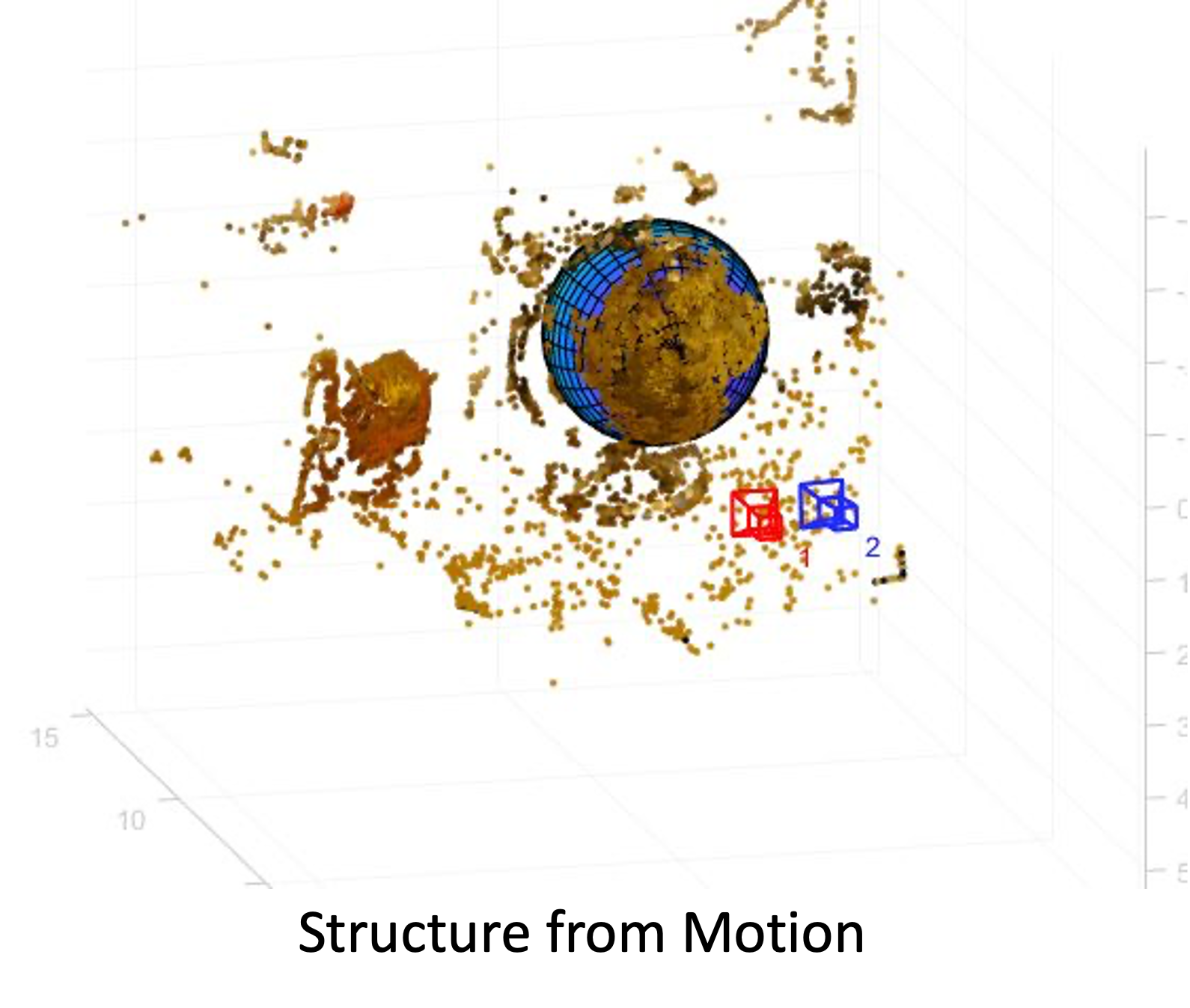

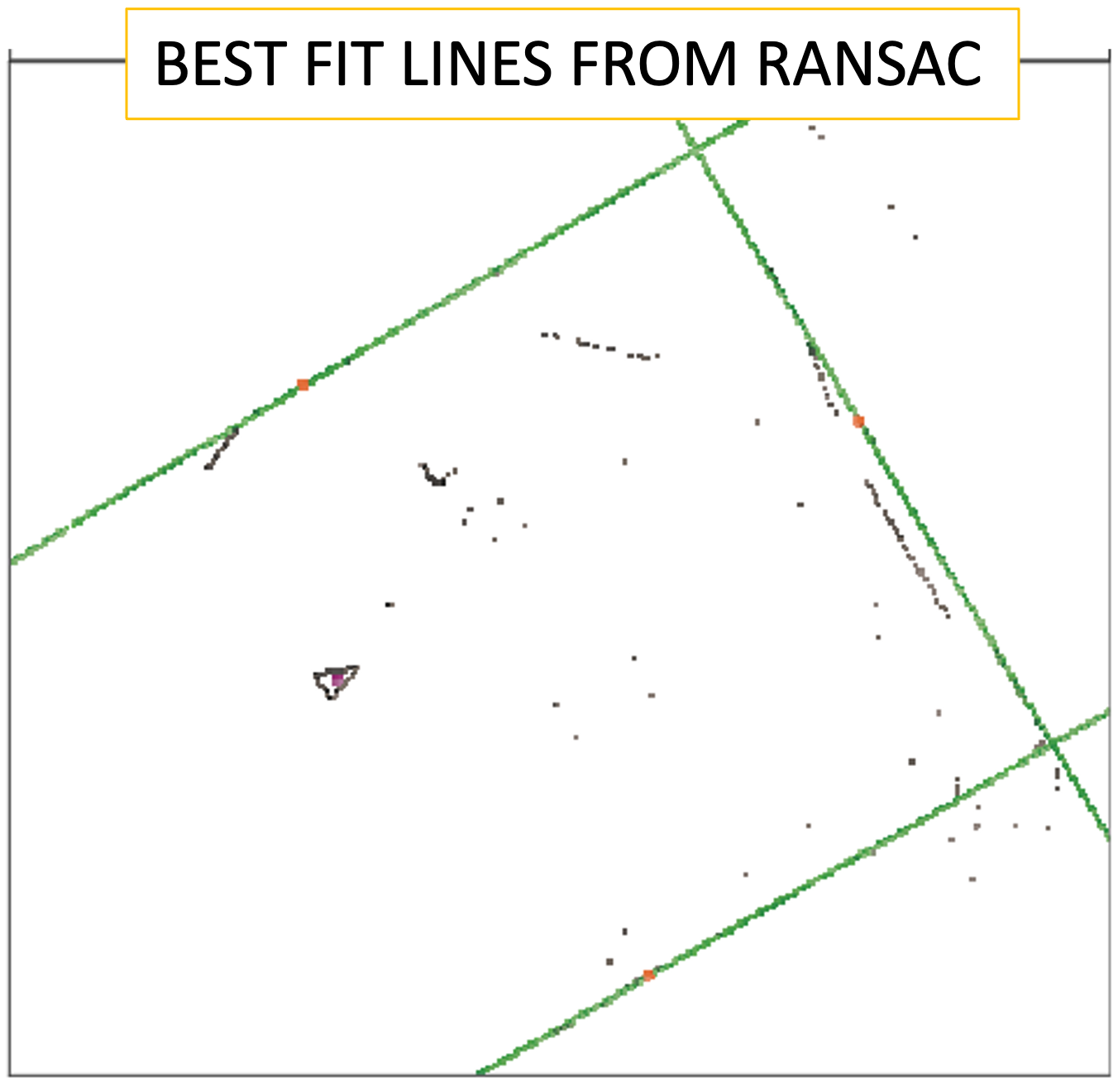

1.6.2 slam

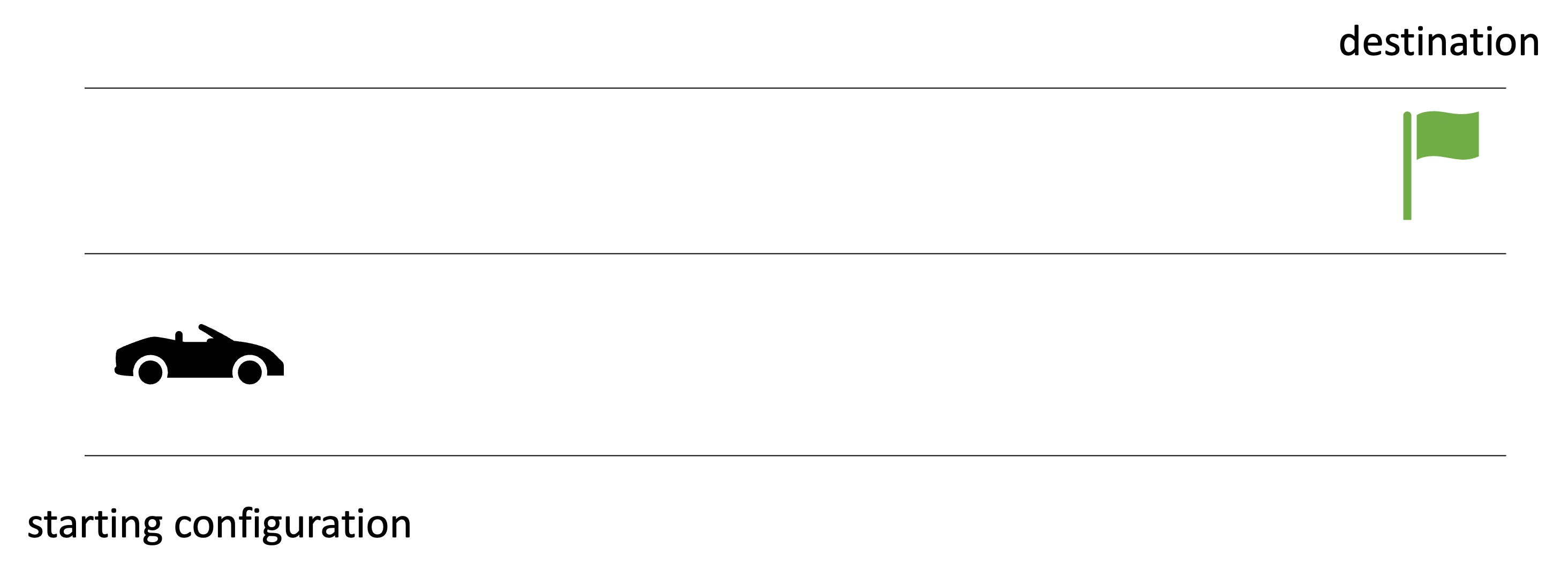

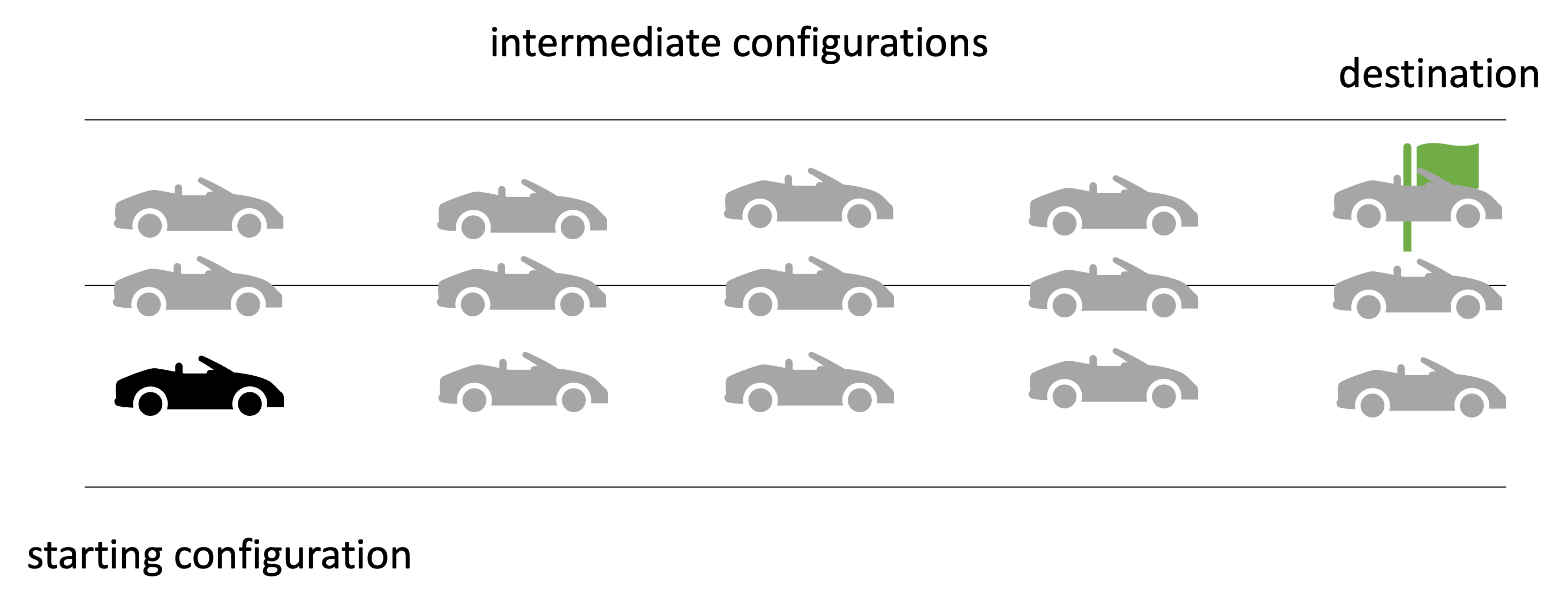

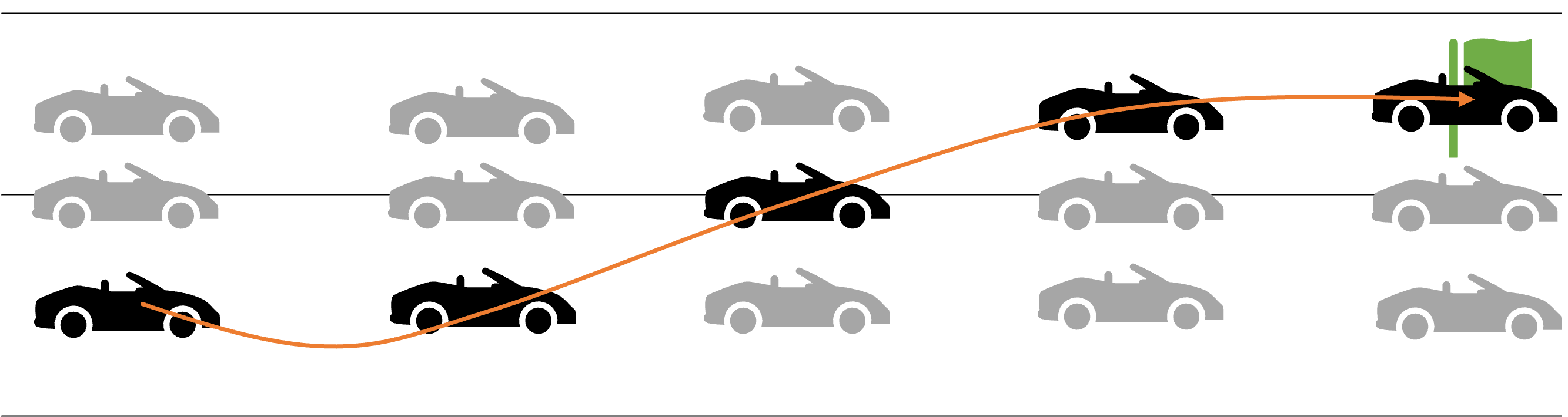

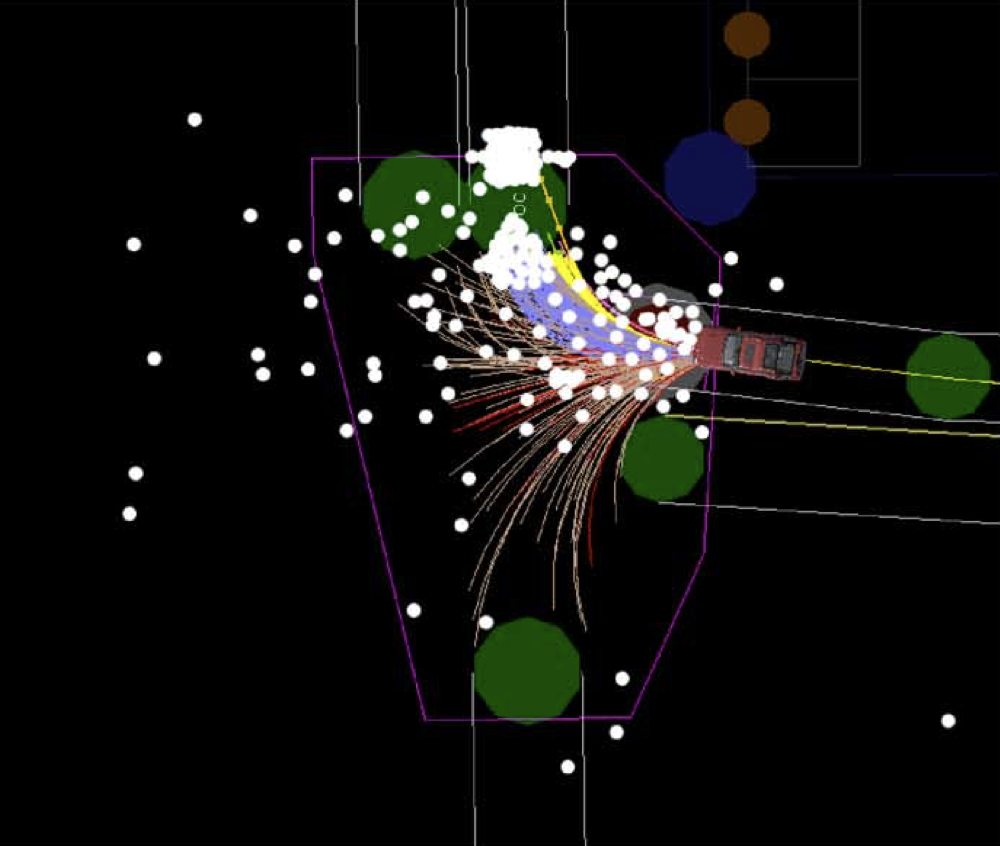

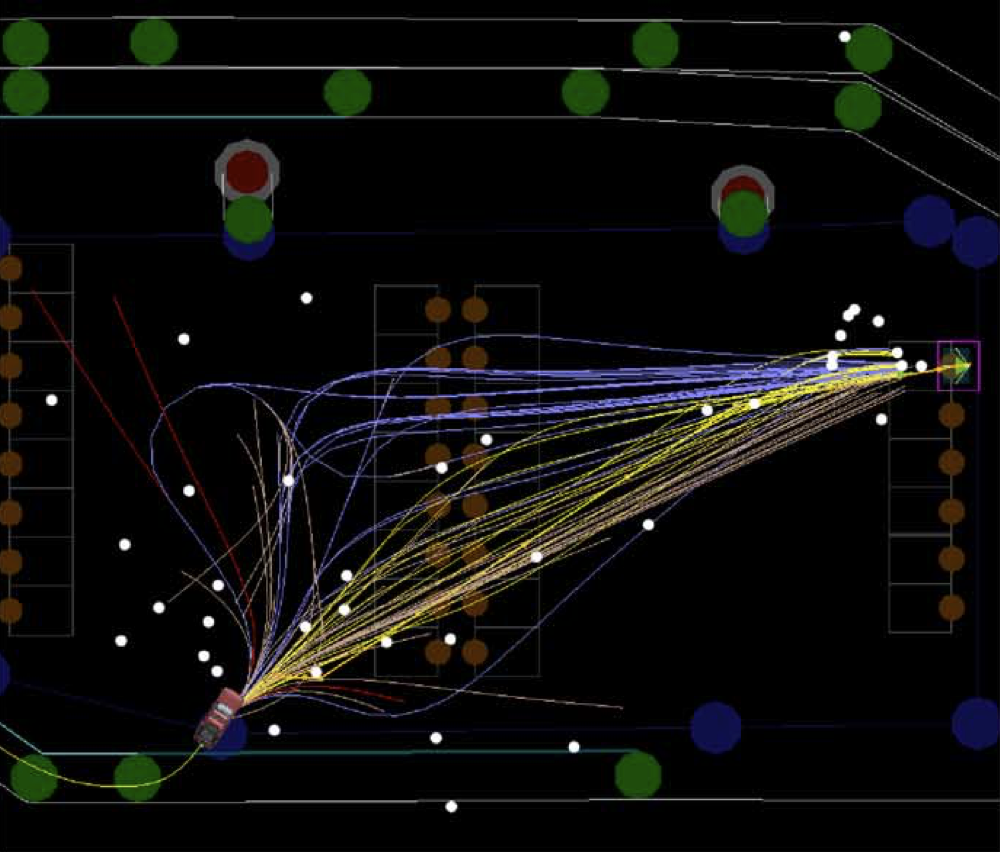

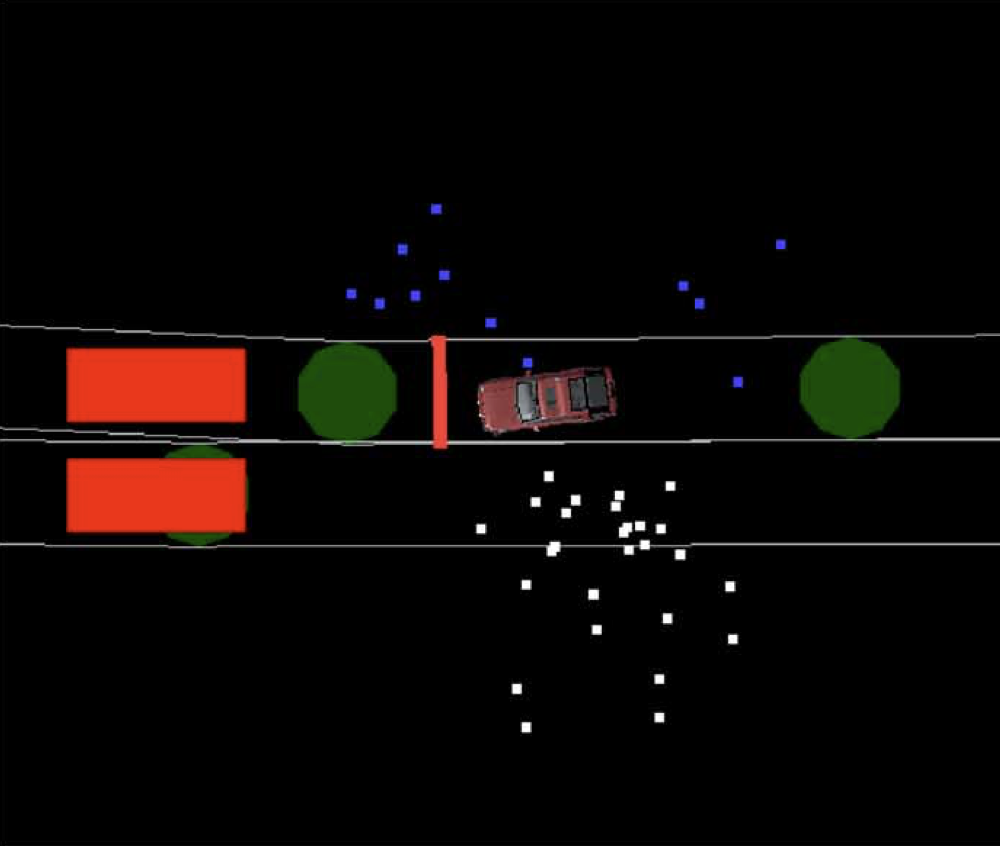

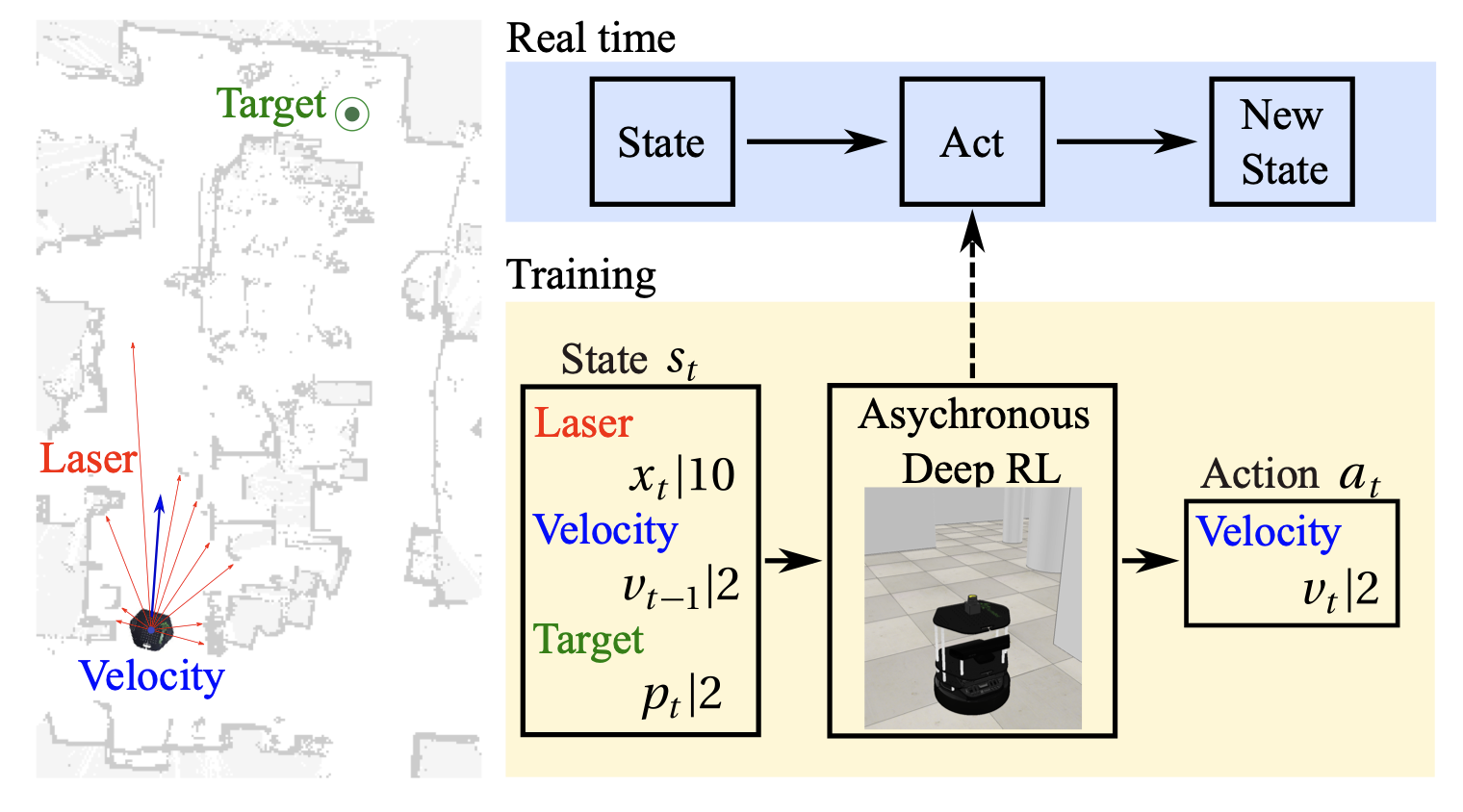

Simultaneous localization and mapping → figure out where we are.

1.6.3 waypoint detection

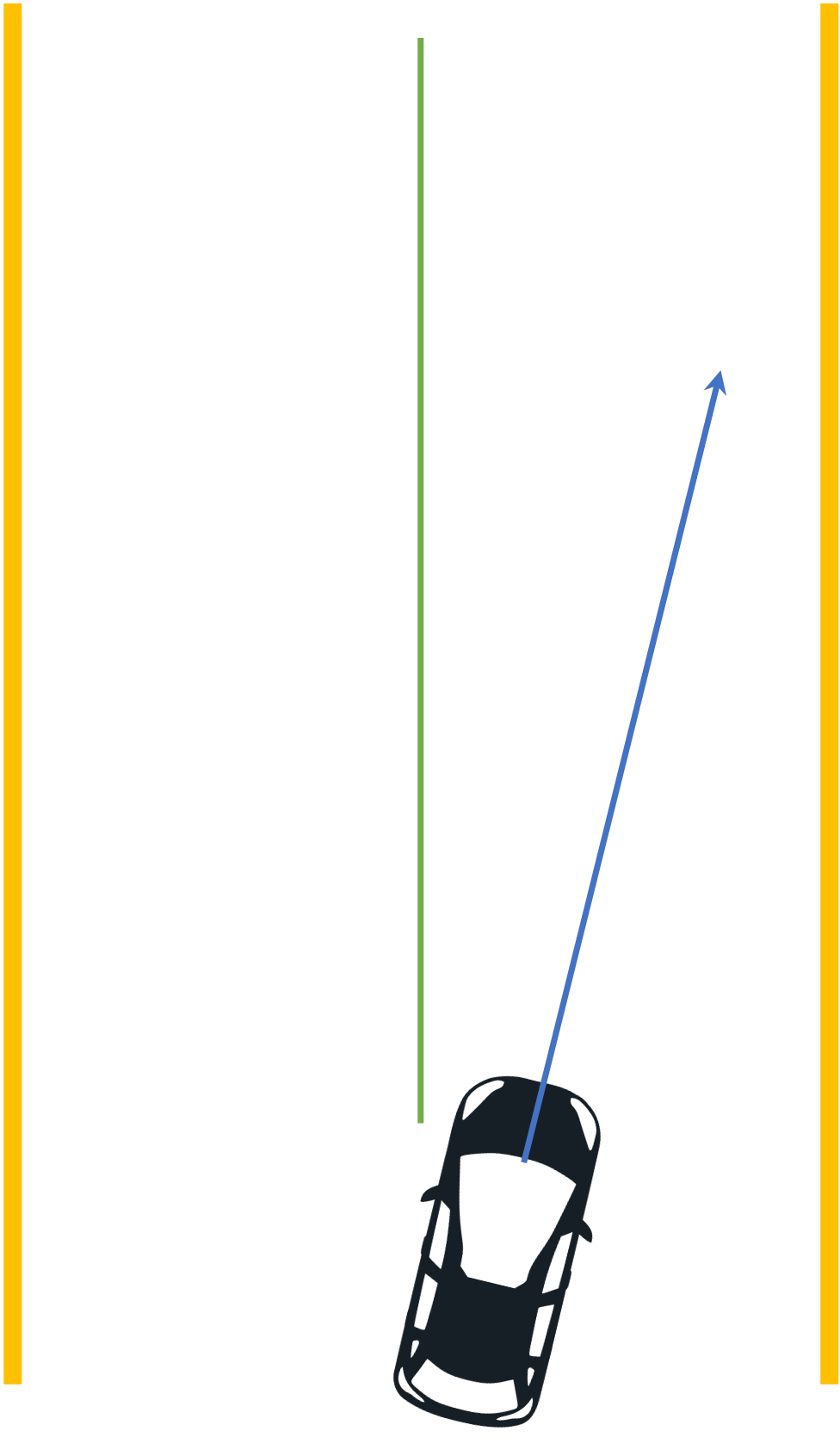

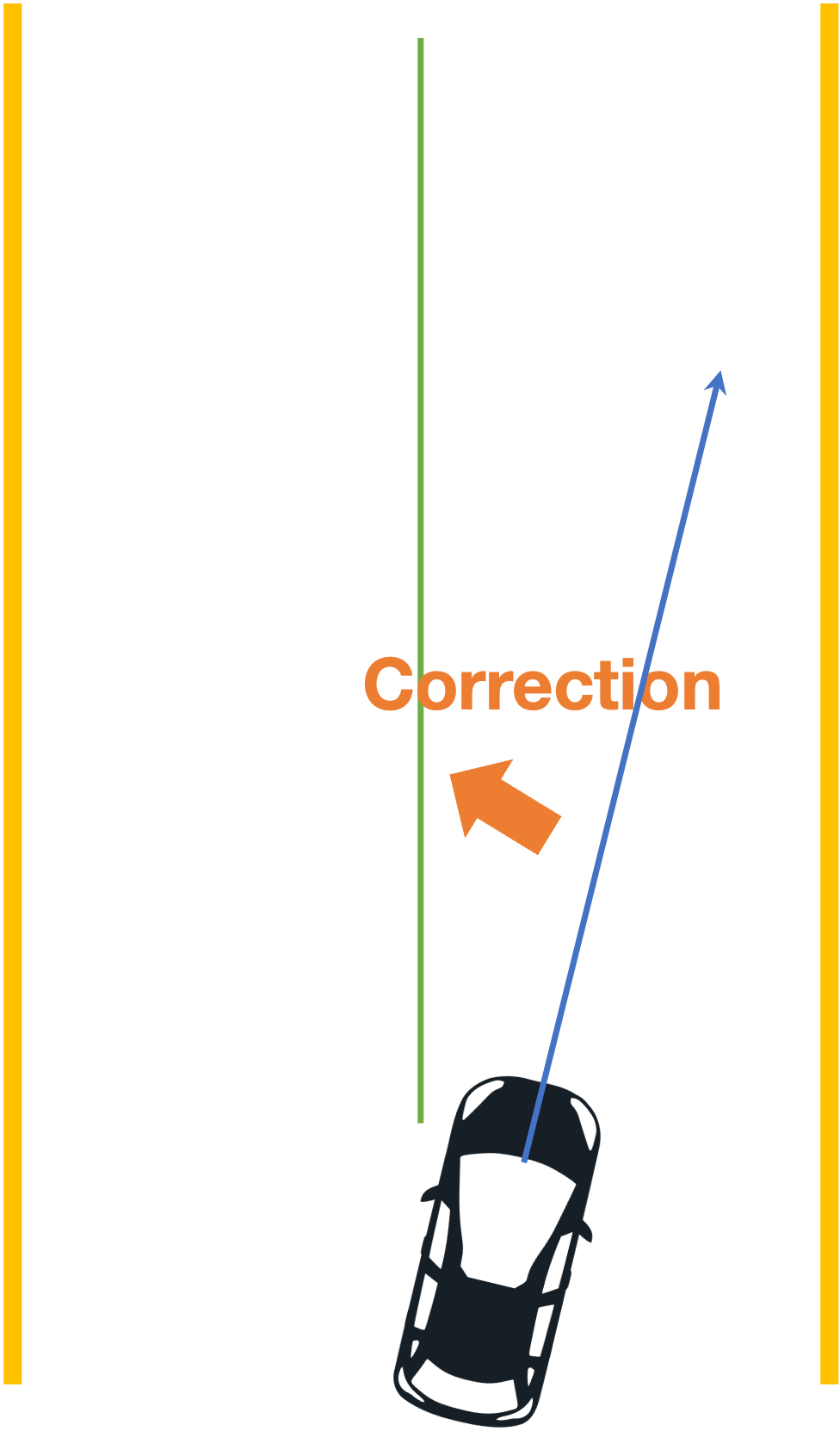

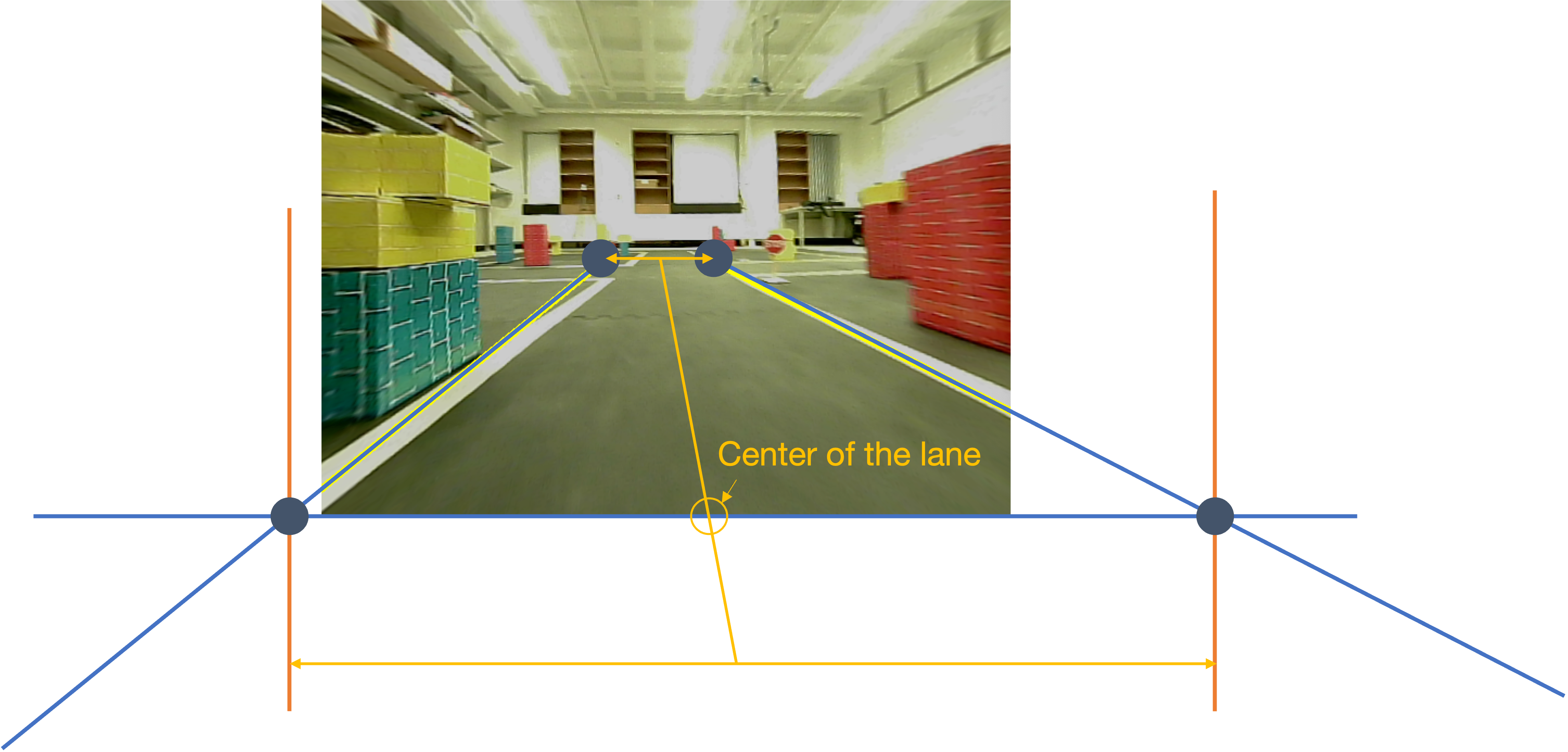

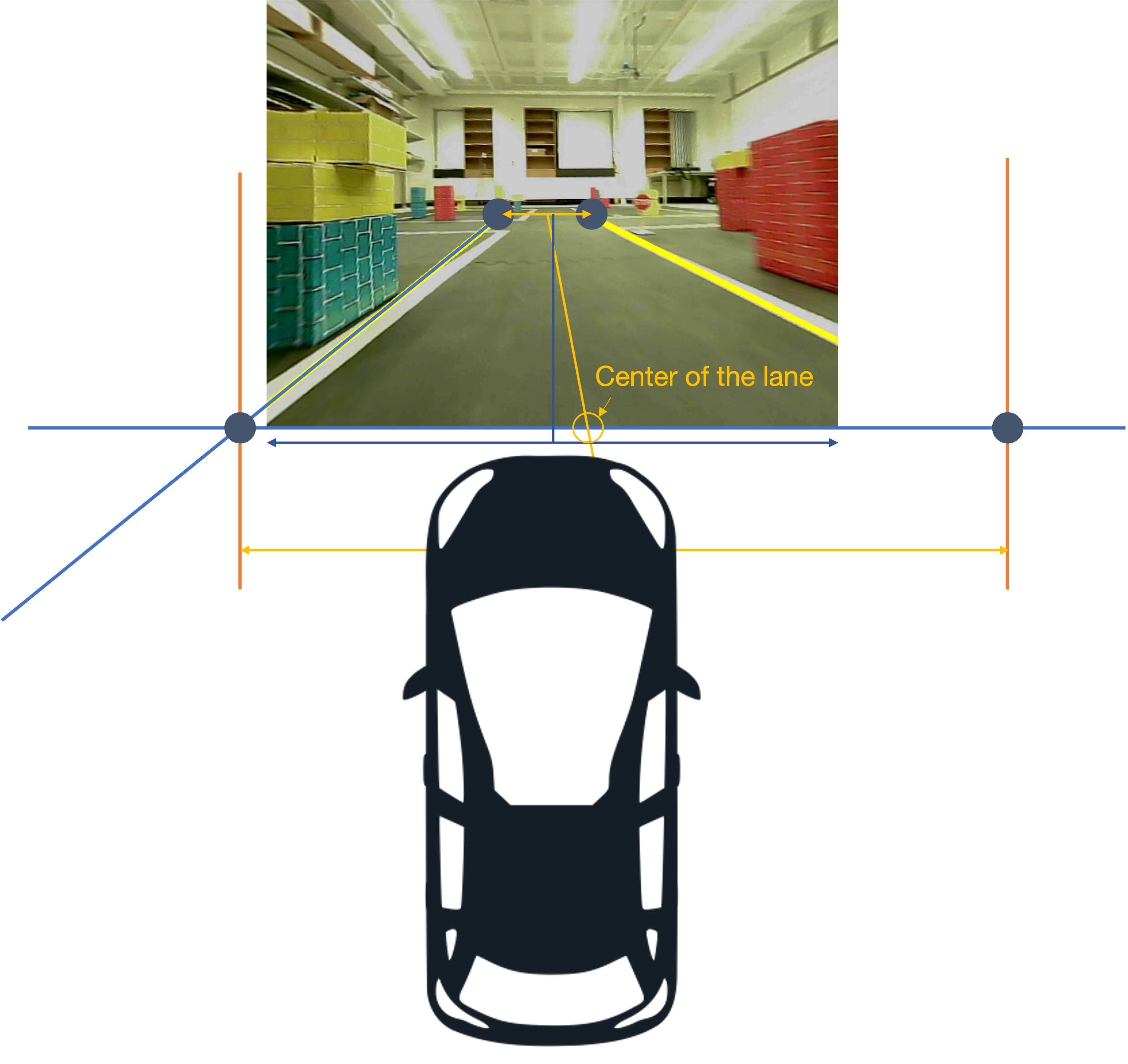

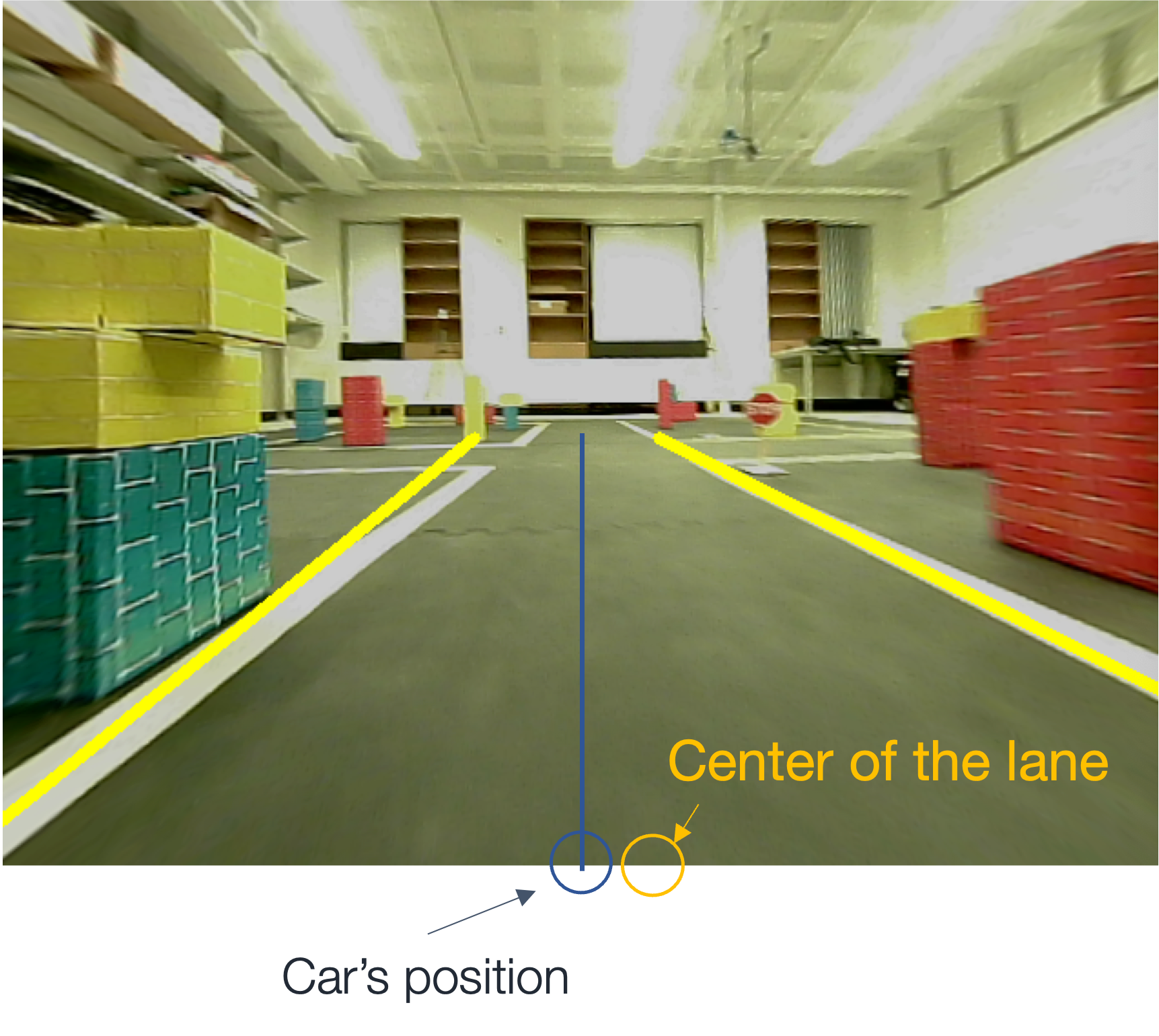

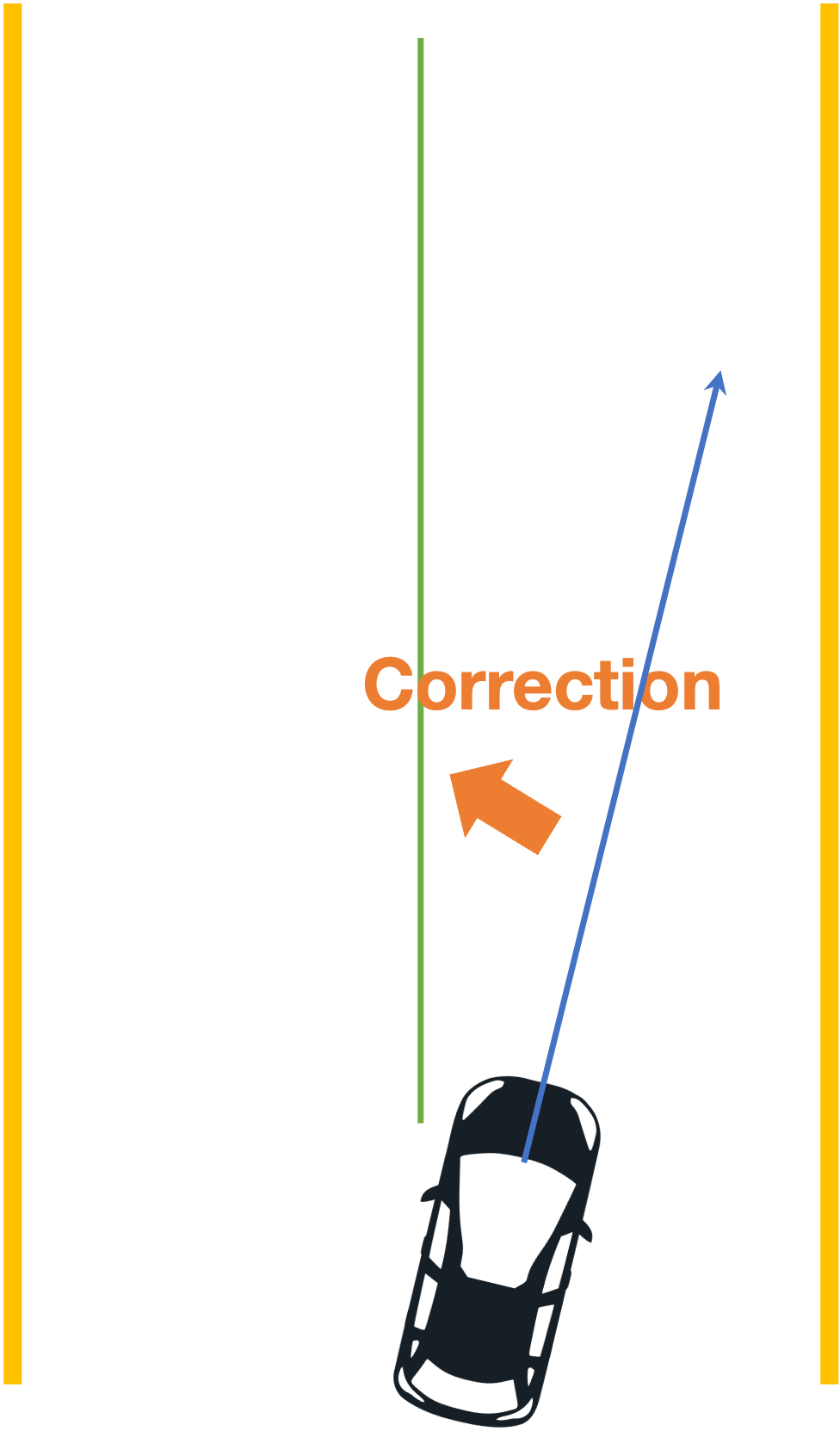

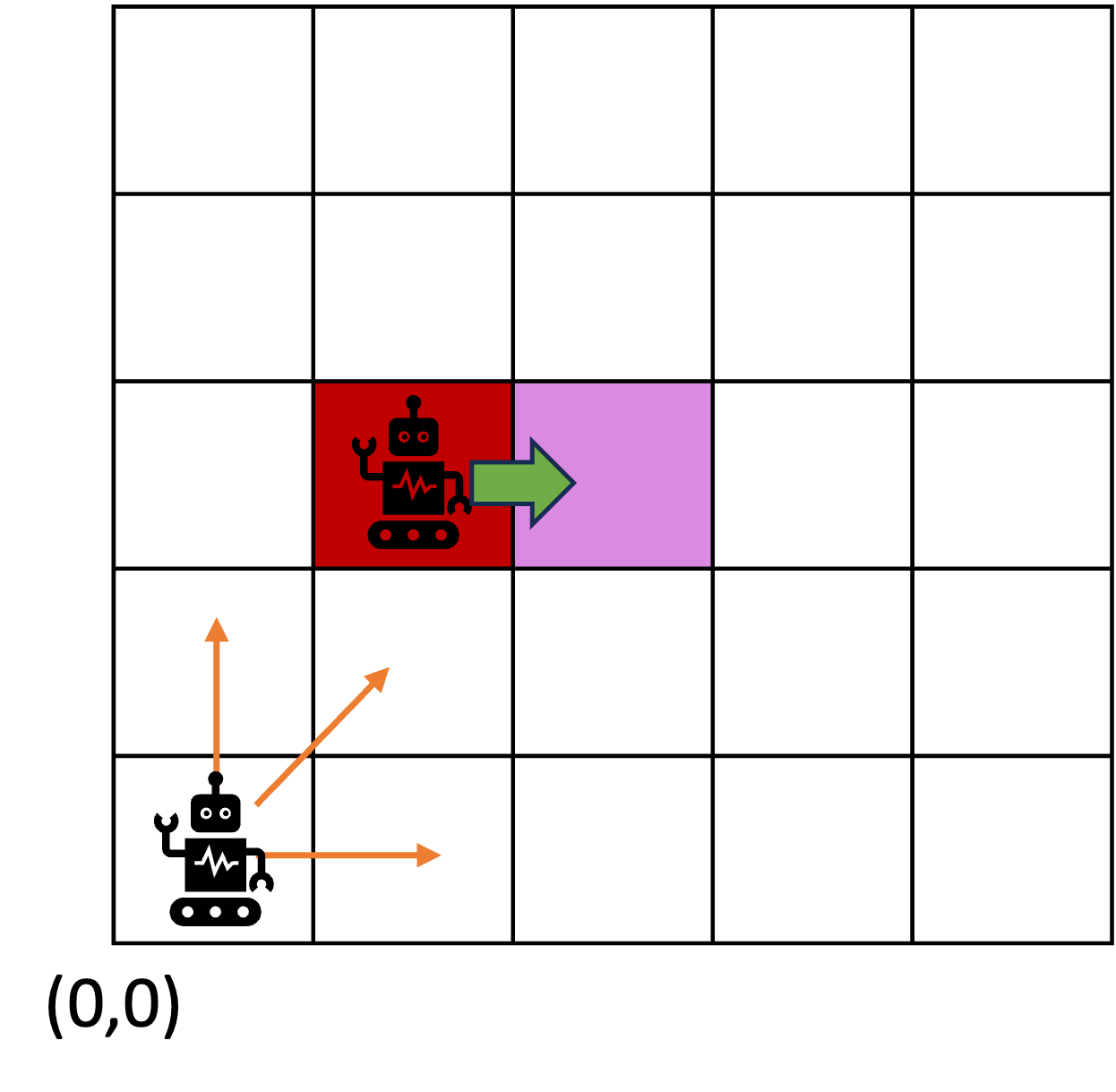

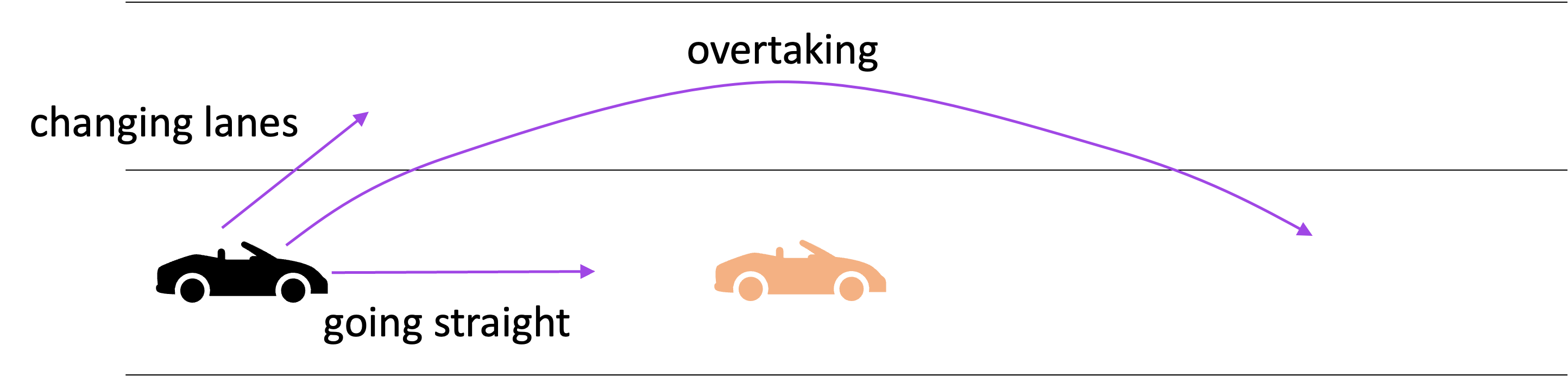

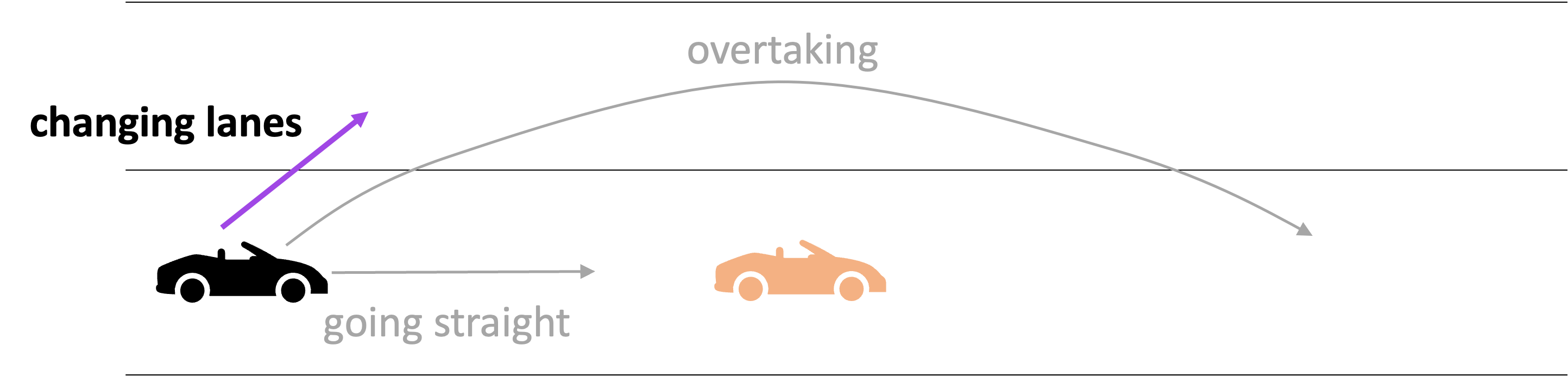

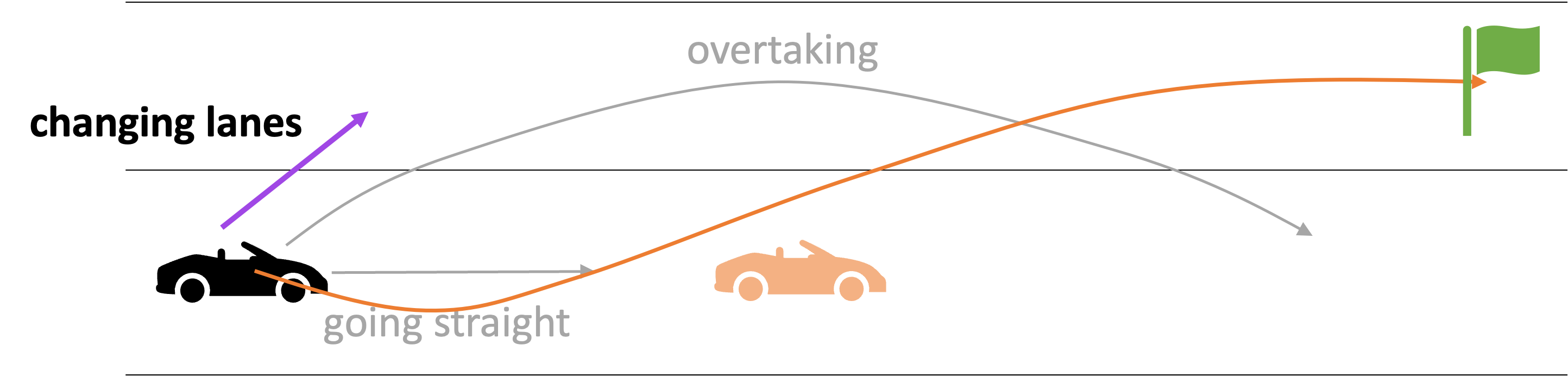

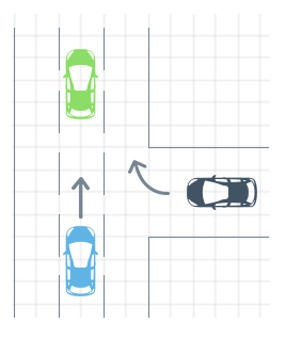

Understand how to move in the right direction at the micro level, i.e., find waypoints.

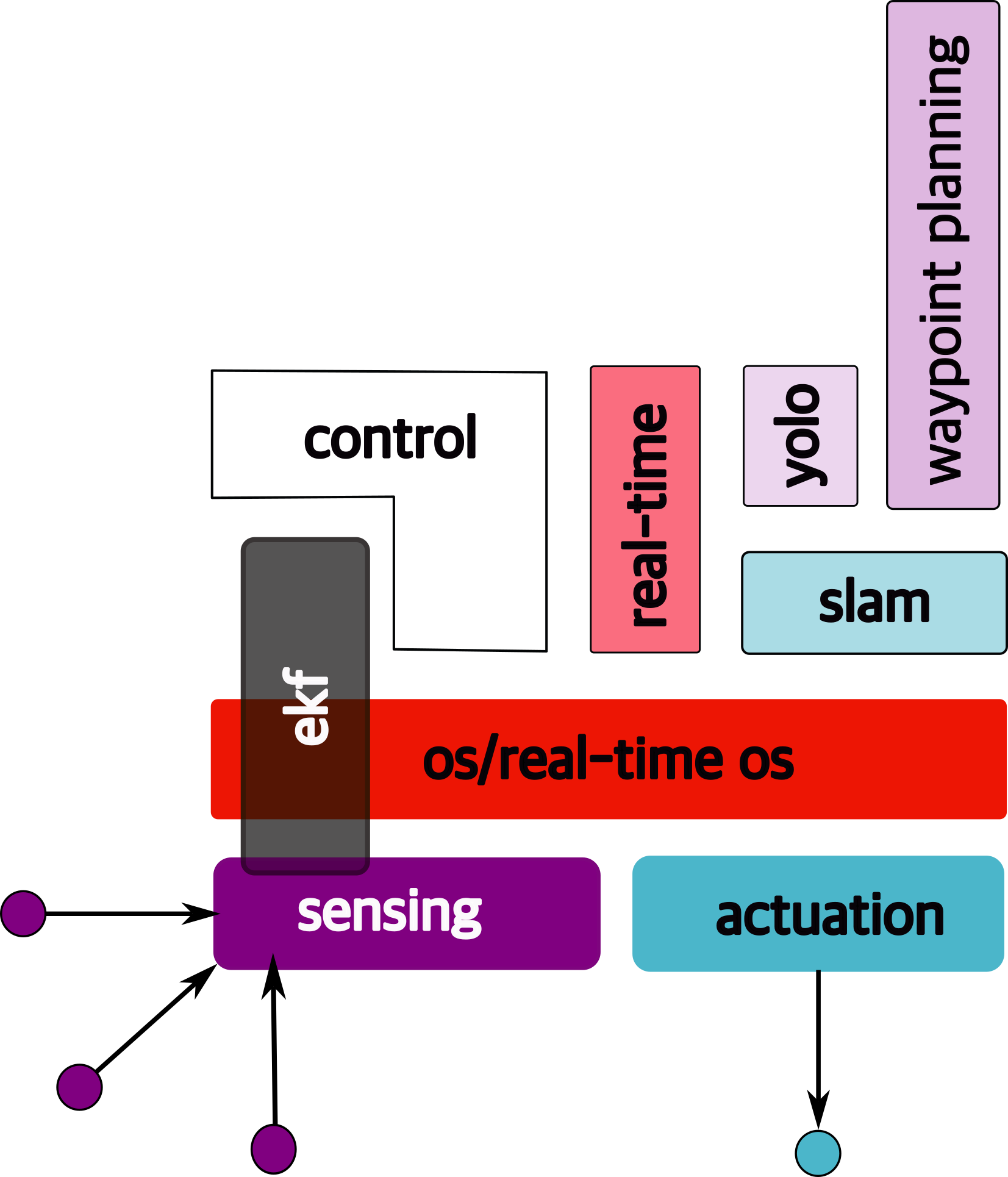

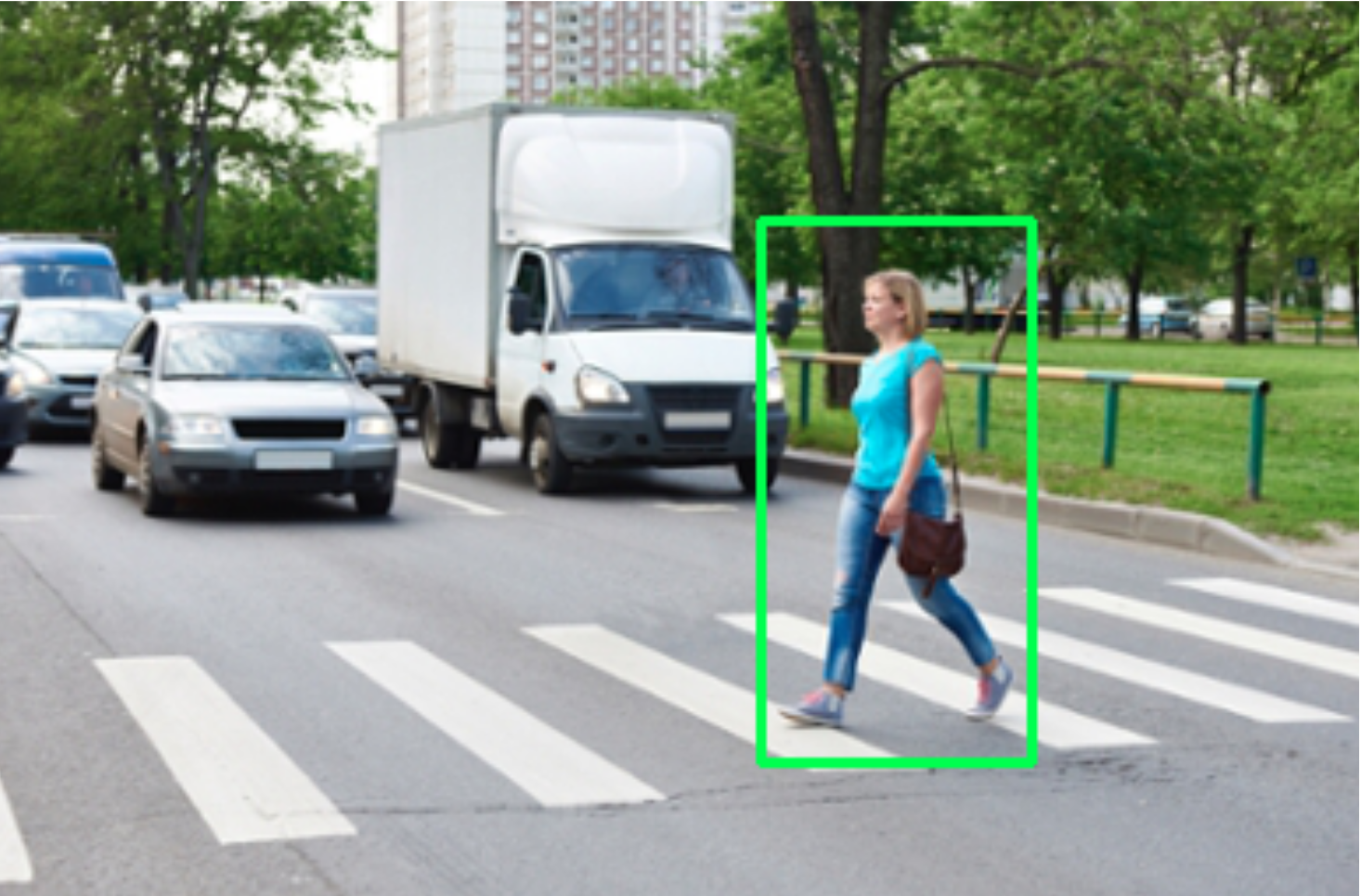

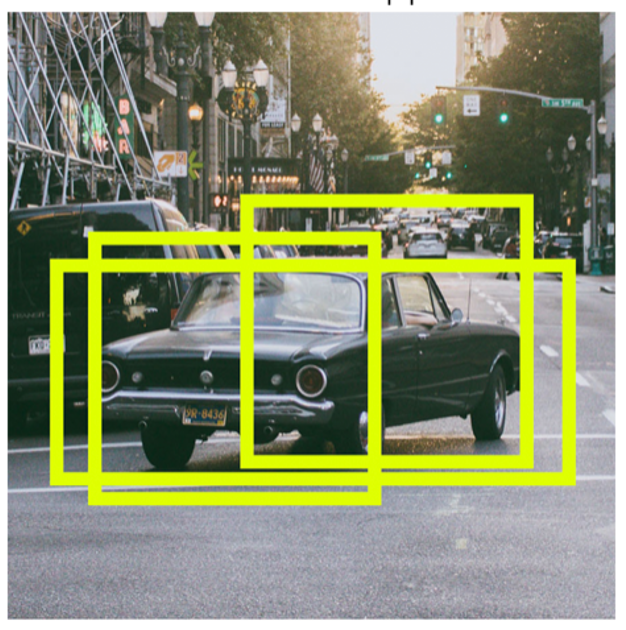

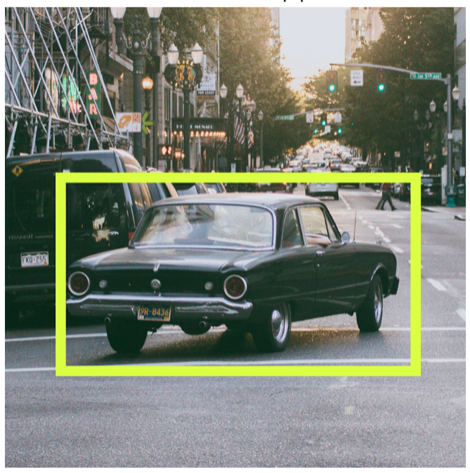

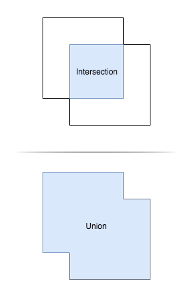

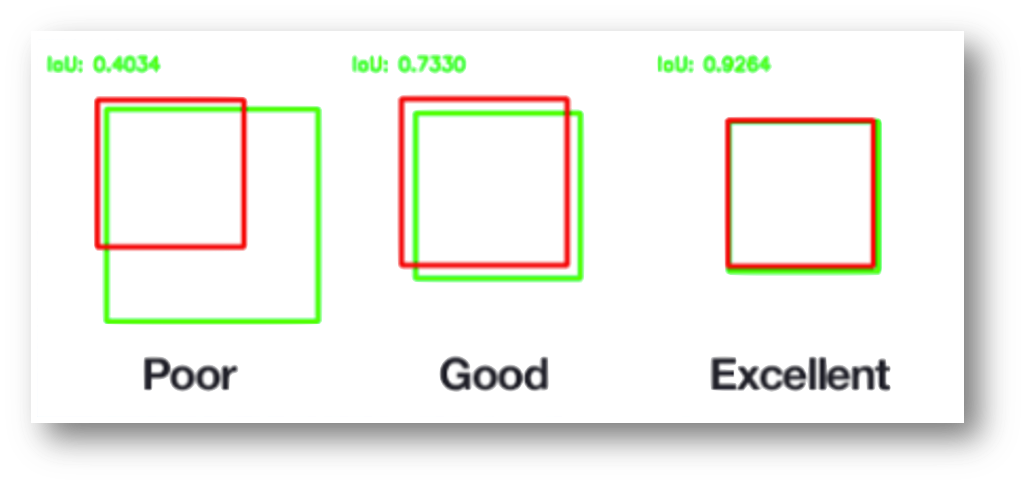

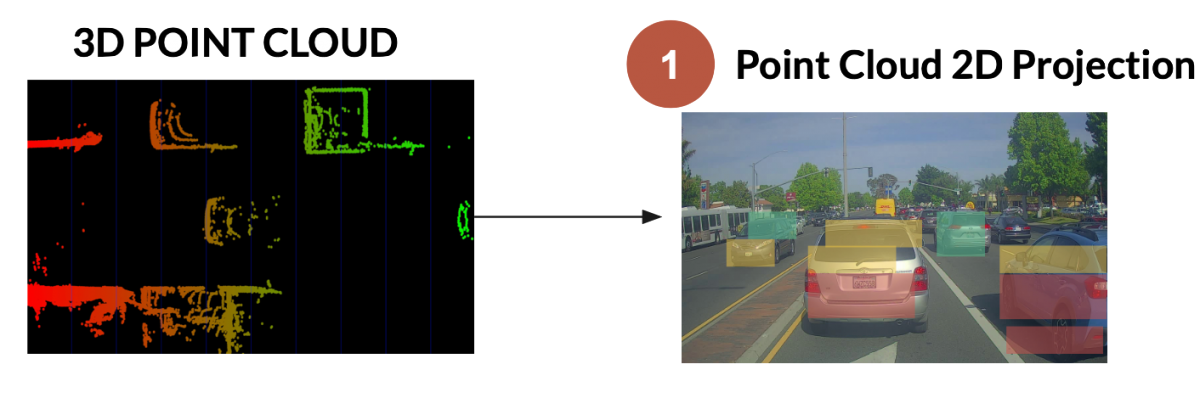

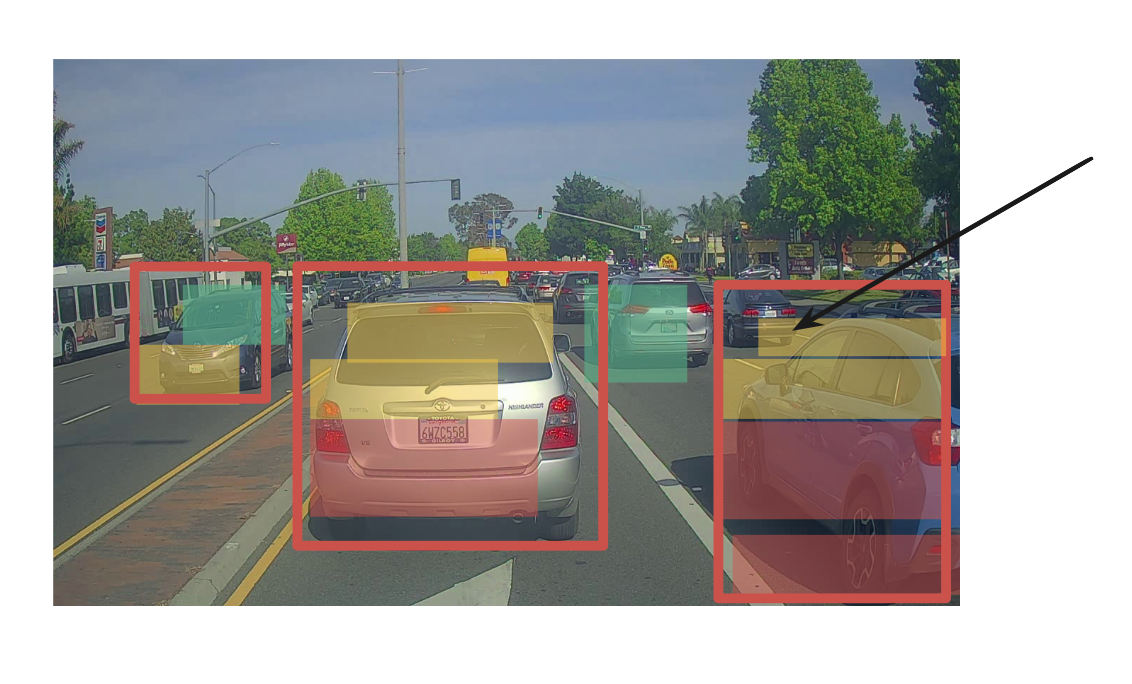

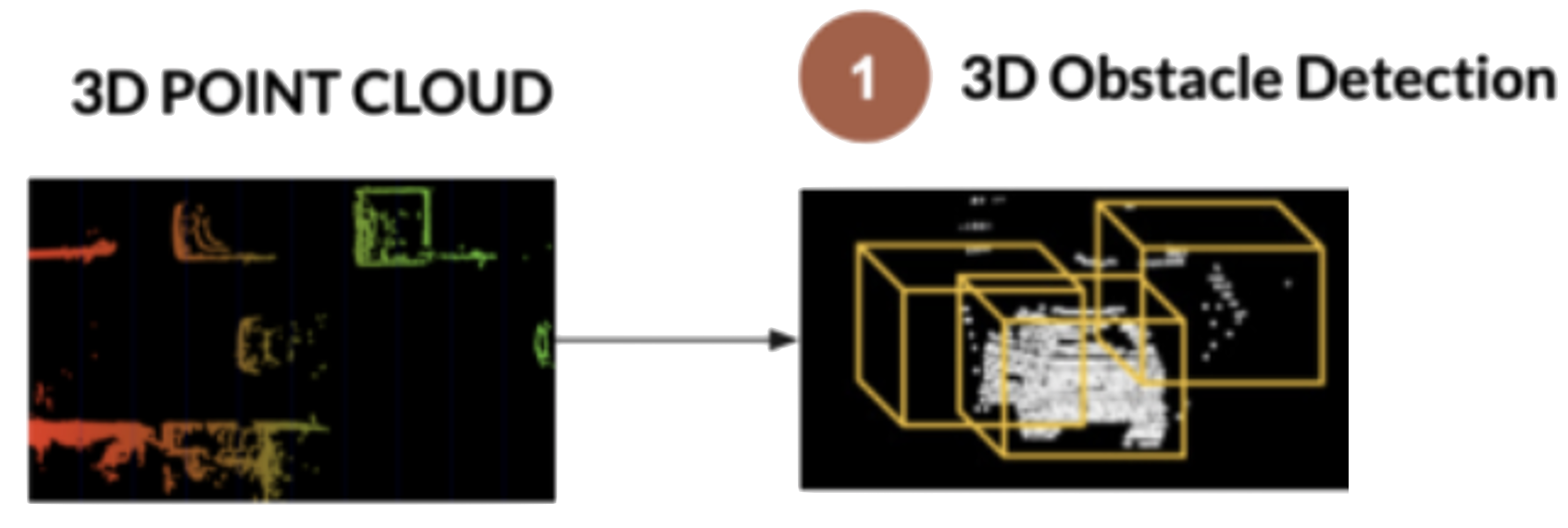

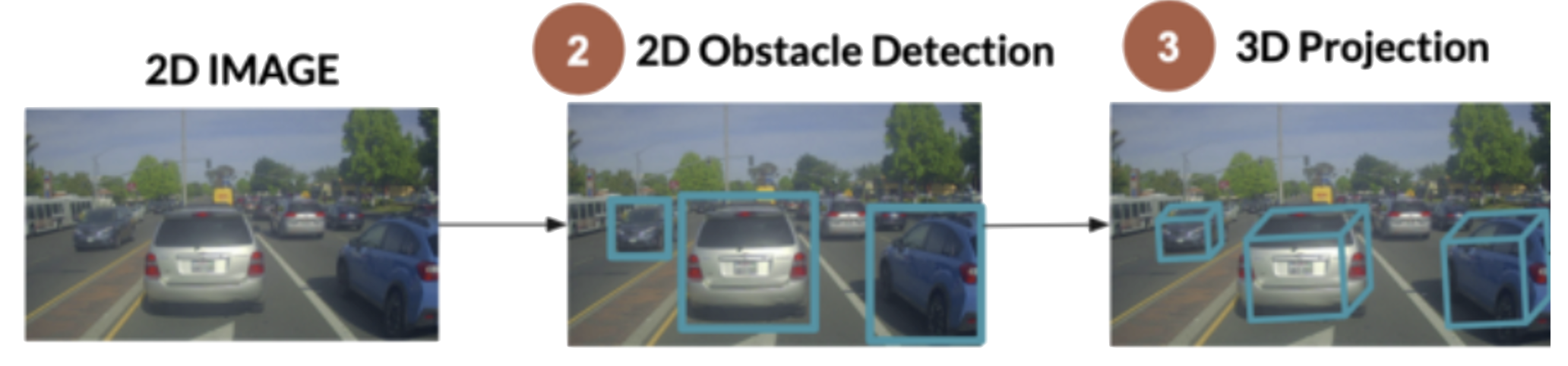

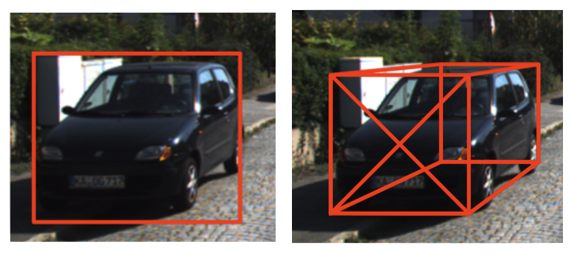

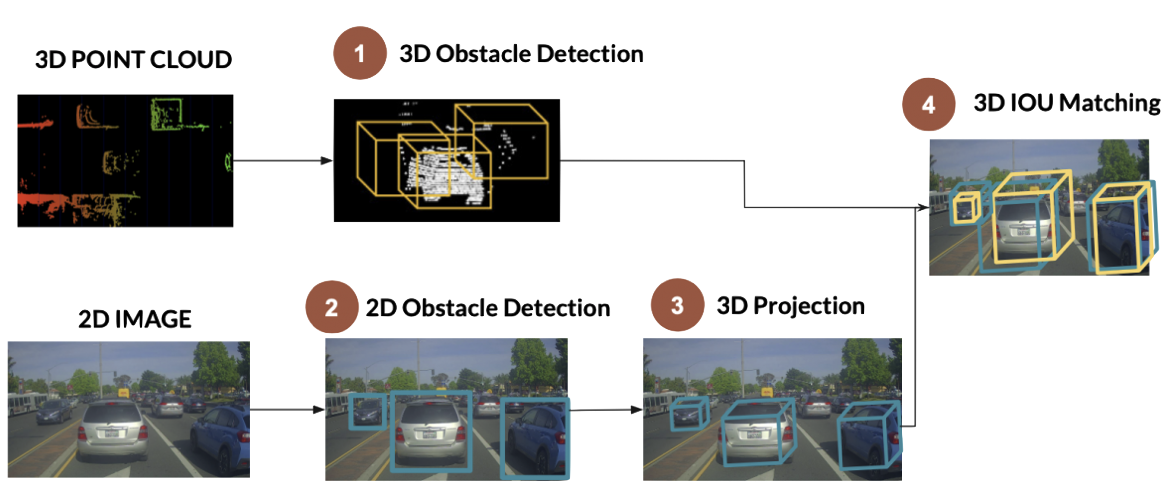

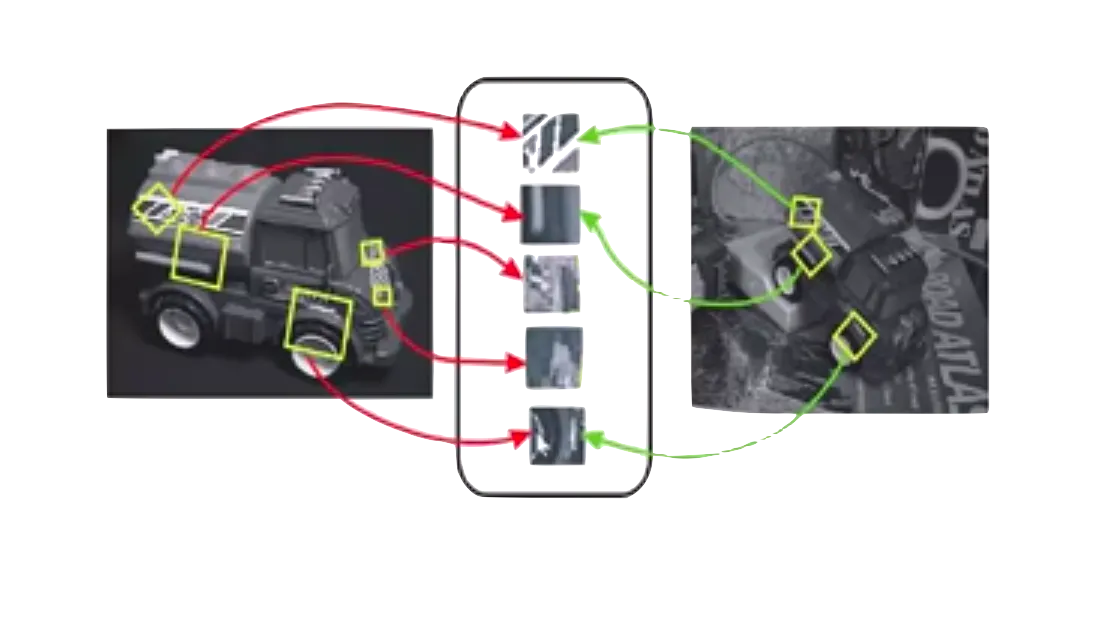

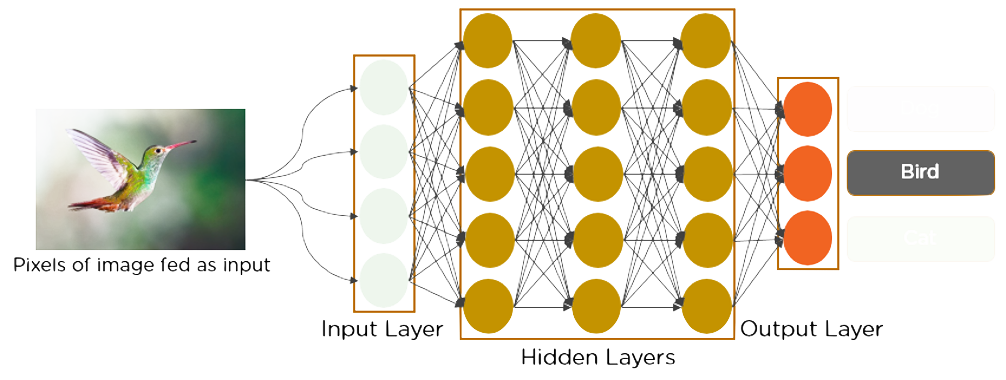

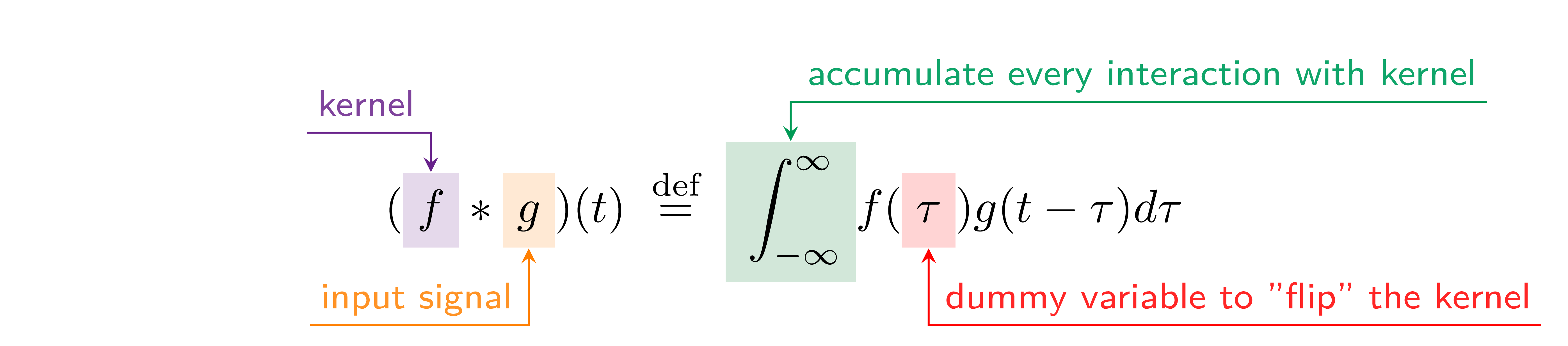

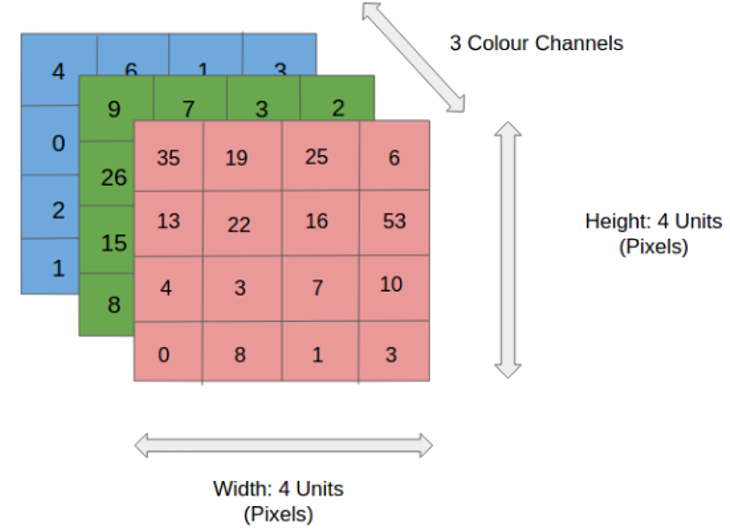

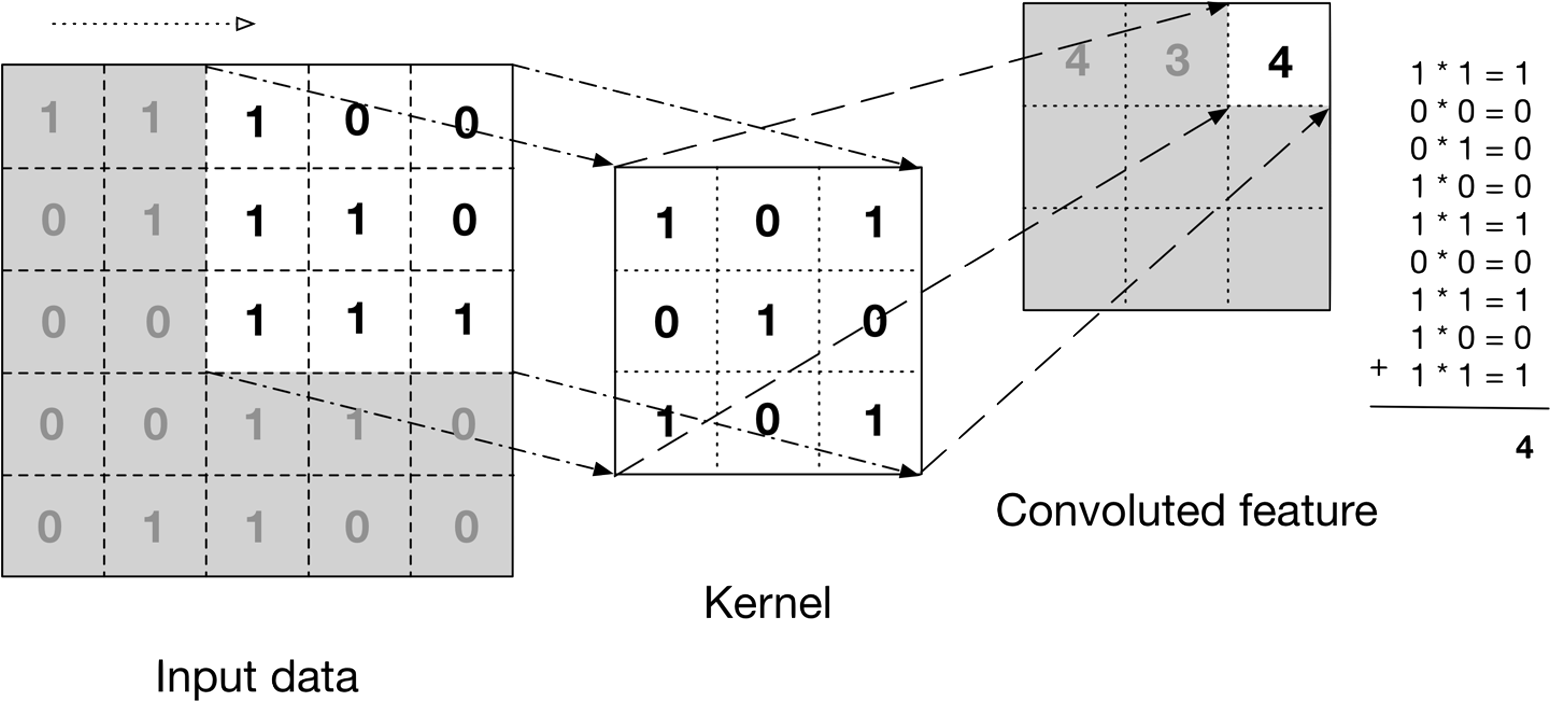

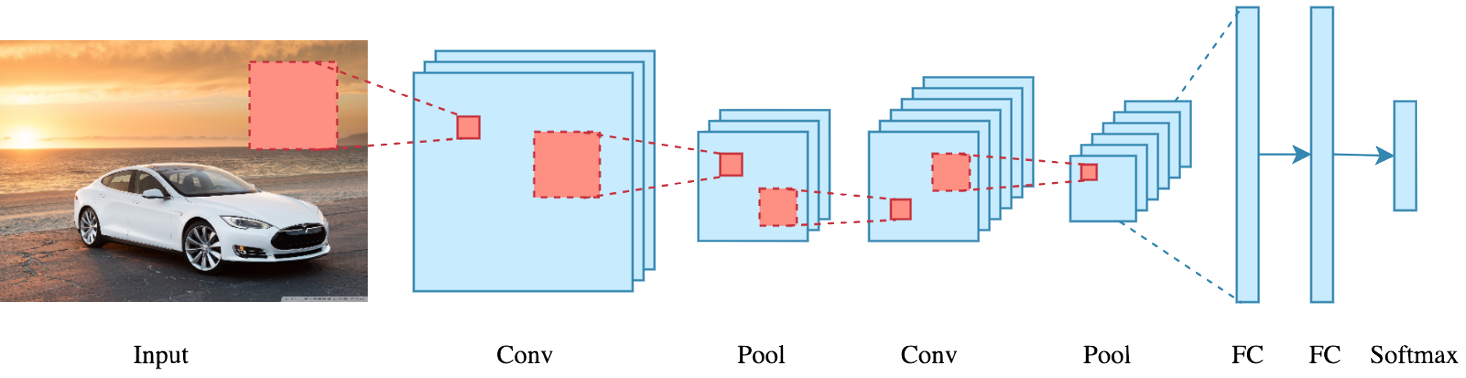

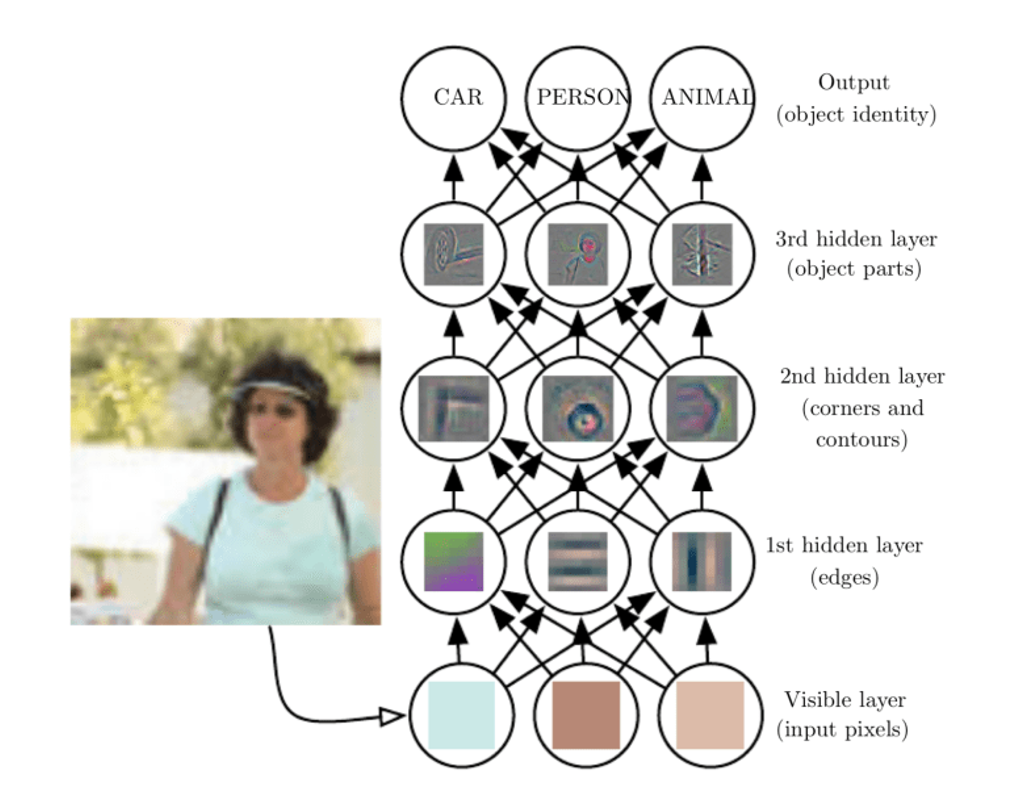

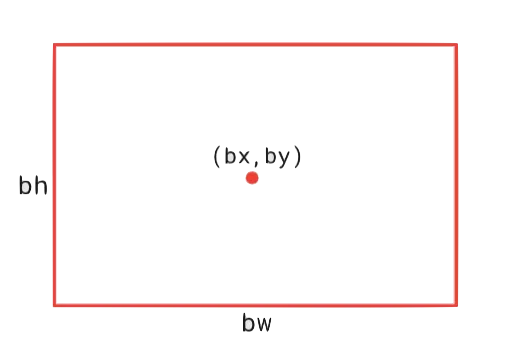

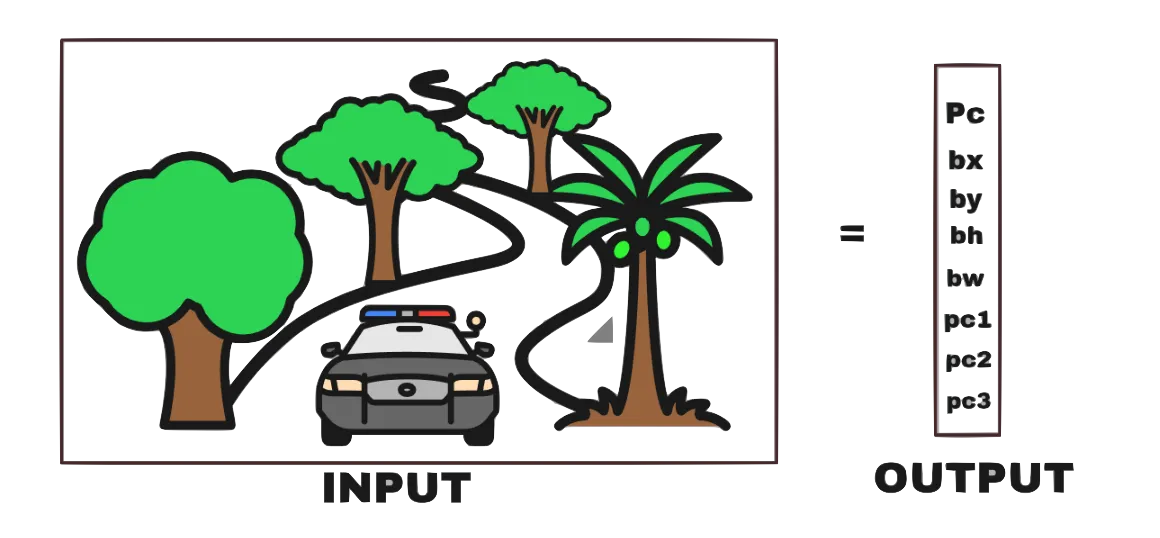

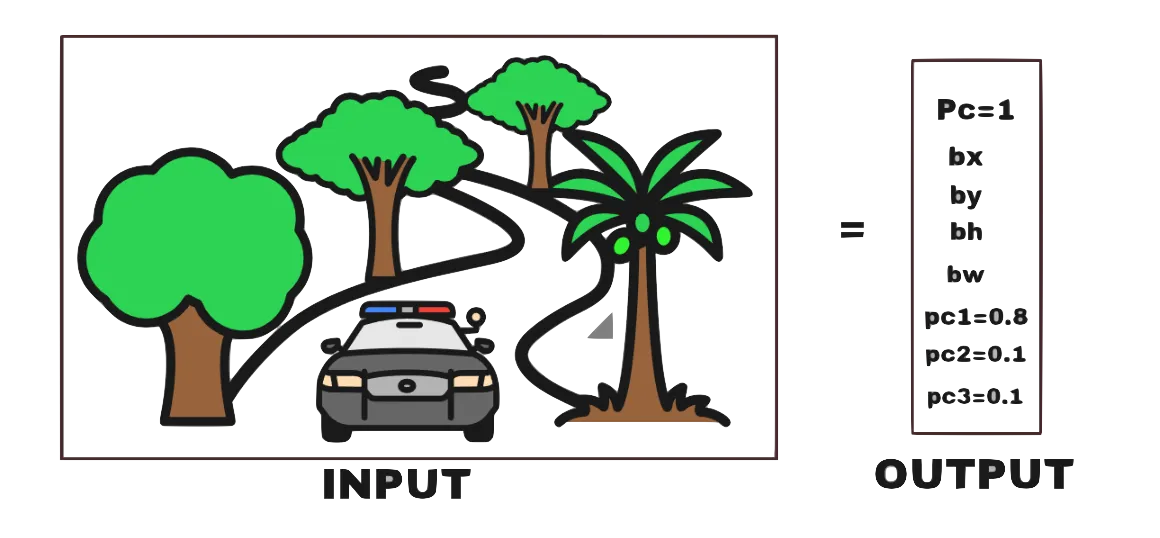

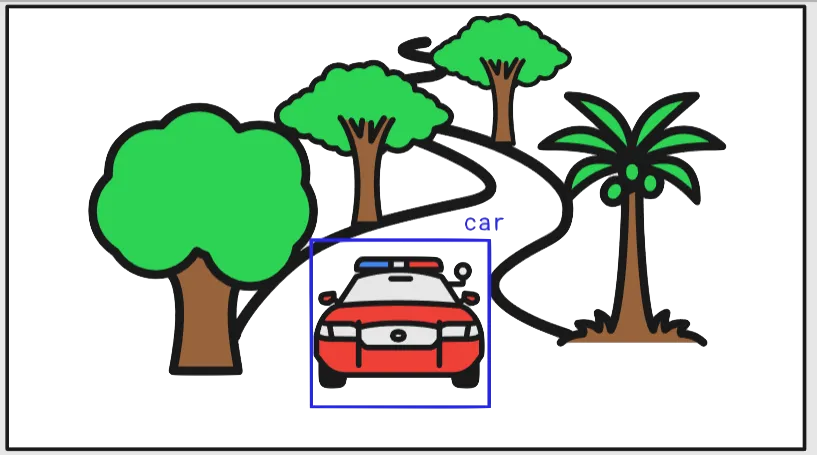

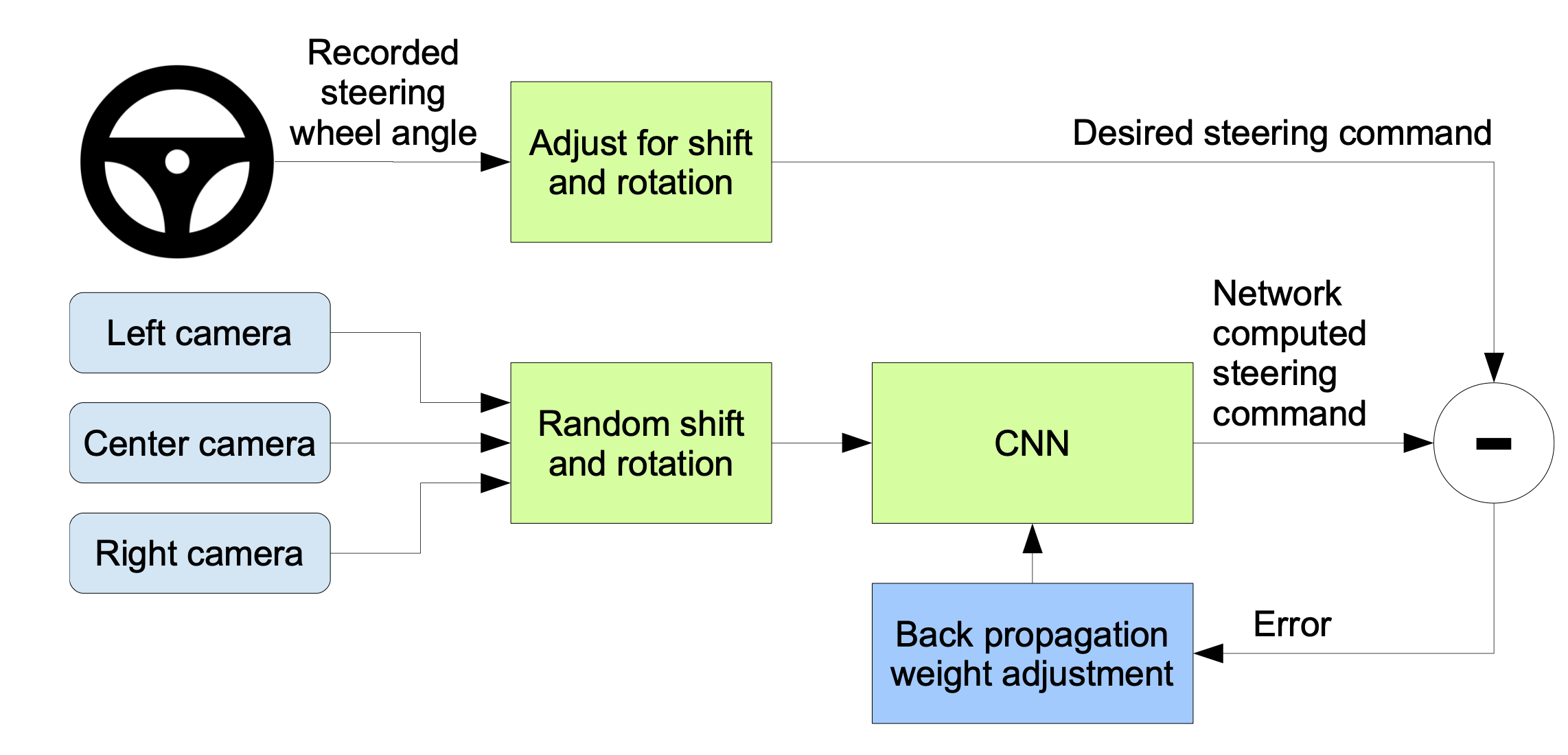

1.6.4 yolo

Is it “you only live once”? Actually this stands for: “you only look once”. It is an object detection model that uses convolutional neural networks (cnns)

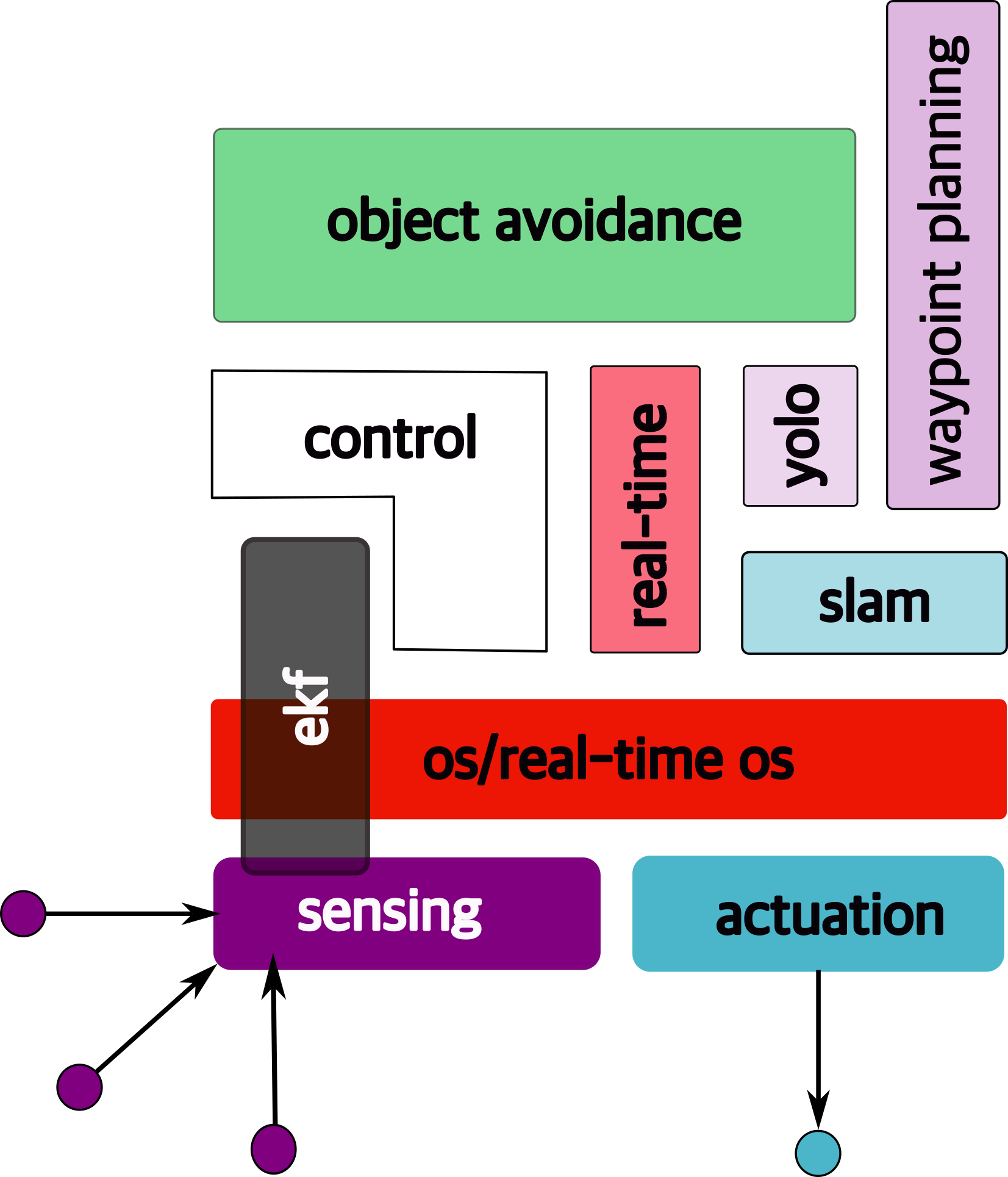

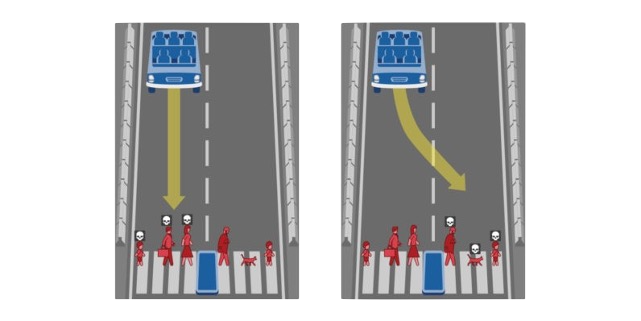

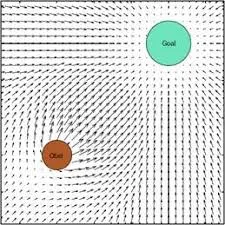

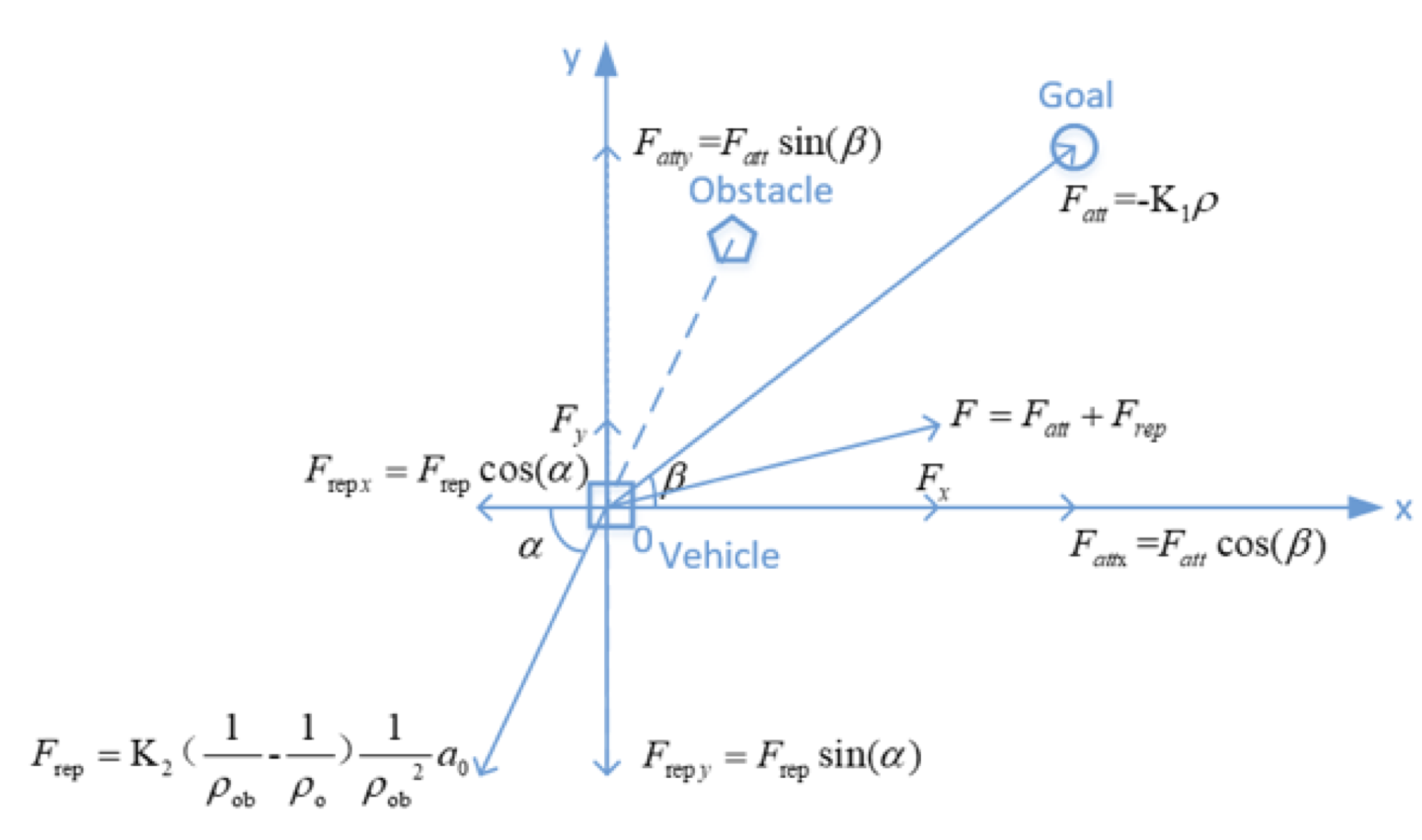

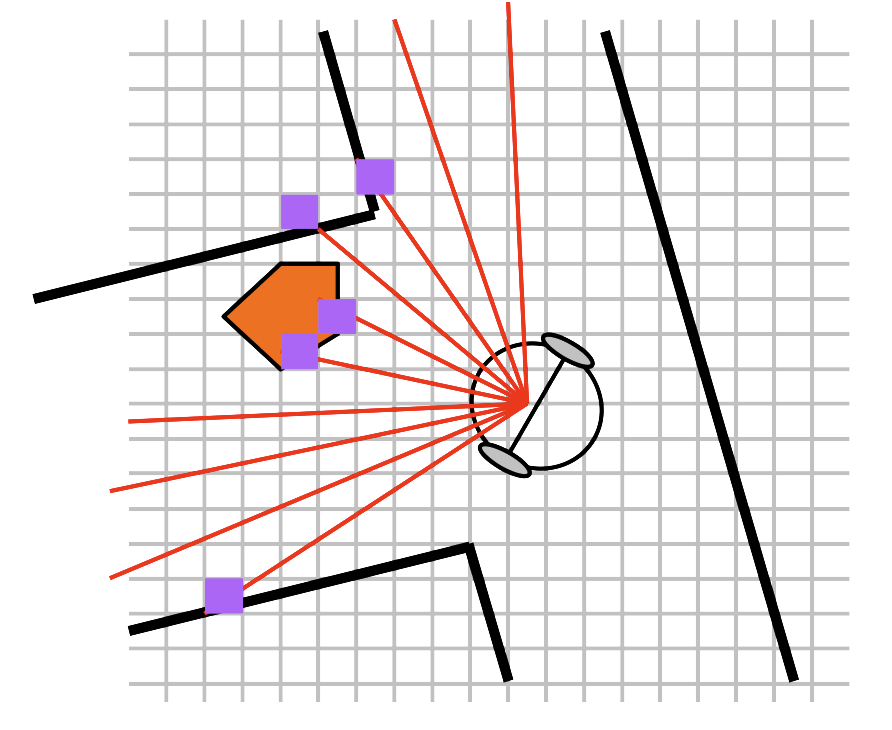

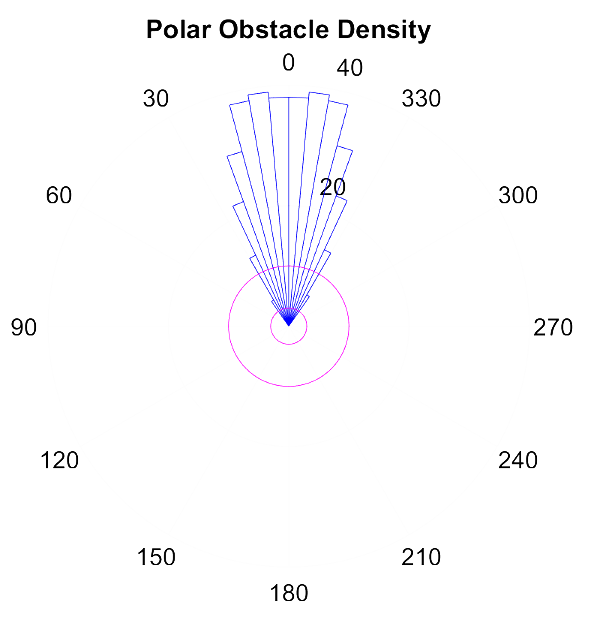

1.6.5 object avoidance

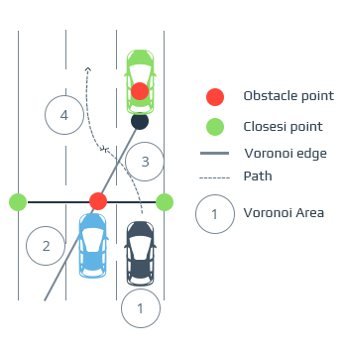

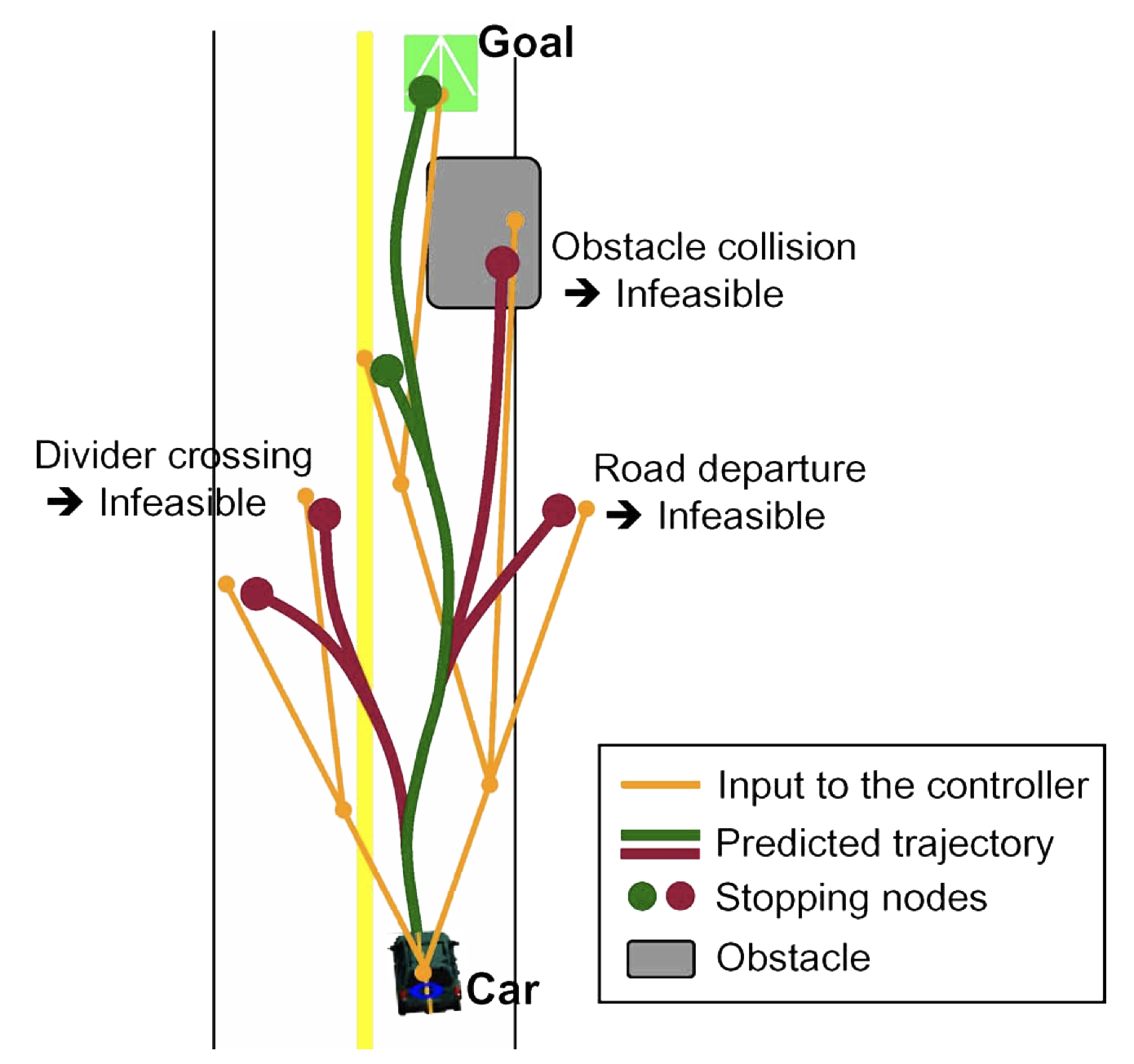

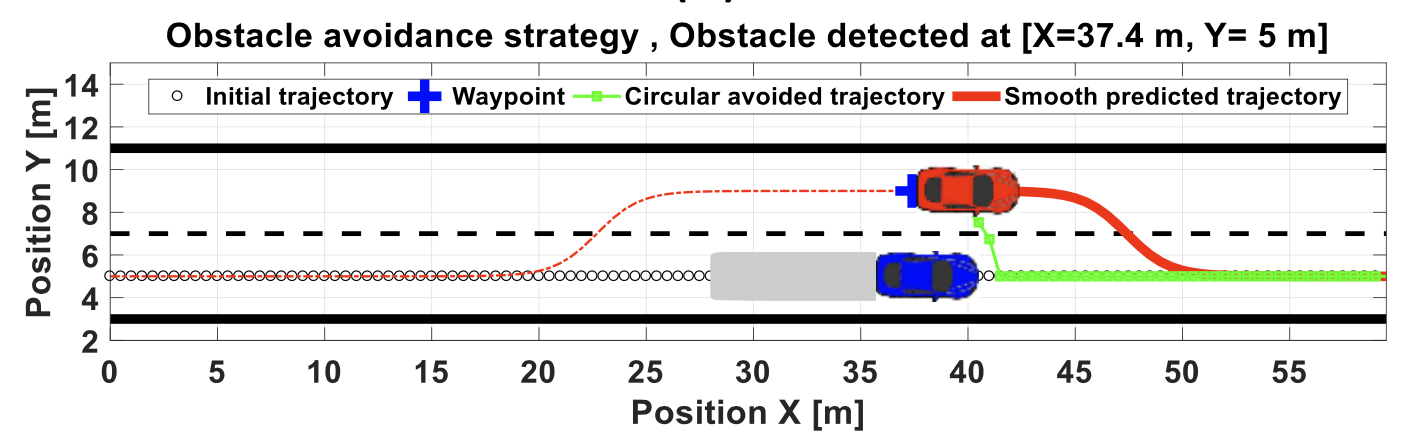

The objective is to avoid objects in the immediate path.

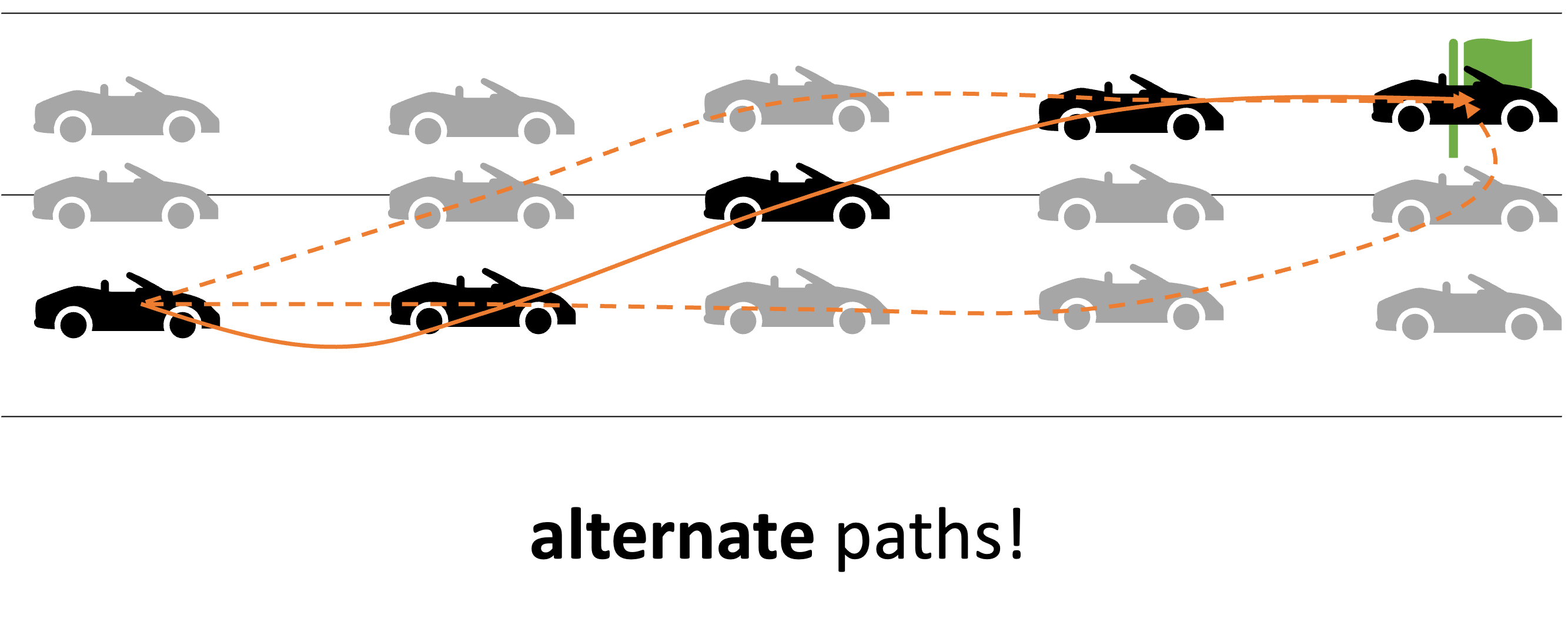

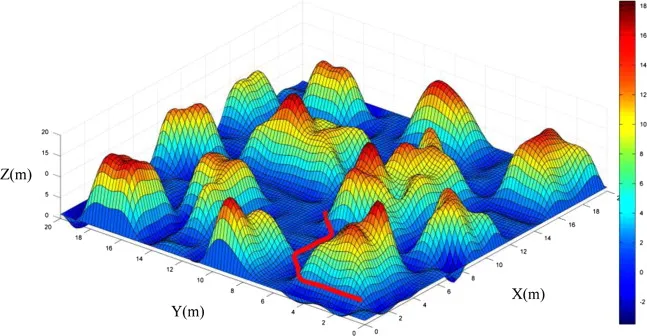

1.6.6 path planning

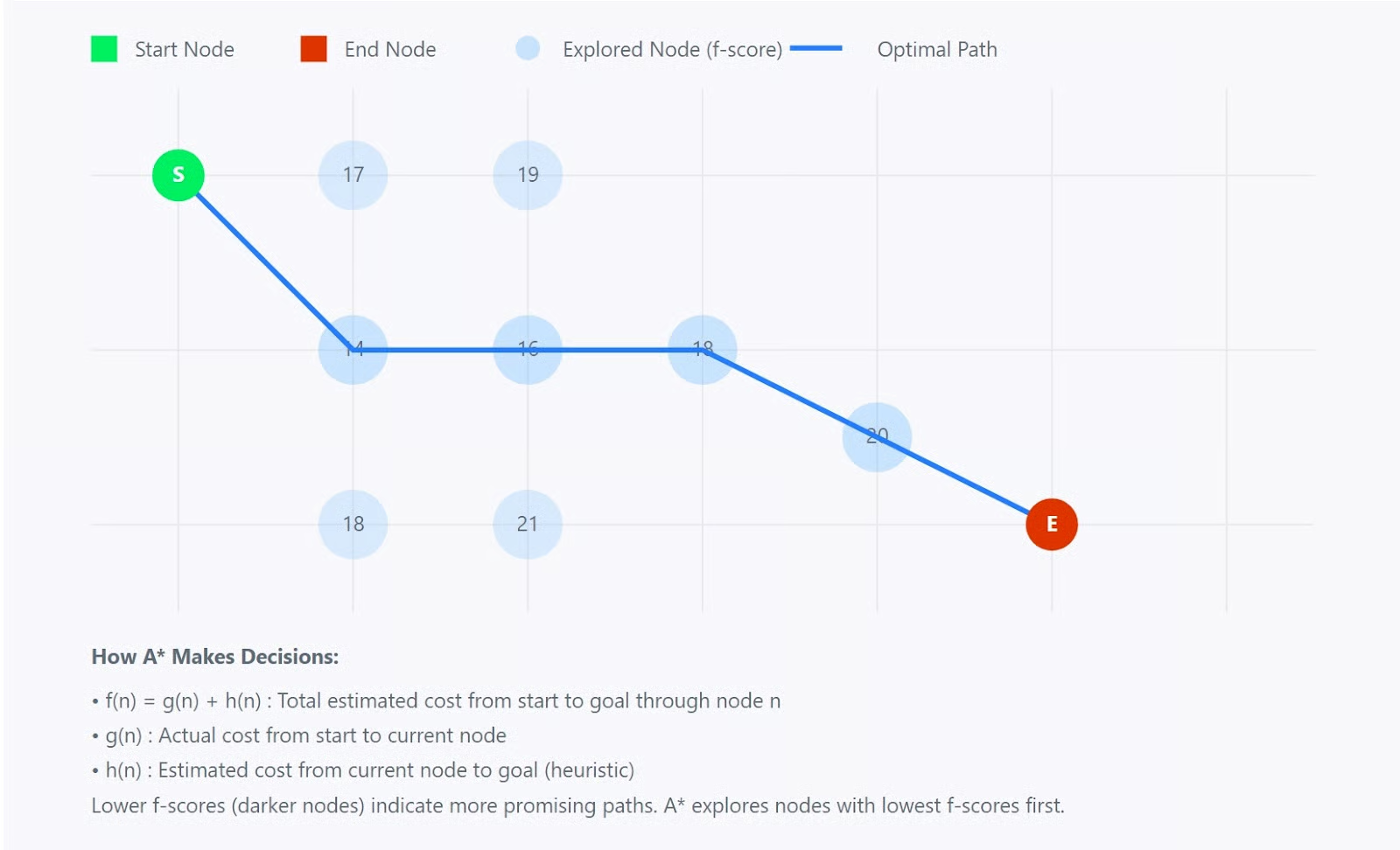

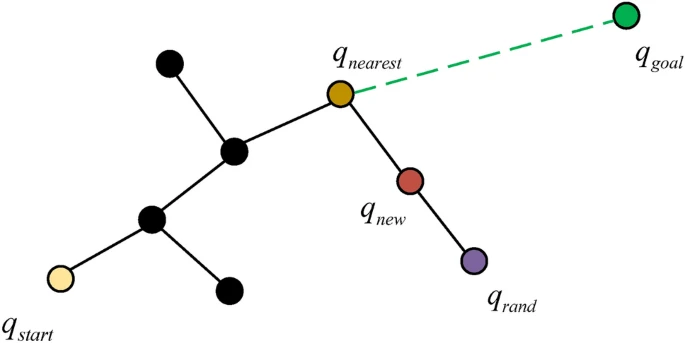

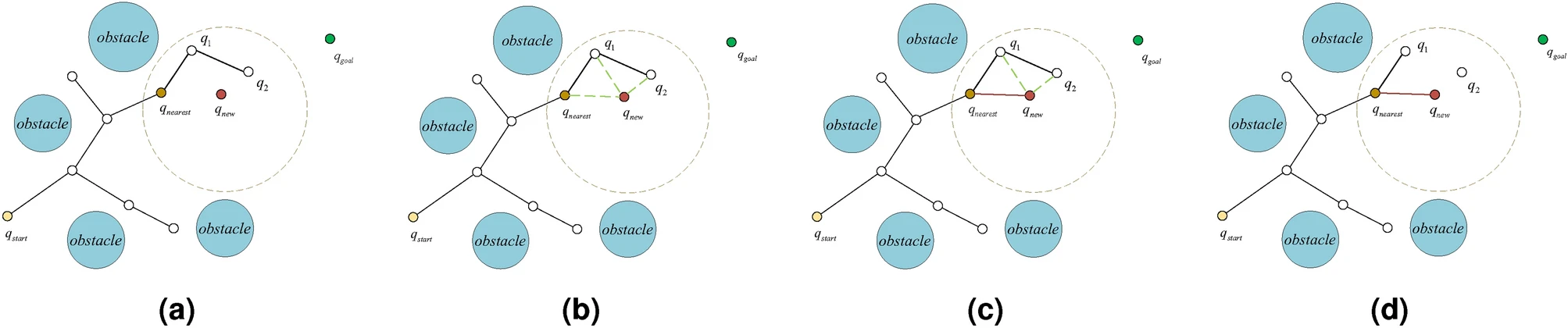

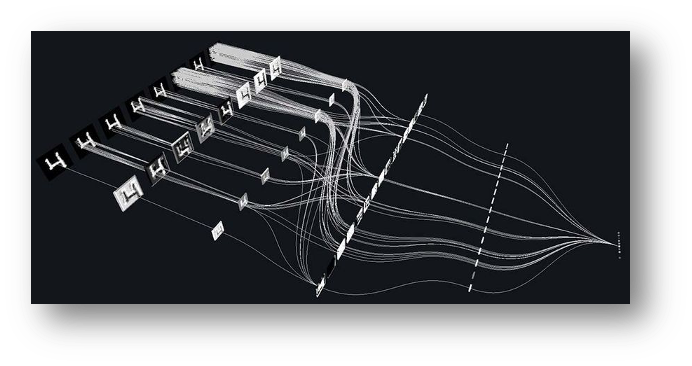

i.e., how to get to destination at the macro level → uses waypoints.

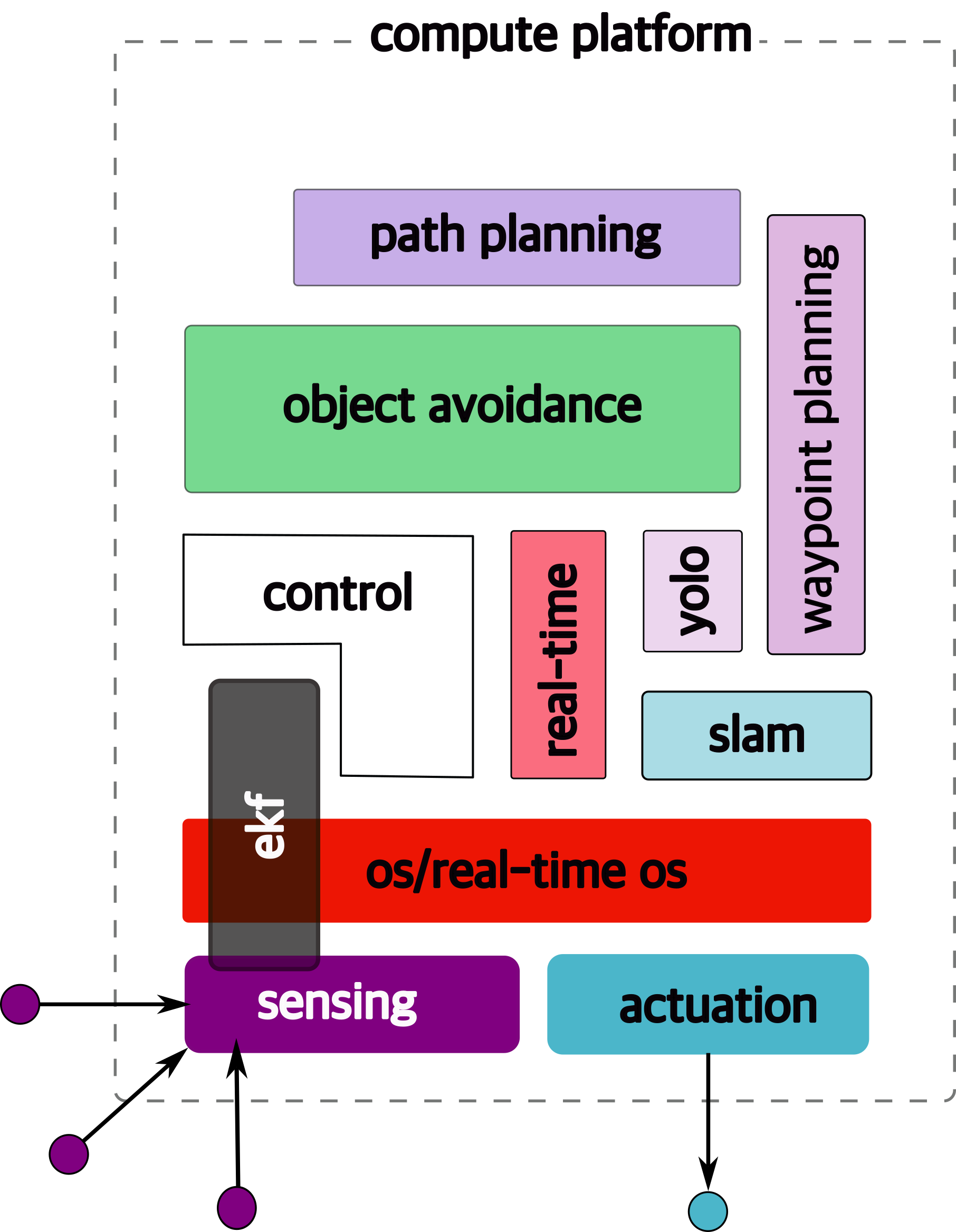

1.6.7 compute platform

To run all of these functions, we need low power, embedded platforms.

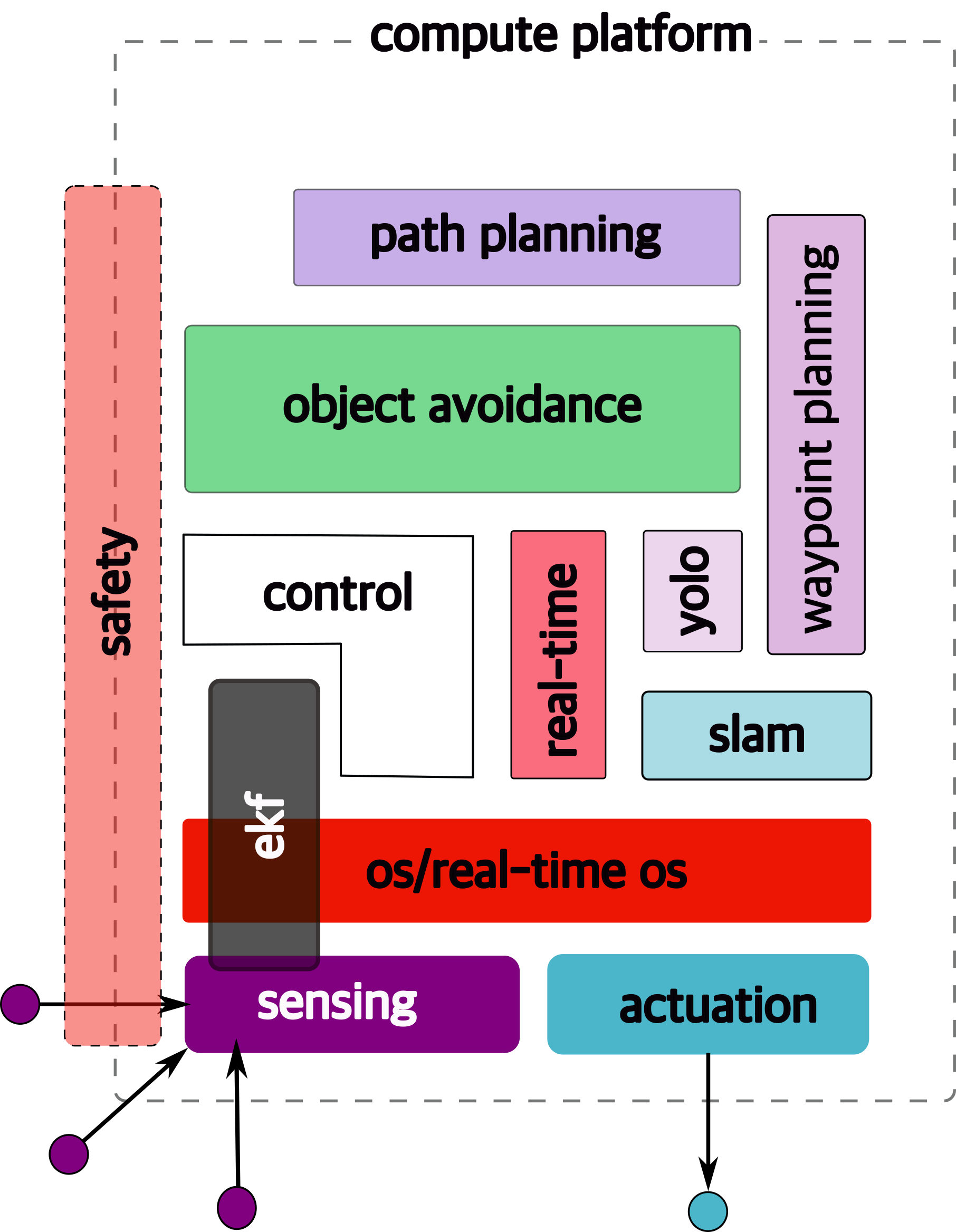

1.6.8 still some non-functional requirements remain

any guesses what they could be?

1.6.9 safety!

Essentially safety of → operator, other people, the

vehicle, environment This is cross-cutting

issue → affected

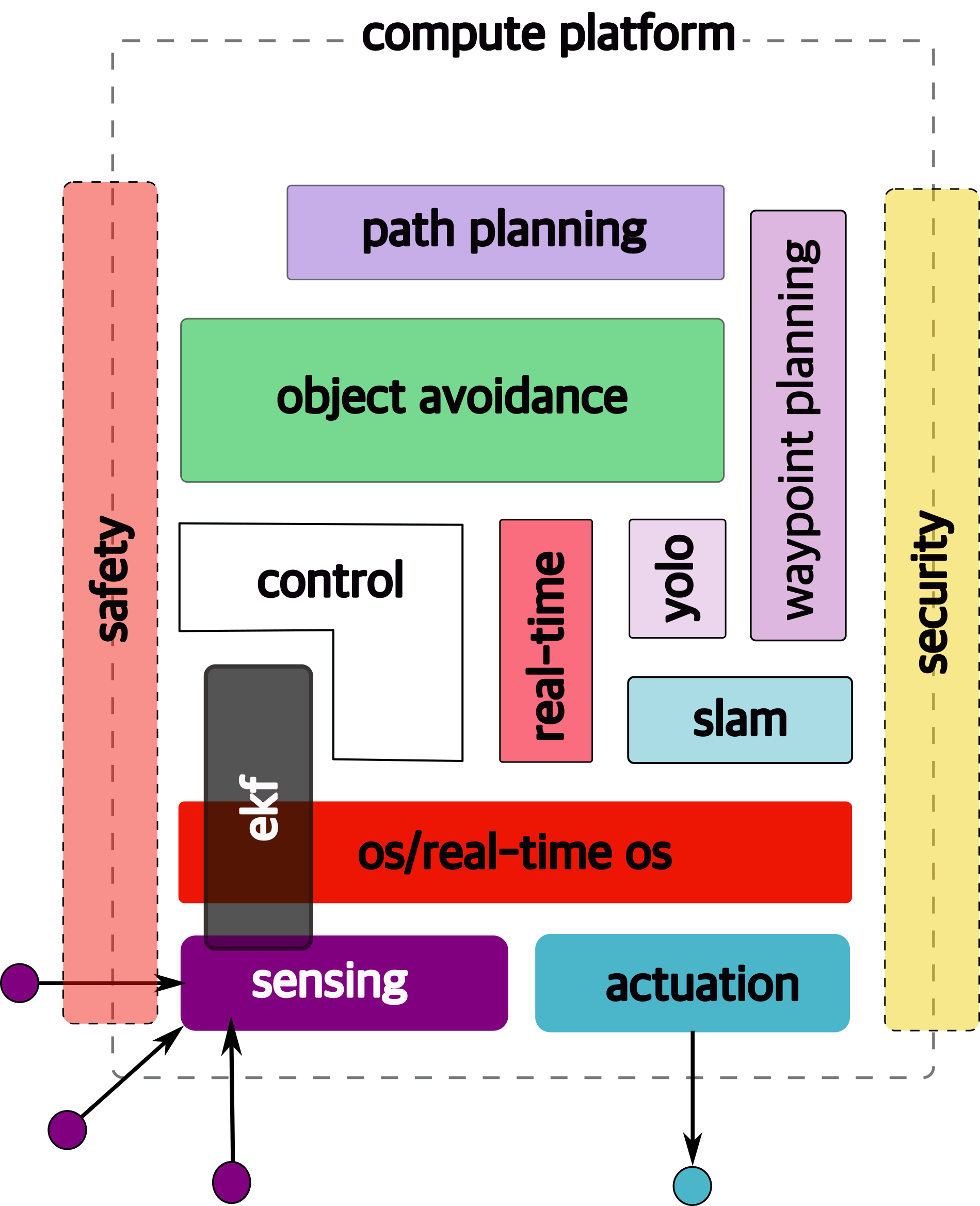

1.6.10 security

Security is another cross-cutting issue →

1.6.11 Course Structure

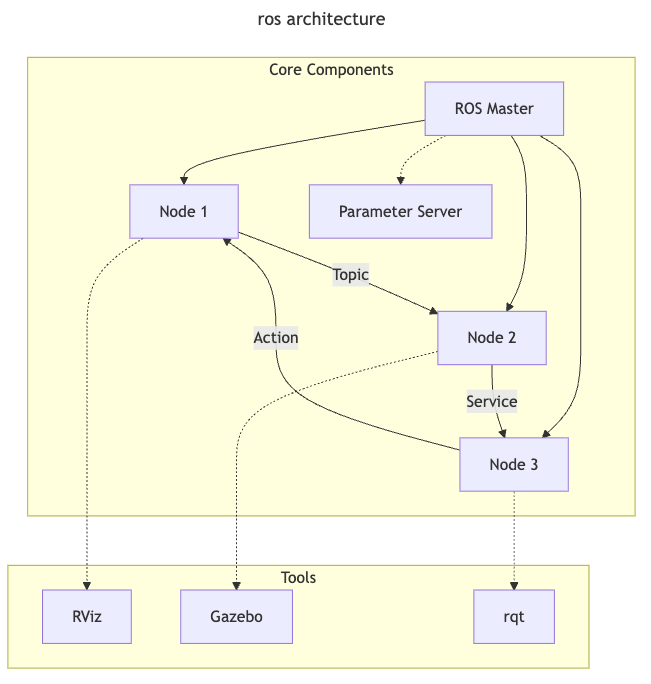

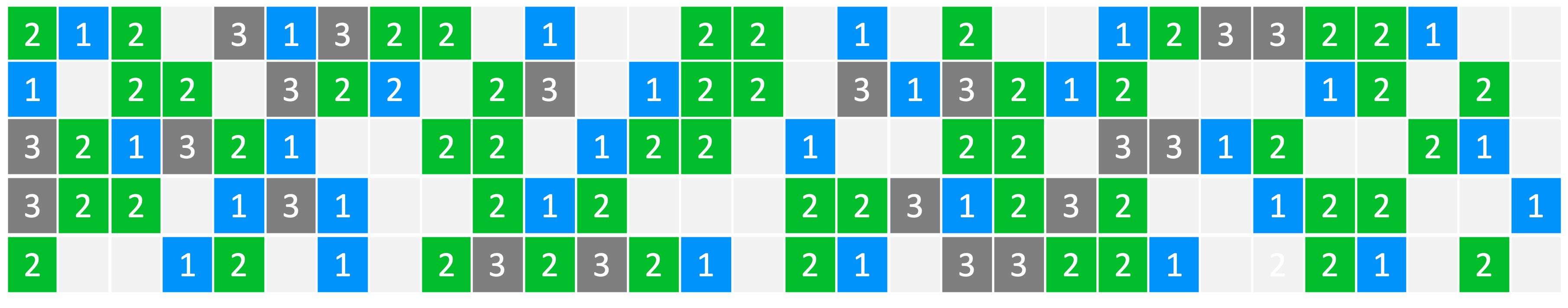

Hence this figure is a (loose) map of this course:

2 Embedded Architectures

Just like “autonomy” describing and “embedded system” is hard. What (typically) distinguishes it from other types of computer systems (e.g., laptops, servers or GPUs even) is that such systems are typically created for specific functionality and often remain fixed and operational for years, decades even.

Embedded systems often trade off between performance and other considerations such as power (or battery life), less memory, fewer peripherals, limited applications, smaller operating system (OS) and so on. There are numerous reasons for this – chief among them is predictability – designers need to guarantee that the system works correctly, and remains safe, all the time. Hence, it must be easy to certify 1 the entire system. This process ensures that the system operates safely.

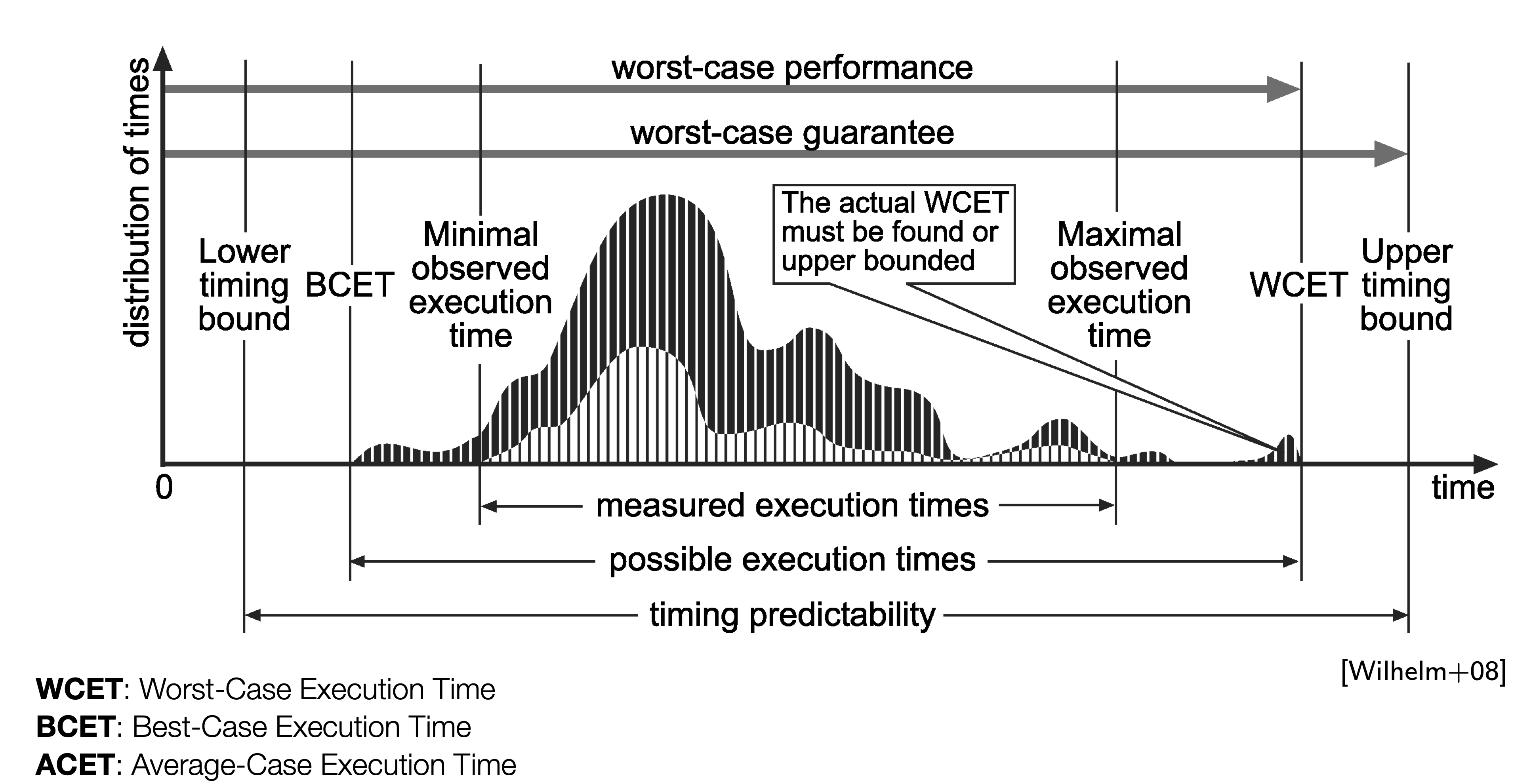

2.1 The wcet problem

One piece of information that is required to ensure predictability and guarentee safety is worst-case execution time (WCET). The WCET/BCET is the longest/shortest execution time possible for a program, on a specific hardware platform – and it has to consider all possible inputs. WCET is necessary to ensure the “schedulability”, resource requirements and performance limits of embedded and real-time programs. There are lots of approaches to computing the WCET, e.g.,

- dynamic/empirical analysis → run the program lots of times (thousands, millions?) on the platform and measure it

- static analysis → analyze the program at compile time to compute the worst-case paths through the program

- hybrid → a combination of the two

- probabilistic → a combination of dynamic analysis+statistical methods

- ML-based methods → applying machine-learning to the problem

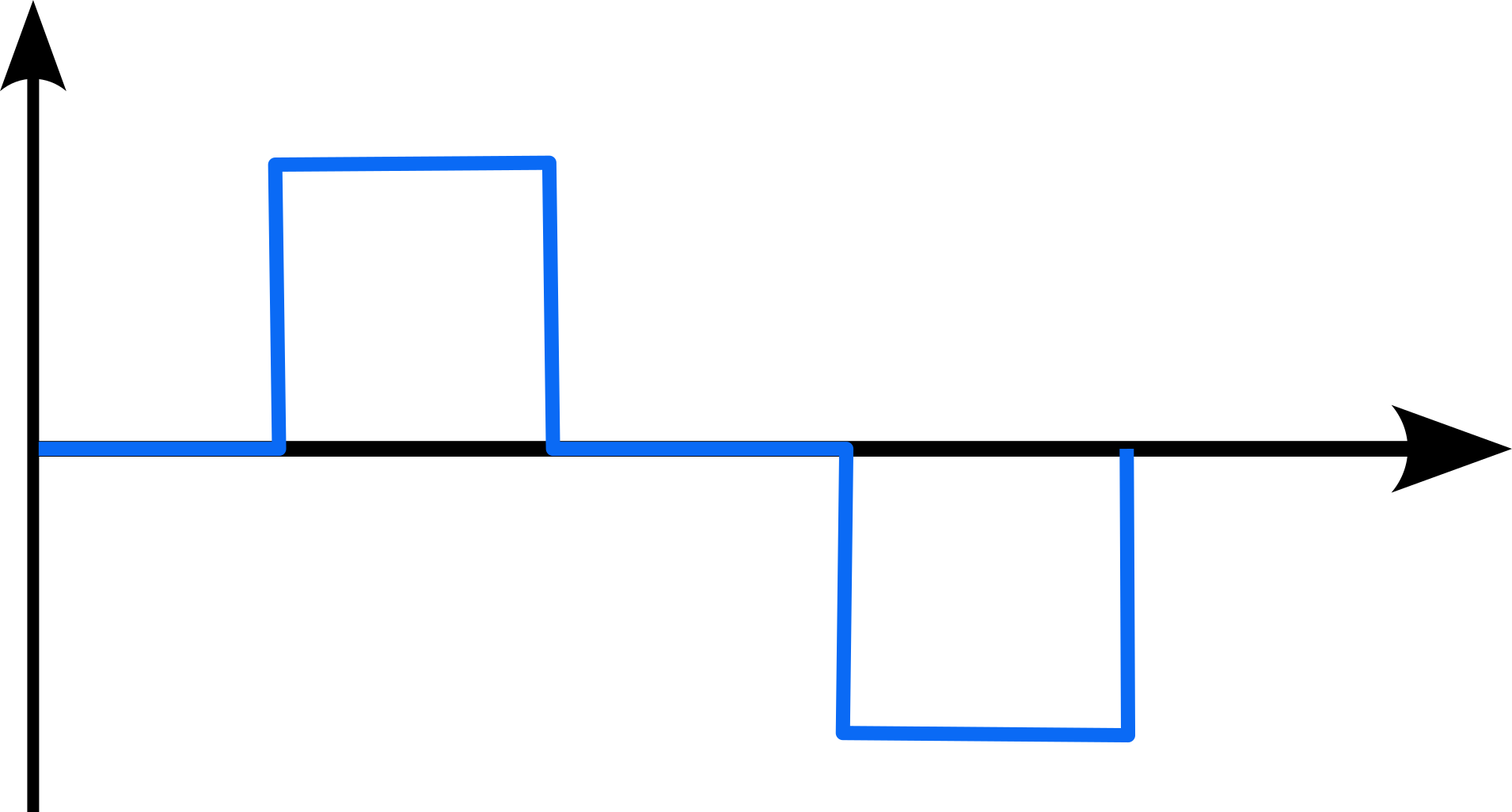

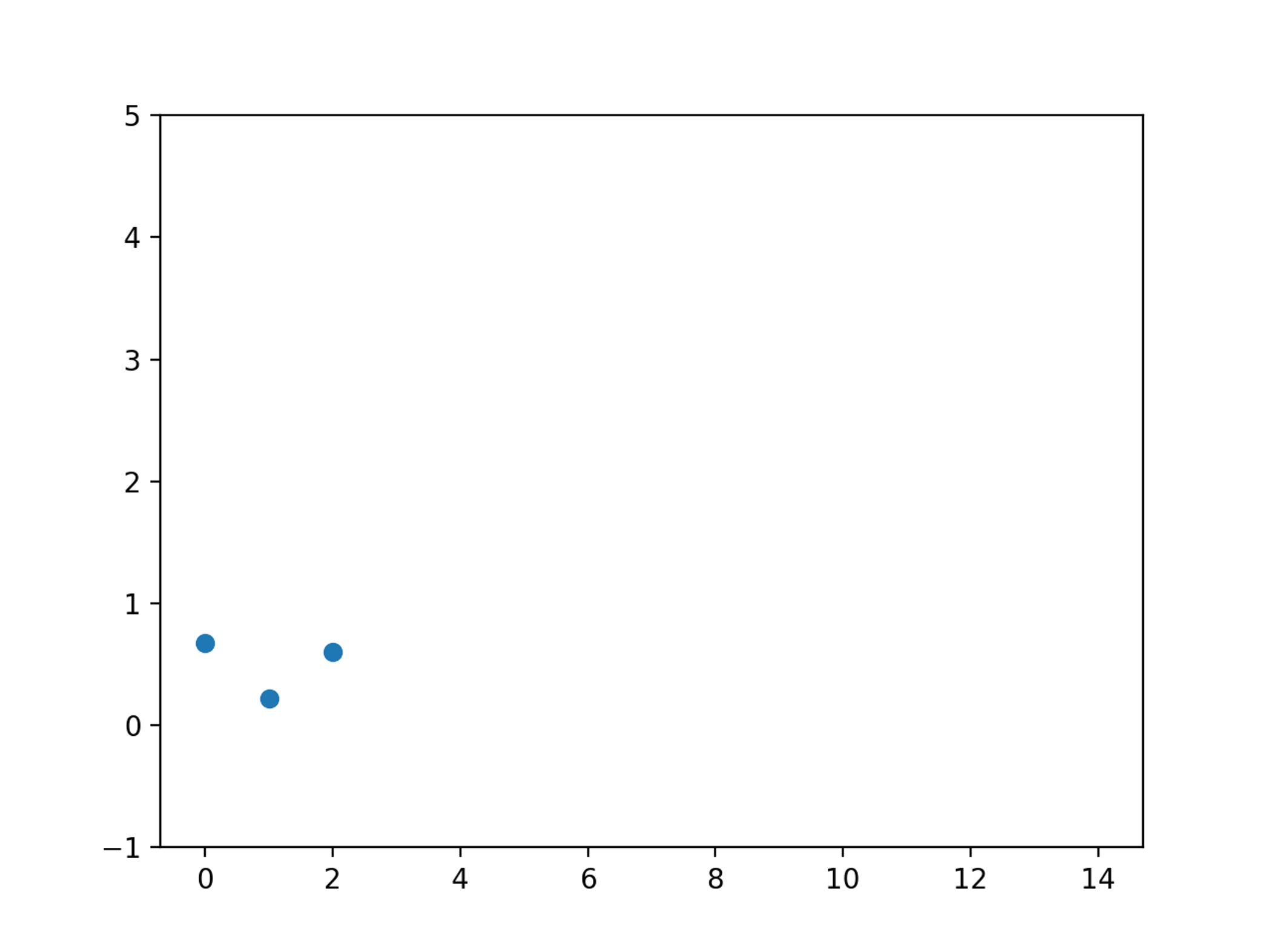

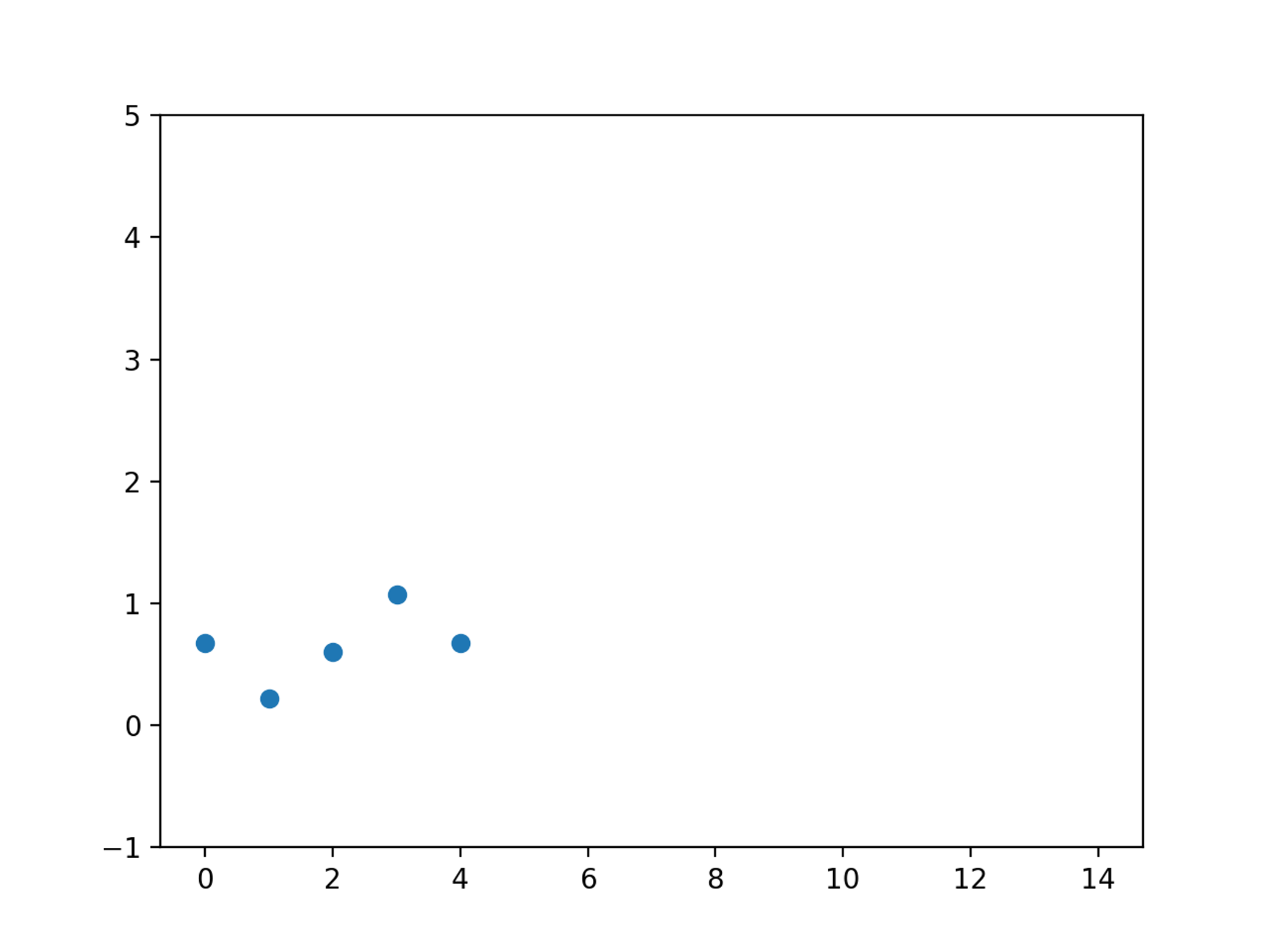

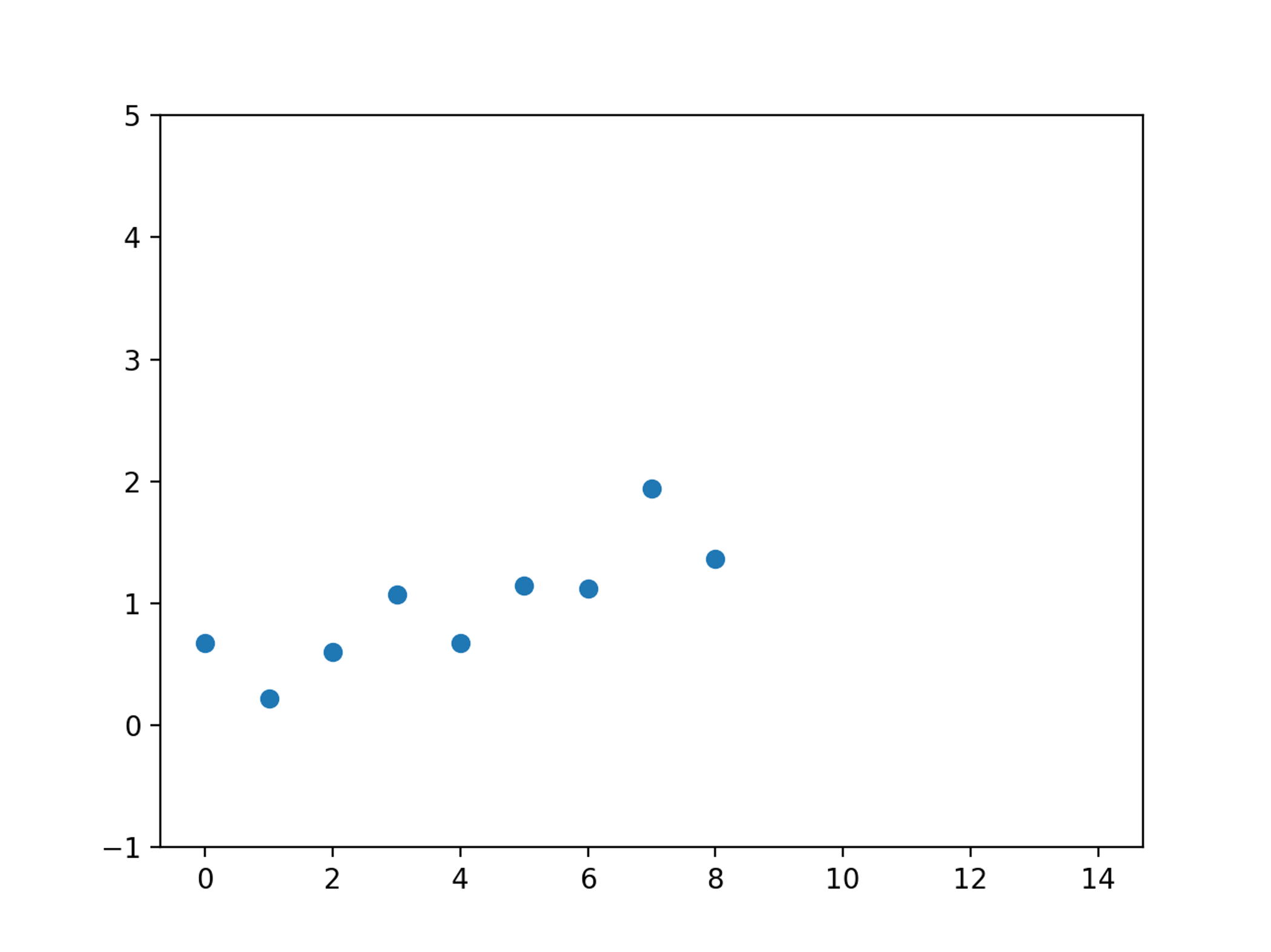

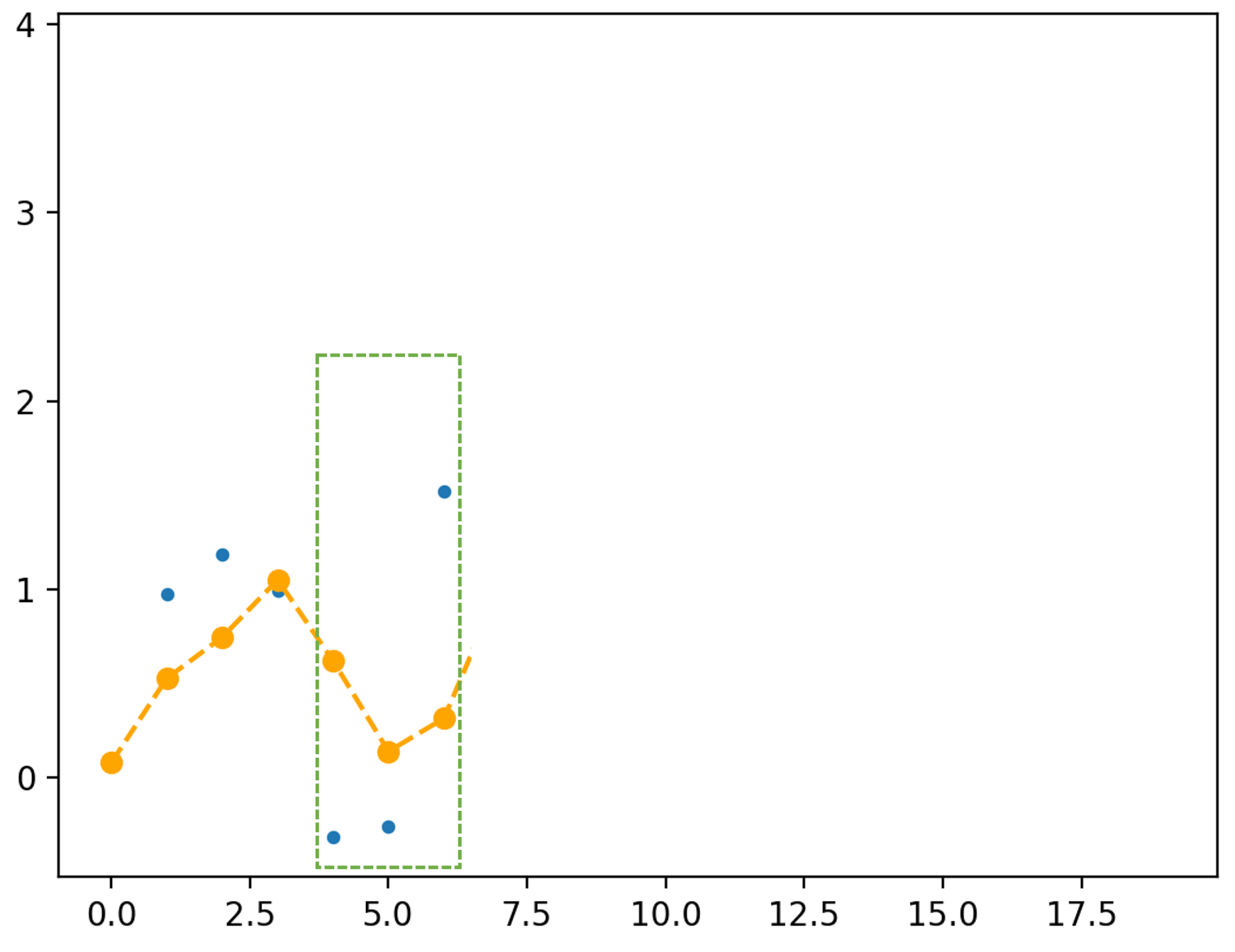

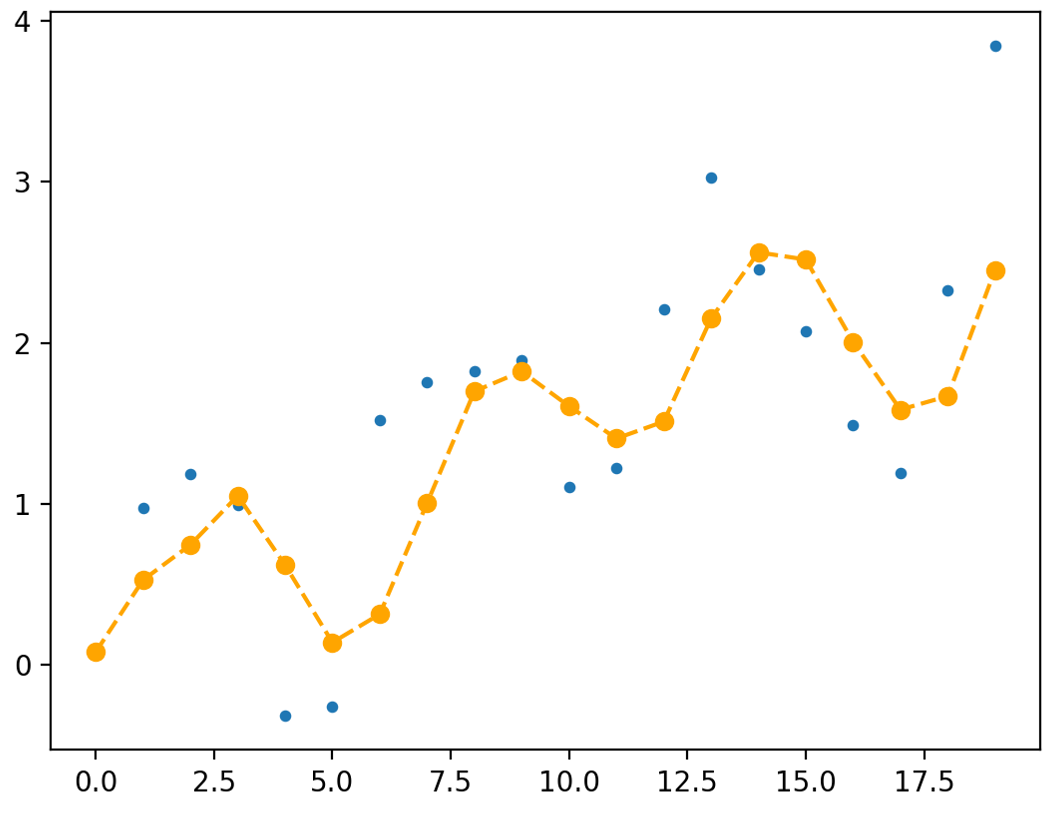

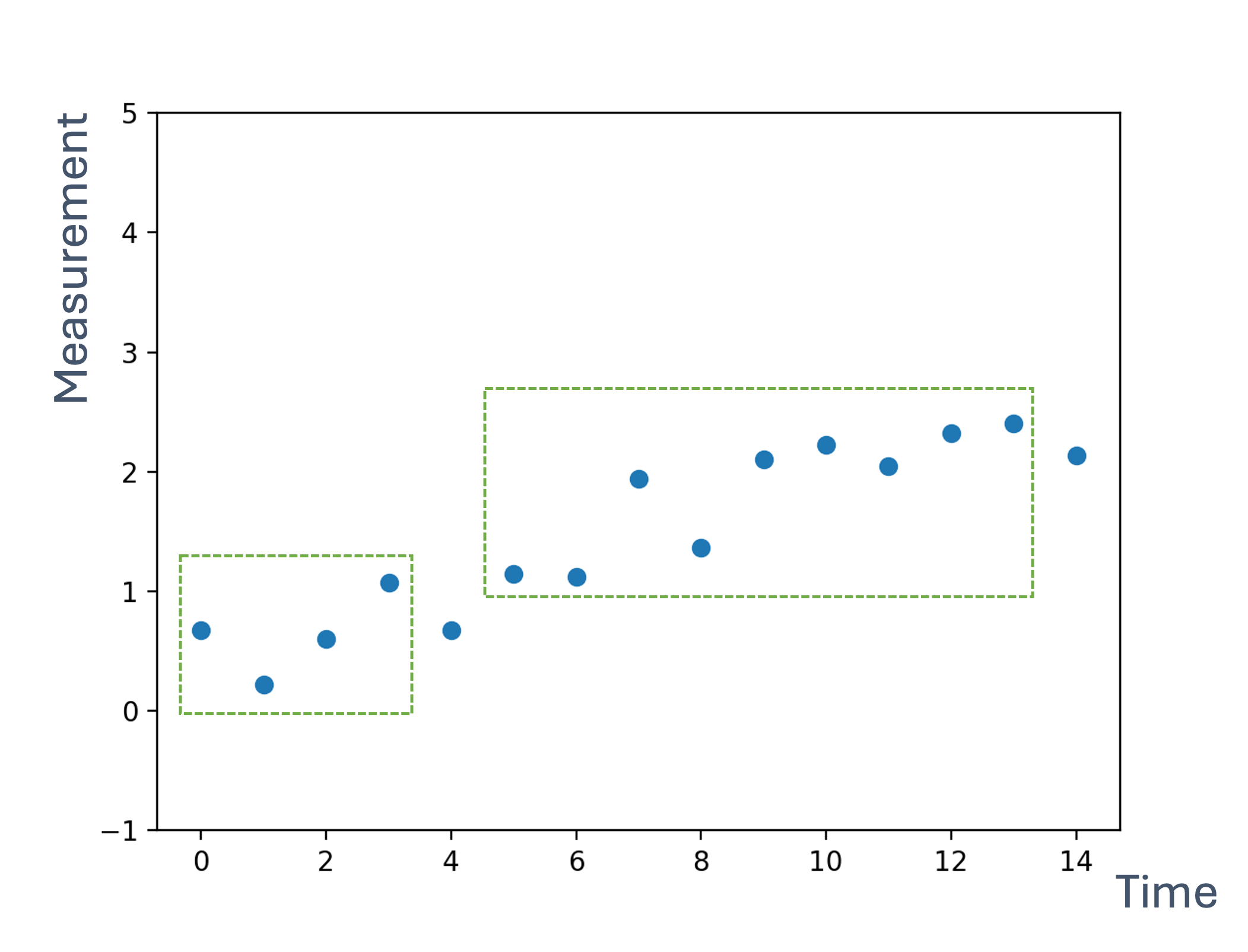

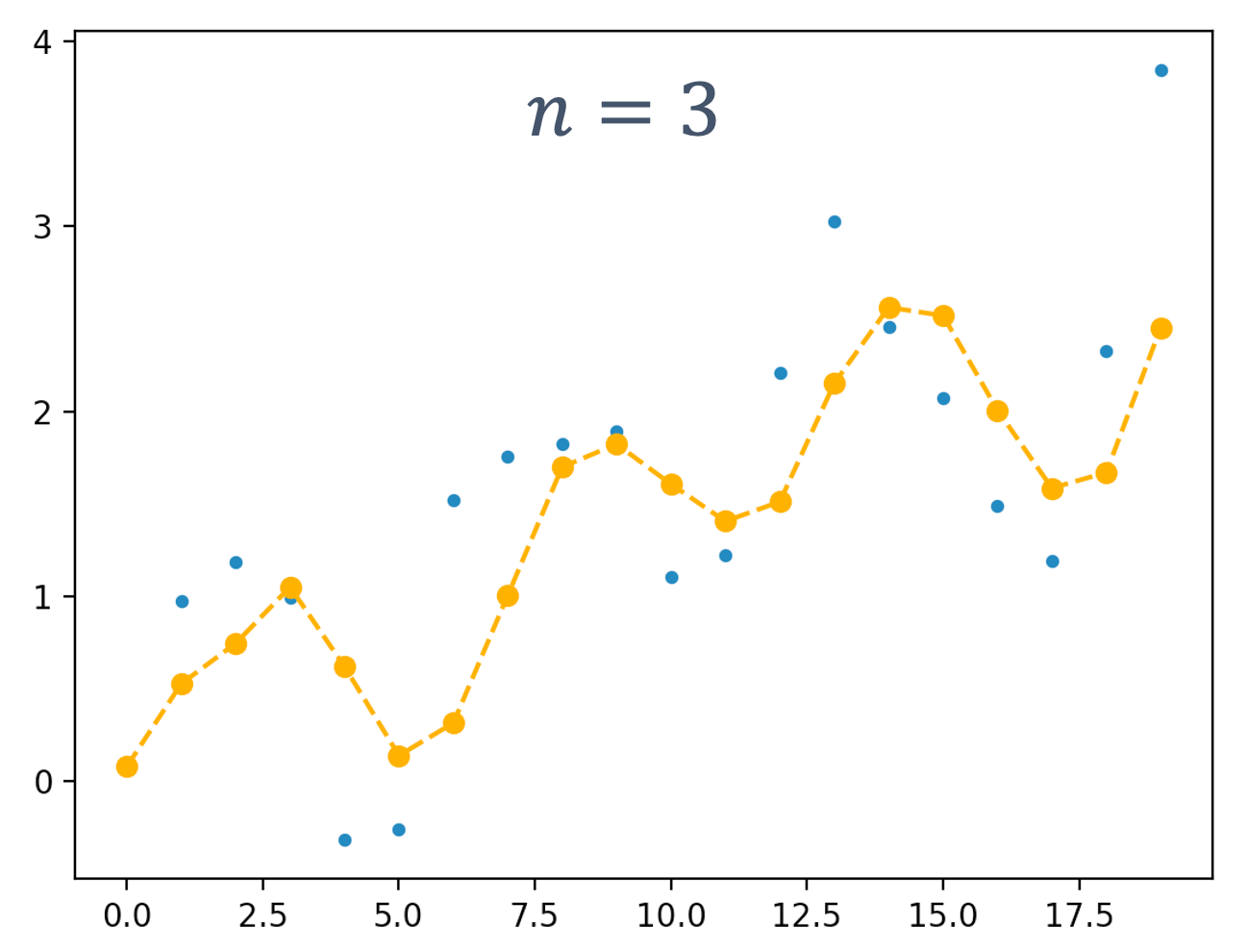

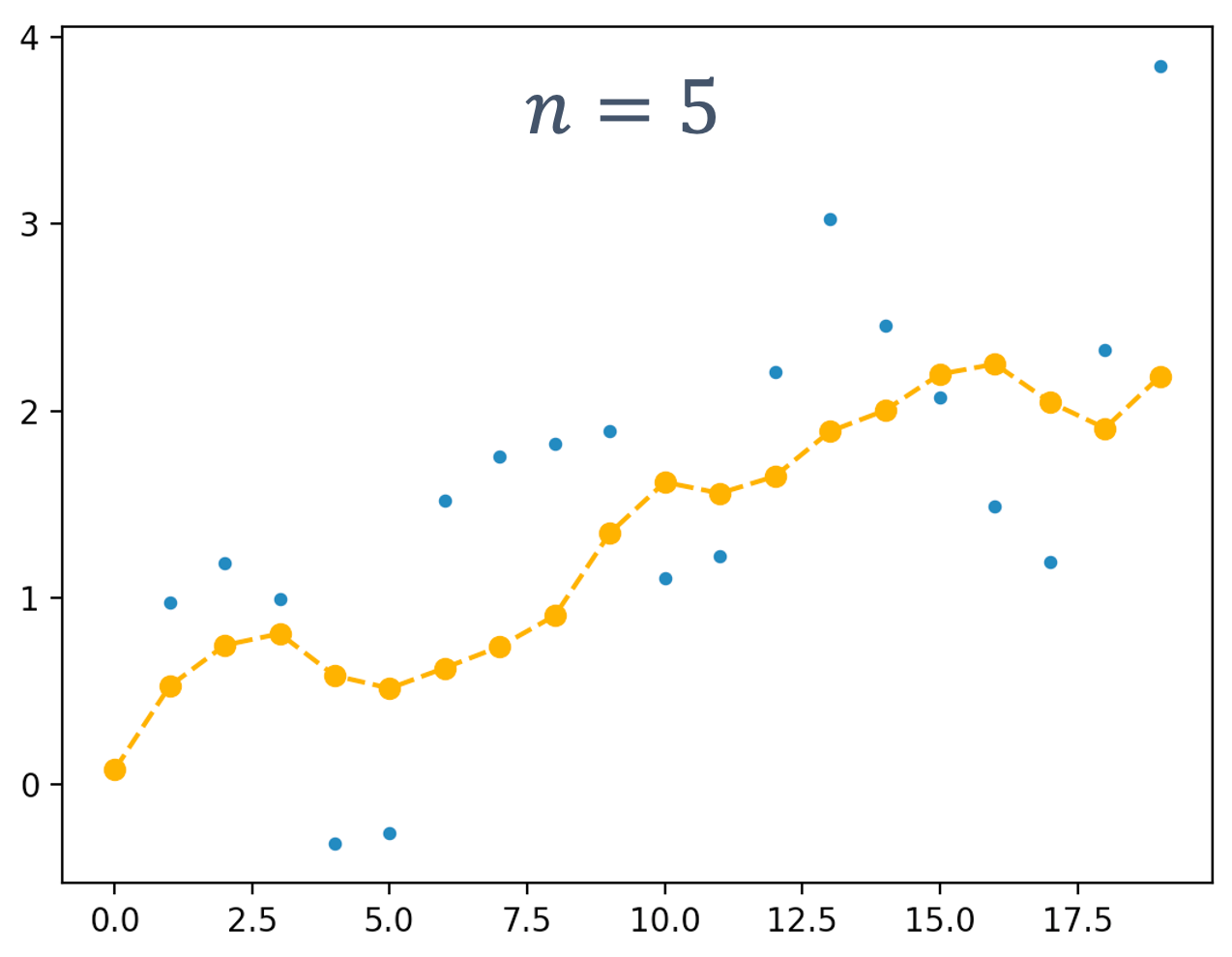

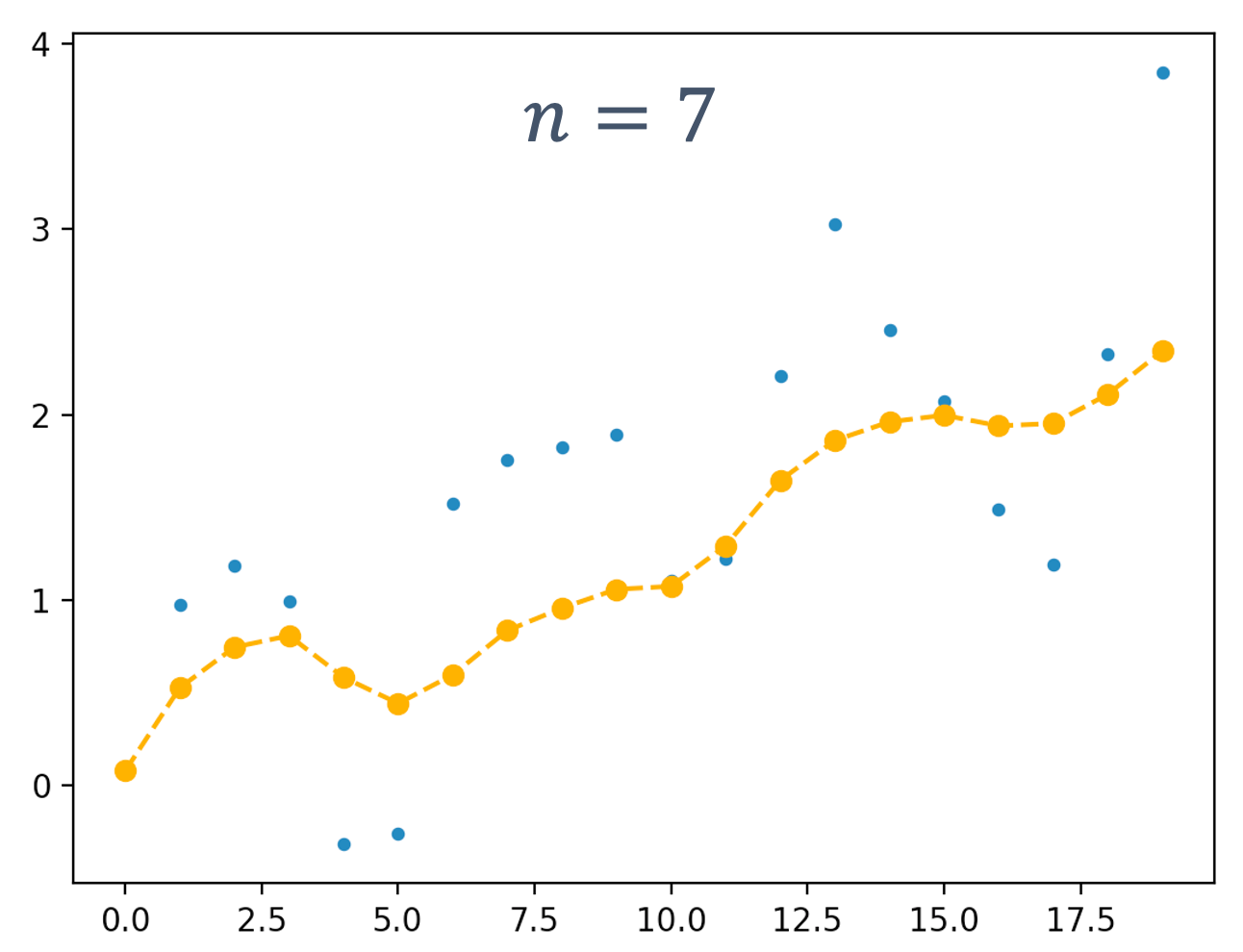

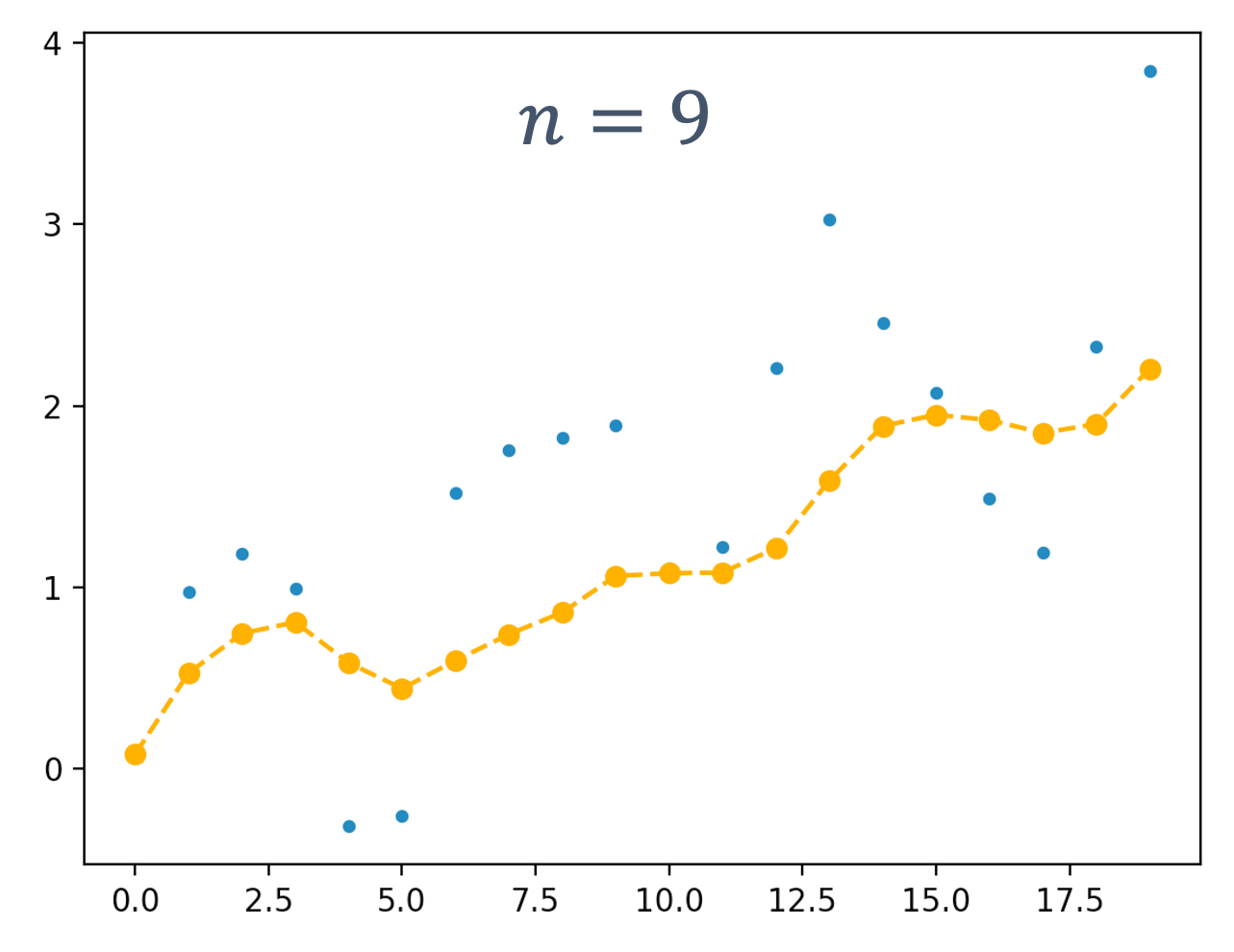

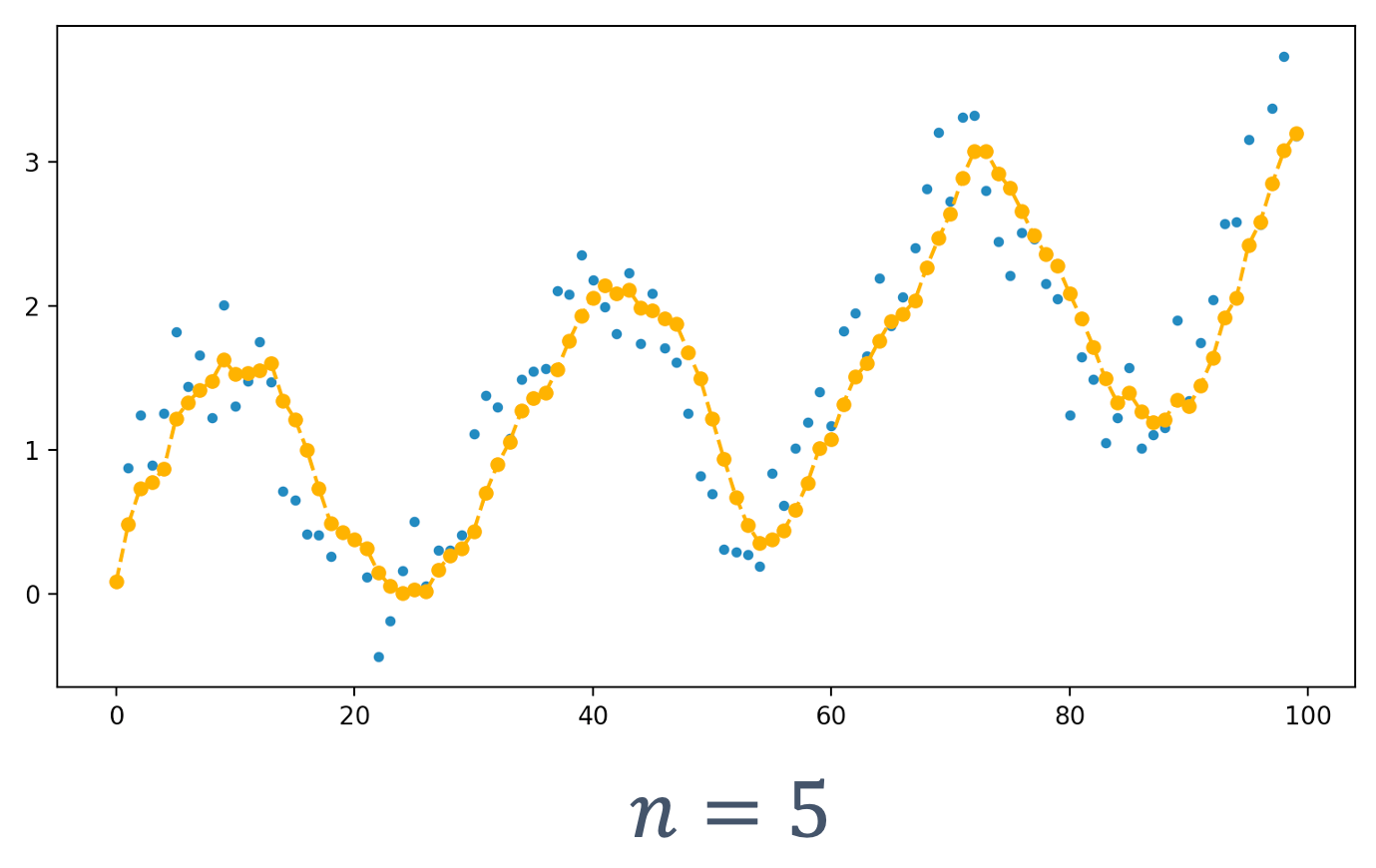

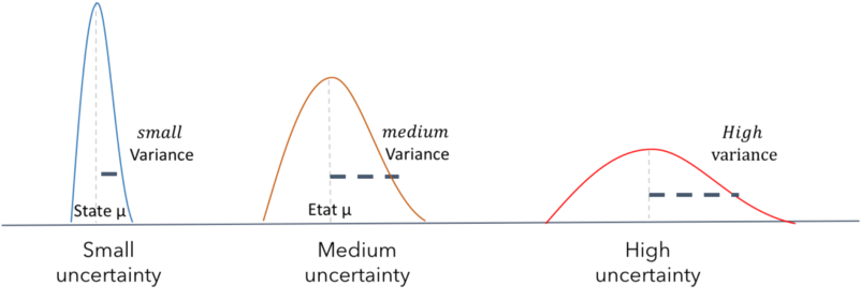

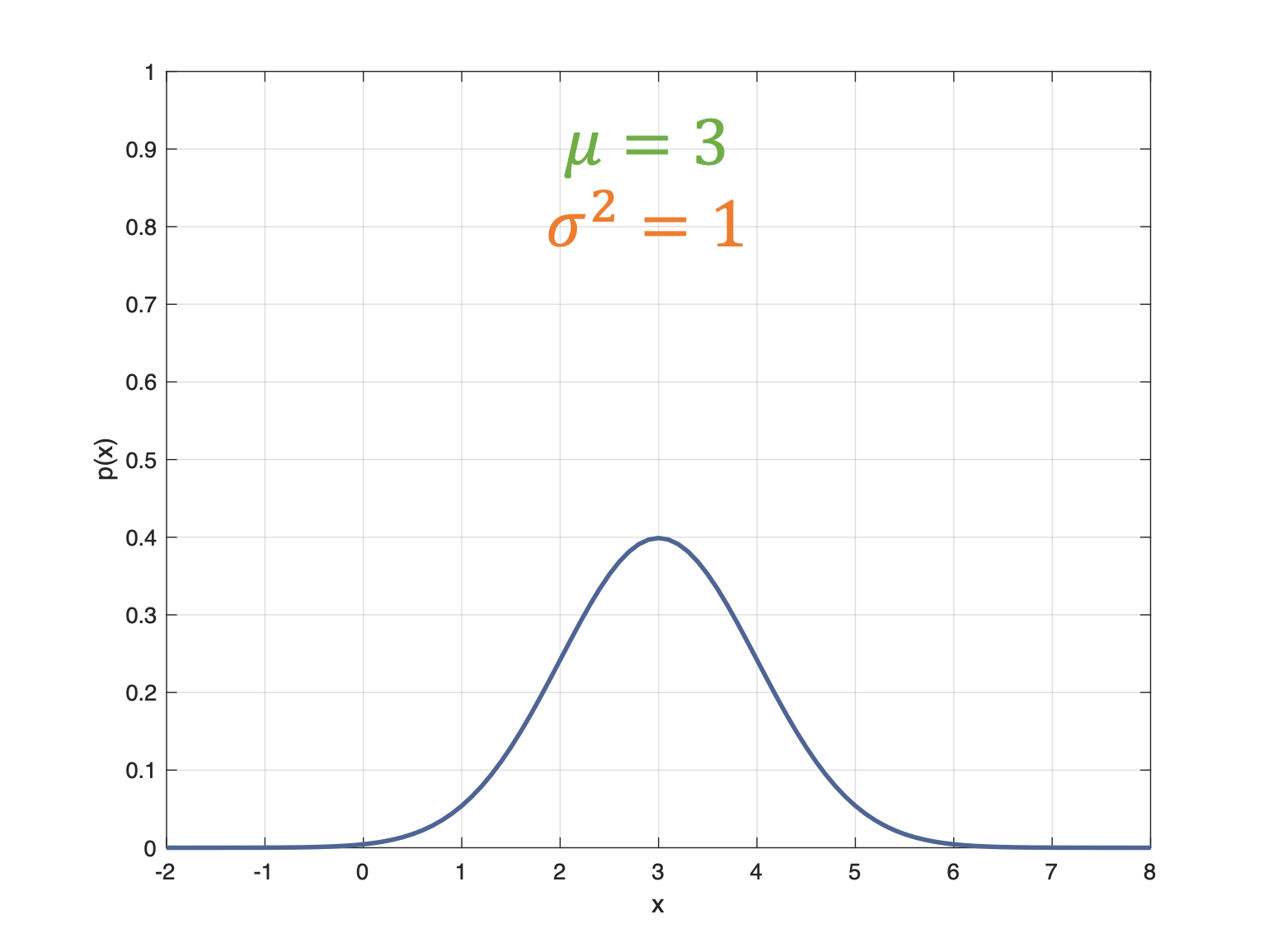

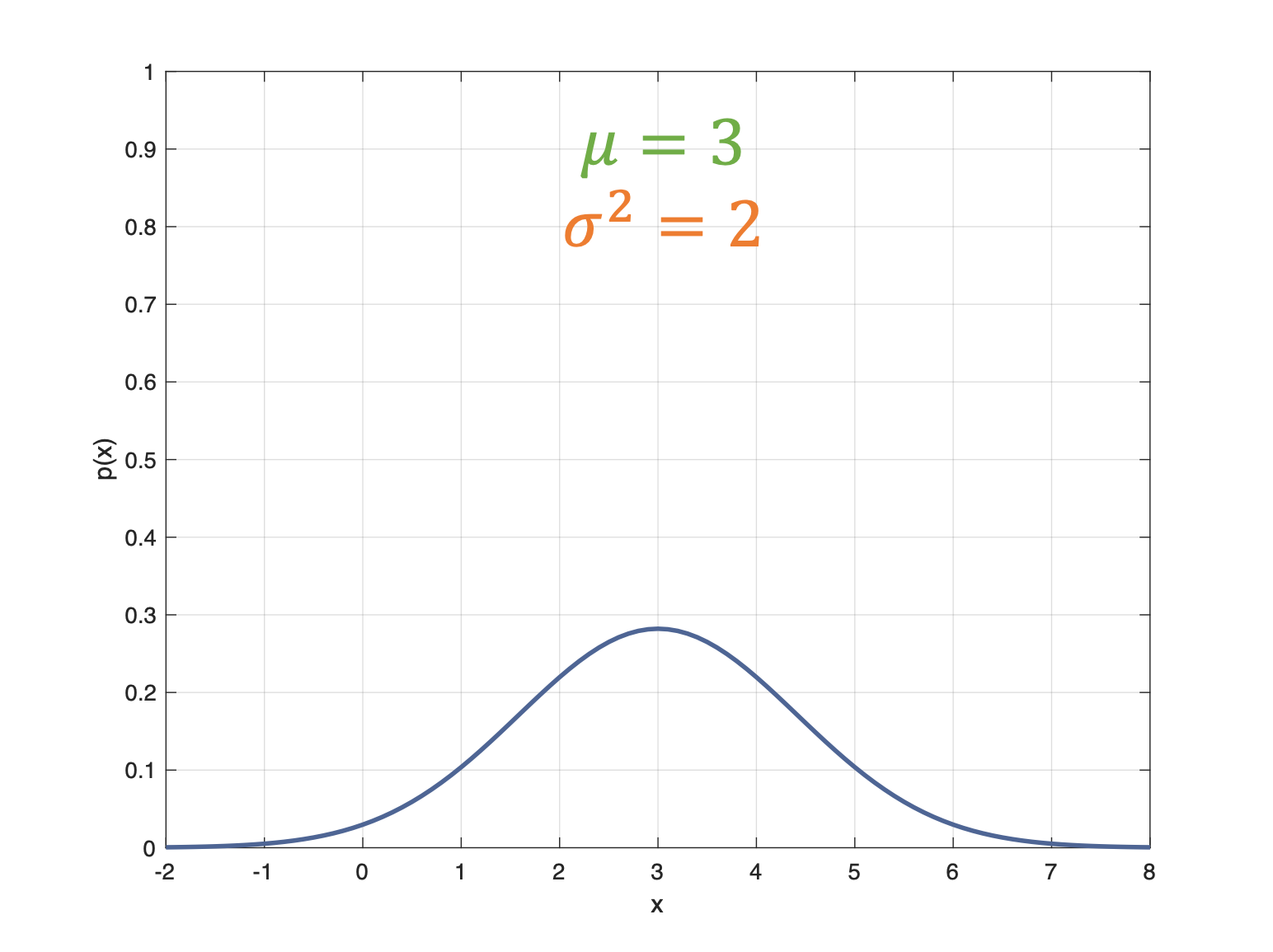

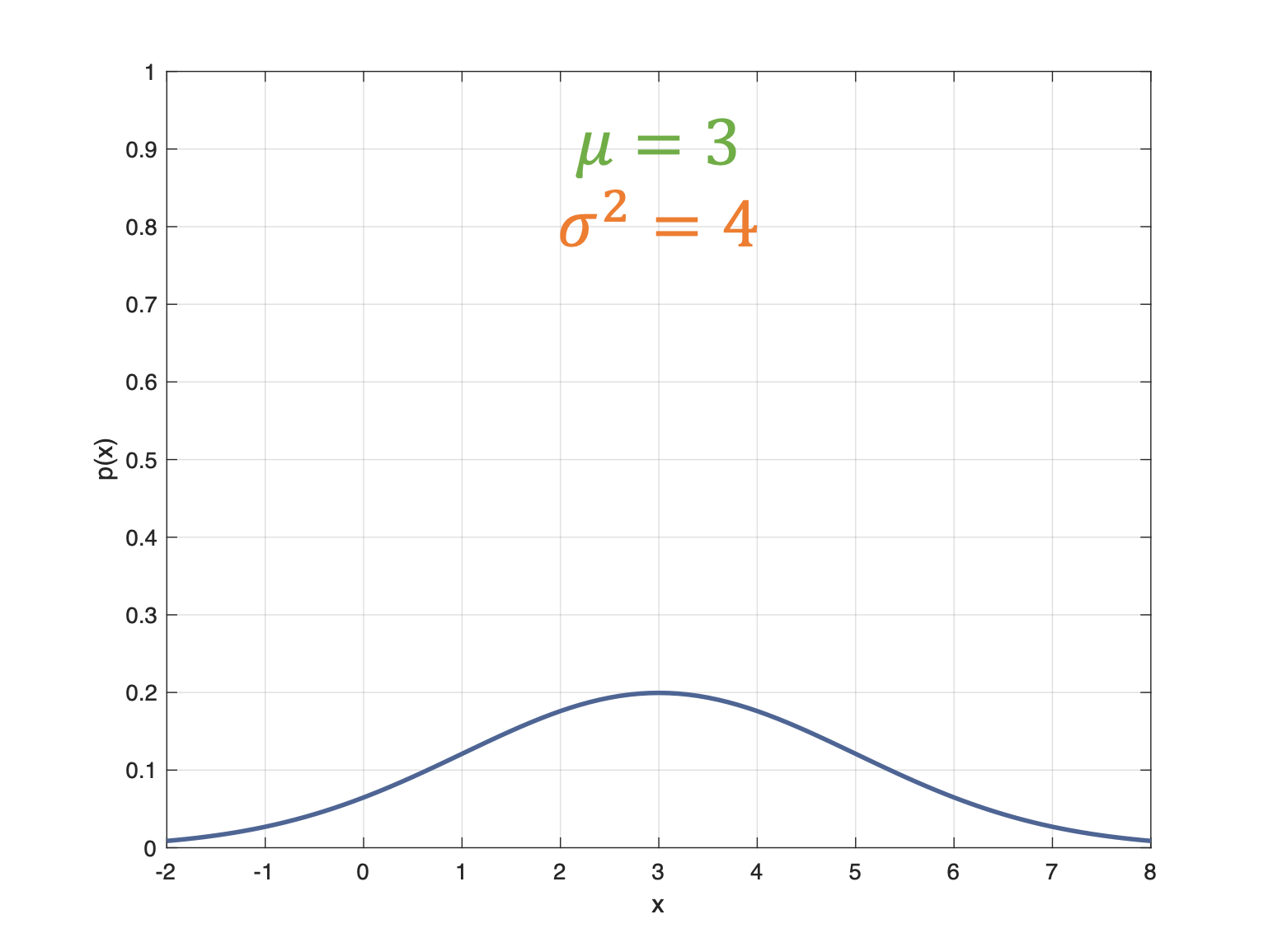

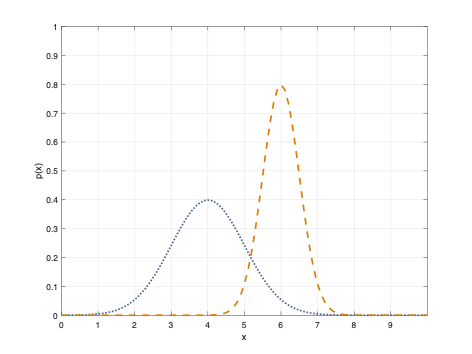

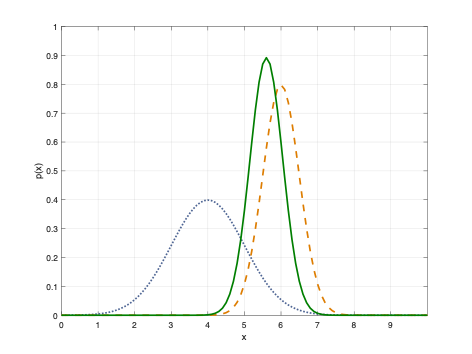

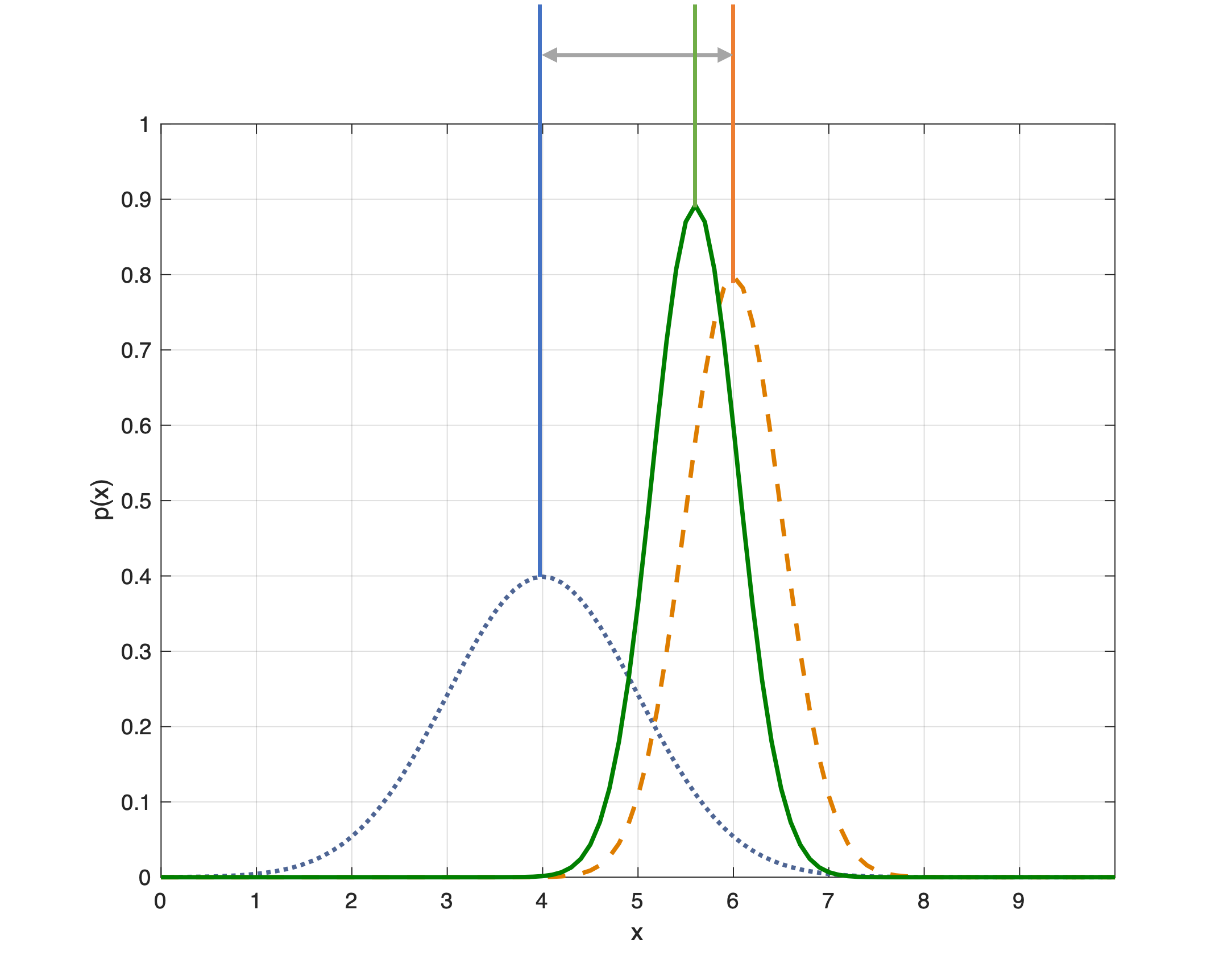

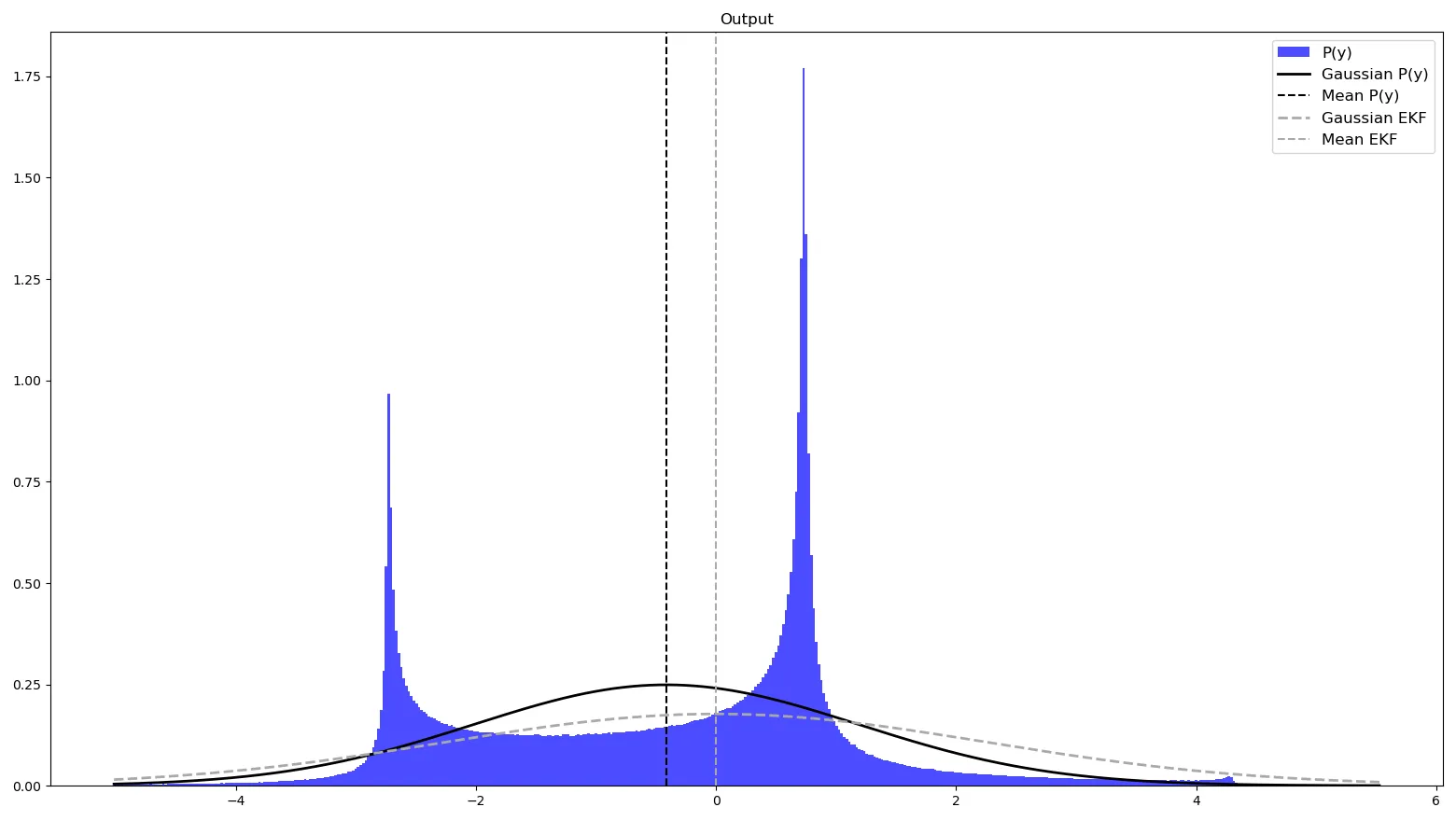

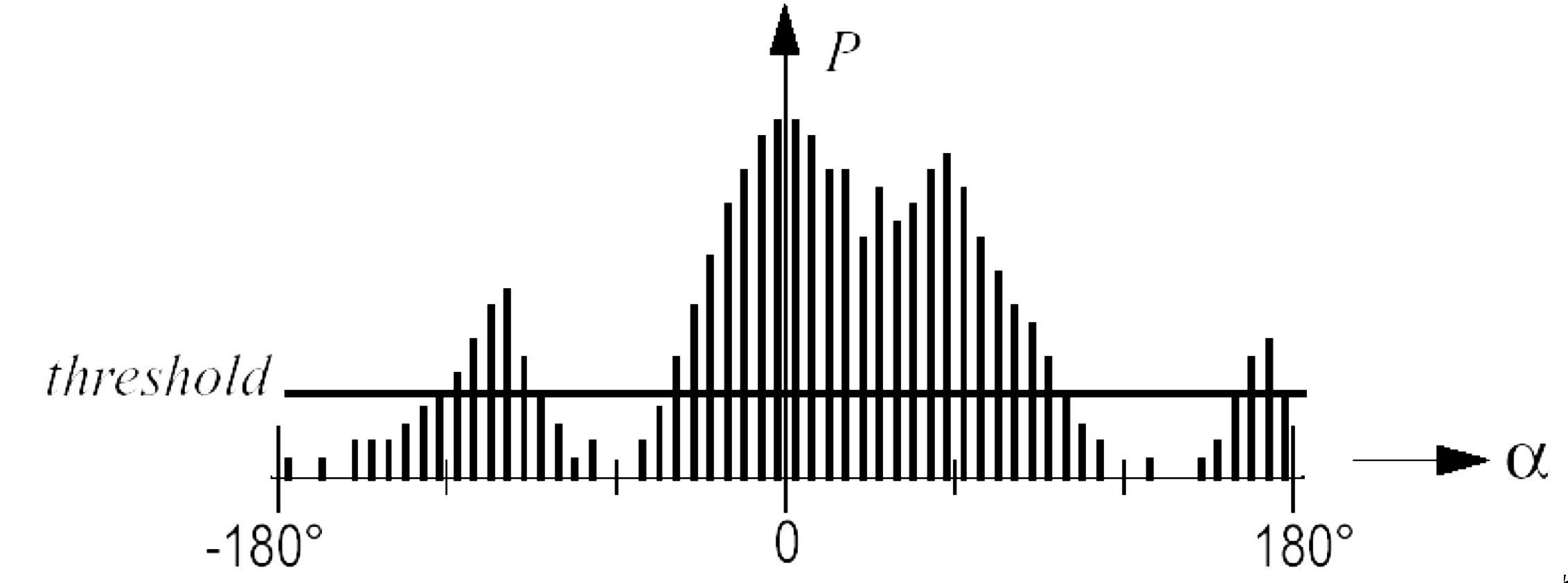

At a high-level, the execution time distributions of applications look like:

WCET analysis is a very active area of research and hundreds of papers have been written about it, since it directly affects the safety of many critical systems (aircraft, power systems, nuclear reactors, space vehicles and…autonomous systems).

There are structural challenges (both in software and hardware) that prevent the computation of proper wcet for anything but trivial examples. For instance, consider,

void main()

{

int max = 10 ;

int sum = 0;

for( int i = 0 ; i < max ; ++i)

sum += i ;

}How do you compute the WCET for this code? Say running on some known processor, P?

Well, there’s some information we need,

- how long each instruction takes to execute on P

- how many loop iterations?

- what is the startup/cleanup times for the program on P?

Let’s assume (from the manual for P), we get the following information,

1 void main() // startup cost = 100 cycles

2 {

3 int max = 15 ; // 10 cycles

4 int sum = 0; // 10 cycles

5 for( int i = 0 ; i < max ; ++i) // 5 cycles, once

6 sum += i ; // 20 cycles each iteration

7 } // cleanup cost = 120 cyclesSo, based on this, we can calculate the total time to execute this program:

wcet = startup_cost + line_3 + line_4 + loop_startup_cost + (line_6 * max) [1]

wcet = 100 + 10 + 10 + 5 + (20 * 15)

wcet = 425 cycles

Now consider this slight change to the above code:

void main( int argc, char* argv[] )

{

int max = atoi( argv[1] ) ; // convert the command line arg to max

int sum = 0;

for( int i = 0 ; i < max ; ++i) // how many iterations?

sum += i ;

}The problem is that equation [1] above fails since we no

longer know the value of max. Hence the

program can run for any arbitrary amount of time,

depending on the given input! Note that

none of the aforemention wcet methods will

help in this case since the input can be completely

arbitrary. Hence, the structure of the software code can

affect wcet calculations.

Another problem is that of hardware (and interactions between hardware and software). Now consider if we modify the original code as,

#define VERY_LARGE_ARRAY+SIZE 1>>18

void main()

{

int first_array[VERY_LARGE_ARRAY_SIZE] ;

int second_array[VERY_LARGE_ARRAY_SIZE] ;

int sum_first = 0;

int sum_second = 0;

for( int i = 0 ; i < VERY_LARGE_ARRAY_SIZE * 2 ; ++i)

{

if( i%2 )

first_sum += first_array[i/2] ;

else

second_sum += second_array[(int)((i/2)+1)] ;

}

}Now, while we can compute, using equation [1] the wcet

from the code perspective (since we know the loop runs for

VERY_LARGE_ARRAY_SIZE * 2 iterations), there

will be significant non-obvious hardware issues, in the

cache. Each iteration is accessing a

different large array. Hence, it will load the

cache with lines from that array and in the very next

iteration the other array will be loaded, also missing

in the cache. For instance,

| iteration | operation | cache state | reason |

|---|---|---|---|

| 1 | first_array loaded |

miss | evicts whatever was previously in cache |

| 2 | second_array loaded |

miss | evicts first_array due to

lack of space |

| 3 | first_array loaded again |

miss | evicts second_array due to

lack of space |

| … | |||

Hence, this program will constantly sufffer cache misses and since caches misses (and reloads) are expensive (in terms of time), the loop’s execution time will balloon out of control! Hence, even though we fixed the code issue (upper bound on number of iterations, hardware artifacts can change the wcet calculations). So now, we need to model cache behavior for each program and data variable! This is notoriously complicated even for the simplest of programs.

Other hardware designs further complicate matters, e.g.,

- processor pipelining

- prefetching

- branch prediction

- multithreading

- multicore systems

- memory buses

- networks-on-chip

- and too many others to recount here…

Any contemporary processor design that improves performance, turns out to be bad for wcet analysis. So, the fewer (or simpler versions of) these features, the better it is for the (eventual) safety and certification of the system.

This is one of the main reasons why embedded (and especially real-time) systems prefer simpler processors (simple pipelines, fewer complex features, simpler memory/cache architectures, if any) since they’re easier to analyze. In fact, many critical systems (e.g., aircraft, cars, etc.) use older processors (often designed in the 1980s and 1990s) – even the ones beind design today!

2.2 Embedded Processors

Just as embedded systems are varied, embedded processors come in a myriad of shapes and sizes as well. From the very small and simple (e.g., DSPs) to the very large and complex (modern multicore chips, some with GPUs!). Here is a (non-exhaustive) list of the types of embedded processors/architectures in use today:

- Microcontrollers

- Digital Signal Processors (DSPs)

- Microprocessors of various designs and architectures (e.g., ARM, x86)

- System-on-a-Chip (SoC)

- Embedded accelerators

- ASICs and FPGAs

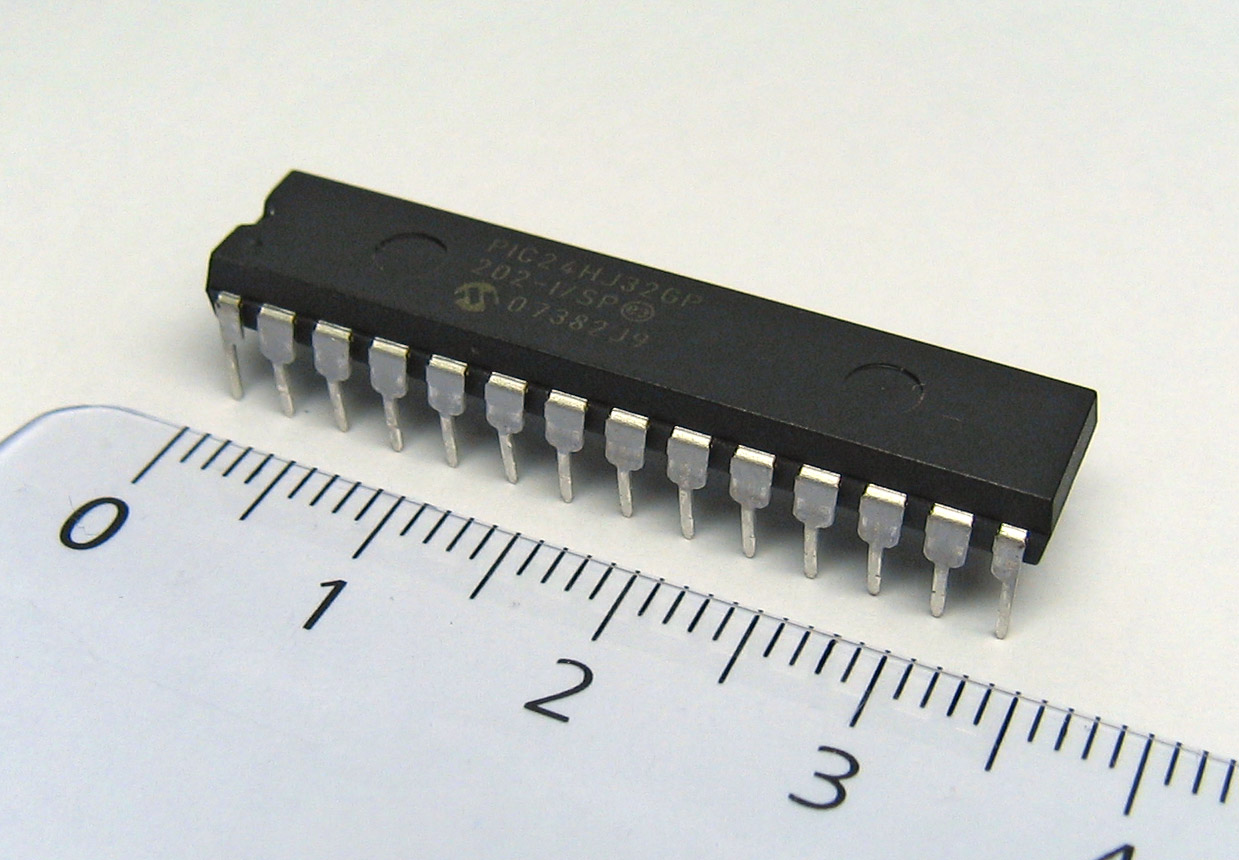

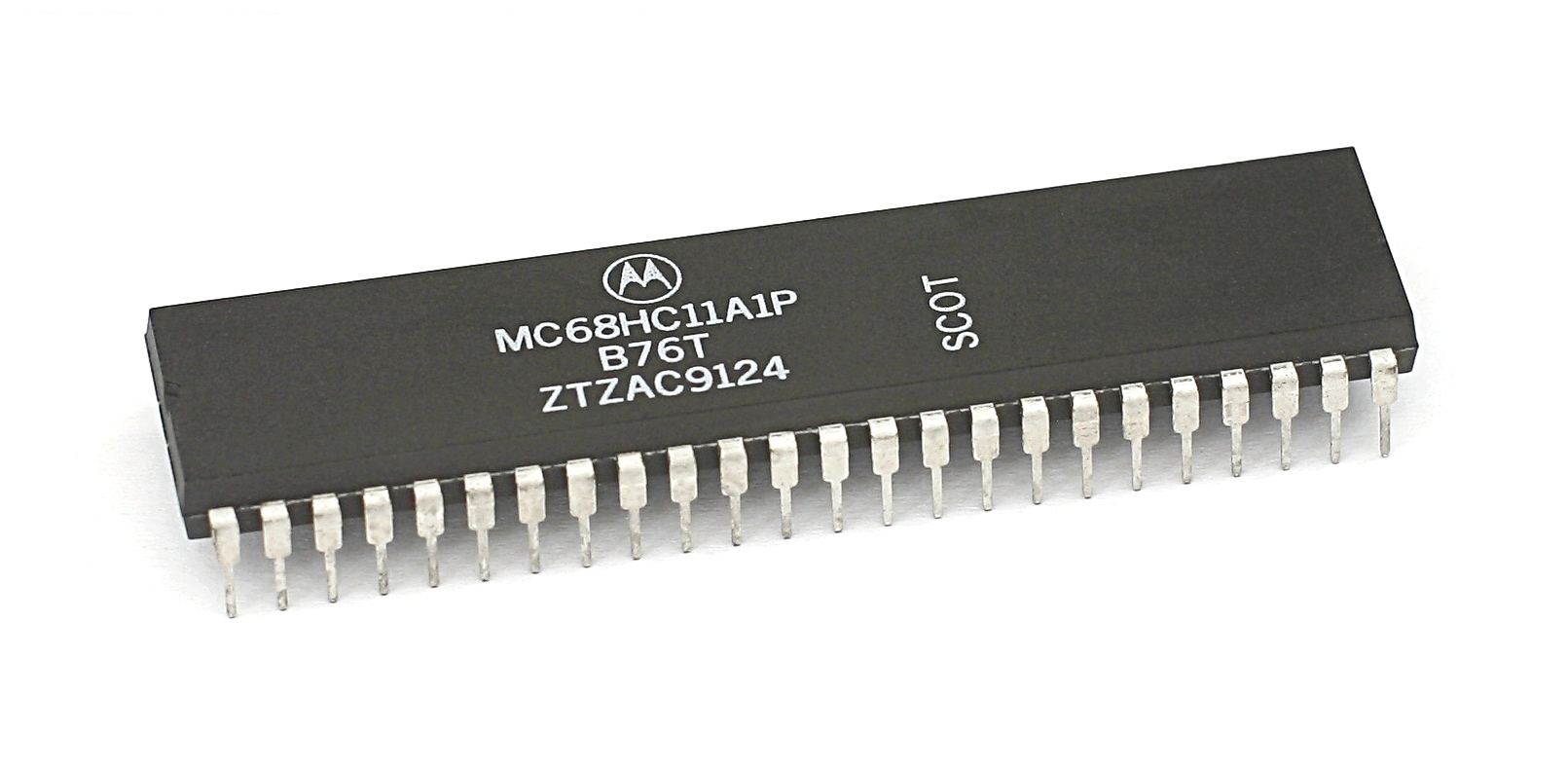

2.2.1 Microcontrollers

According to Wikipedia,

“A microcontroller (MC, UC, or μC) or microcontroller unit (MCU) is a small computer on a single integrated circuit.”

These may be among the most common type of “processors” used in embedded systems. According to many studies, more than 55% of the world’s processors are microntrollers! Microcontrollers are typically used in small, yet critical, systems such as car engine control, implantable medical devices, thermal monitoring, fault detection and classification among millions of other applications.

Microcontrollers hardware features typically include,

| component | details |

|---|---|

| one (sometimes more) CPU cores | typically simple 4 or 8 bit

chips |

| small pipelined architectues | sometimes 2 or 4 stage

pipelines |

| some limited memory | typically a few hundred kilobytes, perhaps in the form of EEPROMs or FLASH |

| some programmable I/O | to interact with the real world |

| low operating frequencies | e.g., 4 KHz; simpler/older processors, yet

more predictable |

| low power consumption | in the milliwatts or microwatts ranges; might even be nanowatts when the system is sleeping |

| interrupts (some programmable) | often real-time (ficed/low latency) |

| several general-purpose I/O (GPIO) pins | for I/O |

| timers | e.g., a programmable interval timer (PIT) |

There are some additional features found on some microcontrollers, viz.,

| component | details |

|---|---|

| analog to digital (ADC) signal convertors | to convert incoming (real-world, sensor) data to a digital form that the uC can operate on |

| digital-to-analog (DAC) convertor | to do the opposite, convert from digital to analog signals to send outputs in that form |

| universal asynchronous transmitter/receiver (UART) | to receive/send data over a serial line |

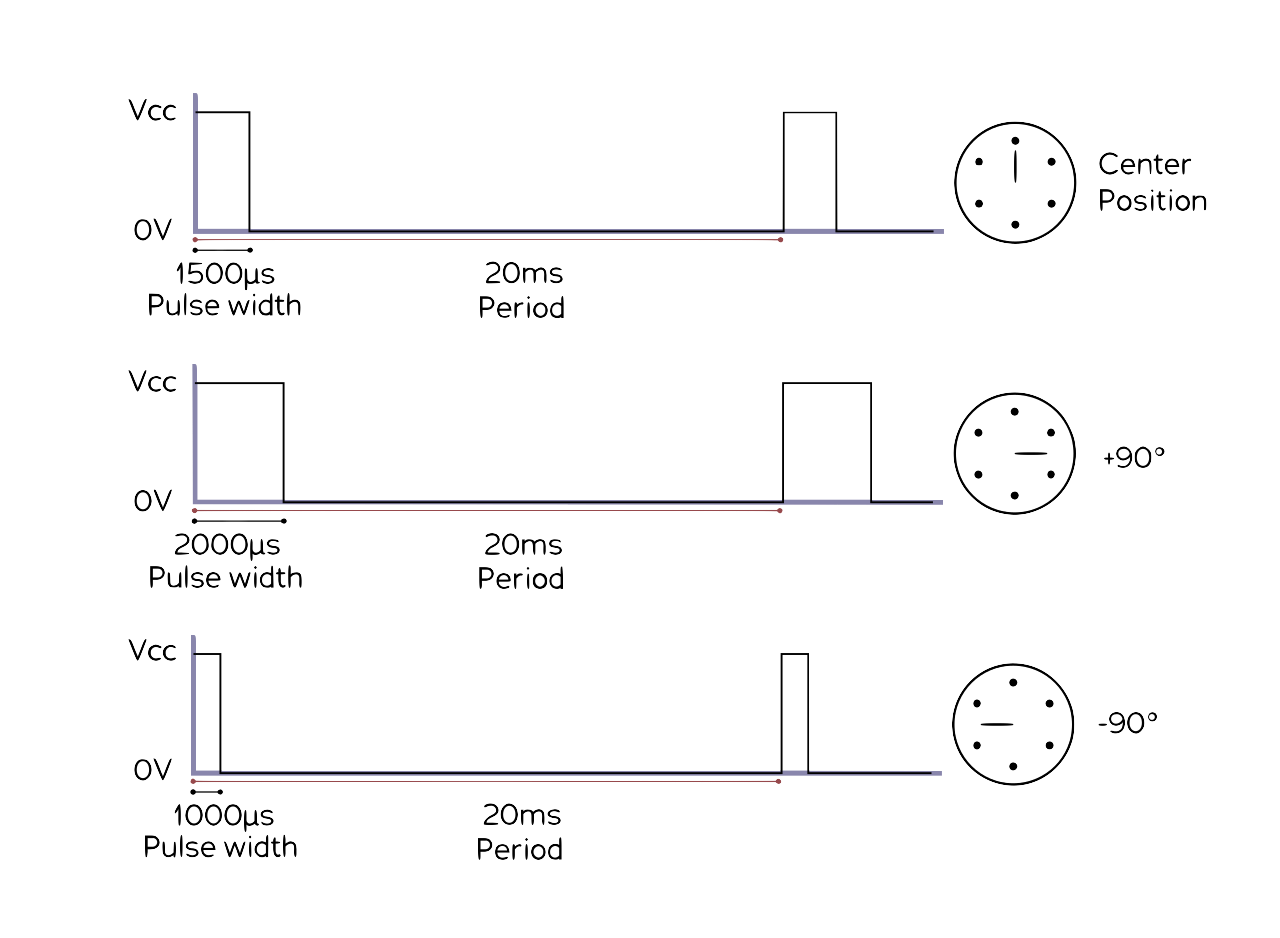

| pulse width modulation (PWM) | so that the CPU can control motors (significant for us in autonomous/automotive systems), power systems, resistive loads, etc. |

| JTAG interace | debugging interface |

Some examples of popular microcontroller families:

Atmel ATmega |

Microchip Technology |

Motorola (Freescale) |

NXP |

Microcontroller programs and data,

- are small –> must fit in memory (since very little expandable memory exists)

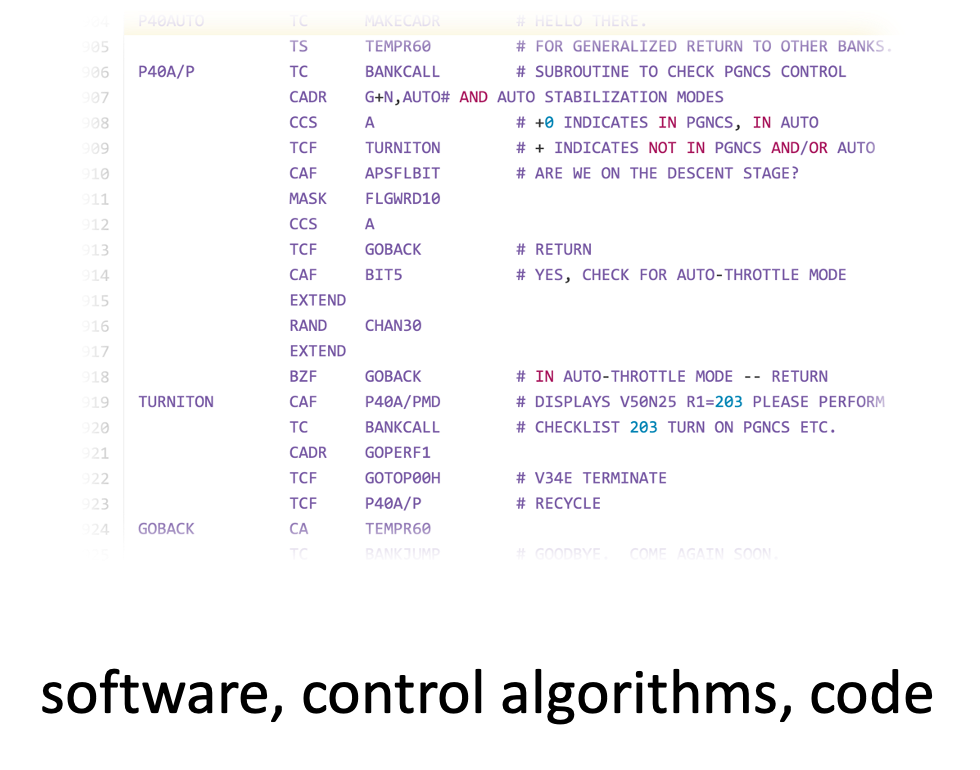

- often directly programmed in assembly!

- sometimes the assembly code might need hand tuning –> for both, performance as well as fitting into the limited memory

- C is another popular language

- no operating systems (or very rare)!

- sometimes have their own special-purpose programming languages or instructions

2.2.2 Digital Signal Processors (DSPs)

DSPs are specialized microcontrollers optimized for digital signal processing. They find wide use in audio processing, radar and sonar, speech recognition systems, image processing, satellites, telecommunications, mobile phones, televisions, etc. Their main goals are to isoloate, measure, compress and filter analog signals in the real world. They often have stringent real-time constraints.

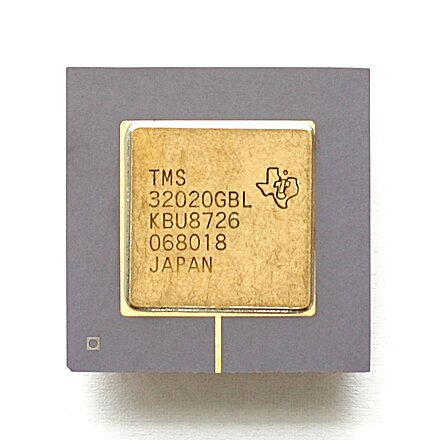

The Texas Instruments DSP chip, TMS320 Series is one of the most famous example of this type of system:

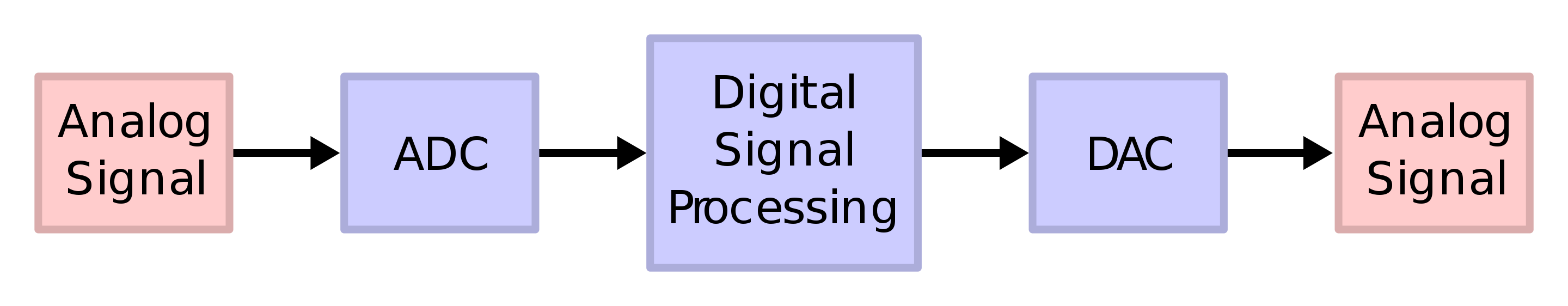

Typical digital signal processing (of any kind) requires repetitive mathematical operations over a large number of samples, in real-time, viz., - analog to digital conversion - maniupulation (the core algorithm) - digital to analog conversion

Often, the entire process must be completed with low latency, even within a fixed deadline. They also have low power requirements since DSPs are often used in battery-constrained devices such as mobile phones. Hence, the proliferation of specialized DSP chips (instead of pure software implementations, which also exist; MATLAB has an entire DSP System Toolbox).

Typical DSP architecture/flow (credit: Wikipedia):

These types of chips typically have custom instructions

for optimizing certain (mathematical) operations (apart from

the typical add, subtract,

multiply and divide), e.g., -

saturate; caps the minimum or maximum value

that can be held in a fixed-point representation -

ed ; euclidian distance -

accumulate instructions ; for multiply-and-accumulate

operations, i.e., a ← a + (b * c)

See the Microchip instruction set details for more information for a typical DSP ISA.

DSPs require optimization of streaming data and hence, - require optimized memories and caches → fetch multiple data elements at the same time - code may need to be aware of, and explicitly manipulate caches - may have rudimentary OS but no virtual memory

2.2.3 Microprocessors

Microprocessors are, then, general-purpose chips (as opposed to microcontrollers and DSPs) that are also used extensively in embedded systems. They are used in systems that need more heavy duty computing/memory and/or more flexibility in terms of programming and management of the system. They use a number of commodity processor architectures (e.g,, ARM, Intel x86).

Main features of microprocessors:

| component | details |

|---|---|

| cores | single or multicore; powerful |

| pipelines | more complex pipelines; better performance, harder to analyze (e.g., wcet) |

| clock speeds | higher clock speeds; 100s of khz, or even

GHz |

| ISA | common ISA; well understood, not custom |

| memory | significant memory; megabytes, even gigabytes |

| cache hierarchies | multiple levels, optimized |

| power consumption | much higher, but can be reduced (e.g., via voltage and frequency scaling) |

| size, cost | often higher |

| interrupts, timers | more varied, easily programmable |

| I/O | more interfaces, including commodity ones like USB |

| security | often includes additional hardware security features, e.g., ARM TrustZone. |

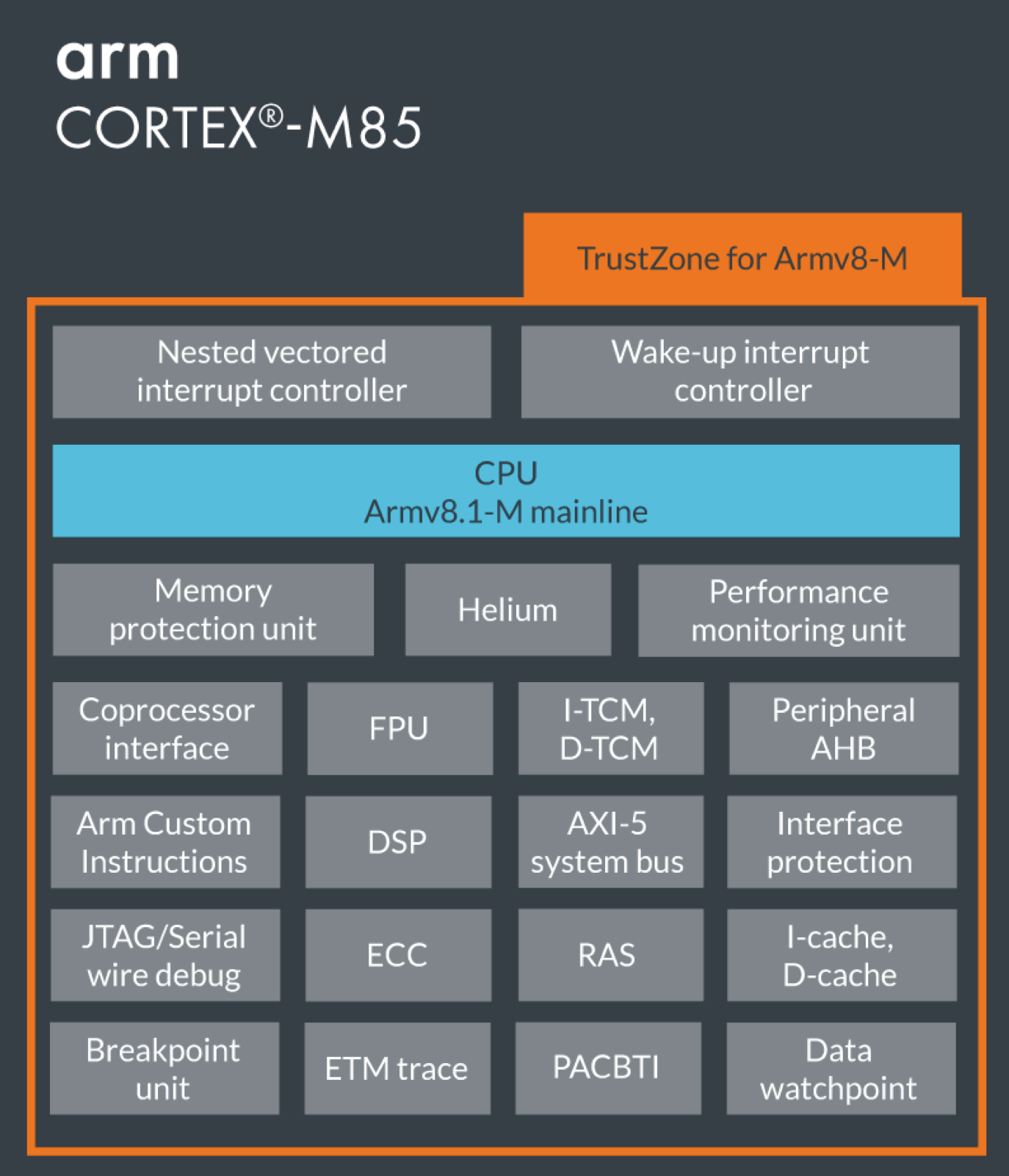

The ARM M-85 Embedded Microprocessor architecture:

When compared to microcontrollers (or even SoCs), most microprpcessors do not include components such as DSPs, ADCs, DACs, etc. It is possible to augment the microprocessor to include this functionality → usually by connecting one or more microcontrollers to it!

On the software side, microprocessors typically have the most flexibility:

- general purpose operating systems (e.g., Linux, Android, Windows, UNIX, etc.)

- most programming languages and infrastructures (even Docker!)

- large number of tooling, analysis, debugging capabilities

- complex code can run, but increases analysis difficulty

Due to their power (and cost) these types of systems are only used when really necessary or in higher-end systems such as mobile phones and autonomous cars.

2.2.4 System-on-a-Chip (SoC)

An SoC integrates most components in and around a processor into a single circuit, viz.,

- processor/chip → could be a microcontroller or even a microprocessor

- memory and memory interfaces

- I/O devices

- buses (memory and I/O)

- storage (e.g., flash) and sometimes even secondary storage

- radio modems

- (sometimes) accelerators such as GPUs

All of these are placed on a single substrate.

SoCs are often designed in C++,

MATLAB, SystemC, etc. Once the

hardware architectures are defined, additional hardware

elements are written in hardware description languages,

e.g., register transfer levels (RTL) 2.

Additional components could include,

- DAC

- ADC

- radio and signal processing

- wireless modems

- programmable logic.

- networks on chip (NoC) 3

In some sense, an SoC is an integration of a processor with peripherals. New hardware elements

Some examples of modern SoCs:

Broadcom Soc from Raspberry Pi

Apple M1 SoC

The integration of all hardware components has some interesting side-effects:

| effect | benefit | problems |

|---|---|---|

| tight integration | better performance, fewer latencies | cannot replace individual components |

| custom code/firmware | better use of hardware | not reusable in other systems |

| custom software libraries | easier programming of SoC | reduces code reusability in other systems |

| low power consumption | better battery life, less heat | (potentially) slower |

Depending on the processor/microcontroller that sits at the center of the SoC, the software stack/capabilities can vary. Many commons SoCs exhibit the following software properties:

- usually use contemporary operating systems, though optimized for embedded/SoC systems → e.g., Raspbian aka Rasberry Pi OS. Hence, they can handle multiprocessing, virtual memory, different scheduling policies, etc.

- can be programmed using most common programming

languages →

C,C++,python,java, evenlisp!

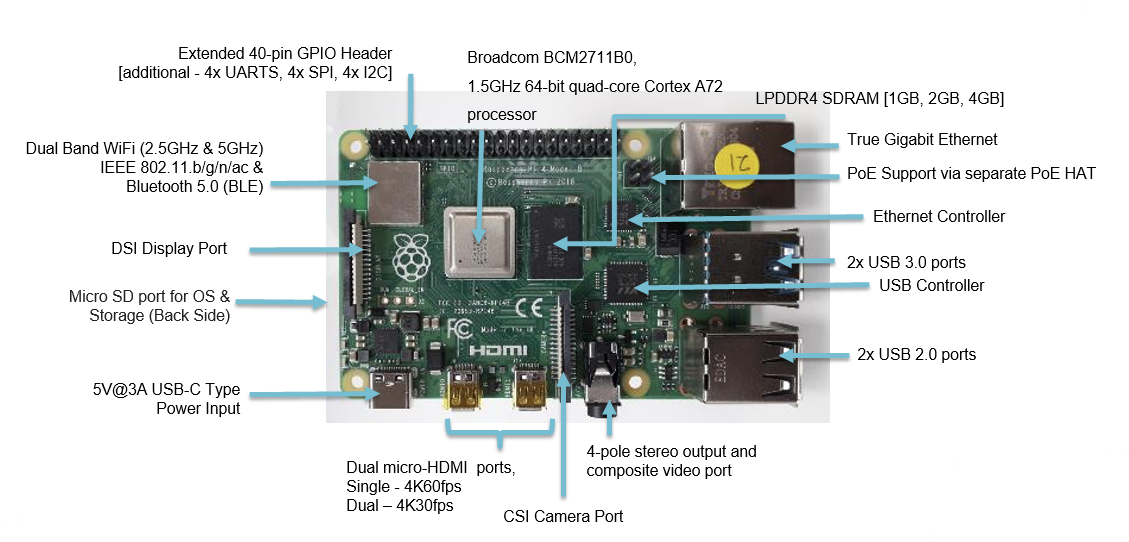

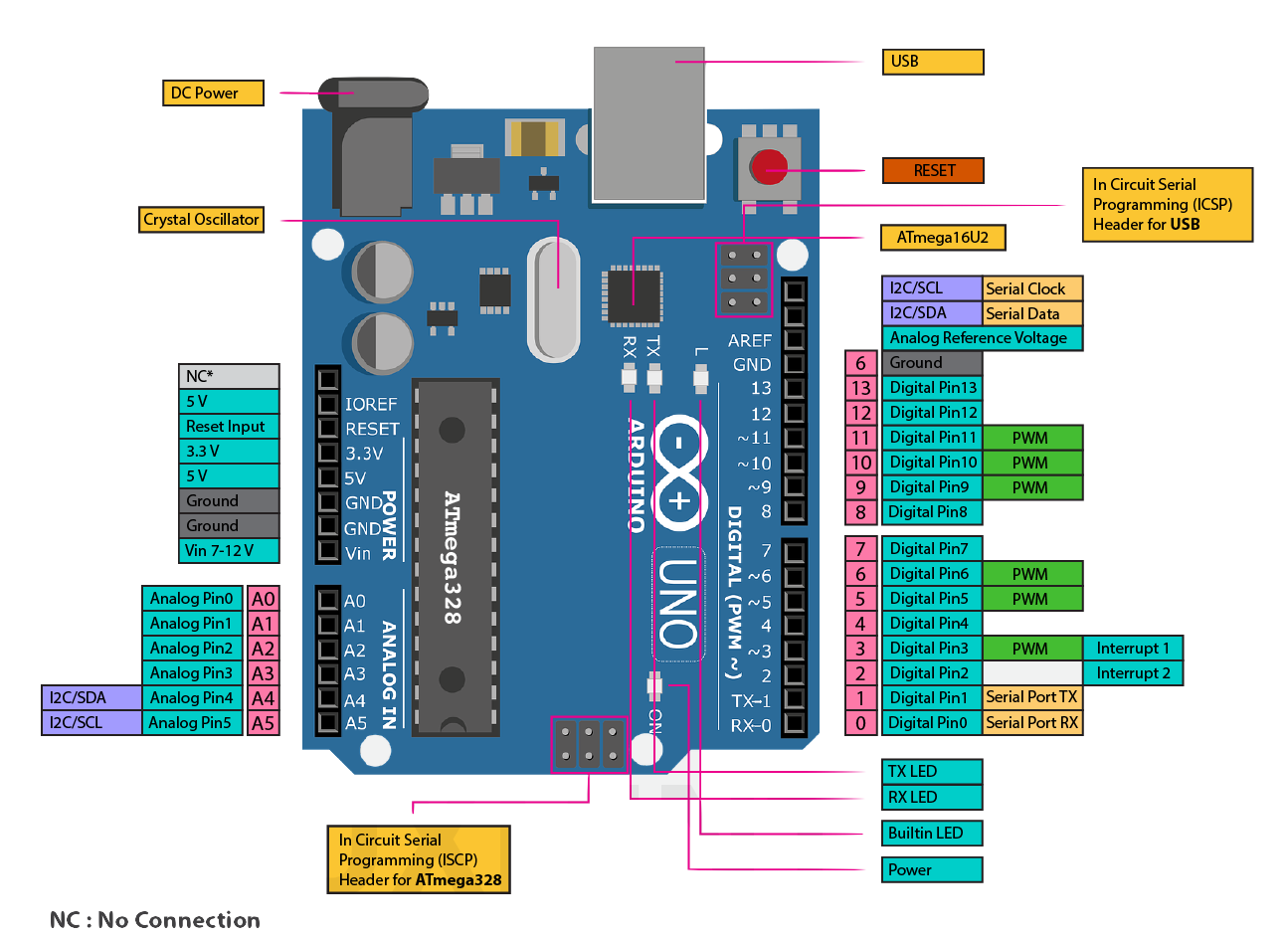

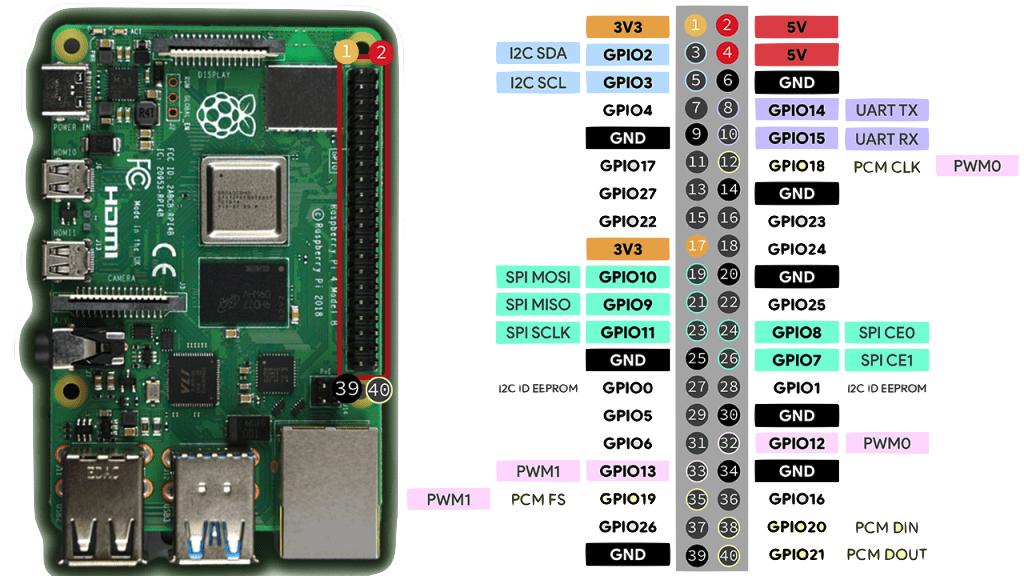

The Raspberry Pi is a common example of a system that uses a Broadcom BCM series of SoCs. We use the BCM2711 SoC in our course for the Raspberry Pi 4-B.

2.2.5 Embedded Accelarators (e.g. GPU-enabled systems)

There are hardware platforms that include accelerators in embedded systems, e.g., GPUs, AI-enabled silicon, extra programmable FPGA fabric, security features, etc. The main idea is that certain computation can be offloaded to these accelerators while the main CPU continues to process other code/requests. The accelerators are specialized for certain computations (e.g., parallel matrix multiplications on GPUs, AES encryption). Some chips include FPGA fabric where the designer/user can implement their own custom logic/accelerators.

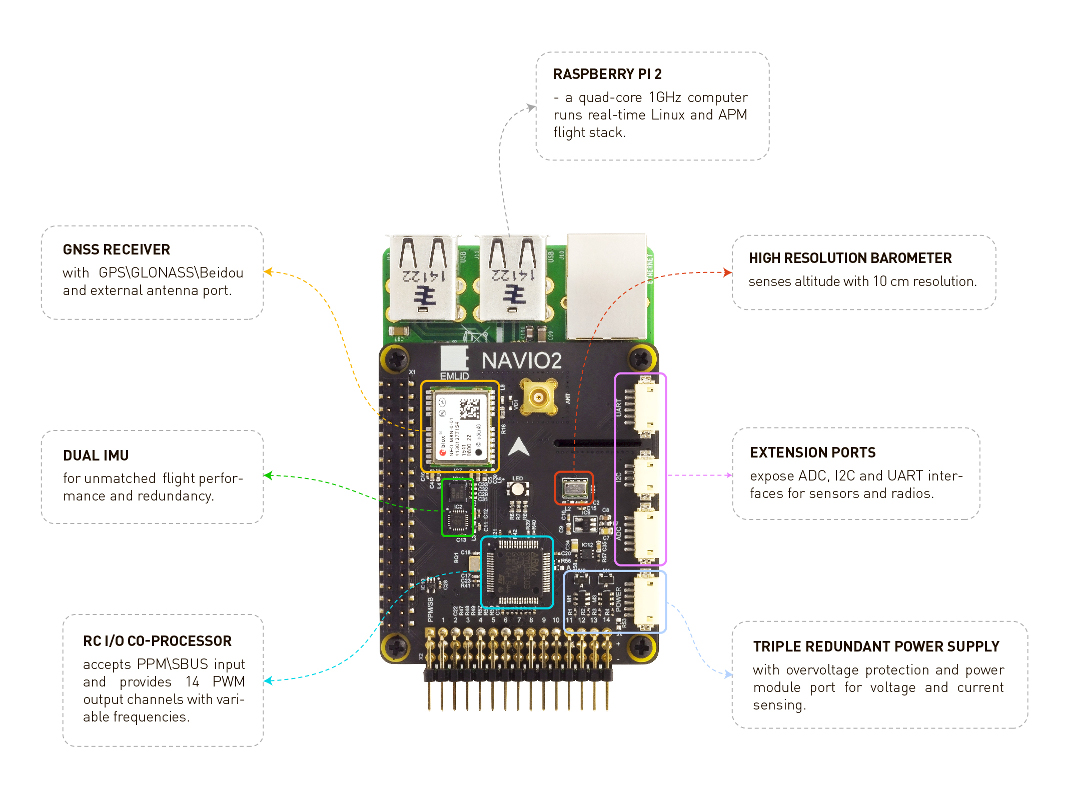

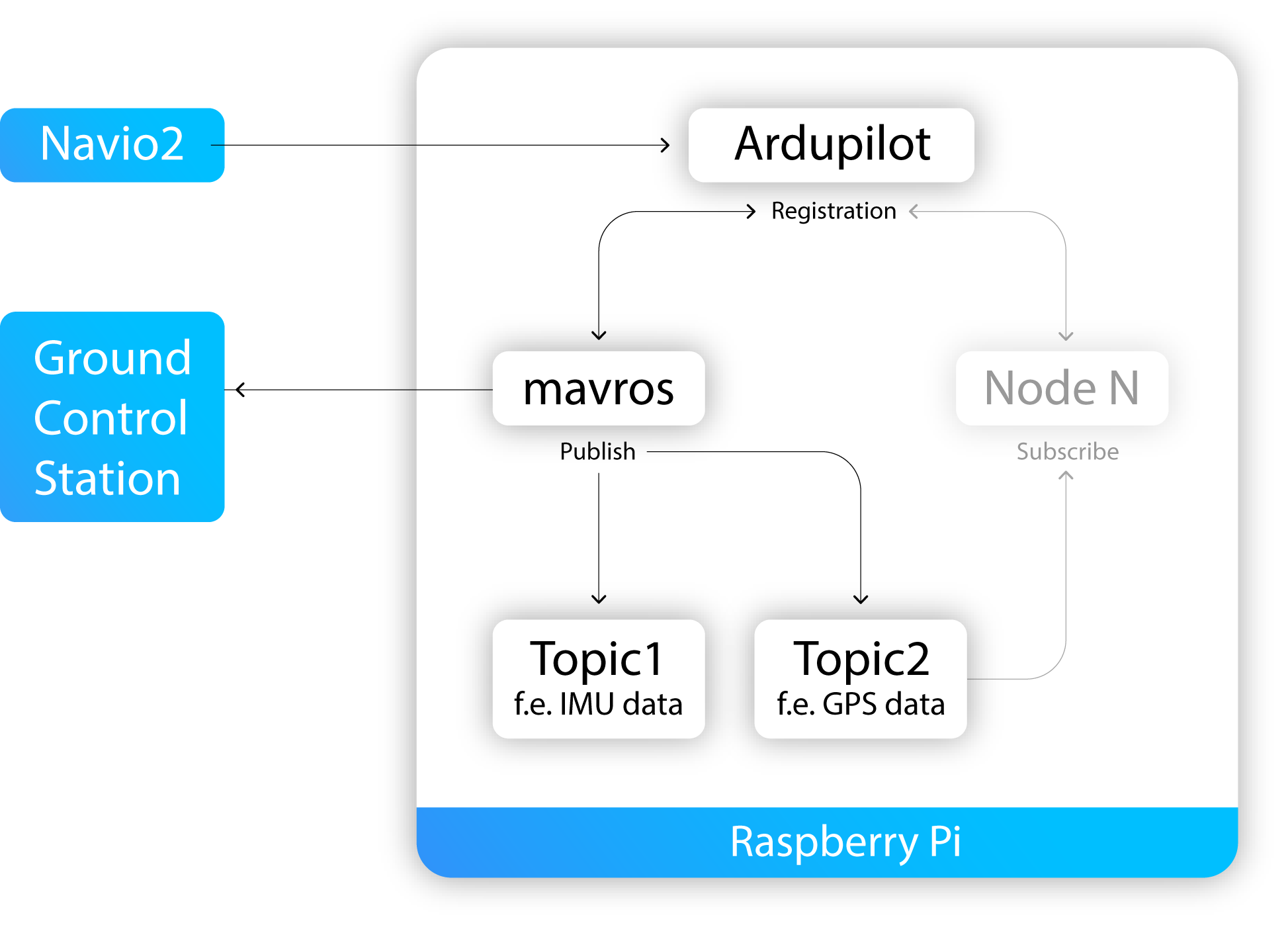

In a loose sense, the Navio2 can be considered as a hardware coprocessor for the Raspbery Pi.

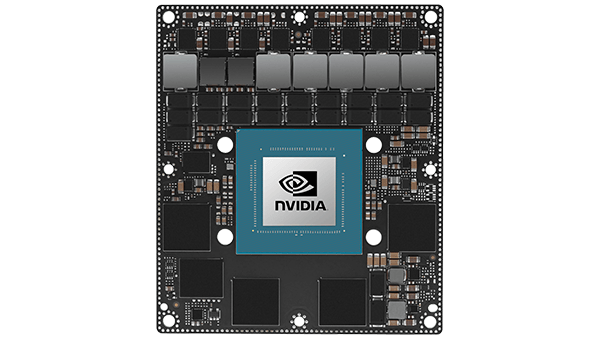

The NVidia Jetson Orin is a good example of an AI/GPU focussed embedded processor:

This system’s specifications:

- 1300 MHz clock speeds

- 64 GB Memory

- 256 bit memory bus

- 204 GB/s bandwidth

- supports a variety of graphics features (DirectX, OpenGL, OpenCL, CUDA, Vulkan and Shader Models )

- maximum of 60W power

- 275 trillion operations/s (TOPS)!

These systems are finding a lot of use in autonomous systems since they pack so much processing power into such a small form factor

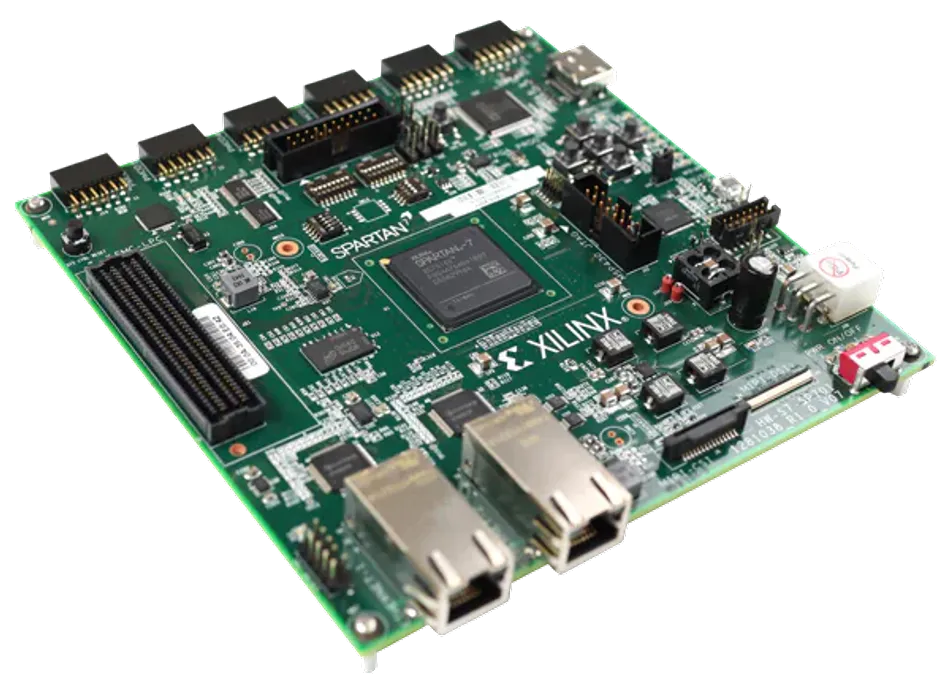

2.2.6 ASICs and FPGAs

Application-specific integrated circuits (ASICs) and field programmable gate arrays (FPGAs). These platforms combine the advantages of both, hardware (speed) and software (flexibility/programmability). They are similar, yet different. Both are semiconductor devices that include programmable logic gates but an ASIC is static – i.e., once the board has been “programmed” it cannot be changed while an FPGA, as the name implies, allows for “reprogramming”.

ASICs are custom-designed for specific applications and provide high efficiency and performance. FPGAs are reprogramamble devices that provide significant flexibility. Many designers also used it for prototyping hardware components (before they are eventually included either in the processors or custom ASICs). The choice between ASICs and FPGAs depends entirely on the application requirements and other factors such as cost.

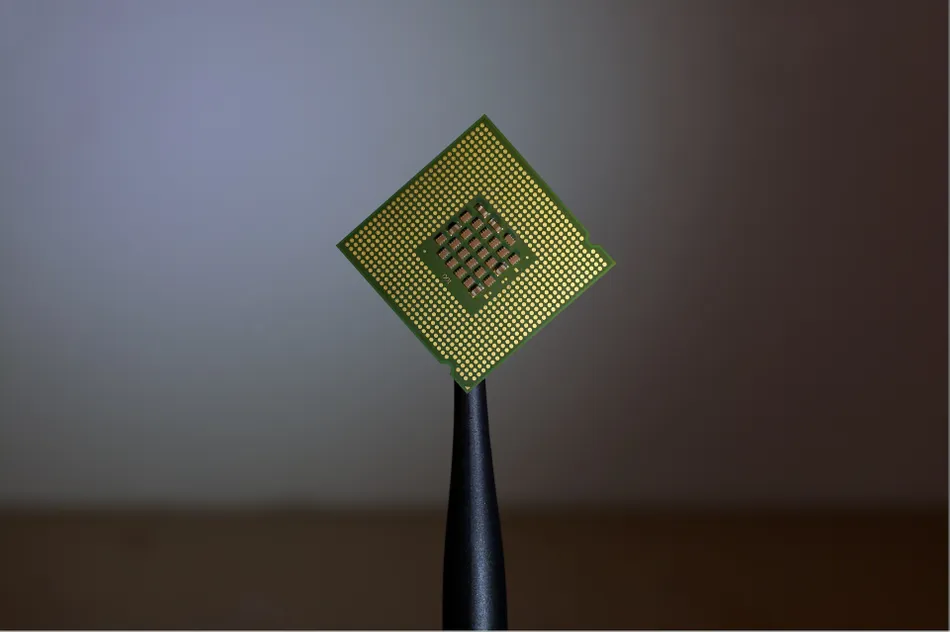

|

|

| An ASIC | Xilinx Spartan FPGA |

2.2.6.1 ASICs

These are specialized semiconductor devices – to implement a custom function, e.g., cryptocurrency mining, nuclear reactor control, televisions. ASICs are tailored to their specific applications. Once created, it cannot be reprogrammed or modified. ASICs are created using a process known as photolithography, a method to prepare nanoparticles, that allows components to be “etched” on to a silicon wafer.

The ASIC design process, while expensive and time consuming, becomes valuable for high-volume products as the per-unit cost decrease when production nunbers increase.

| advantages | disadvantages |

|---|---|

| high performance | lack of flexibility |

| low power consumption | high initial costs |

| small form factor | long development time |

| ip protection | obsolescence risk |

| good for mass production | risks with manufacturing yields |

| can integrate multiple functions | design complexity |

2.2.6.2 FPGAs

These are also semiconductor devices but they can be preprogrammed to implement various circuits and functions. Designers can change the functionality after the curcuits have been embossed onto the hardware. Hence, they’re good for systems that might require changes at design time and rapid prototyping. An FPGA is a collection of programmable logic and interconnects. They include lookup tables (LUTs) and other parts that can be used to develop multiple, fairly wide-ranging, functions. The programmable blocks can be connected to each other via the interconnects. Some FPGAs even come with additional flash memory.

FPGAs are programmed using hardware description languages such as Verilog/VHDL.

| advantages | disadvantages |

|---|---|

| flexibility | lower performance |

| shorter development time | higher power consumption |

| upgradability | high design complexity |

| lower (initial) costs | higher per-unit costs |

| better processing capabilities | design complexity |

| lower obsolescence risks | larger form factor |

2.3 Communication and I/O

Embedded systems need to communicate and/or interface with various elements:

- the physical world via sensors and actuators

- computers for programming (of the embedded system) or for data transfer

- with other embedded systems/nodes

- handheld devices

- with the internet (either public or to access back end servers)

- satellites?

Hence a large number of communication standards and I/O interfaces have been developed over the years. Let’s look at a few of them:

- serial (UART) → e.g., RS 232

- synchronous → I2C, SPI

- general-purpose I/O → GPIO

- debugging interface → JTAG

- embedded internal communication → CAN

- other broadly used protocols → USB, Ethernet/WiFi, Radio, Bluetooth

2.3.1 UART | RS-232

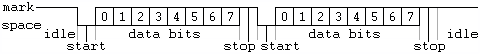

Serial communication standards are used extensively across many domains, mainly due to their simplicity and low hardware overheads. The most common among these are the asynchronous serial communication systems.

From Wikipedia:

Asynchronous serial communication is a form of serial communication in which the communicating endpoints’ interfaces are not continuously synchronized by a common clock signal. Instead of a common synchronization signal, the data stream contains synchronization information in form of start and stop signals, before and after each unit of transmission, respectively. The start signal prepares the receiver for arrival of data and the stop signal resets its state to enable triggering of a new sequence.

The following figure shows a communication sample that demonstrates these principles:

We see that each byte has a start bit,

stop bit and eight data bits. The

last bit is often used as a parity bit. All of

these “standards” (i.e., the start/stop/parity bits) must be

agreed upon ahead of time.

A universal asynchronous receiver-transmitter (UART) then is a peripheral device for such asynchronous commnication; the data format and transmission speeds are configurable. It sends data bits one-by-one (from least significant to most). The precise timing is handlded by the communication channel.

The electric signalling levels are handled by an external driver circuit. Common signal levels:

Here we will focus on the RS-232 standard since it is most widely used UART signaling level standard today. The full name of the standard is: “EIA/TIA-232-E Interface Between Data Terminal Equipment and Data Circuit-Termination Equipment Employing Serial Binary Data Interchange” (“EIA/TIA” stands for the Electronic Industry Association and the Telecommunications Industry Association). It was introduced in 1962 and has since been updated four times to meet evolving needs.

The RS-232 is a complete standard in that it specifies,

- (common) voltage and signal levels

- (common) pin and wiring configurations

- (minimal) control information between host/peripherals

The RS-232 specifies the electrical, functional and mechanical characteristics to meet all of the above criteria.

For instance, the electrical characteristics are defined in the following figure:

Details:

- high level [logical

0] (aka “marking”) →+5Vto+15V(realistically+3Vto+15V) - low level [logical

1] (aka “spacing”) →-5Vto-15V(realistically-3Vto-15V)

Other properties also defined, e.g., “slew rate”, impedance, capacitive loads, etc.

The standard also defines the mechanical interfaces, i.e., the pin connector:

While the official standard calls for a 25-pin connector, it is rarely used. Instead, the 9-pin connector (shown on the right in the above figure) is in common use.

You can read more details about the standard here: RS 232

2.3.2 Synchronous | I2C and SPI

Synchronous Serial Interfaces (SSIs) are a widely used in industrial applications between a master device (e.g. controller) and a slave device (e.g. sensor). It is based on the RS-422 standards and has a high protocol efficiency as well multiple hardware implementations.

SSI properties:

- differential signalling

- simplex (i.e., unidirectional communication only)

- non-multiplexed

- point-to-point and

- uses time-outs to frame the data.

2.3.2.1 I2C

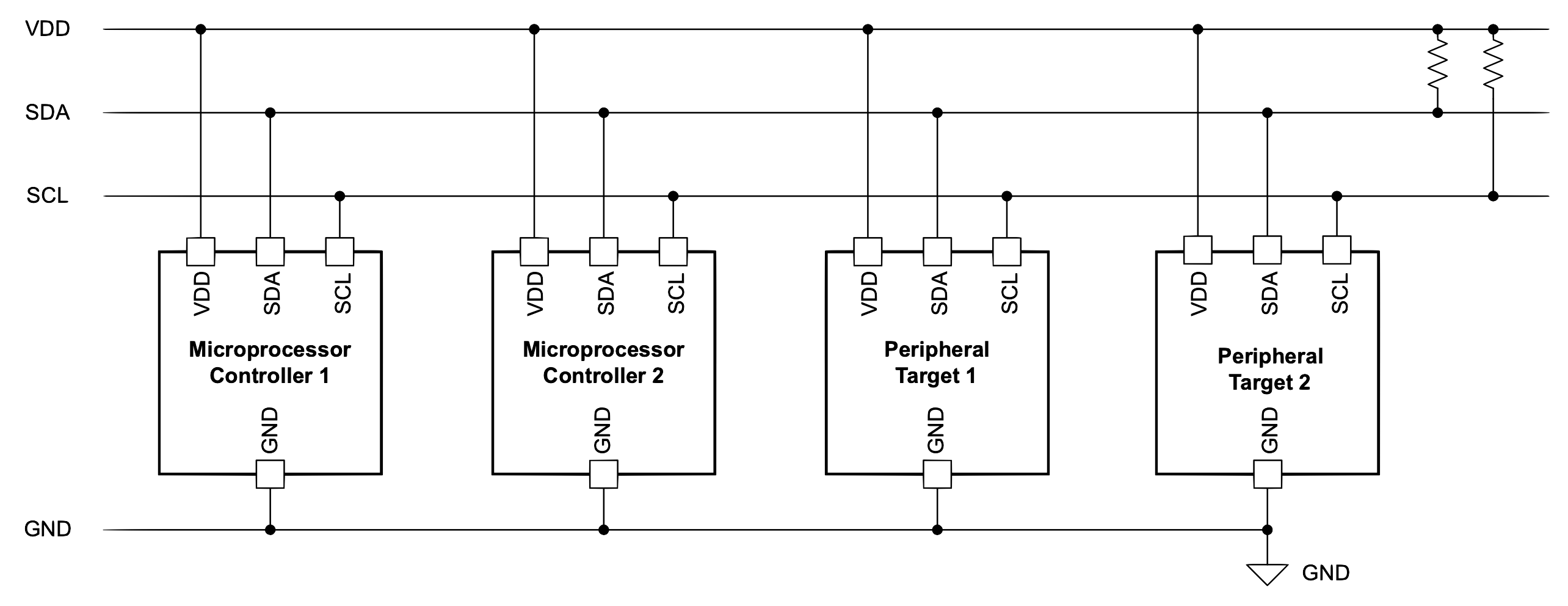

The Inter-Integrated Circuit (I2C, IIC, I2C) is a synchronous, multi-controller/multi-target (historically termed as multi-master/multi-slave), single-ended, serial communication bus. I2C systems are used for attaching low-power integrated circuits to processors and microcontrollers – usually for short distance or intra-board communication.

I2C components are found in a wide variety of products, e.g.,

- EEPROMs

- VGA/DVI/HDMI connectors

- NVRAM chips

- real-time clocks

- reading hardware monitors and sensors

- controlling actuators

- DAC/ADC

- controlling LCD/OLEDs displays

- changing computer display settings (contrast, brightness, etc.)

- controlling speaker volume

- and many many more

The main advantage of I2C is that a microcontroller can control a network of chips with just two general-purpose I/O pins (serial data line and a serial clock line) and software. A controller device can communicate with any target device through a unique I2C address sent through the serial data line. Hence the two signals are:

| line | voltage | description |

|---|---|---|

| serial data line (SDL) | +5V |

transmit data to or from target devices |

| serial clock line (SCL) | +3V |

synchronously clock data in or out of the target device |

Both are bidirectional and pulled up with resistors.

Here is a typical implementation of I2C:

An I2C chip example (used for controlling certain TV signals):

I2C is half-duplex communication where only a single controller or a target device is sending data on the bus at a time. In comparison, the serial peripheral interface (SPI) is a full-duplex protocol where data can be sent to and received back at the same time. An I2C controller device starts and stops communication, which removes the potential problem of bus contention. Communication with a target device is sent through a unique address on the bus. This allows for both multiple controllers and multiple target devices on the I2C bus.

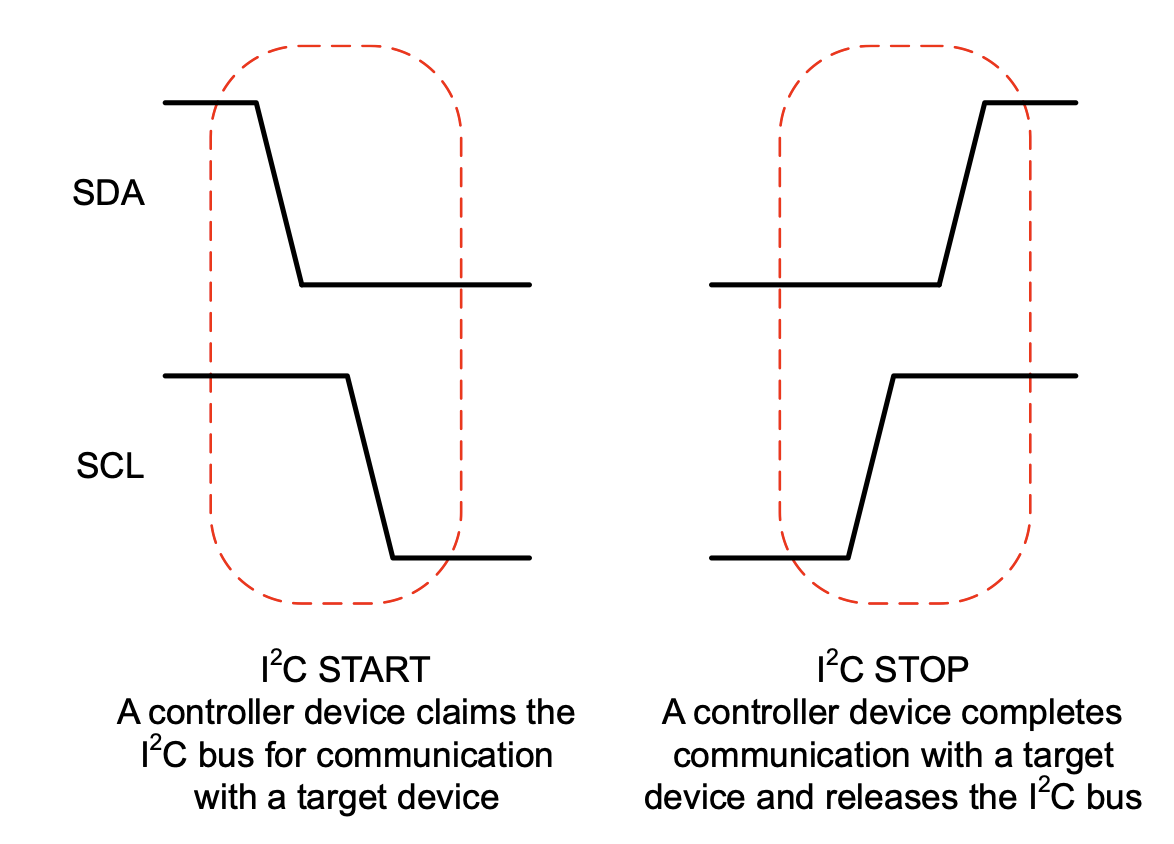

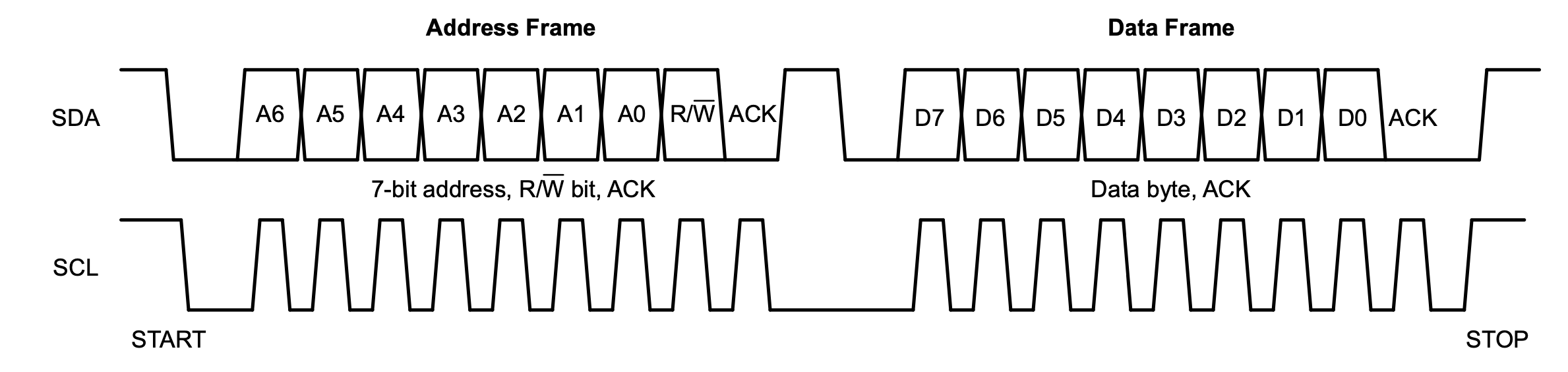

I2C communication details (initiated from the controller device):

| condition | description |

|---|---|

I2C START |

the controller device first pulls the SDA low and then pulls the SCL low |

I2C STOP |

the SCL releases high and then SDA releases high |

I2C communication is split into: frames.

Communciation starts when one controller sends an

address frame after a START. This

is followed by one or more data frames, each

consisting of one byte. Each frame also has

an acknowledgement bit. An example of two I2C

communication frames:

You can read more at: I2C.

2.3.2.2 SPI

The Serial Peripheral Interface (SPI) has become the de facto standard for synchronous serial communication. It is used in embedded systems, especially between microcontrollers and peripheral ICs such as sensors, ADCs, DACs, shift registers, SRAM, etc.

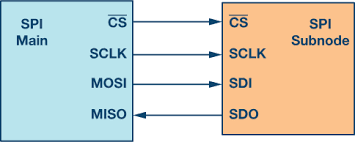

The main aspect of SPI is that one main device orchestrates communication with one ore more sub/peripheral devices by driving the clock and chip select signals.

SPI interface properties:

- synchronous

- full duplex

- main-subnode (formerly called “master-slave”)

- data from the main or the subnode is synchronized on the rising or falling clock edge

- main and subnode can transmit data at the same time

- interface can be 3 or 4-wire (4 wire version is more popular)

| microchip SPI | basic SPI Interface |

|---|---|

|

|

The SPI interface contains the following wires:

| signal | description | function |

|---|---|---|

SCLK |

serial clock | clock signal from main |

CS |

chip/serial select | To select which host to communicate with |

MOSI |

main out, subnode In | serial data out (SDO) for host to target communication |

MISO |

main in, subnode Out | serial data in (SDI) for target to host communication |

The main node generates the clock signal. Data

transmissions between main ahd sub nodes is synchronized by

that clock signal generated by main. SPI devices support

much higher clock frequencies than I2C. The

CS signal is used to select the subnode. Note

that this is an active low signal,

i.e., a low (0) is a selection and a

high (1) is a disconnect. SPI is a full-duplex

interface; both main and subnode can send data at the same

time via the MOSI and MISO lines respectively. During SPI

communication, the data is simultaneously transmitted

(shifted out serially onto the MOSI/SDO bus) and received

(the data on the bus (MISO/SDI) is sampled or read in).

Example: the following example demonstrates the significant savings and simplification in systems design (reduce the number of GPIO pins required).

Consider the ADG1412 switch being managed by a microcontroller as follows:

Now, as the number of switches increases, the requirement

on GPIO pins also increases significantly. A

4x4 configuration requires 16 GPI

pins, thus reducing the number of pins available for the

microcontroller for other tasks, as follows:

One approach to reduce the number of pins would be to use a serial-to-parallel convertor:

This reduces the pressure on the number of GPIO pins but still introduces additional circuitry.

Using an SPI-enabled microcontroller reduces the number of GPIOs required and and eliminates the overheads of the needing additional chips (serial-to-paralle convertor):

In fact, using a different SPI configuration (“daisy-chain”), we can optimize the GPIO count even further!

You can read more about SPI here.

2.3.3 General-Purpose I/O (GPIO)

A GPIO is a signal pin on an integrated circuit or board that can be used to perform digital I/O operations. By design, it has no predefined purpose → can be used by hardware/software developers to perform functions they choose, e.g.,

- GPIO pins can be enabled or disabled.

- GPIO pins can be configured to be input or output.

- input values are readable, often with a 1 representing a high voltage, and a 0 representing a low voltage.

- input GPIO pins can be used as “interrupt” lines, which allow a peripheral board connected via multiple pins to signal to the primary embedded board that it requires attention.

- output pin values are both readable and writable.

GPIOs can be implemented in a variety of ways,

- as a primary function of the microcontrollers, e.g., Intel 8255

- as an accessory to the chip

While microcontrollers may use GPIOs are their primary external interface, many a time the pins may be capable of other functions as well. In such instances, it may be necessary to configure the pins using other functions.

Some examples of chips with GPIO pins:

| Intel 8255 | PIC microchip | ASUS Tinker |

|---|---|---|

|

|

|

| 24 GPIO pins | 29 GPIO pins | 28 GPIO pins |

GPIOs are used in a diverse variety of applications, limited only by the electrical and timing specifications of the GPIO interface and the ability of software to interact with GPIOs in a sufficiently timely manner.

Some “properties”/applications of GPIOs:

- GPIOs use standard logic levels and cannot supply significant current to output loads

- high-current output buffers or relays can be used to control high-power devices

- input buffers, relays, or opto-isolators translate incompatible signals to GPIO logic levels

- GPIOs can control or monitor other circuitry on a board, such as enabling/disabling circuits, reading switch states, and driving LEDs

- multiple GPIOs can implement bit banging communication interfaces like I²C or SPI

- GPIOs can control analog processes via PWM, adjusting motor speed, light intensity, or temperature

- PWM signals from GPIOs can be converted to analog control voltages using RC filters

GPIO interfaces vary widely. Most commonly, they’re simple groups of pins that can switch between input/output. On the other hand, each pin can be set up differently → set up/accept/source different voltages/drive strengths/pull ups and downs.

Programming the GPIO:

- usually pin states are exposed via different interfaces, e.g., memory-mapped I/O peripherals or dedicated I/O port instructions

- input values can be used as interrupts (IRQs)

For more information on programming/using GPIOs, read these: GPIO setup and use, Python scripting the GPIO in Raspberry Pis, general purpose I/O, GPIO setup in Raspberry Pi.

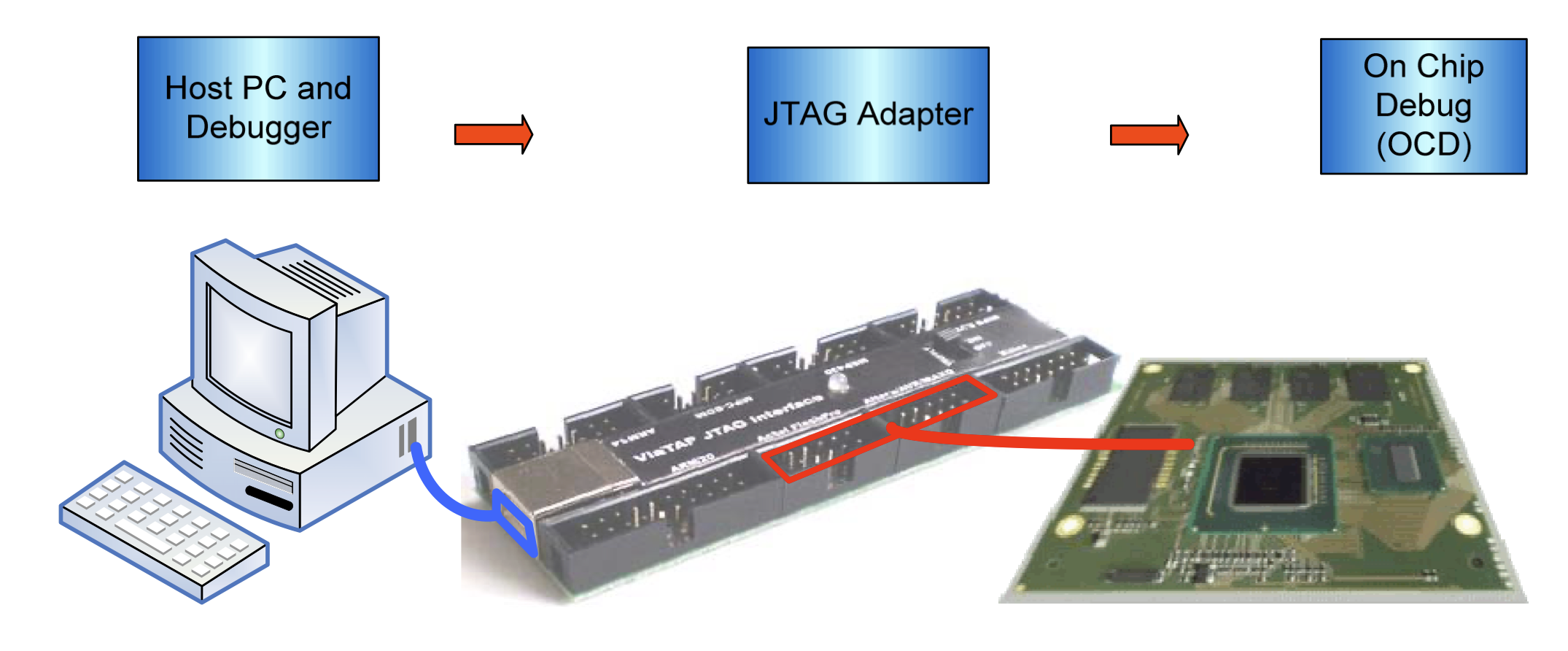

2.3.4 JTAG Debugging Interface

The JTAG standard (named after the “Joint Test Action Group”), technically the IEEE Std 1149.1-1990 IEEE Standard Test Access Port and Boundary-Scan Architecture, is an industry standard for testing and verification of printed circuit boards, after manufacture.

“JTAG”, depending on the context, could stand for one or more of the following:

- implementation of IEEE 1149.x for Board Test, or Boundary Scan testing

- appliance used to program on board flash or eeprom devices on a circuit board

- hardware device used to debug microprocessor software

- hardware device used to test a board using Boundary Scan

The basic building block of a JTAG OCD is the Test Access Point or TAP controller. This allows access to all the custom features within a specific processor, and must support a minimum set of commands. On-chip debugging is a combination of hardware and software.

| type | description |

|---|---|

| hardaware | on chip debug (OCD) |

| software | in-circuit-emulator (ICE)/JTAG emulator |

The off-chip parts are actually PC peripherals that need corresponding drivers running on a separate computer. On most systems, JTAG-based debugging is available from the very first instruction after CPU reset, letting it assist with development of early boot software which runs before anything is set up. The JTAG emulator allows developers to access the embedded system at the machine code level if needed! Many silicon architectures (Intel, ARM, PowerPC, etc.) have built entire infrastructures and extensions around JTAG.

A high-level overview of the JTAG architecture/use:

JTAG now allows for,

- processors can not be halted, single-stepped or run freely

- can set code breakpoints for both, code in RAM as well as ROM/flash

- data breakpoints are available

- bulk data download to RAM

- access to registers and buses, even without halting the processors!

- complex logic routines, e.g., ignore the first seven accesses to a register from one particular subroutine

JTAG allows for device programmer hardware allows for transfering data into internal, non-volatile memory of the system! Hence, we can use JTAGs to program devices such as FPGAs. In fact, many memory chips also have JTAG interfaces. Some modern chips also allow access to the the (internal and external) data buses via JTAG.

JTAG interface: depending on the actual interface, JTAG has 2/4/5 pins. The 4/5 pin versions are designed so that multiple chips on a board can have their JTAG lines daisy-chained together if specific conditions are met.

Schematic Diagram of a JTAG enabled device:

The various pins signals in the JTAG TAP are:

| signal | description |

|---|---|

TCK |

synchronizes the internal state machine operations |

TMS |

sampled at the rising edge of TCK to

determine the next state |

TDI |

data shifted into the device’s test or programming

logic; sampled at the rising edge of TCK when

the internal state machine is in the correct state |

TDO |

represents the data shifted out of the device’s test or

programming logic and is valid on the falling edge of

TCK when the internal state machine is in the

correct state |

TRST |

optional pin which, when available, can reset the tap controller’s state machine |

The TAP controller implements the following state machine:

To use the JTAG interface,

- host is connected to the target’s JTAG signals

(

TMS,TCK,TDI,TDO, etc.) through some kind of JTAG adapter - adapter connects to the host using some interface such as USB, PCI, Ethernet, etc.

- host communicates with the TAPs by manipulating

TMSandTDIin conjunction withTCK - host reads results through

TDO(which is the only standard host-side input) TMS/TDI/TCKoutput transitions create the basic JTAG communication primitive on which higher layer protocols build

For more information about JTAG, read: Intel JTAG Overview, Raspberry Pi JTAG programming, Technical Guide to JTAG and the JTAG Wikipedia Entry is quite detailed.

2.3.5 Controller Area Network (CAN)

CAN is a vehicle bus standard to enable efficient communication between electronic control units (ECUs). CAN is,

- broadcast-based

- message-oriented

- uses arbitration → for data integrity/prioritization

CAN does not need a a host controller. ECUs connected via the CAN bus can easily share information with each other. all ECUs are connected on a two-wire bus consisting of a twisted pair: CAN high and CAN low. The wires are often color coded:

| CAN high | yellow |

| CAN low | green |

| CAN wiring | multi-ecu CAN setup |

An ECU in a vehicle consists of:

| components | internal architecture |

|---|---|

|

|

Any ECU can broadcast on the CAN bus and the messages are accepted by all ECUs connected to it. Each ECU can either choose to ignore the message or act on it.

what are the implications for security?

While there is no “standard” CAN connector (each vehicle may use different ones), the CAN Bus DB9 connector has become the de facto standard:

The above figure shows the various pins and their signals.

CAN Communication Protocols: CAN is split into:

| layer | relation to OSI stack |

|---|---|

|

|

All communication over the CAN bus is done via the

CAN frames. The standard CAN frame

(with an 11-bit identifier) is shown below:

While the lower-level CAN protocols described so far work on the two lowest layers of the OSI networking stack, it is still limiting. For instance, the CAN standard doesn’t discuss how to,

- decode RAW data

- handle larger data (more than 8 bytes)

Hence, some higher-order protocols have been developed, viz.,

| protocol | description |

|---|---|

| OBD2 | on-board diagnostics in cars/trucks for diagnostics, maintenance, emissions tests |

| UDS | Unified Diagnostic Services (UDS) used in automotive ECUs for diagnostics, firmware updates, routine testing |

| CCP/XCP | used in embedded control/industrial automation for off-the-shelf interoperability between CAN devices |

| SAE J1939 | for heavy-duty vehicles |

| NMEA 2000 | used in maritime industry for connecting e.g. engines, instruments, sensors on boats |

| ISOBUS | used in agriculture and forestry machinery to enable plug and play integration between vehicles/implements, across brands |

There also exist other higher-order protocols (numbering in the thousands) the most prominent of which are: ARINC, UAVCAN, DeviceNet, SafetyBUS p, MilCAN, HVAC CAN.

More details about CAN and its variants: CAN Bus Explained.

2.3.6 Other Broadly Used Protocols

Autonomous (and other embedded systems) use a variety of other communication protocols in order to interface with the external world and/or other systems (either other nodes in the system or external components such as back end clouds).

Note that since many of these are well known and publicly documented, we won’t elaborate much here.

Here are some of the well known communication protocols, also used in embedded systems:

| protocol | links |

|---|---|

| USB | How USB works: part 1, part2, part 3; USB in a Nutshell (very detailed). |

| Ethernet | Reliable Embedded Ethernet, Embedded Ethernet and Internet (book, online) |

| WiFi | WiFi Sensing on the Edge (paper) |

| Bluetooth | Bluetooth Basics, Bluetooth Low Energy |

| Radio | Embedded Development with GNU Radio |

2.5 References

3 Sensors and Sensing

An embedded/autonomous system perceives the physical world via sensors – either to gather information about its environment or to model its own state. Hence it is a critical component in the sensing → planning → actuation loop and a critical component in the design of embedded and autonomous systems.

|

|

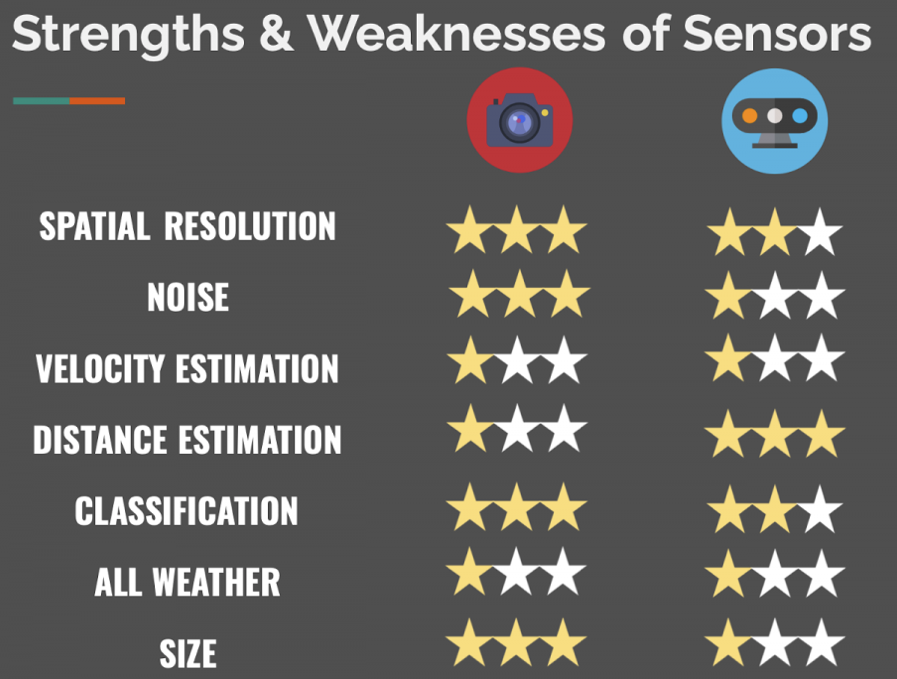

Modern autonomous systems used a wide array of sensors. This is necessary due to:

- there is a need to measure different quantities, e.g., GPS, velocity, objects, etc.

- sensor measurements often have errors → hence, we need multiple sensors, often using different physical properties to measure the same thing; e.g., LiDar and cameras can both be used to detect objects in front of, and around, an autonomous vehicle.

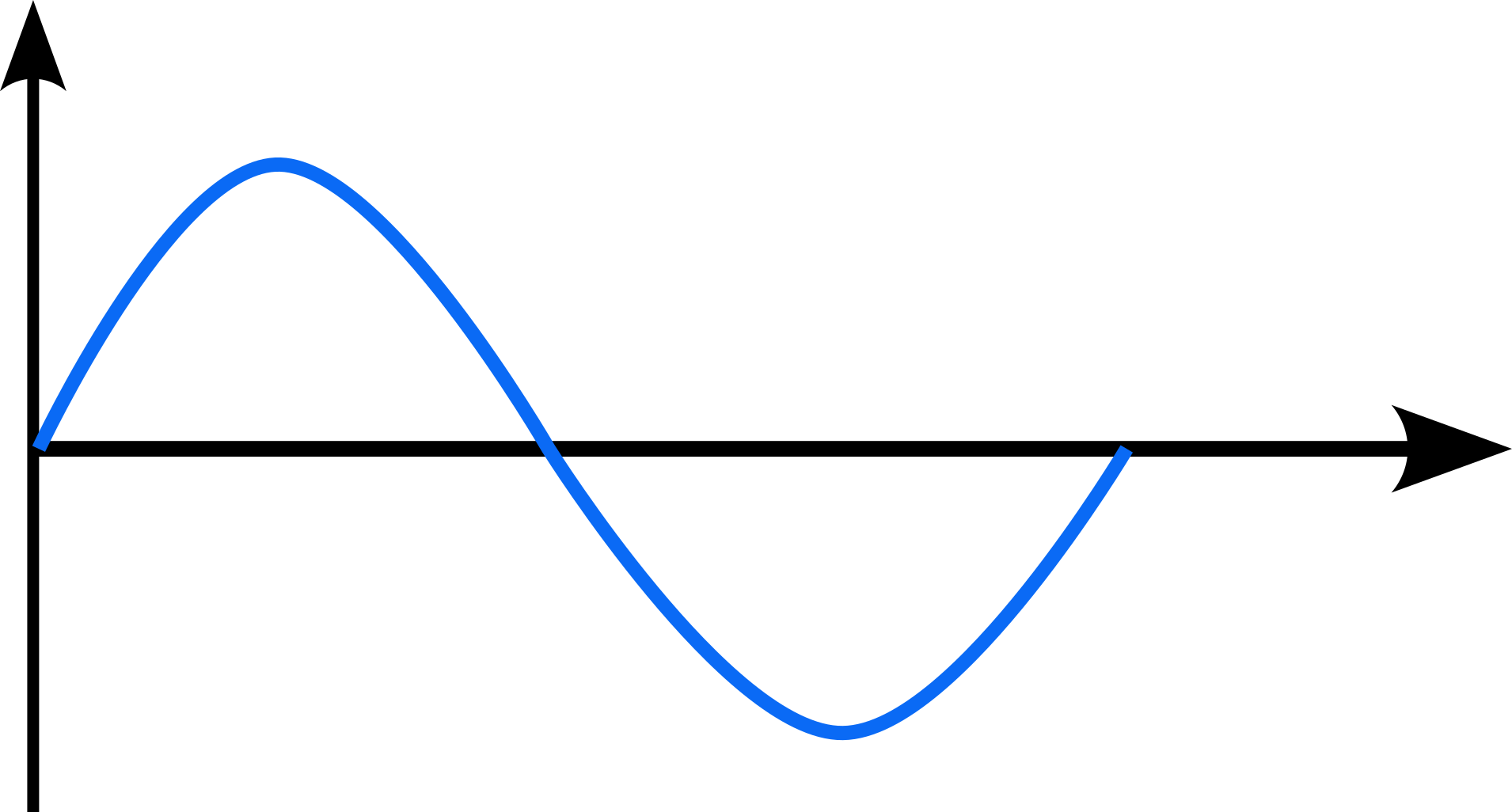

At its core,

a sensor captures a physical/chemical/environmental quantity and converts it to a digital quantity.

(hence the need for an Analog-to-Digital Convertor (ADC) as we shall see later)

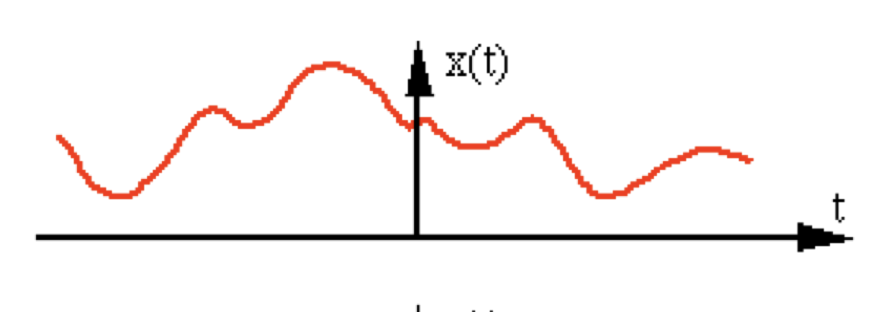

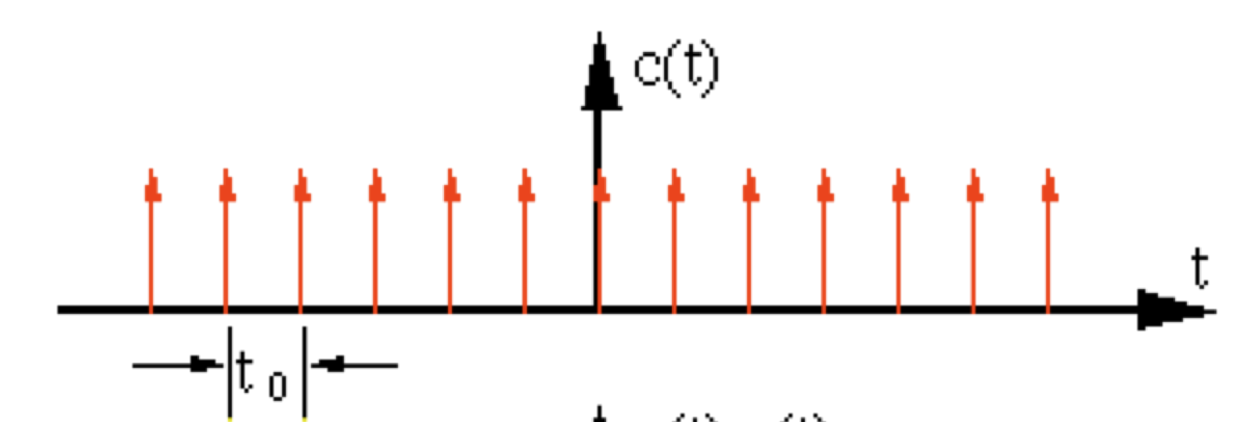

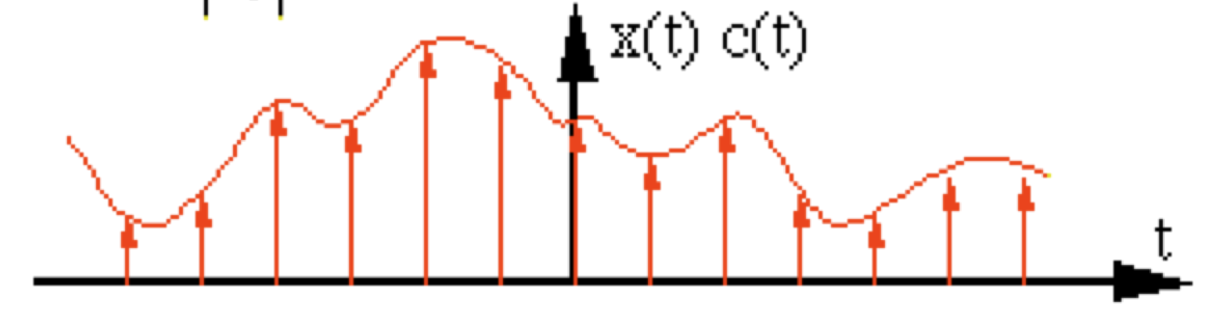

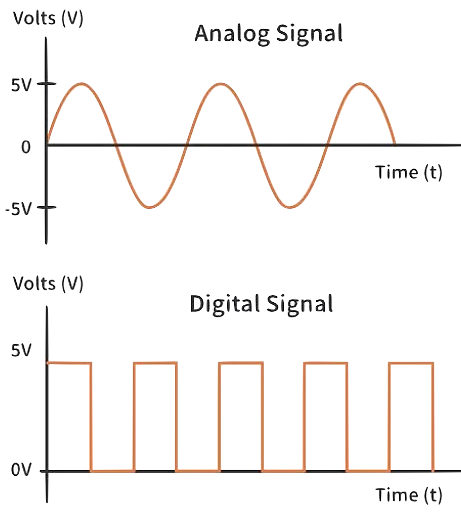

By definition, sensors generate signals.

A signal, s, is defined as a mapping from the

time domain to a value domain:

s : Dt ↦ Dv

where,

| symbol | description |

|---|---|

| Dt | continuous or discrete time domain |

| Dv | continuous or discrete value domain |

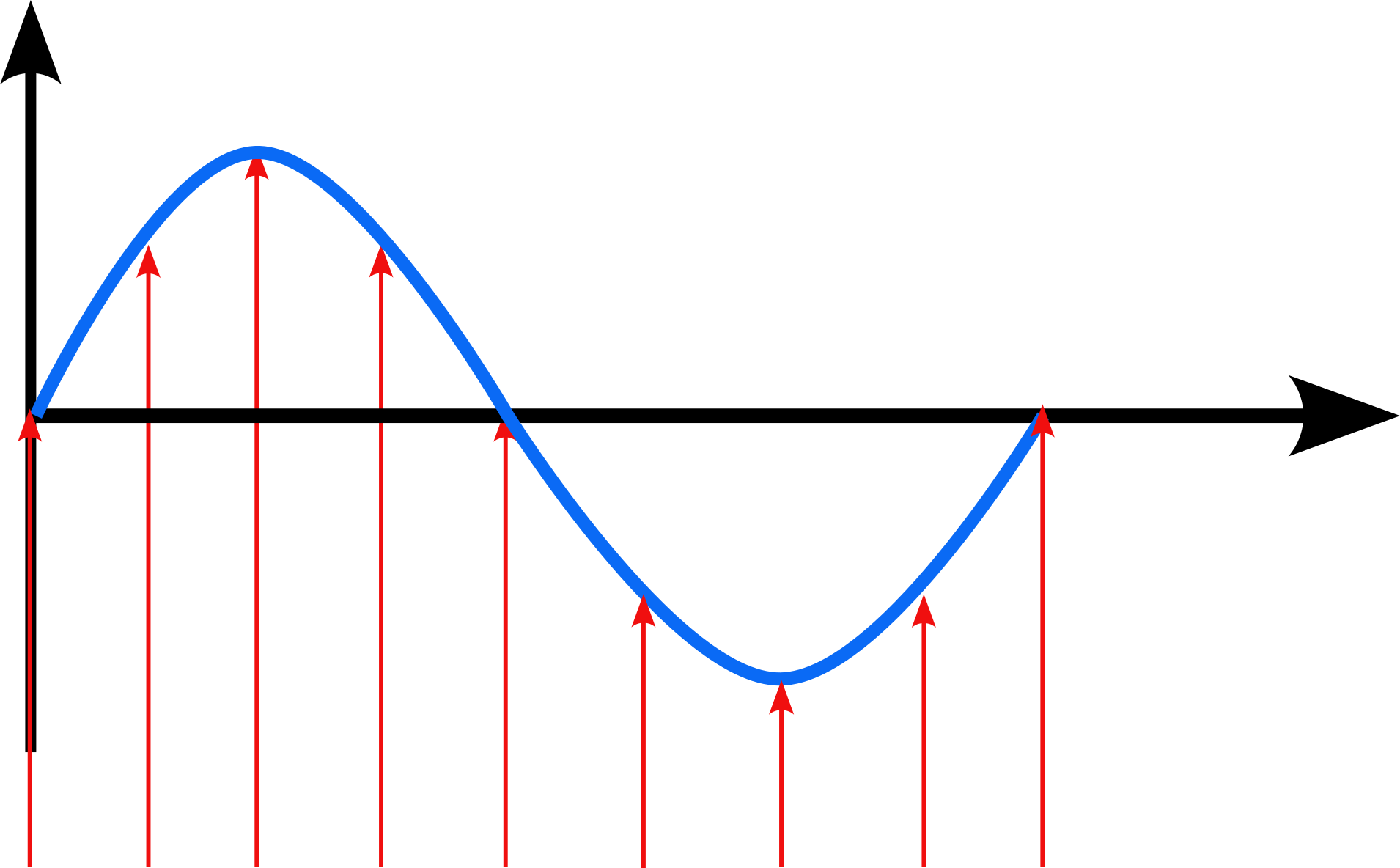

Note: remember that computers require discrete sequences of physical values. Hence, we need to convert the above into the discrete domain. The way to achieve this: sampling:

The figure shows a continuous signal being sampled (in red arrows). We will discuss sampling and related issues later in this topic.

3.1 Types of Sensors

Sensors come in various shapes and sizes. Usually designers of autonomous systems will develop a “sensor plan that will consider,

- required functionality

- sensor range(s)

- cost

Hence, each autonomous system will likely have its own set of sensors (or sensor plan). Typical sensors found on modern autonomous systems can be classified based on the underlying physics used:

| physical property | sensor |

|---|---|

| internal measurements | IMU |

| external measurements | GPS |

| “bouncing” electromagnetic waves | LiDAR, RADAR, mmWave Radar |

| optical | cameras, infrared sensors |

| accoustic | ultrasonic sensors |

Some of the above can be combined to generate other sensing patterns, e.g., stereo vision using multiple cameras or camera+LiDAR.

We will go over some of these sensors and their underlying physical principles.

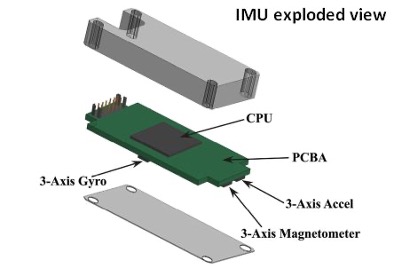

3.1.1 Inertial Measurement Units (IMU)

These sensors define the movement of a vehicle, along the three axes, in addition to other behaviors like acceleration and directionality. An IMU typically includes the following sensors:

|

|

|

|

As we see from the first picture above, an IMU also has a CPU (typically a microcontroller) to manage/collect/process the data from the sensors.

The functions of the three sensors are:

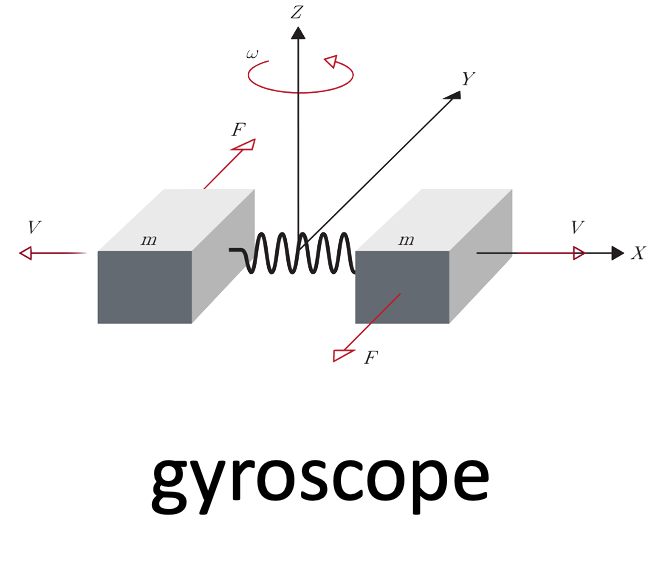

- gyroscope: is an inertial sensor that measure an object’s angular rate with respect to an inertial reference frame. It measures the following movements:

|

|

|

| “yaw” | “pitch” | “roll” |

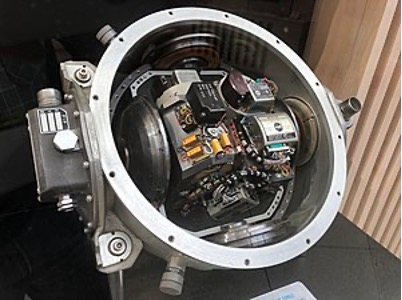

IMUs come in all shapes and sizes. These days they’re very small but the original IMU’s ver really large, as evidenced by the one used in the Apollo space missions:

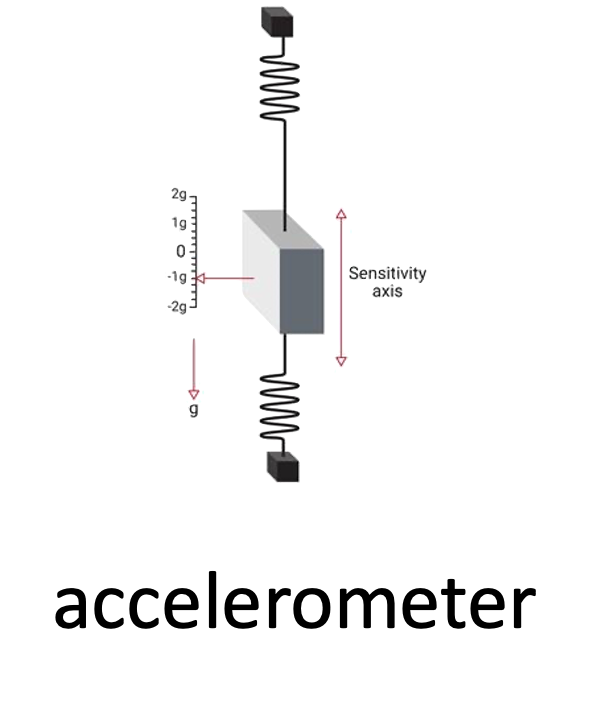

accelerometer: is the primary sensor responsible for measuring inertial acceleration, or the change in velocity over time.

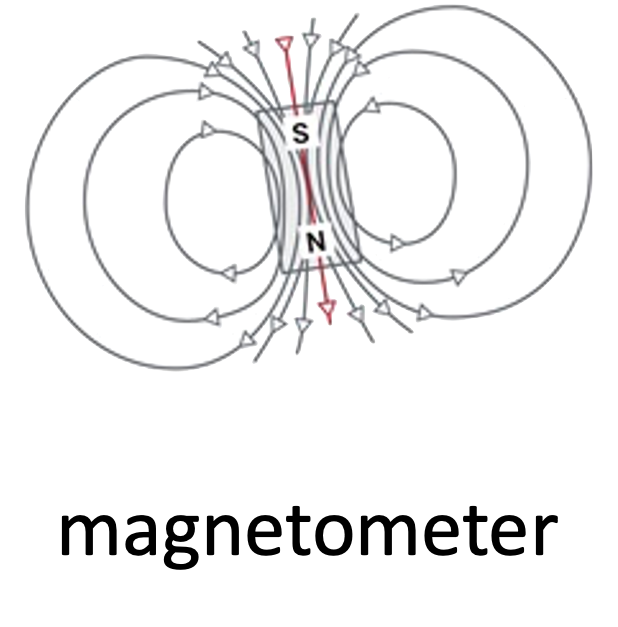

magnetometer: measures the strength and direction of magnetic field – to find the magnetic north

3.1.2 Bouncing of Electromagnetic Waves | LiDAR and mmWave

A very common principle for measuring surroundings is to bounce electromagnetic waves off nearby objects and measuring the round trip times. Shorter times indicate closer objects while longer times indicate objects that are farther away. RADAR is a classic example of this type of sensor and its (basic) operation is shown in the following image (courtesy NOAA):

While many autonomous vehicles use RADAR, we will focus on other technologies that are more prevalent and provide much higher precision, viz.,

- LiDAR

- millimeter Wave RADAR (mmWave)

3.1.2.1 Light Detection and Ranging (LiDAR)

LiDAR is a sensor that uses (eye safe) laser beams for mapping surroundings and creating 3D representation of the environment. So lasers are used for,

- imaging

- detection

- ranging

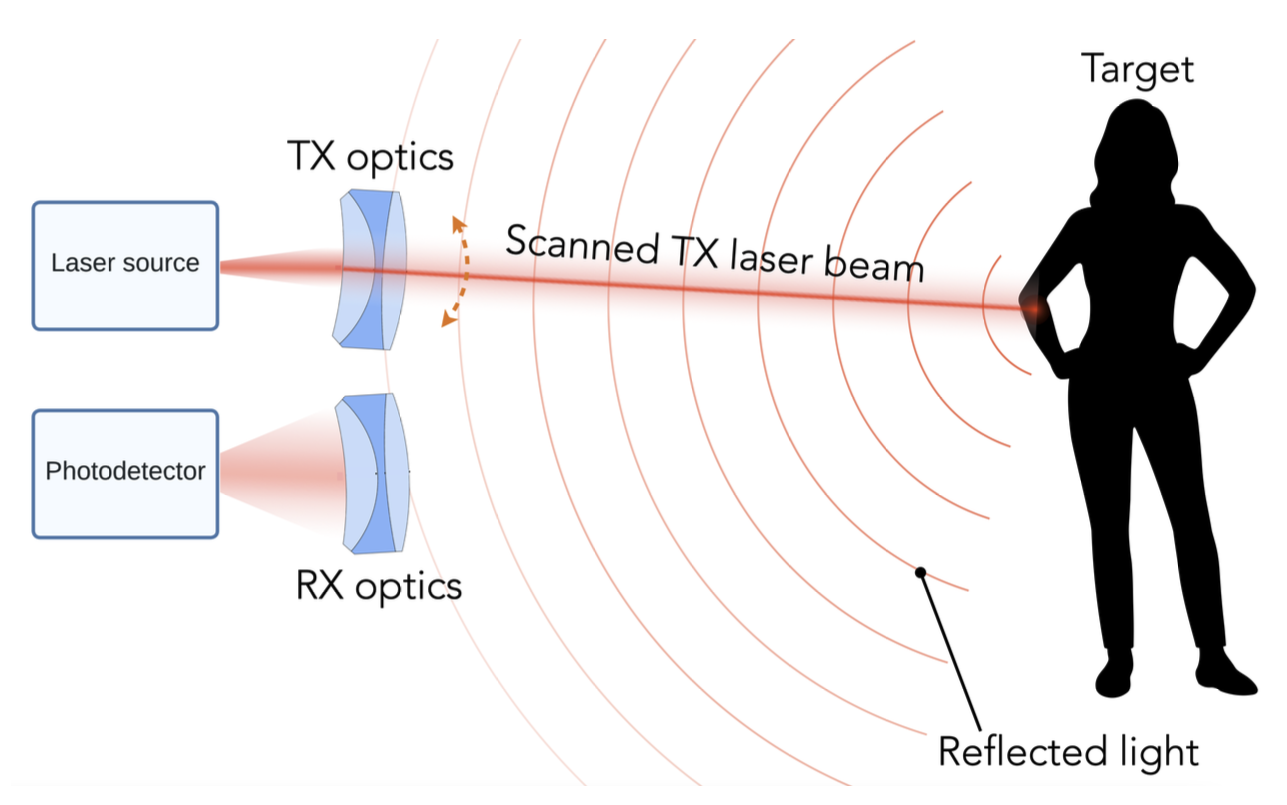

We can use LiDAR to distance, angle as well as the radial velocity of some objects – all relative to the autonomous system (rather the sensor). So, in practice, this is how it operates:

We define a roundtrip time, $ au$, as

the time between when a pulse is sent out from the

transmitter (TX) to when light reflected from

the object is detected at the receiver

(RX).

So, the target range (i.e., the distance to te object), R, is measured as:

R = raccau2

where, c is the speed of light.

More details (from Mahalati): > Lasers used in lidars have frequencies in the 100s of Terahetrz. Compared to RF waves, lasers have significantly smaller wavelengths and can hence be easily collected into narrow beams using lenses. This makes DOA estimation almost trivial in lidar and gives it significantly better reso- lution than MIMO imaging radar.

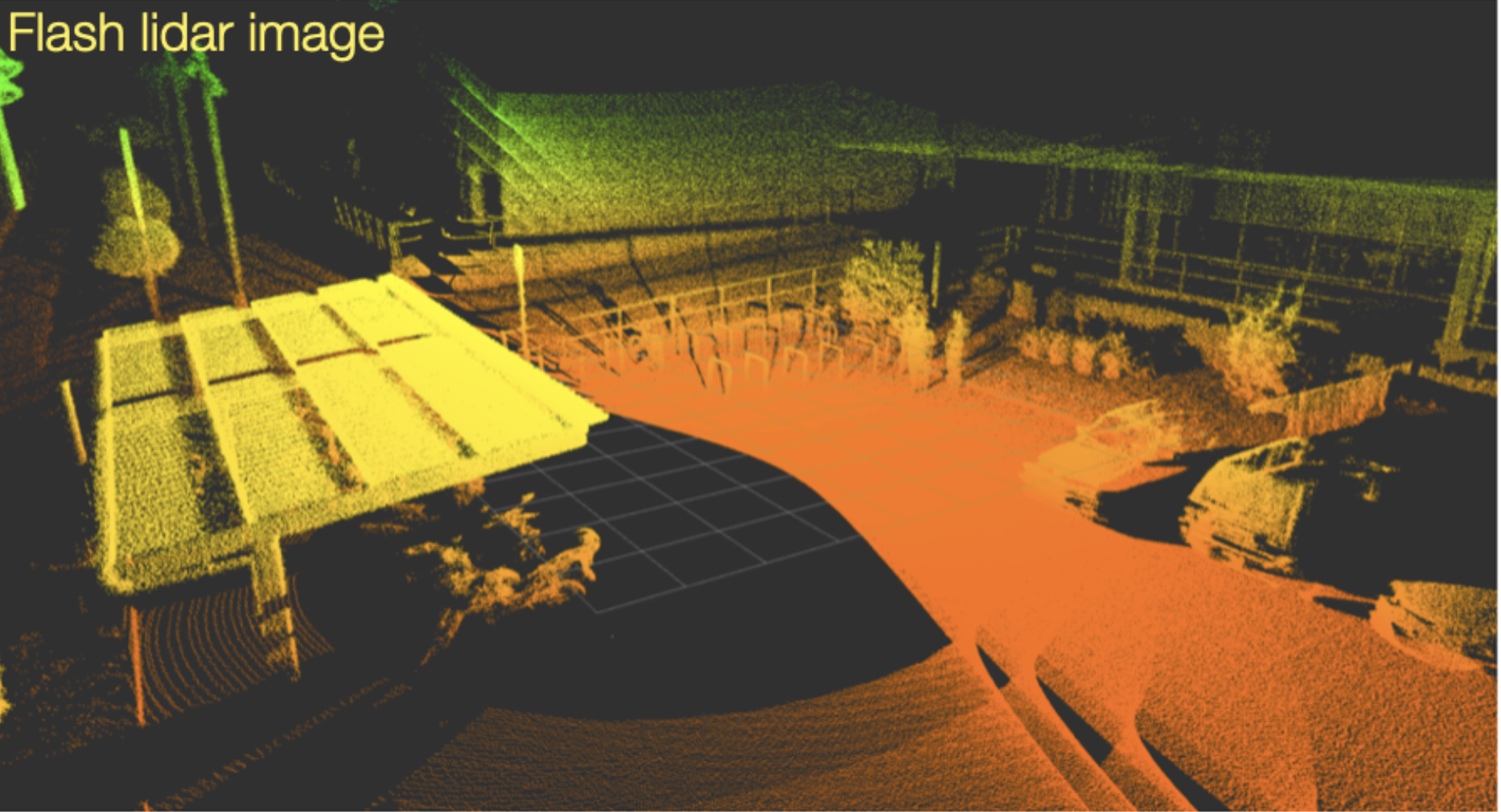

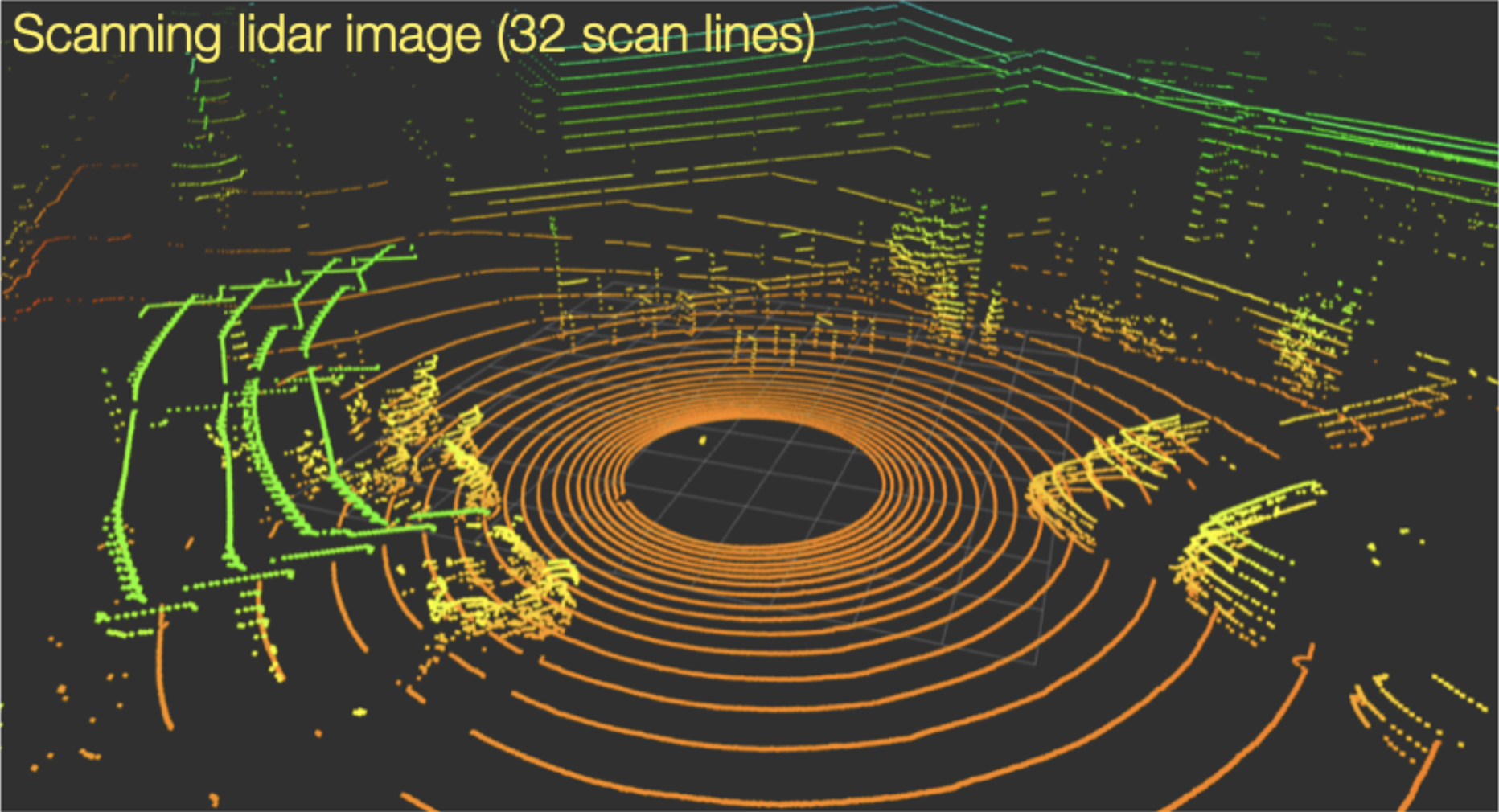

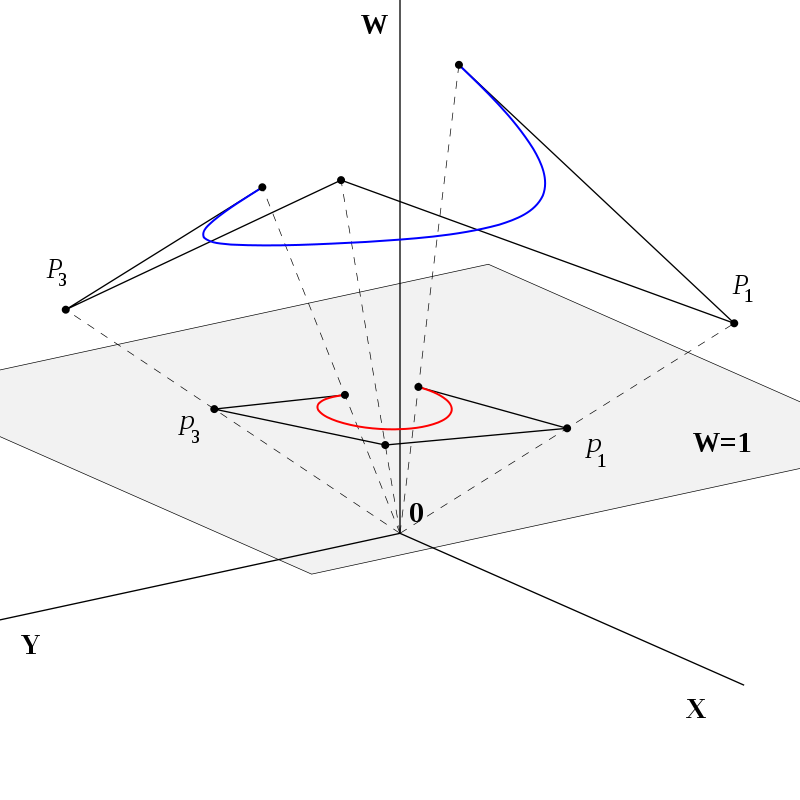

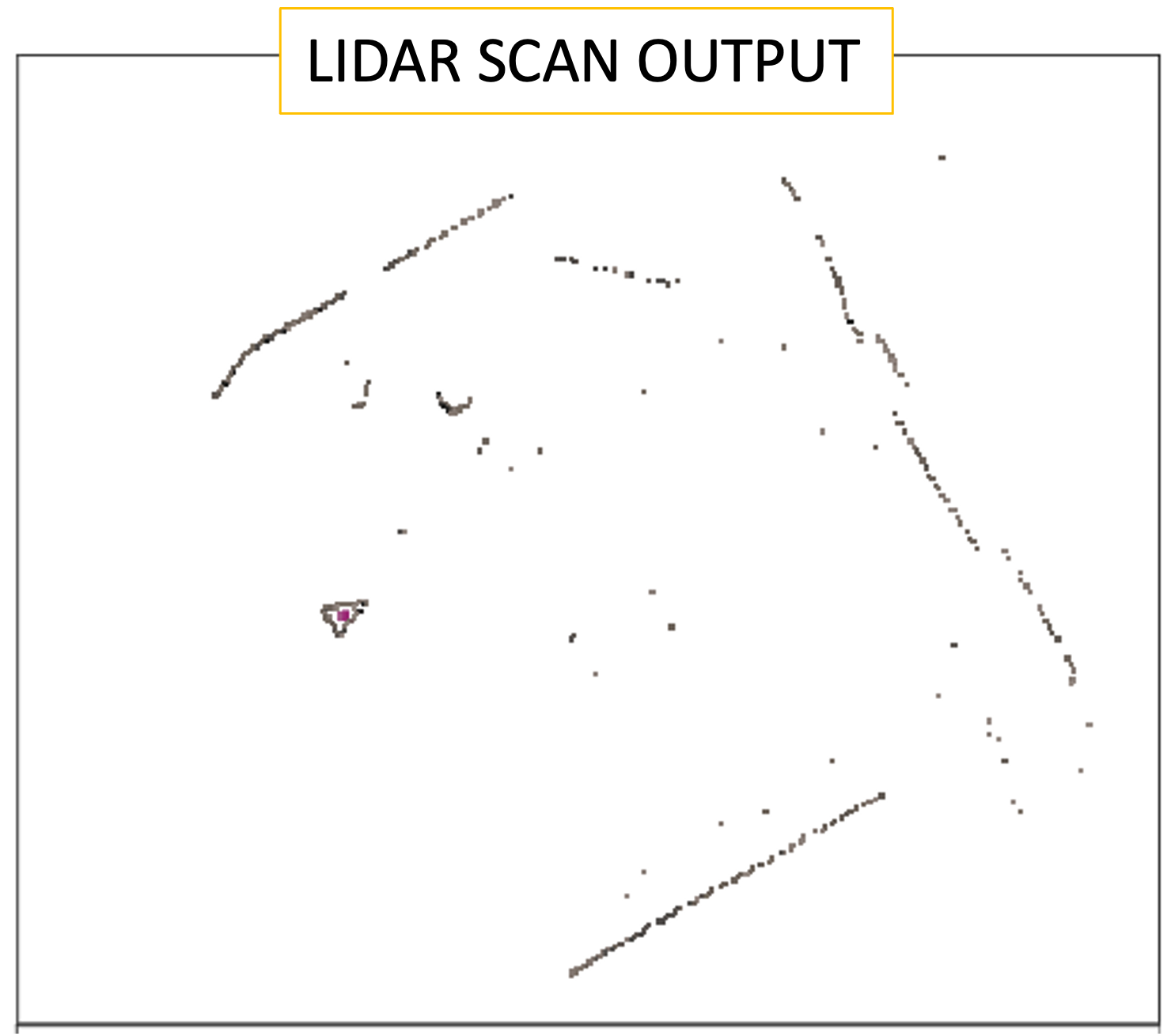

The end product of LiDAR is essentially a point cloud, defined as:

a collection of points generated by a sensor. Such collections can be very dense and contain billions of points, which enables the creation of highly detailed 3D representations of an area.

In reality, point cloud representations around autonomous vehicles end up looking like:

Point clouds provide valuable information, viz.,

- 3D coordinates, (x, y, z)

- strength of returned signal → provides valuable information about the density of the object (or even material composition)!

- additional attributes: return number, scan angle, scan direction, point density, RGB color values, and time stamps → each can be used for refining the scan.

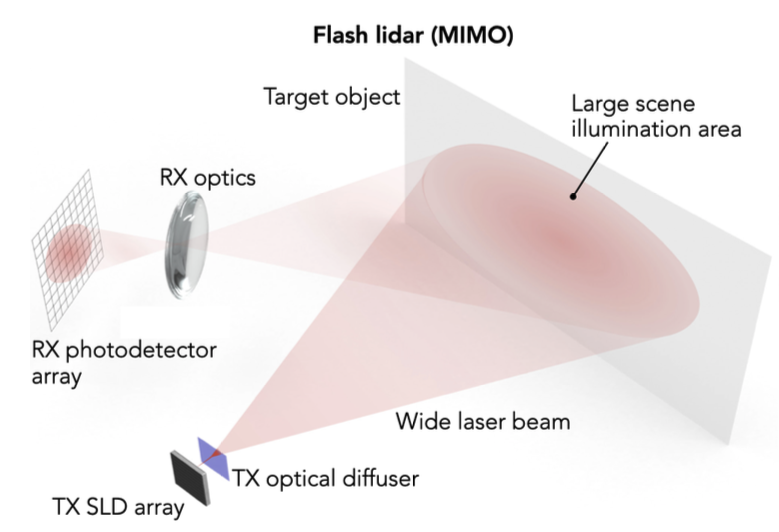

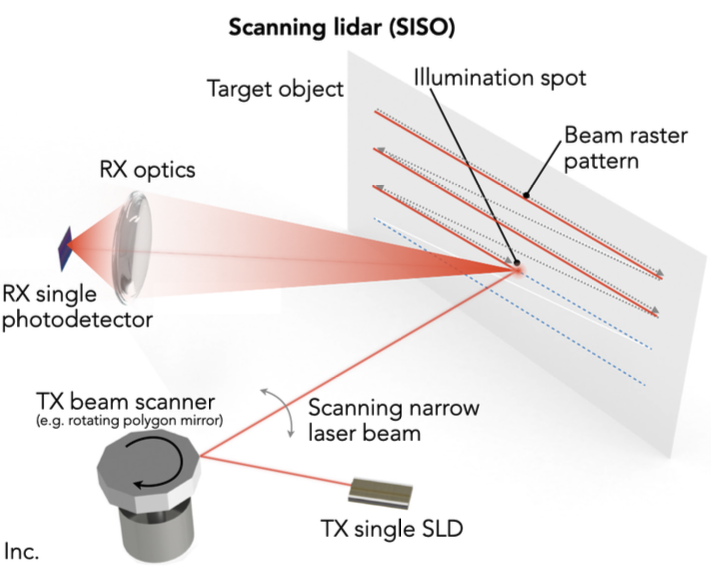

There are two types of scene illumination techniques for LiDAR:

| type | illumination method | detector |

|---|---|---|

| flash lidar | entire scene using wide laser | receives all echoes on a photodetector array |

| scanning lidar | very narrow laser beams, scan illumination spot with laser beam scanner | single photodetector to sequentially estimate $ au$ for each spot |

| flash lidar | scan lidar | |

|---|---|---|

| architecture |  |

|

| resolution determined by | photodetector array pizel size (like camera) | laser beam size and spot fixing |

| frame rates | higher (up to 100 fps) |

lower (< 30 fps) |

| range | shorter (quick beam divergence, like photography) | longer (100m+) |

| use | less common | most common |

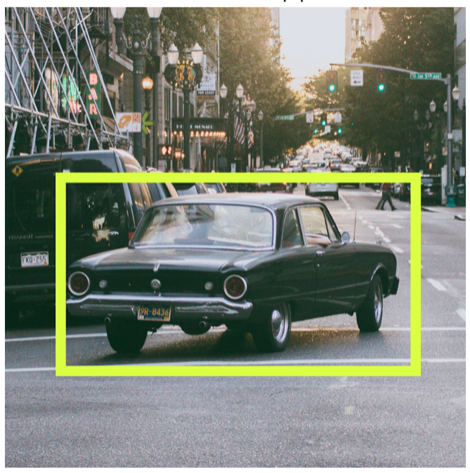

Now, consider the following scene (captured by a camera):

Compare this to the LiDAR images captured by the two methods:

| flash lidar | scan lidar (16 scan lines) | scan lidar (32 scan lines) |

|---|---|---|

|

|

|

A “LiDAR scan line” refers to a single horizontal line of laser pulses emitted by a LiDAR sensor, essentially capturing a cross-section of the environment at a specific angle as the sensor rotates, creating a 3D point cloud by combining multiple scan lines across the field of view; it’s the basic building block of a LiDAR scan, similar to how a single horizontal line is a building block of an image.

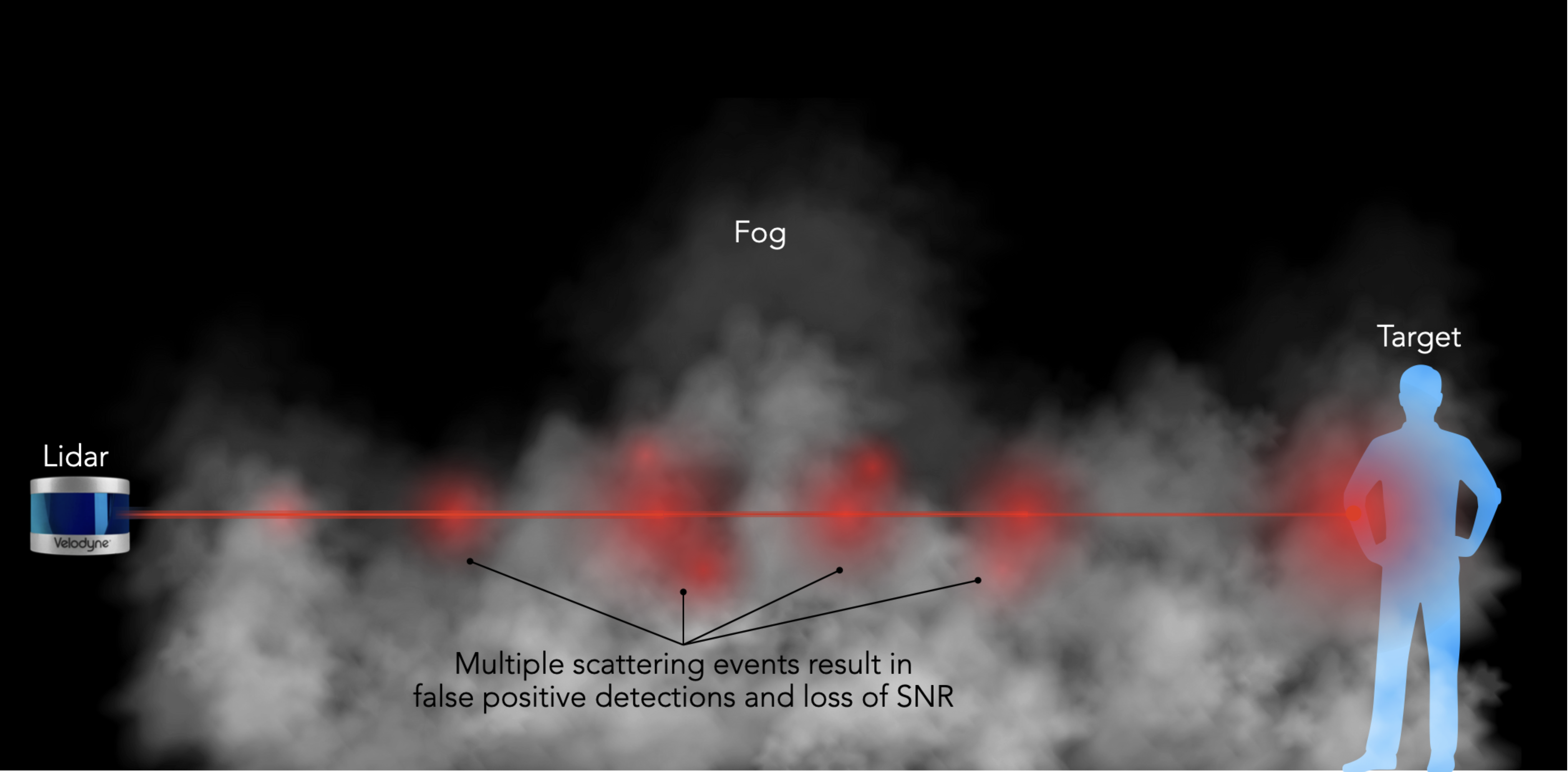

Potential Problems:

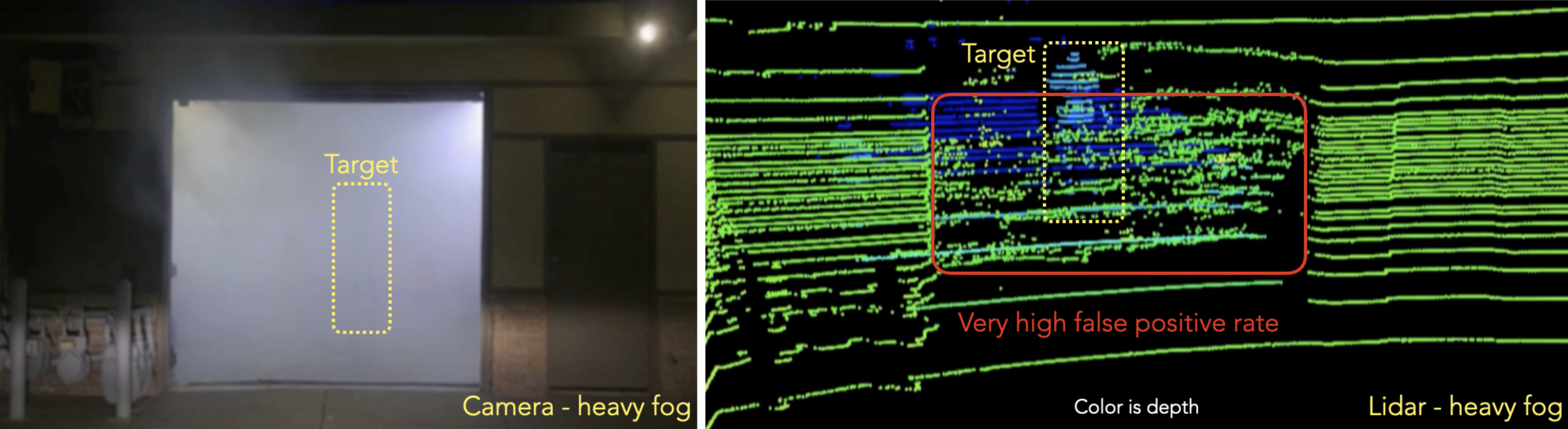

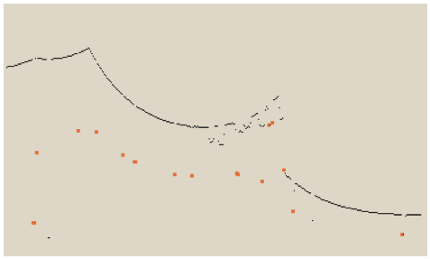

Atmospheric/environmental conditions can negatively affect the quality of the data captured by the LiDAR. For instance, fog can scatter the laser photons resulting in false positives.

As we see from the above image, the scattering due to the fog results in the system “identifying” multiple objects even though there is only one person in the scene.

Here are additional examples from the Velodyne VLP-32C sensor:

- light fog (camera vs LiDAR)

The LiDAR does a good job isolating the main subject with very few false positives.

- heavy fog (camera vs LiDAR)

The LiDAR struggles to isolate the main subject with very high false positives.

In spite of these issues, LiDAR is one of the most popular sensors used in autonomous vehicles. They’re getting smaller and more precise by the day; also decreasing costs means that we will see a proliferation of these types of sensors in many autonomous systems.

For an in-depth study on LiDARs, check this out: Stanford EE 259 LiDAR Lecture.

3.1.2.2 Millimeter Wave Radar [mmWave]

Short wavelengths like the *millimeter wave (mmWave**) in the electromagnetic spectrum allows for:

- smaller antennae

- integration of entire RADAR circuitry in a single chip!

- spectrum of 10 millimeters (

30 GHz) to 1 millimeter (300 GHz)

|

|

As we see from the above images, the sensors can be very small, yet very precise → some can detect movements up to 4 millionths of a meter!

Advantages of mmWave:

| Advantage | Description |

|---|---|

| small antenna caliber | narrow beam gives high tracking, accuracy; high-level resolution, high-resistance interference performance of narrow beam; high antenna gain; smaller object detection |

| large bandwidth | high information rate, details structural features of the target; reduces multipath, and enhances anti-interference ability; overcomes mutual interference; high-distance resolution |

| high doppler frequency | good detection and recognition ability of slow objectives and vibration targets; can work in snow conditions |

| good anti-blanking performance | works on the most used stealth material |

| robustness to atmospheric conditions | such as dust, smoke, and fog compared to other sensors |

| operation under different lights | radar can operate under bright lights, dazzling lights, or no lights |

| insusceptible to ground clutter | allowing for close-range observations; the low reflectivity can be measured using mmwave radar |

| fine spatial resolution | for the same range, mmwave radar offers finer spatial resolution than microwave radar > |

mmWave is also used for in-cabin monitoring of drivers!

Limitations:

- line of sight operations

- affected by water content, gases in environments

- affected by contaminated environment and physical obstacles

Resources:

For a more detailed description of mmWave RADAR, read: Understanding mmWave RADAR, its Principle & Applications

For programming a LiDAR, see: how to program a LiDAR with an Arduino.

3.1.3 Ultrasonic

Much like lidars, we can use reflected sounds waves to detect objects. They work by emitting high-frequency sound waves, typically above human hearing, and then listening for the echoes that bounce back from nearby objects. The sensor calculates the distance based on the time it takes for the echo to return, using the speed of sound. Popular modules like the HC-SR04 (Used in Lab#2) are easy to integrate with microcontrollers such as Arduino and Raspberry Pi. These sensors are widely used in robotics for obstacle avoidance, automated navigation, and liquid level sensing.

However, unlike optical (electromagnetic waves) detectors, ultrasonic sensors, while useful for basic distance measurements, cannot replicate the functionalities of LiDAR systems due to several key limitations. Unlike LiDAR, which employs laser beams to generate high-resolution, three-dimensional point clouds, ultrasonic sensors emit sound waves that provide only limited, single-point distance data with lower precision. LiDAR offers greater accuracy and longer range, enabling detailed mapping and object recognition essential for applications like autonomous vehicles and advanced robotics. Additionally, LiDAR systems can cover a wider field of view and operate effectively in diverse environments by rapidly scanning multiple directions, whereas ultrasonic sensors typically have a narrow detection cone and struggle with complex or cluttered scenes. Furthermore, LiDAR’s ability to capture data at high speeds allows for real-time processing and dynamic obstacle detection, which ultrasonics cannot match. This is because comparitively, it sounds waves take a lot of time to return since they’re much slower in speed compared to light waves (360m/s vs 299,792,458m/s). These differences in data richness, accuracy, and versatility make ultrasonic sensors unsuitable substitutes for the sophisticated capabilities offered by LiDAR technology.

We’ll be using ultrasonic distance finders in futures MPs to stop our rovers from colliding into objects. Since our rovers don’t moove to fast and complexity is relatively low, only a ultrasonic sensor would suffice.

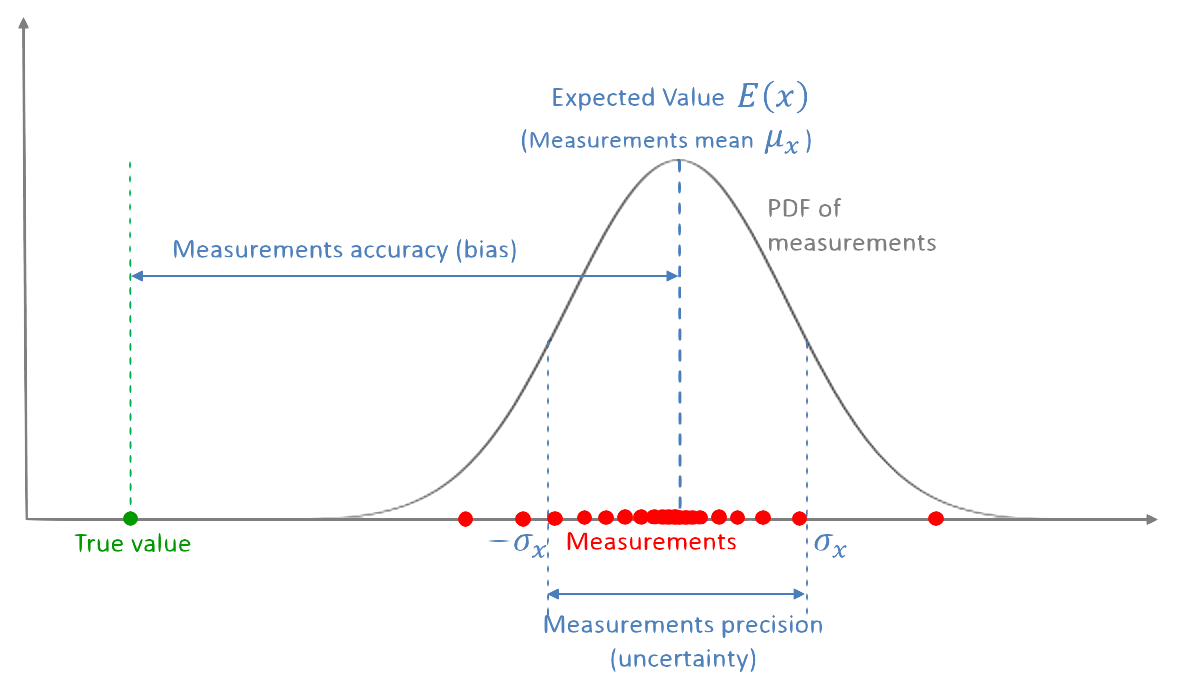

3.2 Errors in Sensing

Since sensors deal with and measure the physical world, errors will creep in over time.

Some typical errors in the use of physical sensors:

| error type | description |

|---|---|

| sensor drift | over time the sensor measurements will “drift”, i.e., a gradual change in its output → away from average values (e.g., due to wear and tear) |

| constant bias | bias of an accelerometer is the offset of its output signal from the actual acceleration value. A constant bias error causes an error in position which grows with time |

| calibration errors | ‘calibration errors’ refers to errors in the scale factors, alignments and linearities of the gyros. Such errors tend to produce errors when the device is turning. These errors can result in additional drift |

| scale factor | scale factor is the relation of the accelerometer input to the actual sensor output for the measurement. Scale factor, expressed in ppm, is therefore the linear growth of input variation to actual measurement |

| vibration rectification errors | vibration rectification error (VRE) is the response of an accelerometer to current rectification in the sensor, causing a shift in the offset of the accelerometer. This can be a significant cumulative error, which propagates with time and can lead to over compensation in stabilization |

| noise | random variations in the sensor output that do not correspond to the actual measured value |

Each error type must be dealt with in different ways though one of the commomn ways to prevent sensor errors from causing harm to autonomous systems → sensor fusion, i.e., use information from multiple sensors before making any decisions. We will dicuss sensor fusion later in this course.

3.3 Analog to Digital Convertors (ADCs)

As mentioned earlier, a sensor maps a physical quantity from the time domain to the value domain,

s : Dt ↦ Dv

where,

| symbol | description |

|---|---|

| Dt | continuous or discrete time domain |

| Dv | continuous or discrete value domain |

Remember that computers require discrete sequences of physical values since microcontrollers cannot read values unless it is digital data. Microcontrollers can only see “levels” of voltage, which depends on the resolution of the ADC and the system voltage.

Hence, we need to convert the above into the discrete domain, i.e., we require Dv to be composed of discrete values.

According to Wikipedia,

A discrete signal or discrete-time signal is a time series consisting of a sequence of quantities. Unlike a continuous-time signal, a discrete-time signal is not a function of a continuous argument; however, it may have been obtained by sampling from a continuous-time signal. When a discrete-time signal is obtained by sampling a sequence at uniformly spaced times, it has an associated sampling rate.

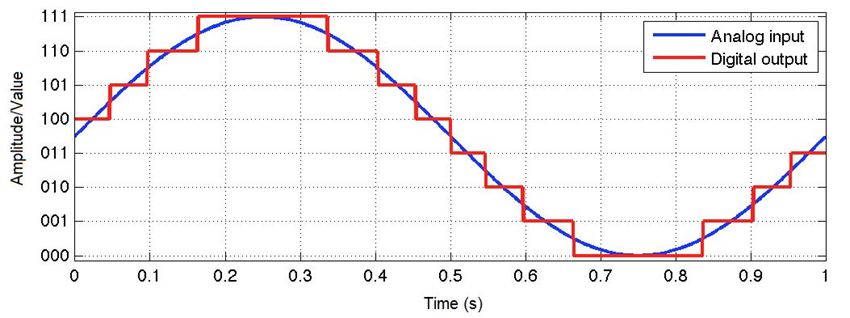

A visual respresentation of the sampling rate and how it correlates to the sampling of an analog signal:

| analog signal | sampling rate | sampling |

|---|---|---|

|

|

|

Hence, a device that converts analog signals to digital data values is called → an analog-to-digital convertor (ADC). This is one of the most common circuits/microcontrollers in embedded (and hence, autonomous) systems. Any sensor that measures a physical property must pass its values through an ADC so that the sensor values can be used by the system (the embedded processor/microcontroller, really).

This is best described using an example:

The analog signal is discretized into the digital signal after passing through an ADC.

ADCs follow a sequence:

- sample the signal

- quantify it to determine the resolution of the signal

- set binary values

- send it to the system to read the digital signal

Hence, two important aspects of an ADC are:

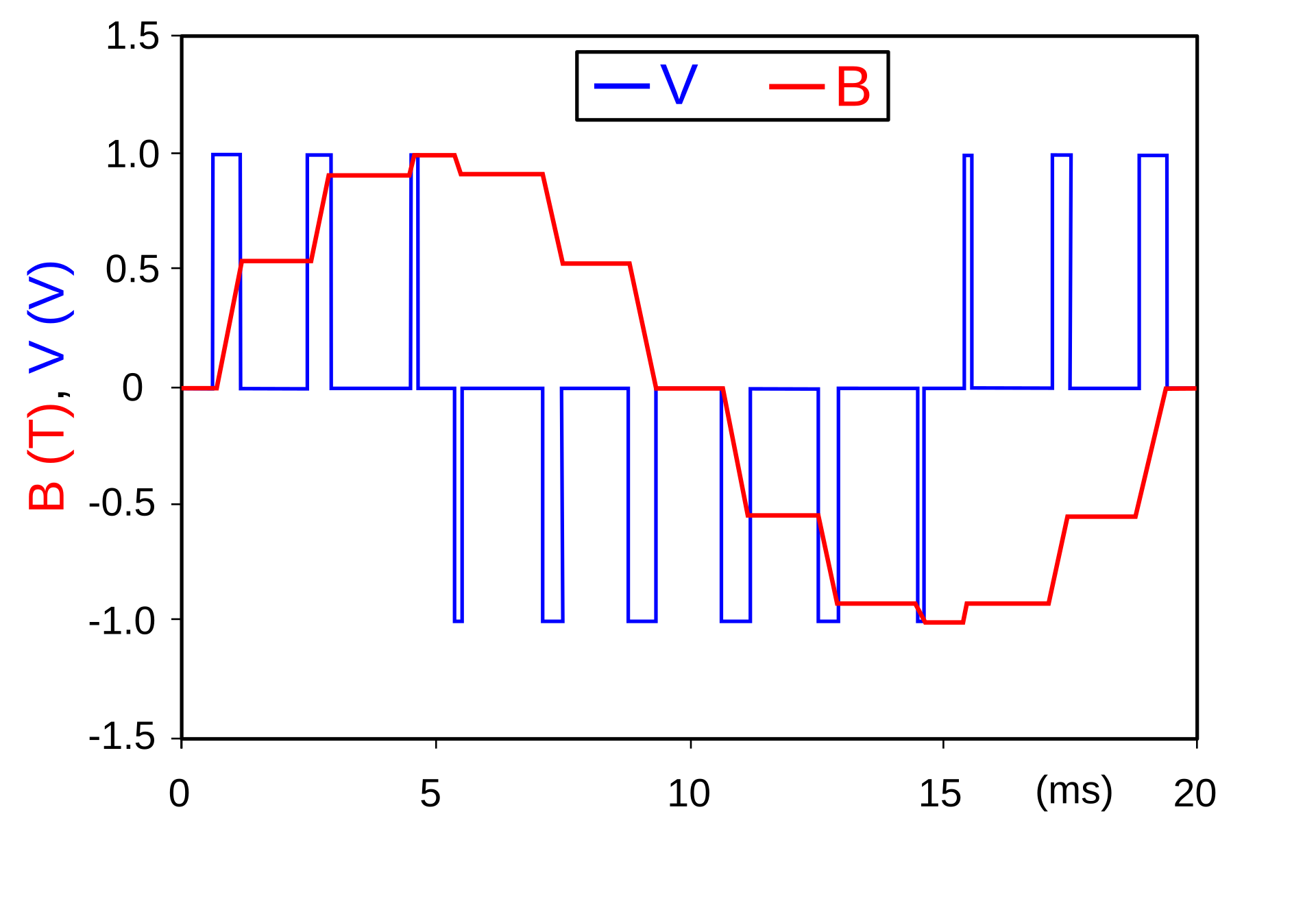

3.3.1 ADC Sampling Rate

The sampling rate (aka Sampling Frequency) is measured in samples per second (SPS or S/s). It dictates how many samples (data points) are taken in one second. If an ADC records more samples, then it can handle higher frequencies.

The sample rate, fs is defined as,

fs = rac1T

where,

| symbol | definition |

|---|---|

| fs | sampling rate/frequency |

| T | period of the sample |

Hence, in the previous example,

| symbol | value |

|---|---|

| fs | 20 Hz |

| T | 50 ms |

While this looks slow (20 Hz), the digital

signal tracks the original analog signal quite faithfully →

the original signal itself is quite slow

(1 Hz).

Now, if the sampling signal is considerably slower than the analog signal, then it loses fidelity and we see aliasing, where the reconstructed signal (the digital one in the case) differs from the original. Consider the following example of such a case:

As we see from the above figure, the digital output is nothing like the original. Hence, this (digital) output will not be of much use to the system.

Nyquist-Shannon Sampling Theorem:

to accurately reconstruct a signal from its samples, the sampling rate must be at least twice the highest frequency component present in the signal

If the sampling frequency is less than the Nyquist rate, then aliasing starts to creep in.

Hence,

fNyquist = 2 * fmax

where,

| symbol | definition |

|---|---|

| fNyquist | Nyquist sampling rate/frequency |

| fmax | the maximum frequency that appears in the signal |

For instance, if your analog signal has a maximum

frequency of 50 Hz then your sampling frequency

must be at least, 100 Hz. If this

principle is followed, then it is possible to

accurately reconstruct the original signal

and its values.

Note that sometimes noise can introduce additonal (high) frequencies into the system but we don’t want to sample those (for obvious purposes). Hence, it is a good idea to add anti-aliasing fitlers to the analog signal before it is passed to the ADC.

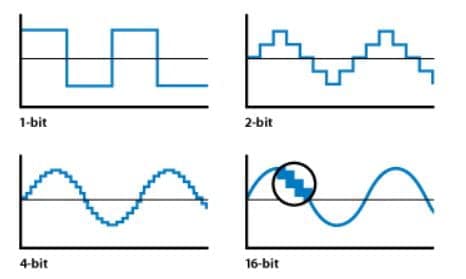

3.3.2 ADC Resolution

An ADC’s resolution is directly related to the precision of the ADC, determined by its bit length. The following examples shows the fidelity of the reconstruction, based on various bit lengths:

Increasing bit lengths the digital signal more closely represents the analog one.

There exists a correlation between the bit length and the voltage of the signal. Hence, the true resolution of the ADC is calculated using the bit length and the voltage as follows:

StepSize = racVrefN

where,

| symbol | definition |

|---|---|

| StepSize | resolution of each level in terms of voltage |

| Vref | voltage reference/range of voltages |

| N = 2n | total “size” of the ADC |

| n | bit size |

This is easier to understand with a concrete example:

consider a sine wave with a voltage,

5 Vthat must be digitized.

If our ADC precision is12 bits, then we get

N = 212 = 4096

Hence, StepSize = 5V/ 4096 which is0.00122V(or1.22mV)

Hence, the system can tell when a voltage level changes by1.22 mV!

(Repeat the exercise for say, bit length, n = 4)

Visual Example:

The above maybe intuitively understood as follows:

Consider the following signal:

Now, if we want to sample this signal, we can obtain measurements at:

The figure shows 9 measurements.

Suppose, the ADC registers have a width of:

2 bits. Hence it can store at most:

4 values.

Since is is not possible to store

9 values → 2 bits, we must select

only 4 values omn the digital

side.

We then get the following representation:

which, to be honest, is not really a good representation of the original signal!

Now, consider the case where the ADC registers have a bit

width: 4 bits →

16 values! Hence, we can easily store

all 9 values easily.

So, we can get a digital representation as follows:

We see that this is a better representation, but

still not exact. We can increase the bit length but at

this point we are limited by the sampling as well. Since we

only have 9 samples, adding more bits won’t

help.

Hence, to get a better fidelity representation of the original signal, we see that sampling frequency and resolution need to be increased, since they determine the quality of output we get from an ADC.

Resources

- for more details about ADC, read: Analog-to-Digital Convertor Basics

- an in-depth explanation of how ADCs work: Iowa State CpreE 288 Course Slides

- more details with videos: Analog to Digital Conversion, EE319K Univ. of Texas

- Programming an ADC: 1, 2

4 Real-Time Operating Systems

Real-Time Operating Systems (RTOS) are specialized operating systems designed to manage hardware resources, execute applications and process data in a predictable manner. The main aim of this focus on “predictability” is to ensure that critical tasks complete in a timely fashion. Unlike general-purpose operating systems (GPOS) like Windows or Linux, which prioritize multitasking and user experience, RTOS focuses on meeting strict timing constraints, ensuring that tasks are completed within defined deadlines. This makes RTOS essential for systems where timing accuracy and reliability are critical, such as in embedded systems, autonomous driving, industrial automation, automotive systems, medical devices and aerospace applications, among others.

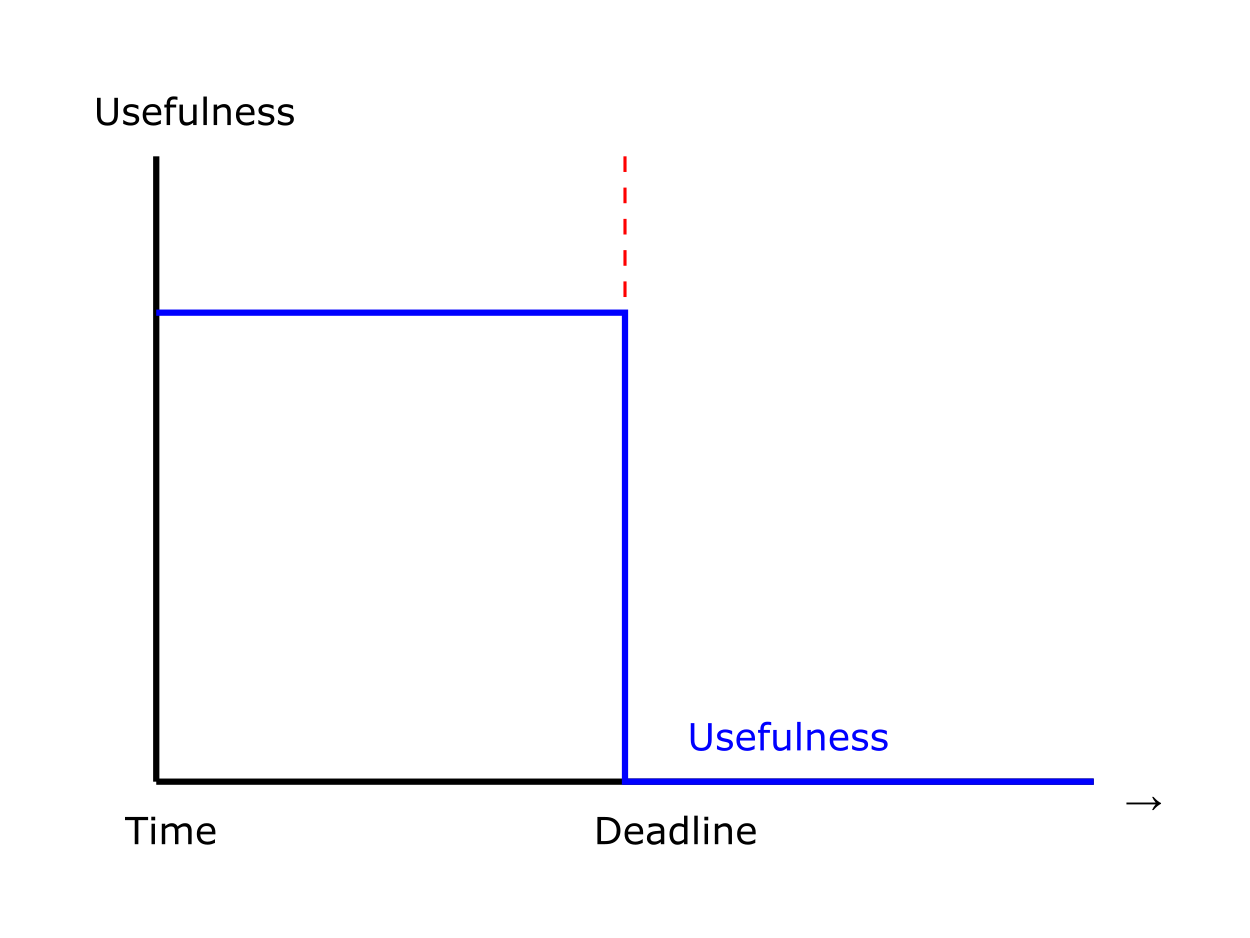

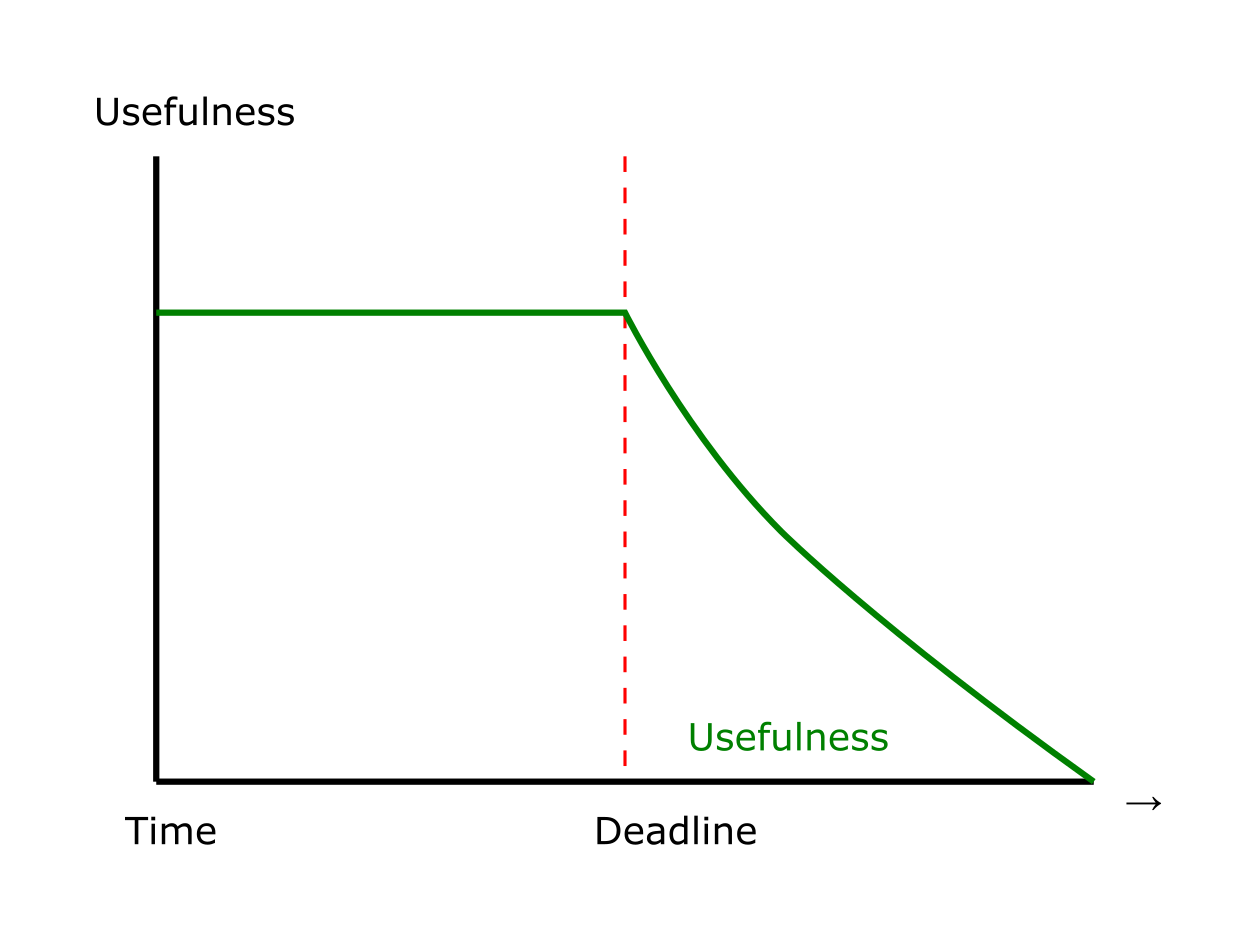

Hence, real-time systems (RTS), and RTOSes in general, have two criteria for “correctness”:

| criteria | description |

|---|---|

| functional correctness | the system should work as expected, i.e., carry out its intended function without errors |

| temporal correctness | the functionally correct operations must be completed within a predefined timing constraint (deadline) |

To place ourselves in the context of this course, this is where we are:

We haven’t looked at the actuation part but we will come back to it later.

4.0.1 Key characteristics for RTOS

| characteristic | description |

|---|---|

| determinism | primary feature of an RTOS is its ability to perform tasks within guaranteed time frames; this predictability ensures that high-priority tasks are executed without delay, even under varying system loads |

| task scheduling | RTOS uses advanced scheduling algorithms (e.g., priority-based, round-robin or earliest-deadline-first) to manage task execution; RT tasks are often assigned priorities and the scheduler ensures that higher-priority tasks preempt lower-priority ones when necessary |

| low latency | RTOS minimizes interrupt response times and context-switching overhead, enabling rapid task execution and efficient handling of time-sensitive operations (e.g., Linux spends many milliseconds handling interrupts such as disk access!) |

| resource management | RTOS provides mechanisms for efficient allocation and management of system resources, such as memory, CPU and peripherals, to ensure optimal performance |

| scalability | RTOS is often lightweight and modular, making it suitable for resource-constrained environments like microcontrollers and embedded systems |

| reliability and fault tolerance | many RTOS implementations include features to enhance system stability, such as error detection, recovery mechanisms and redundancy |

4.1 Kernels in RTOS

As with most operating systems, the kernel provides the essential services in an RTOS. In hard real-time systems, the kernel must guarantee predictable and deterministic behavior to ensure that all tasks meet their deadlines. In this chapter we focus on kernel aspects that are specific to RTS.

The RTOS kernel deals with,

- task management

- communication and synchronization

- memory management

- timer and interrupt handling

- performance metrics

4.1.1 Tasks, Jobs, Threads

The design of RTOSes (and RTS in general) deal with tasks, jobs and, for implementation-specific details, threads.

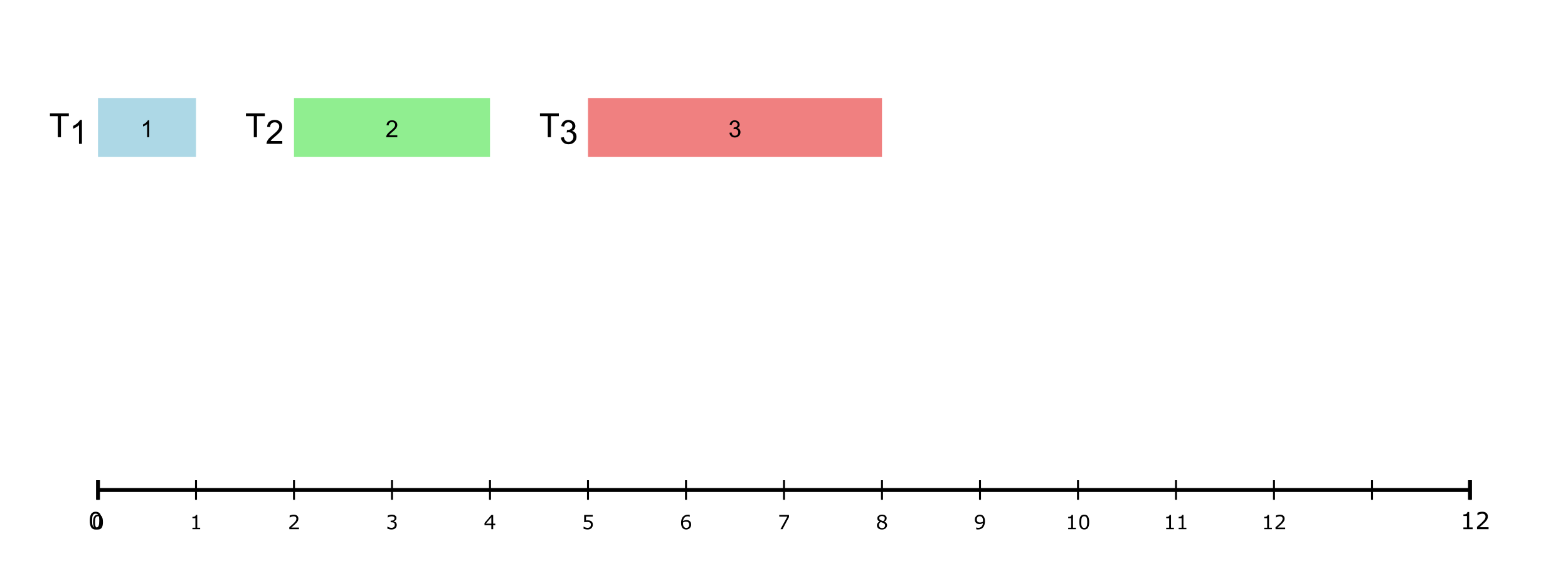

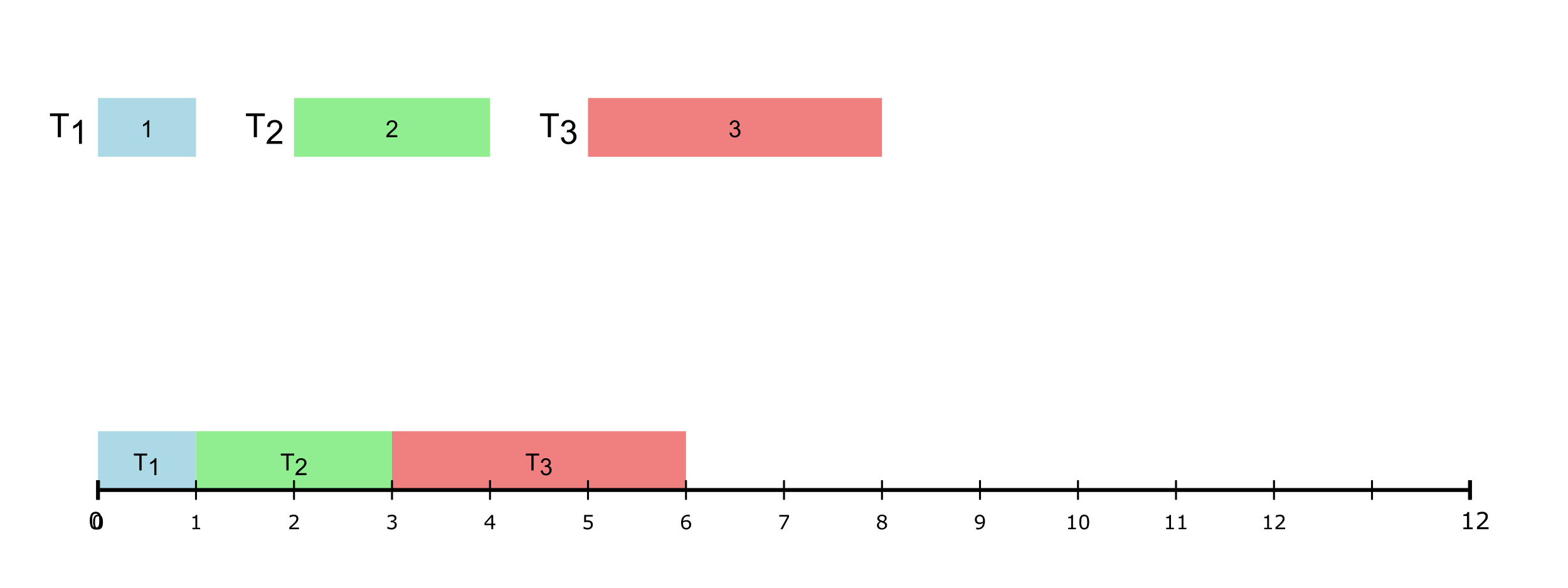

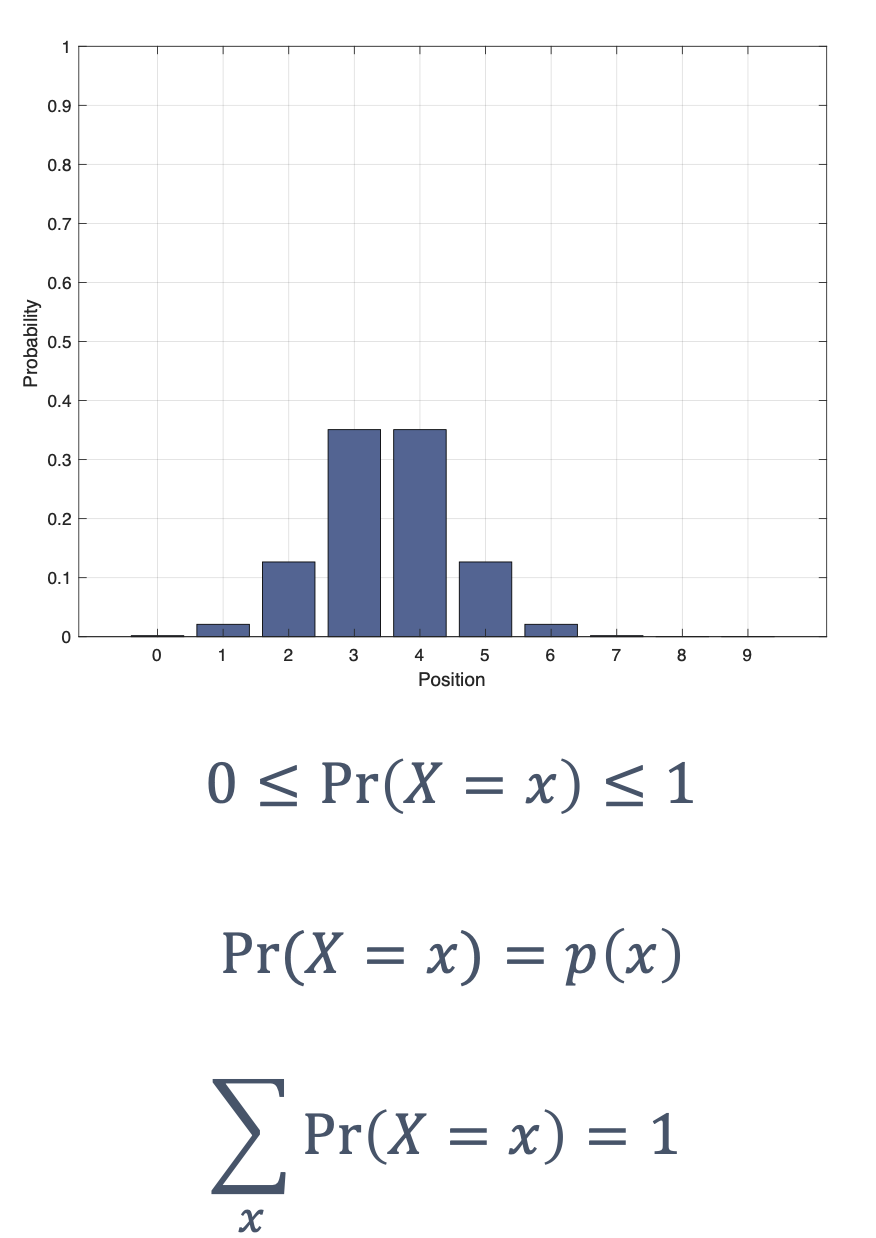

A real-time task, τi is defined using the following parameters: (ϕi, pi, ci, di) where,

| Symbol | Description |

|---|---|

| ϕi | Phase (offset for the first job of a task) |

| pi | Period |

| ci | Worst-case execution time |

| di | Deadline |

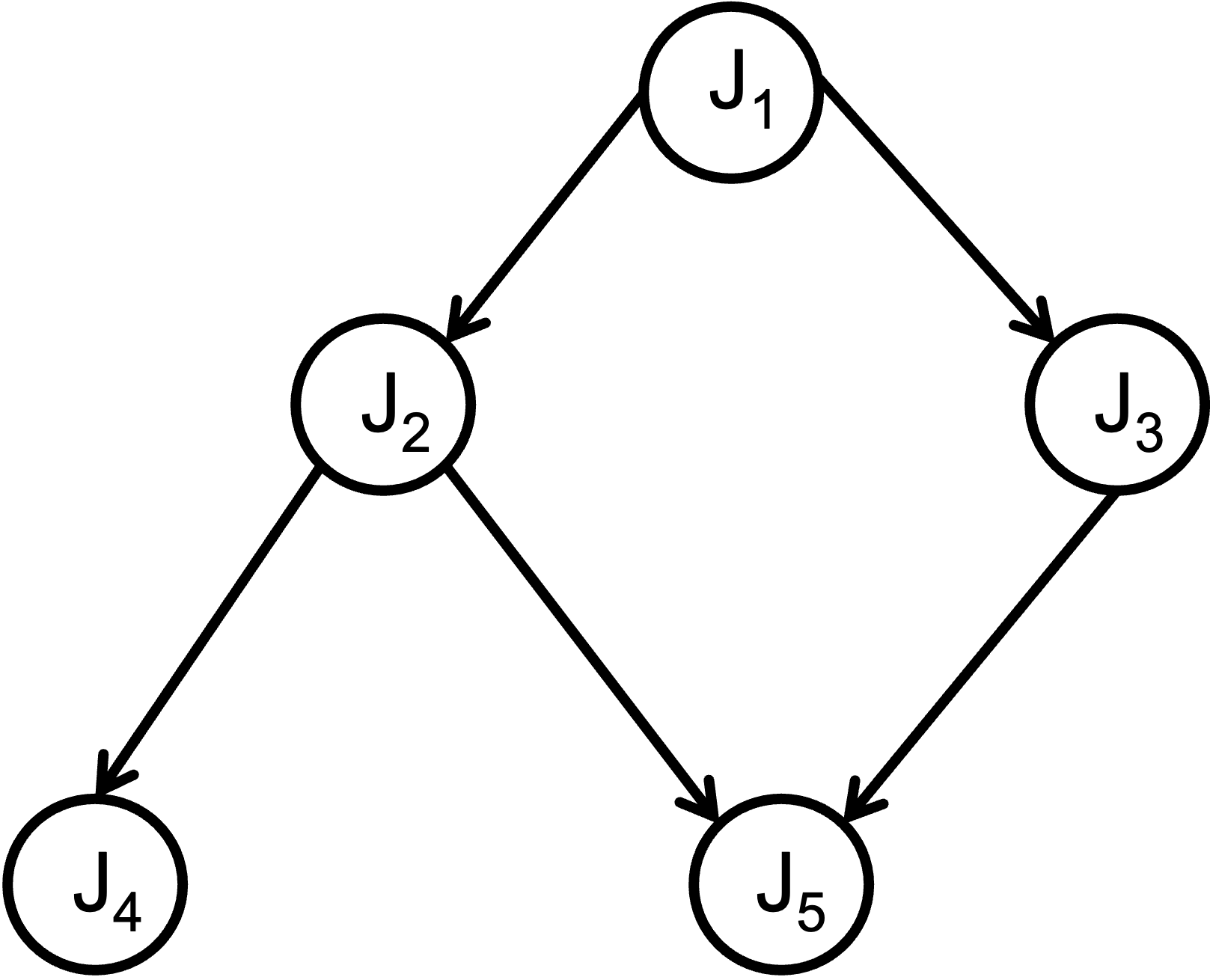

Hence, a real-time tast set (of size ‘n’) is collection of such tasks, i.e., τ = τ1, τ2, ...τn. Given a real-time task set, the first step is to check if the task set is schedulable, i.e., check whether all jobs of a task will meet their deadlines (a job is an instance of a task). For this purpose, multiple schedulability tests have been developed, each depending on the scheduling algorithm being used.

- remember that task is a set of parameters.

- We “release” multiple “jobs” of each task, each with its own deadline

- if all jobs of all tasks meet their deadlines, then the system remains safe.

A thread, then, is an implementation of task/job – depending on the actual OS, it could be either, or both.

At a high level, here is a comparison between tasks, jobs and threads (note: these details may vary depending on the specific RTOS):

| aspect | task | job | thread |

|---|---|---|---|

| definition | a task is a unit of work that represents a program or function executing in the RTOS | a job is a specific instance or execution of a task, often tied to a particular event or trigger | a thread is the smallest unit of execution within a task, sharing the task’s resources |

| granularity | coarse-grained; represents a complete function or program | fine-grained; represents a single execution of a task | fine-grained; represents a single flow of execution within a task |

| resource ownership | owns its resources (e.g., stack, memory, state) | does not own resources; relies on the task’s resources | shares resources (e.g., memory, address space) with other threads in the same task |

| scheduling | scheduled by the RTOS kernel based on priority or scheduling algorithm | not directly scheduled; executed as part of a task’s execution | scheduled by the RTOS kernel, often within the context of a task |

| concurrency | tasks run concurrently, managed by the RTOS scheduler | jobs are sequential within a task but may overlap across tasks | threads run concurrently, even within the same task |

| state management | maintains its own state (e.g., ready, running, blocked) | state is transient and tied to the task’s execution | maintains its own state but shares the task’s overall context |

| isolation | high isolation; tasks do not share memory or resources by default ++ | no isolation; jobs are part of a task’s execution | low isolation; threads share memory and resources within a task |

| overhead | higher overhead due to separate stacks and contexts | minimal overhead, as it relies on the task’s resources | moderate overhead, as threads share resources but require context switching |

| use case | used to model independent functions or processes (e.g., control loops) | used to represent a single execution of a task (e.g., processing a sensor reading) | used to parallelize work within a task (e.g., handling multiple i/o operations) |

| example | a task for controlling a motor | a job for processing a specific motor command | a thread for reading sensor data while another thread logs the data |

(++ sometimes tasks do contend for resources, so we need to mitigate access to them, via locks, semaphores, etc. and then have to deal with thorny issues such as priority inversions)

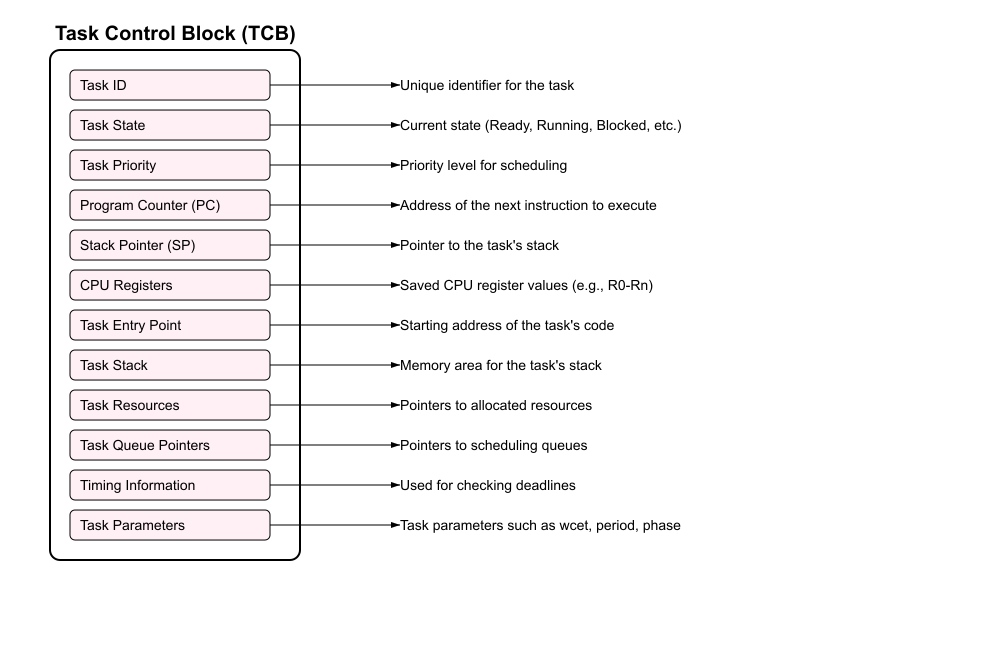

A task is often described using a task control block (TCB):

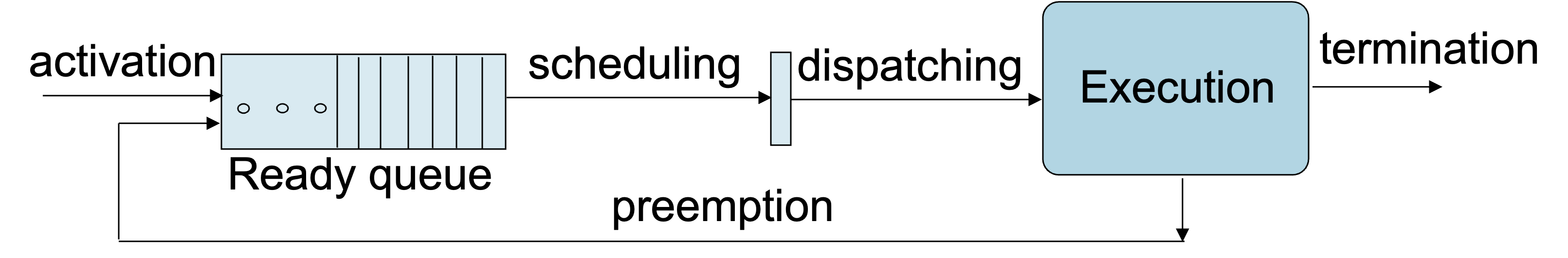

Tasks typically cycle through a set of states, for instance (taken from the FreeRTOS real-time OS):

While the READY, RUNNING and

BLOCKED states are similar to those in

general-purpose operating systems (GPOS), periodic

RTOSes must introduce an additional state:

IDLE or

SUSPENDED:

- periodic task enters this state when it (rather one ‘job’) completes its execution → has to wait for the beginning of the next period

- to be awakened by the timer (i.e., to launch

the next instance/job), the task must notify the end of its

cycle by executing a specific system call,

end cycle→ puts the job in the IDLE state and assigns the processor to another ready job - at the right time, each periodic task in IDLE state → awakened by kernel and inserted in the ready queue

This operation is carried out by a routine activated by a timer → verifies, at each tick, whether some task(job) has to be awakened.

TCBs are usually managed in kernel queues (the implementation details may vary depending on the particular RTOS).

Context Switch Overheads:

One of the main issues with multitasking and preepmtion is that of context switch overheads, i.e., the time and resources required to switch from one task to another. For instance, consider this example of two tasks running on an ARM Cortex-M4:

void Task1(void) {

while(1) {

// Task 1 operations

LED_Toggle();

delay_ms(100);

}

}and

void Task2(void) {

while(1) {

// Task 2 operations

ReadSensor();

delay_ms(200);

}

}When switching between Task1 and Task2, an RTOS might need to:

- save

16general-purpose registers - save the program counter and stack pointer

- update the memory protection unit settings

- load the new task’s context (program into memory, registers, cache, etc.)

So, on the ARM Cortex-M4,

| effect | cost |

|---|---|

| basic context switch | 200-400 CPU cycles |

| cache and pipeline effects, total overhead | 1000+ cycles |

frequent switching (e.g., every 1 ms) |

could consume 1-2% of CPU time! |

These costs can add up, especially if the system has,

- many RT tasks and frequent preemption

- high-frequency/short period jobs that execute frequently

- if tasks contend with each other for shared resources

Hence and RTOS must not only be cognizant of such overheads but also actively manage/mitigate them. Some strategies could include:

- better task/schedule design: e.g., group related operations to reduce context switches

void Task_Sensors(void) {

while(1) {

// Handle multiple sensors in one task

ReadTemperature();

ReadPressure();

ReadHumidity();

delay_ms(500);

}

}- priority-based scheduling: e.g., high priority task gets more CPU

void CriticalTask(void) {

// Set high priority

setPriority(HIGH_PRIORITY);

while(1) {

ProcessCriticalData();

delay_ms(50);

}

}- optimizing memory layouts: e.g., align task stacks to cache line boundaries

#define STACK_SIZE 1024

static __attribute__((aligned(32)))

uint8_t task1_stack[STACK_SIZE];Note: these are not comprehensive and other strategies could be followed, for instance avoiding multitasking altogether! All functions could be implemented in a single process that runs a giant, infinite loop known as a cyclic executive. Newer RTOSes shun ths cyclic executive in favor of the multitasking model since the latter provides more flexibility, control and adaptability but many critical systems (especially older, long-running ones) still use the cyclic executive. For instance, nuclear reactors, chemical plants, etc.

In any case, a precise understanding of these overheads is crucial for:

- setting appropriate task priorities

- determining minimum task periods

- calculating worst-case execution times

- meeting real-time deadlines

- optimizing system performance

There is significant (ongoing) work, both in industry as well as academia, on how to get a handle on context switch overheads while still allowing for flexibility and modularity in the development of RTS.

4.1.2 (Inter-Task) Communication and Synchronization

RTOSes use various mechanisms like semaphores, mutexes, message queues and event flags for communication and synchronization between tasks. Here are some examples:

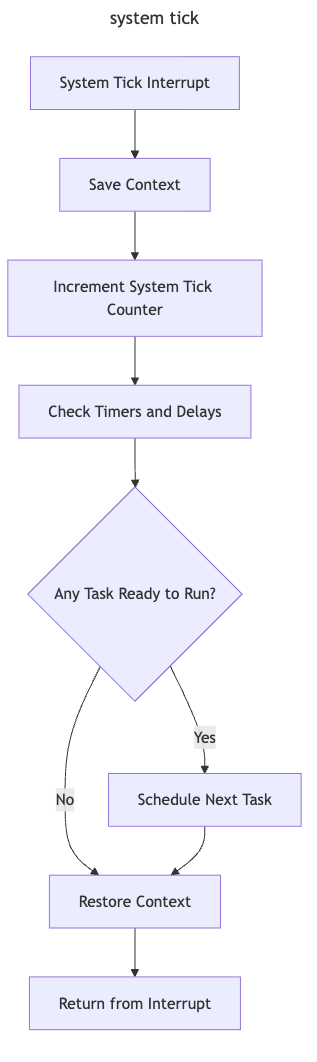

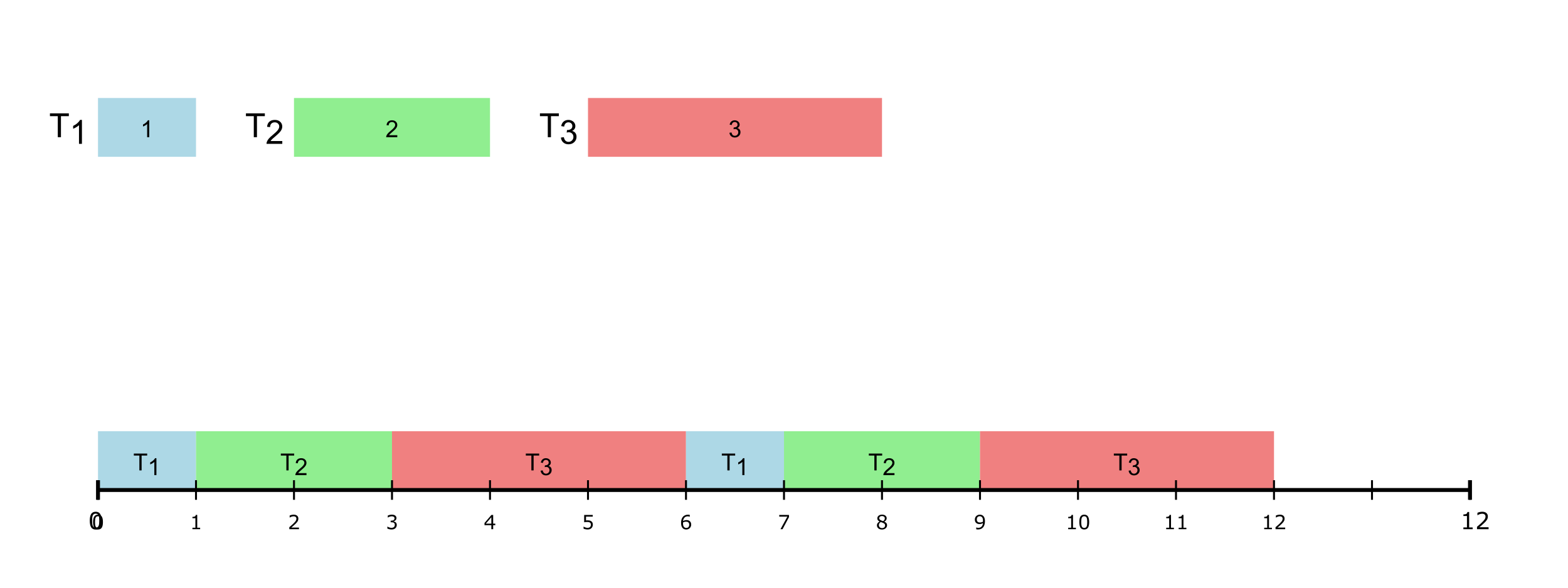

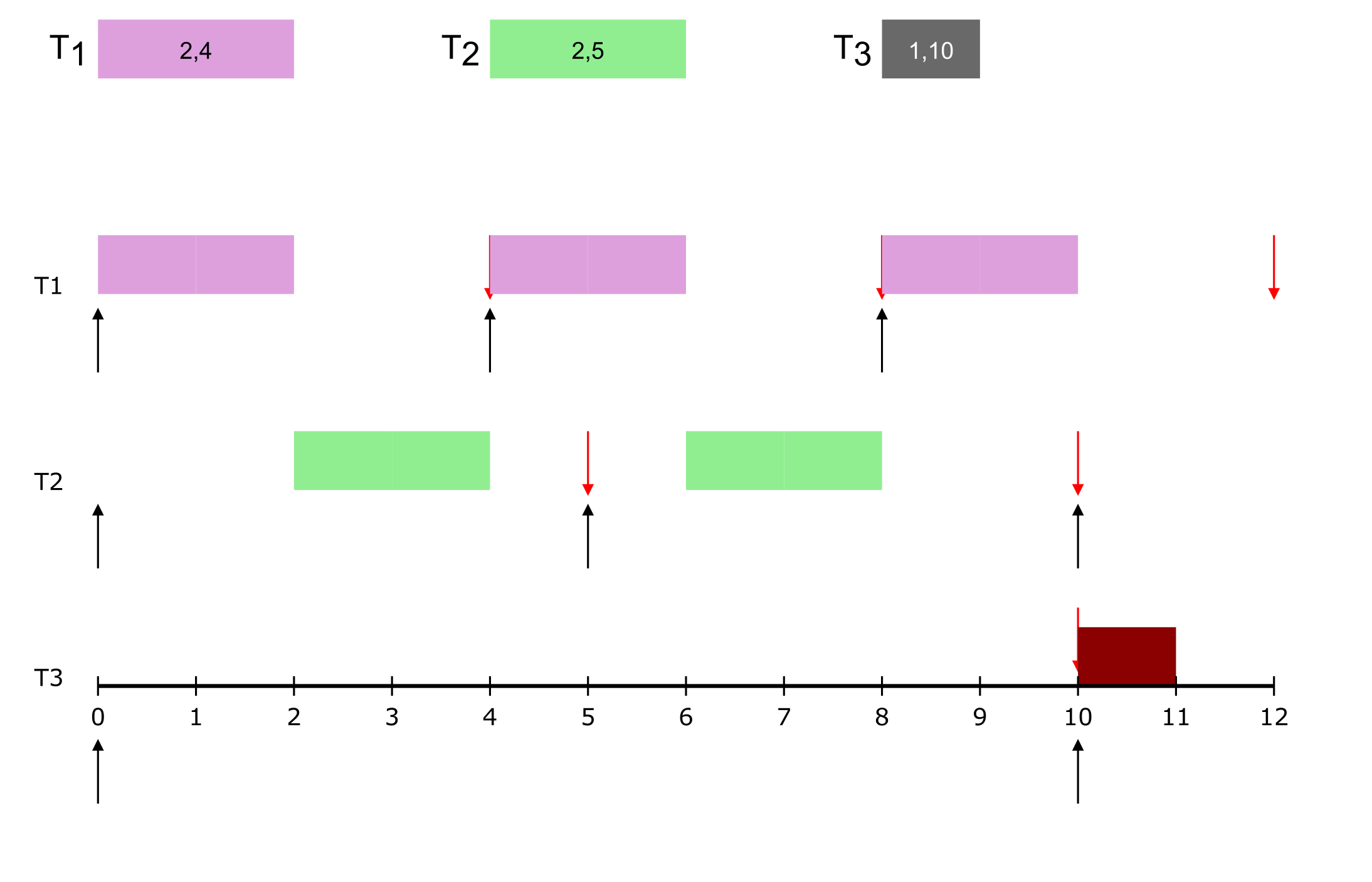

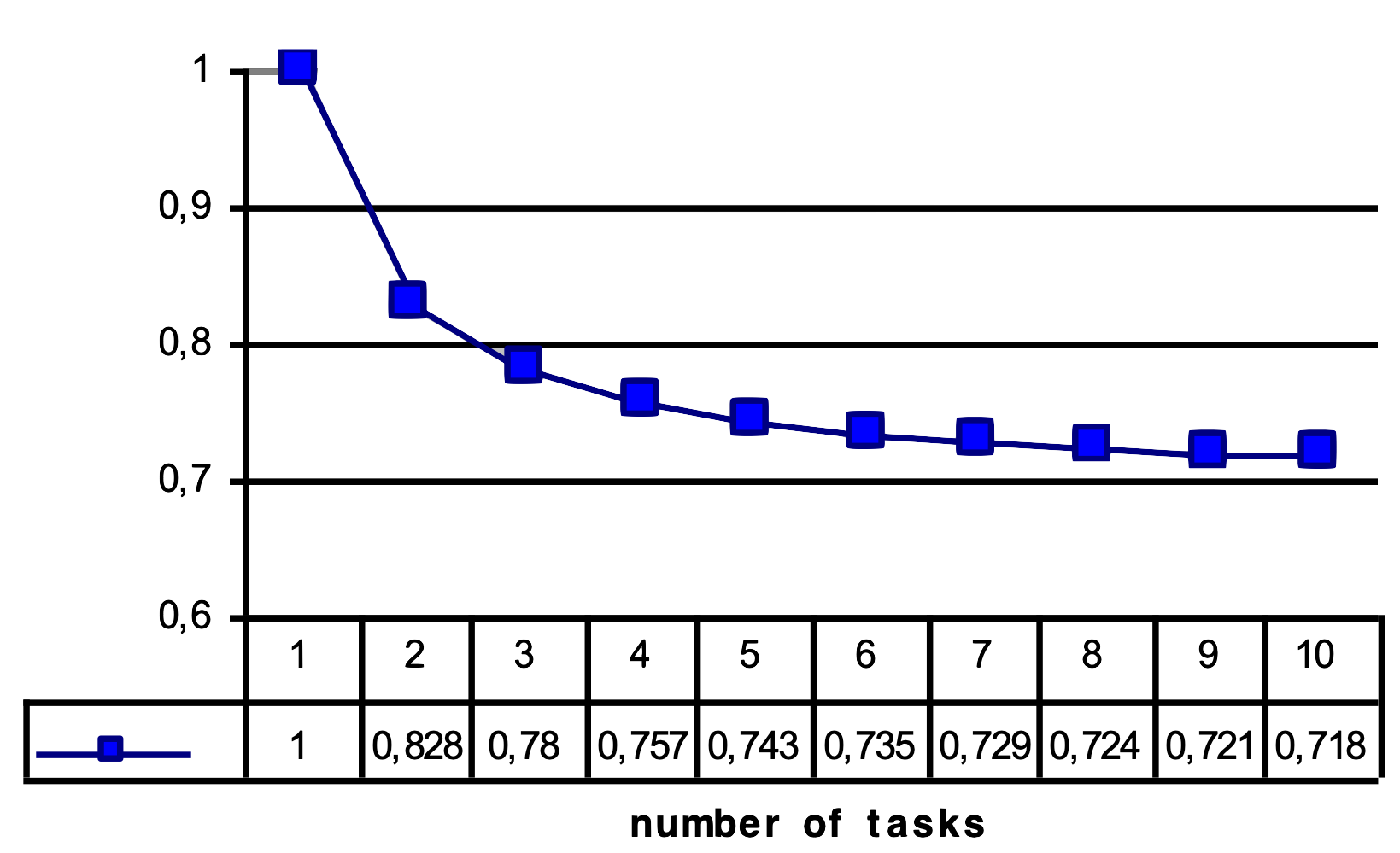

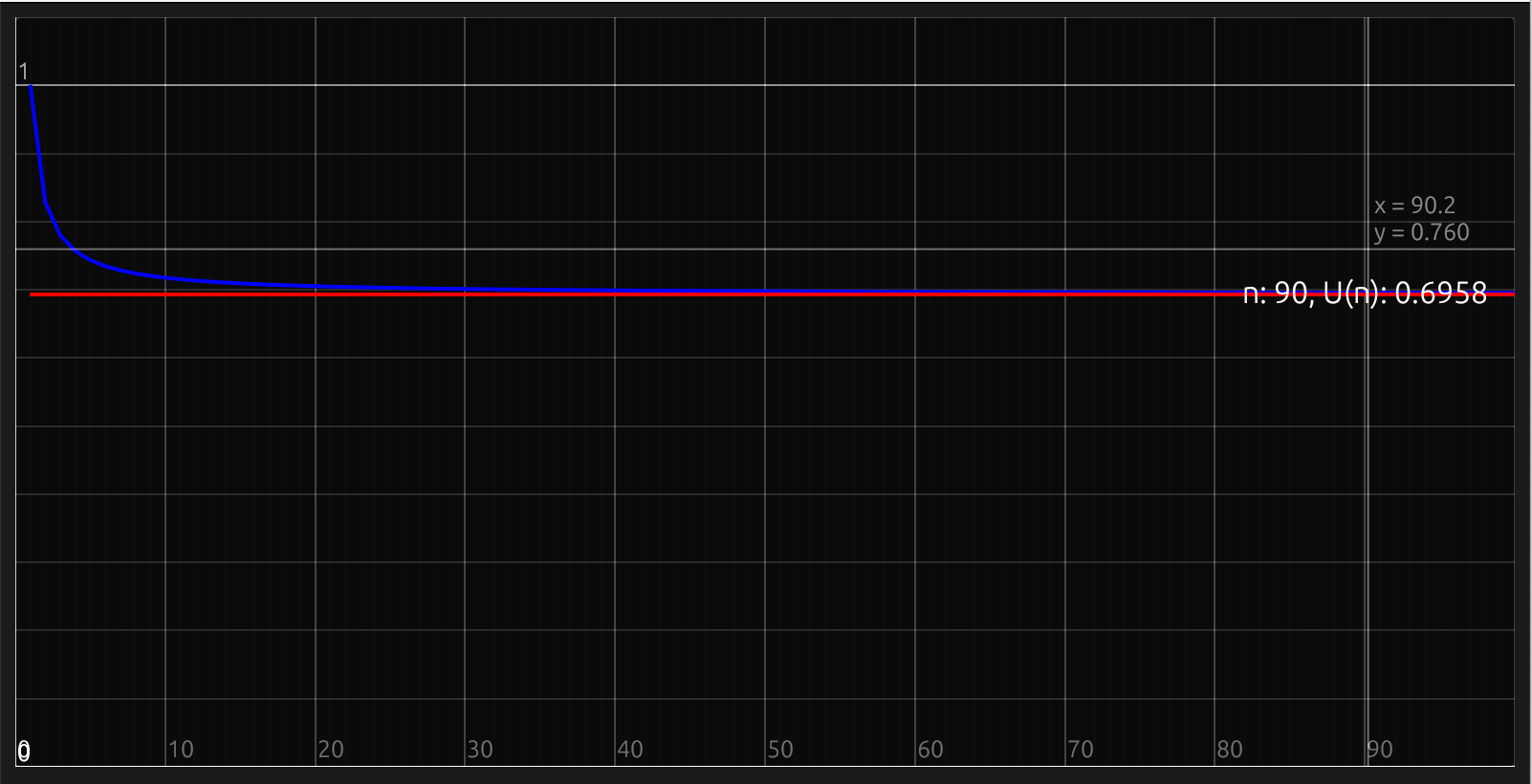

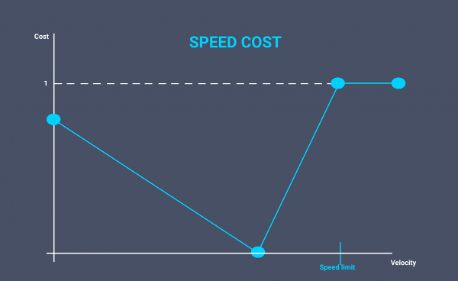

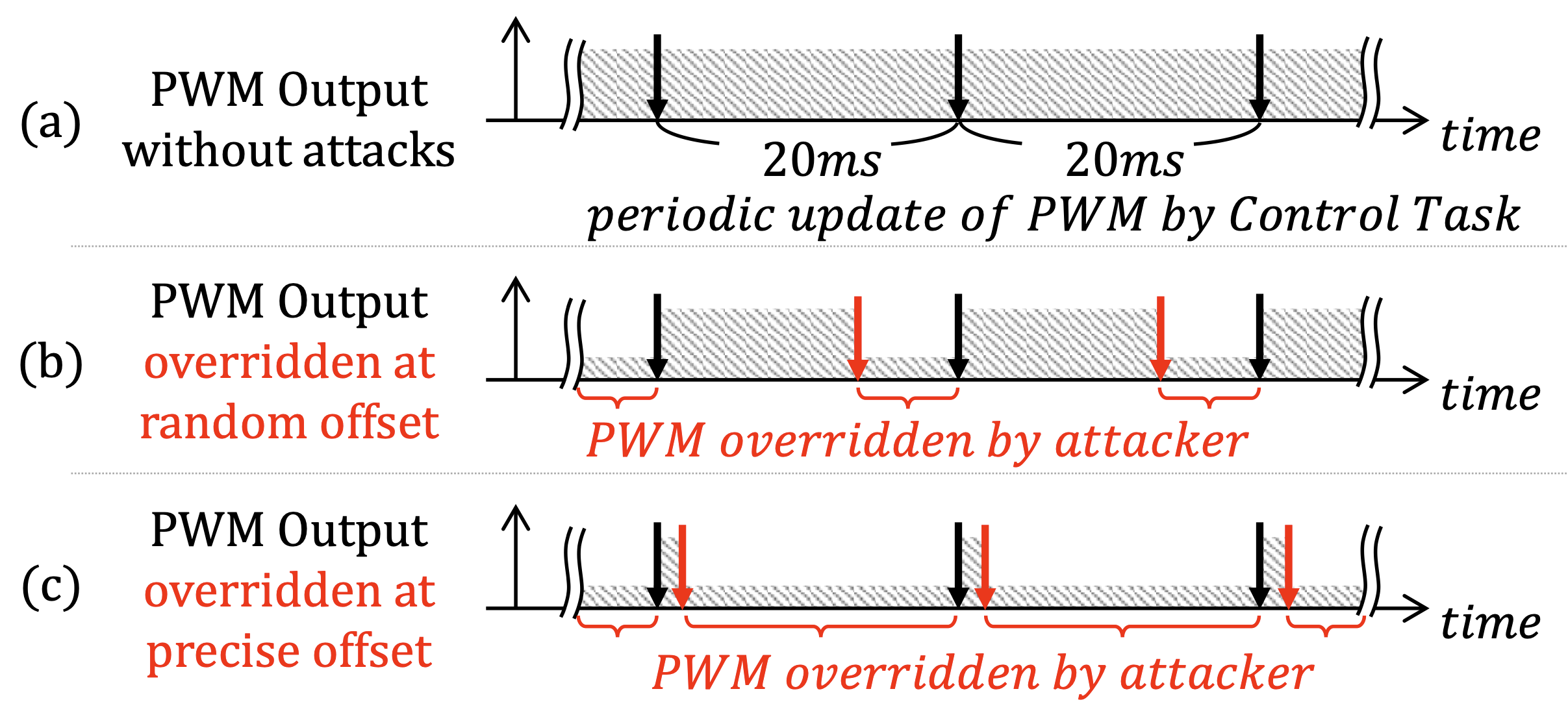

- Semaphores: